Google has grand plans for user experience. In May 2021, it will roll out new page experience ranking signals that incorporate its Core Web Vitals.

But what counts as a good user experience? How ready are you for the incoming big change?


We all use the Internet. We dig through websites we find in search, ditching the ones we don’t like. Many of us have our own websites, so we want users to have a good time and come back again.

Of course, you can’t please everyone. Some users will like your site’s design, others will go somewhere they feel more welcome.

What people universally like is a website that runs like clockwork. And that’s within every site owner’s reach.

The technical side of quality UX consists of three fronts:

- Getting rid of all errors and other undesirable effects;

- Maximizing your site’s loading speed;

- Making your site easy to use on any platform that can access it.

Your web designer can worry about the rest.


I. Tend to Your Technical Issues

Technical errors can have a variety of effects, none of them good.

- Content (and therefore valuable information) not displaying properly;

- Pages not doing their job – for example, a button refusing to work;

- Pages you want to convert not getting picked up in search.

The result is less user activity on your site. Whatever goals you have are directly tied to user activity, so they will suffer, too.

The only way to prevent this is through timely technical audits. Performing them every week is optimal, though nothing stops you from doing it more often. All you need is the time to check up on the reports and fix any errors you find.

1. Indexing Issues

Is there anything wrong with how your site’s pages appear in search?

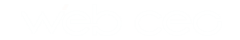

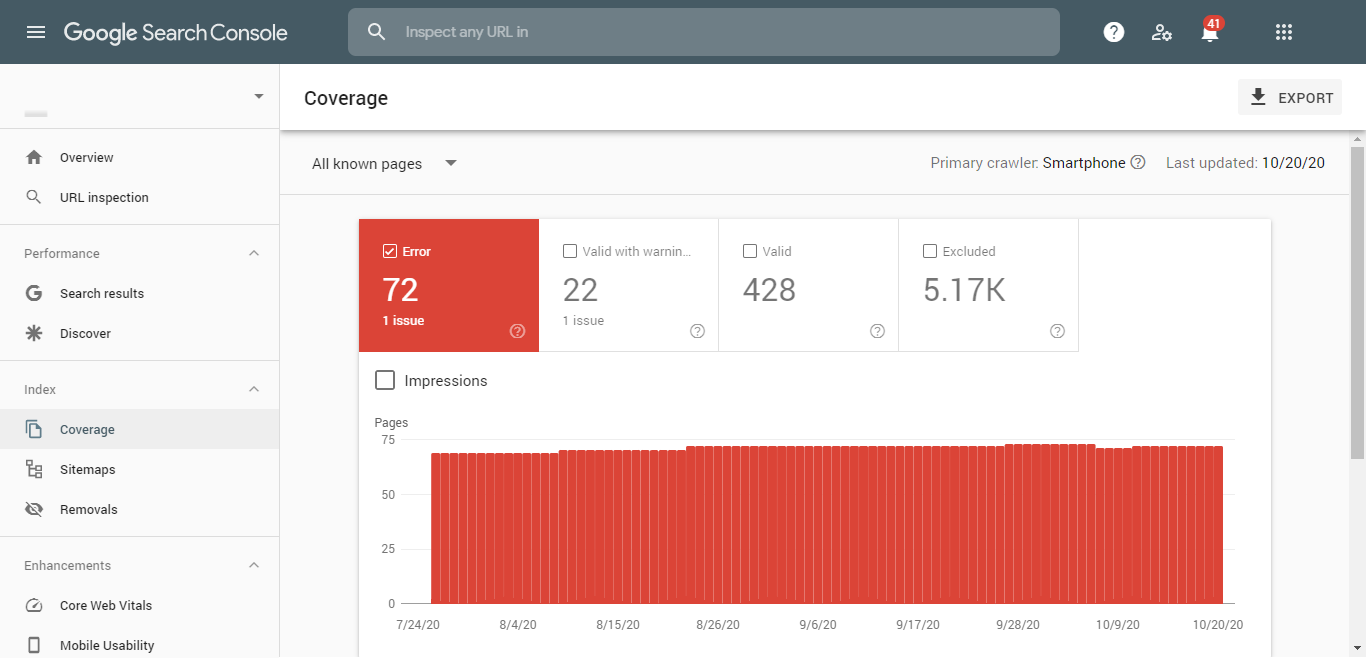

One of the best tools to find that out is Google Search Console. You can find your indexing issues in the Index > Coverage section.

Tick the Error, Valid with warning, and Excluded checkboxes, and the Details section below will list every problem with how your site’s pages are indexed. If any individual pages pique your interest, click their entries in Details to inspect them.

Fix each issue, either by making the affected pages indexable again or by deliberately removing them from the index, then press Validate fix.

Your sitemap tells search engines which pages you want indexed. There are three possible problems with it:

- You don’t have a sitemap;

- It doesn’t work;

- It’s outdated.

And all of them are solved by uploading an up-to-date sitemap to your site:

- Create your sitemap (either manually or by using a tool like XML-Sitemaps);

- Upload it to your site;

- Visit the Index > Sitemaps section in Google Search Console, enter your sitemap’s URL, and press Submit.

If you have any outdated sitemaps in the Submitted sitemaps section, remove them.
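If you’d rather script your sitemap than use a generator, here is a minimal sketch built on Python’s standard xml.etree.ElementTree module. It assumes you already have a list of page URLs with optional last-modified dates; the URLs and the build_sitemap name are hypothetical. The urlset element and its namespace follow the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes & and < for us
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2021-01-15
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical pages; save the result as sitemap.xml and upload it to your site.
xml_text = build_sitemap([
    ("https://mysite.com/", "2021-01-15"),
    ("https://mysite.com/blog/?page=2&sort=new", None),
])
print(xml_text)
```

The declaration is written by hand so the file always states UTF-8, regardless of the machine’s locale.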

2. Robots.txt

This file may simply be missing from your site. In that case, create one, upload it to your site’s root directory (for example, https://mysite.com/robots.txt), and make sure it works properly.

Since the purpose of robots.txt is to tell search engines what they can or can’t crawl on your site, it’s obvious what you can expect if you misuse this file. Open it and look for these problems:

- Search engine bots cannot crawl your site

This error is very easy to cause. A robots.txt file that lets all bots crawl your whole site contains these lines:

User-agent: *
Disallow:

Add a slash after Disallow: and the bots are blocked from your entire site:

User-agent: *
Disallow: /

If any directories are listed after Disallow: (for example, Disallow: /blog/), make sure you really don’t want them crawled. Otherwise, the bots will be unable to crawl the parts of your site you want to appear in search.

- Bots crawl pages & folders that shouldn’t be crawled

This is the opposite of the problem described above: the directories you want blocked are not listed in the file. Add a Disallow: line with the path of each of those directories (for example, Disallow: /admin/) and re-upload the file.

- Typos & syntax errors

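Check every line for misspelled directives, missing colons, and wrong paths. Google ignores lines it can’t parse, so a single typo can silently disable a rule.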

Once you’ve corrected everything, re-upload your robots.txt.
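To sanity-check your rules before re-uploading, you can feed them to Python’s standard-library urllib.robotparser. This is a minimal sketch, assuming a hypothetical site at https://mysite.com and a hypothetical helper named is_allowed. Python’s parser follows the original robots.txt conventions and may not match Google’s handling of wildcards exactly, so treat it as a quick check rather than a substitute for Google’s own tools.

```python
from urllib.robotparser import RobotFileParser

def is_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt rules let user_agent crawl url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return parser.can_fetch(user_agent, url)

ALLOW_ALL = "User-agent: *\nDisallow:\n"            # empty Disallow blocks nothing
BLOCK_ALL = "User-agent: *\nDisallow: /\n"          # "/" matches every path
BLOCK_ADMIN = "User-agent: *\nDisallow: /admin/\n"  # only /admin/ is off-limits

page = "https://mysite.com/blog/post"
print(is_allowed(ALLOW_ALL, page))    # True
print(is_allowed(BLOCK_ALL, page))    # False
print(is_allowed(BLOCK_ADMIN, page))  # True
print(is_allowed(BLOCK_ADMIN, "https://mysite.com/admin/settings"))  # False
```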

3. Duplicate Content

To find issues with duplicate content on your site, you will need tools like Screaming Frog or DeepCrawl.

Here’s what you may find:

- Page titles & meta descriptions

Different pages with the same titles and meta descriptions will confuse users. Change all your duplicates to something unique.

- Content copied from your own pages or other sites

Same as above, it’s best to make your content unique. If that isn’t an option, add a rel="canonical" link pointing to the original’s URL in the <head> section of the page, for example: <link rel="canonical" href="https://mysite.com/original-page">

- Variations of the same URLs in the index

Sometimes Google will index the same page multiple times. This can happen when query parameters are appended to its URL or when it has both http and https versions. Get rid of any unwanted copies like so:

- Google Search Console > Legacy tools and reports > URL parameters: This is where you teach Google to ignore parameters. For instance, https://mysite.com?utm_source=google will not be considered different from https://mysite.com.

- Set up 301 redirects from the duplicate URLs to canonical ones.
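You can also spot these URL variants in a list of crawled URLs before you configure anything. This sketch assumes a hypothetical set of tracking parameters to ignore and treats http/https and upper/lowercase hostnames as the same page. It only groups duplicates; the actual fix (URL parameter settings or 301 redirects) is still up to you.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of parameters that don't change page content; adjust for your site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content"}

def canonicalize(url: str) -> str:
    """Map a URL variant to one form: https, lowercase host, no tracking params."""
    parts = urlsplit(url)
    query = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
             if k not in IGNORED_PARAMS]
    path = parts.path or "/"
    return urlunsplit(("https", parts.netloc.lower(), path, urlencode(query), ""))

def find_duplicates(urls):
    """Group URLs by canonical form and keep only groups with more than one variant."""
    groups = defaultdict(list)
    for url in urls:
        groups[canonicalize(url)].append(url)
    return {canon: variants for canon, variants in groups.items() if len(variants) > 1}

crawled = ["http://mysite.com", "https://mysite.com?utm_source=google", "https://mysite.com/about"]
for canon, variants in find_duplicates(crawled).items():
    print(canon, "<-", variants)
```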

4. Content Displaying Issues

There may also be issues with content displayed on your site, such as:

- Broken images;

- Broken anchor text;

- Broken JavaScript files;

- Broken links (and redirects);

- Broken or missing H1-H6 tags.

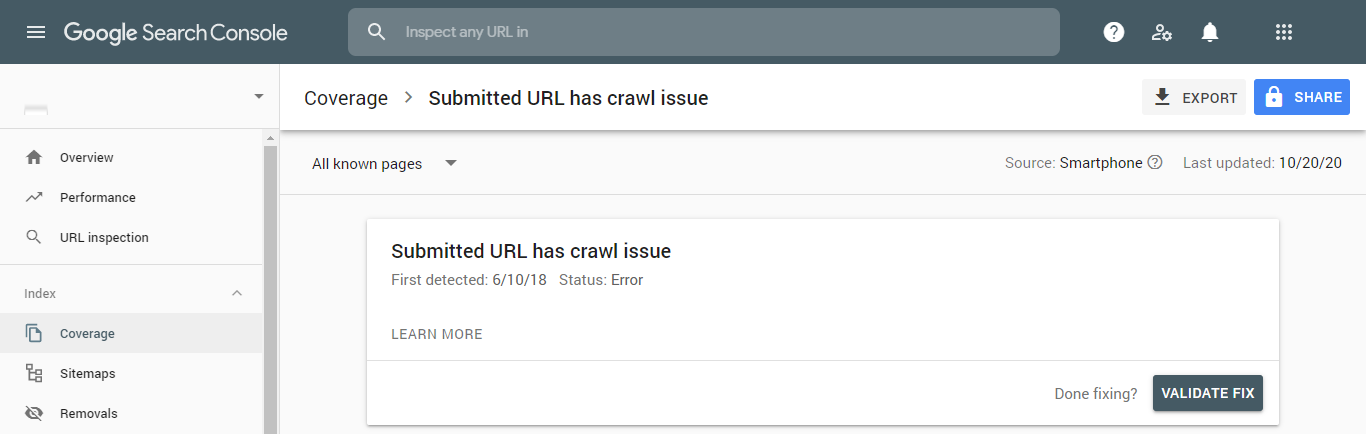

These problems can be found by SEO tools such as WebCEO. Simply create a project for your site and scan it in the Technical Audit tool.

Fix these errors by editing the HTML code on their respective pages.
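Between full audits, a few of these problems can be caught offline with Python’s built-in html.parser. This sketch uses hypothetical names (PageAuditor, audit_html) and only flags images without a src, links without an href, and pages with no H1. It does not request any URLs, so it won’t catch links that point to missing pages.

```python
from html.parser import HTMLParser

class PageAuditor(HTMLParser):
    """Collects headings and a few obviously broken elements while parsing."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self.issues = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # attribute values are None when given without a value
        if tag in {"h1", "h2", "h3", "h4", "h5", "h6"}:
            self.headings.append(tag)
        elif tag == "img" and not attrs.get("src"):
            self.issues.append("img without src")
        elif tag == "a" and not attrs.get("href"):
            self.issues.append("link without href")

def audit_html(html: str) -> list:
    """Return a list of human-readable issues found in one page's HTML."""
    auditor = PageAuditor()
    auditor.feed(html)
    auditor.close()
    issues = list(auditor.issues)
    if "h1" not in auditor.headings:
        issues.append("missing h1")
    return issues

print(audit_html('<h2>Title</h2><img alt="Logo"><a>Click here</a>'))
```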

5. Structured Data Errors

Adding structured data to your pages can be a lot of work. Therefore, it’s a real pain when errors ruin not just your efforts, but also what your site looks like in search.

Test the pages you’ve marked up for possible errors with Google’s free Rich Results Test tool.
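Structured data is commonly added as a JSON-LD script in the page’s HTML. One frequent error, a JSON syntax mistake such as a trailing comma, can be caught locally before you run the Rich Results Test. This minimal sketch (hypothetical names JsonLdCollector and json_ld_errors) only checks that each JSON-LD block parses; it does not check schema.org properties or rich result eligibility, which is what Google’s tool is for.

```python
import json
from html.parser import HTMLParser

class JsonLdCollector(HTMLParser):
    """Collects the text of every <script type="application/ld+json"> element."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._in_json_ld = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_json_ld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_json_ld = False

    def handle_data(self, data):
        if self._in_json_ld:
            self.blocks[-1] += data  # script text may arrive in several chunks

def json_ld_errors(html: str) -> list:
    """Return a message for each JSON-LD block that isn't valid JSON."""
    collector = JsonLdCollector()
    collector.feed(html)
    collector.close()
    errors = []
    for index, block in enumerate(collector.blocks):
        try:
            json.loads(block)
        except json.JSONDecodeError as exc:
            errors.append(f"block {index}: {exc.msg} (line {exc.lineno})")
    return errors
```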

6. HTML, CSS & Other Code Errors

Errors in the code behind your webpages are often easy to spot because content stops displaying properly. But that isn’t always the case: some errors have no visible effect at all.

Run your pages through free tools such as the W3C Markup Validation Service (for HTML) and the W3C CSS Validation Service (for CSS), check your browser’s developer console for JavaScript errors, and then correct the offending pages.

II. Minimize Your Page Load Time

Page loading speed is one of the most noticeable aspects of user experience, in addition to being a Google ranking factor. At its core, it comes down to how quickly a browser can fetch a page’s files from your server and render them.

How can you make it easier?

1. Minimize the Number of Assets on the Page

Fewer assets mean fewer requests and less time spent processing them. It may be fun to pretty up your site, but all the extra fanfare could work against you. For instance, be picky about the site theme you install.

2. Merge Assets Where Possible

A substep of the above point. If you have two images placed right after one another, splice them into a single image. Likewise, multiple .css files can be combined into a single file, so the browser makes one request instead of several.
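Combining stylesheets can be as simple as concatenating them in the order your pages load them, since later rules override earlier ones. A minimal sketch, assuming hypothetical function names and file names; a build tool or your CMS may already do this for you.

```python
from pathlib import Path

def bundle_css(named_sheets):
    """Join (name, css) pairs in load order; each part is labelled with a comment."""
    return "\n".join(f"/* {name} */\n{css.rstrip()}\n" for name, css in named_sheets)

def bundle_css_files(paths, output):
    """Read CSS files in load order and write them out as one bundle file."""
    sheets = [(Path(p).name, Path(p).read_text(encoding="utf-8")) for p in paths]
    Path(output).write_text(bundle_css(sheets), encoding="utf-8")

# Usage with hypothetical files:
# bundle_css_files(["reset.css", "layout.css", "theme.css"], "bundle.css")
```

After bundling, point your pages at the single bundle.css instead of the separate files.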

3. Optimize Your Images

Pictures in high definition and quality are a must on any site. However, they must not take up more space than needed.

Trim those kilobytes without sacrificing the quality:

- Pick the file format which yields the smallest size;

- Resize images to make them no larger than needed;

- Compress them with software or online services like TinyPNG.

4. Optimize Your Page Code

Code optimization is an invaluable skill, and not only in programming. If you can simplify the code making up your site’s pages, do it.
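One mechanical way to simplify page code is minification: stripping comments and unneeded whitespace. The sketch below is a deliberately naive CSS minifier for illustration. It assumes your CSS has no comment-like sequences or meaningful whitespace inside strings, and it leaves spaces before colons alone so selectors like "a :hover" keep their meaning. A dedicated minifier is the safer choice in production.

```python
import re

def minify_css(css: str) -> str:
    """Naively minify CSS: drop comments and collapse unneeded whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.S)  # remove /* comments */
    css = re.sub(r"\s+", " ", css)                   # collapse runs of whitespace
    css = re.sub(r"\s*([{};,])\s*", r"\1", css)      # trim around braces, ; and ,
    css = re.sub(r":\s+", ":", css)                  # "color: red" -> "color:red"
    css = css.replace(";}", "}")                     # last semicolon in a block is optional
    return css.strip()

source = "body {\n  color: red;\n  margin: 0;\n}\n/* note */\na, b { padding: 1px 2px; }"
print(minify_css(source))
```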

5. Put JS Scripts at the End of Your Page Code

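Let everything else load first. A script placed in the <head> makes the browser stop, download it, and run it before rendering the rest of the page, so a slow or faulty script can hold up the entire page.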

6. Invest in Good Hosting

Choose the best server or CDN you can afford.

7. Use Compression Software

For example, many sites serve their files with Gzip compression, which often shrinks text files such as HTML, CSS, and JavaScript by more than half.
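You can see the effect for yourself with Python’s gzip module. The sample markup below is hypothetical and very repetitive, so it compresses unusually well; real pages will save less. On a live site, compression is handled by your web server or CDN rather than by a script like this.

```python
import gzip

def gzip_savings(data: bytes) -> float:
    """Return the fraction of bytes saved by gzip-compressing data."""
    compressed = gzip.compress(data)
    return 1 - len(compressed) / len(data)

# Hypothetical, highly repetitive HTML fragment.
sample = ('<li class="product"><a href="/item">Item</a></li>\n' * 200).encode("utf-8")
print(f"Original: {len(sample)} bytes, saved {gzip_savings(sample):.0%}")
```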

8. Use Lazy Loading

When it’s implemented on a site, it postpones loading elements such as images until the user scrolls near them.
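Modern browsers support lazy loading natively through the loading="lazy" attribute on img and iframe elements. If your templates don’t add it, a post-processing step like this hypothetical add_lazy_loading function could. It uses a simple regular expression rather than a full HTML parser and skips images that already set a loading value. Images that are visible without scrolling, especially the largest one, are usually better left to load immediately.

```python
import re

# Matches "<img" only when no loading= attribute appears before the tag closes.
_IMG_WITHOUT_LOADING = re.compile(r"<img\b(?![^>]*\bloading=)", re.IGNORECASE)

def add_lazy_loading(html: str) -> str:
    """Add loading="lazy" to every <img> tag that doesn't already specify loading."""
    return _IMG_WITHOUT_LOADING.sub('<img loading="lazy"', html)

print(add_lazy_loading('<img src="gallery-1.png" alt="Gallery photo">'))
```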

9. Have Fewer Redirects

Every redirect adds an extra request before the user sees the page, so keep them to a minimum. Fixing your faulty redirects will help, too.
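Redirect chains (A to B to C) are a common source of extra hops. Given your redirect rules as a simple old-to-new mapping, this sketch finds where each URL finally lands and how many hops it takes, so you can point every old URL straight at its final destination. The mapping format and the resolve_redirects name are hypothetical.

```python
def resolve_redirects(redirects: dict, max_hops: int = 10) -> dict:
    """Map each redirected URL to (final URL, number of hops)."""
    resolved = {}
    for start in redirects:
        current, hops, seen = start, 0, {start}
        while current in redirects:
            current = redirects[current]
            hops += 1
            if current in seen or hops > max_hops:
                raise ValueError(f"redirect loop or overly long chain starting at {start}")
            seen.add(current)
        resolved[start] = (current, hops)
    return resolved

rules = {"/old-page": "/new-page", "/new-page": "/newest-page"}
for old, (final, hops) in resolve_redirects(rules).items():
    if hops > 1:
        print(f"{old} takes {hops} hops; redirect it straight to {final}")
```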

There are many tools for measuring your site’s loading speed:

- Built-in browser tools, such as Lighthouse in Chrome DevTools. Note that their results may be affected by your browser’s active extensions.

- Google PageSpeed Insights.

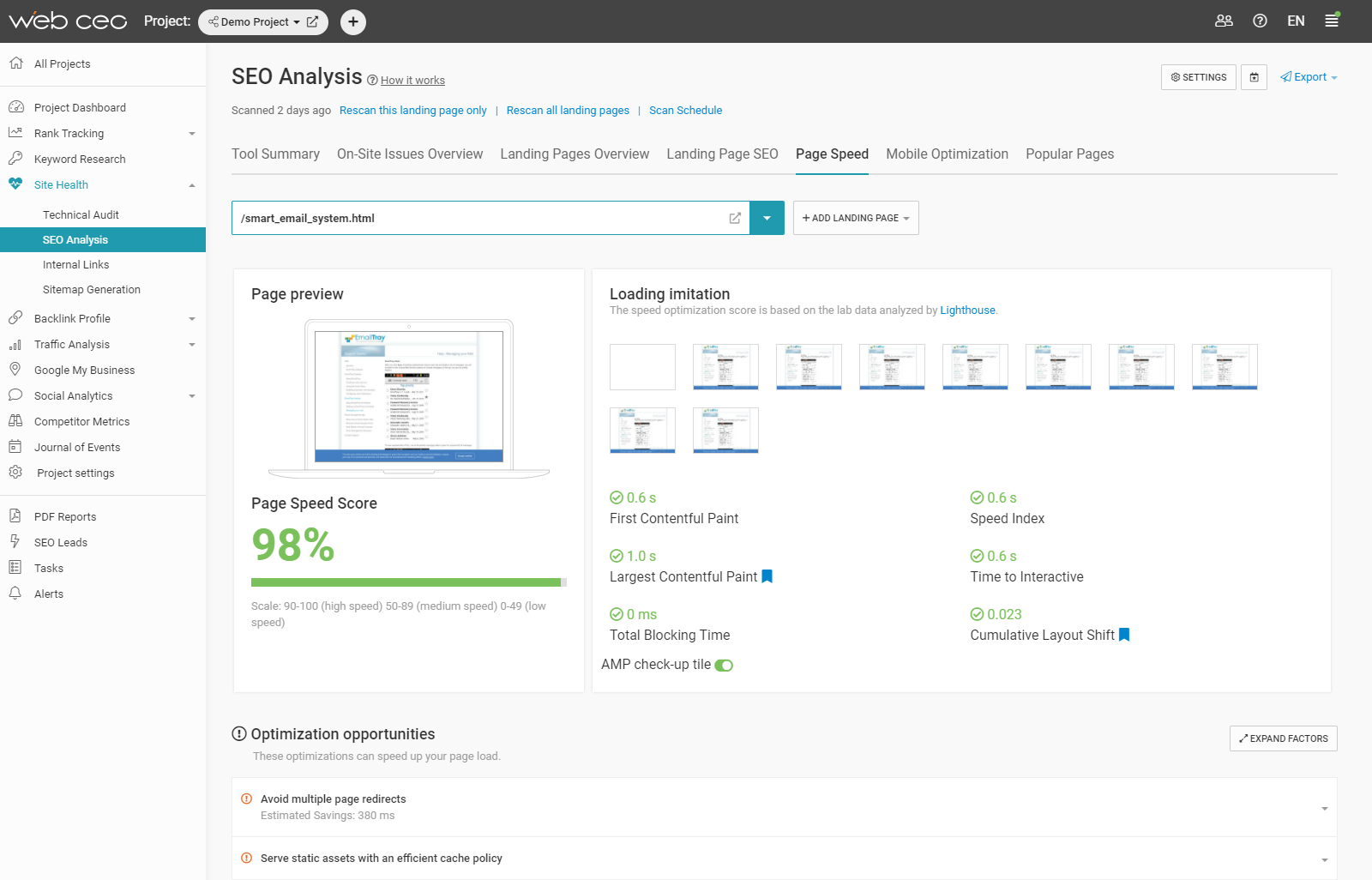

- Paid SEO tools. They will measure your loading speed and offer tips for increasing it. For example, here’s what you’ll see in WebCEO’s Page Speed tool:

III. Optimize Your Site for Mobile

Let’s not forget the difference in user experience between platforms. PCs and smartphones are the main sources of online traffic, splitting it roughly in half.

A site that works well on both of these giants will usually work well on other types of devices, too, so once your desktop site is in good shape, making it mobile-friendly covers nearly every goal.

The main points of mobile optimization are:

- Page loading time;

- Responsive design;

- Optimized images;

- No content-obstructing popups;

- No unsupported content.

We have already described how to maximize your site’s loading speed. If you have done that, you have completed most of your technical SEO for mobile devices.

The same goes for image optimization – except that you also need to make them easily discernible on small mobile screens without zooming in.

Avoiding unsupported content can be tricky, as you need to know which types of content are unsupported. For example, Flash has been the worst offender for years. Fortunately, Adobe set December 31, 2020 as its end-of-support date. Keep an eye on such news in the future so you know when to scrap or replace something on your site.

As for responsive design, it automatically rearranges site elements to fit into a screen of any size while keeping them easy to view. Like this:

(Image source: Gratzer Graphics)

If you build your site with a framework like Bootstrap, its layouts are responsive out of the box. Or you can do it yourself:

- Add this code in the <head> section on your site:

<meta name="viewport" content="width=device-width, initial-scale=1.0">

- Add the max-width property to your images and videos in their HTML code. Example:

<img src="photo.png" alt="Product" style="max-width:100%; height:auto;">

- Set the font sizes on your site in vw units, which scale with the viewport width (1vw is 1% of it):

<p style="font-size:12vw">Lorem ipsum dolor sit amet</p>
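Before testing on real devices, you can quickly confirm that your pages actually include the viewport tag from the first step. This is a minimal sketch using Python’s built-in html.parser; the ViewportFinder and has_responsive_viewport names are hypothetical, and passing this check alone doesn’t make a page responsive.

```python
from html.parser import HTMLParser

class ViewportFinder(HTMLParser):
    """Remembers the content of the page's <meta name="viewport"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "viewport":
            self.viewport = attrs.get("content") or ""

def has_responsive_viewport(html: str) -> bool:
    """True if the page declares a viewport whose width follows the device width."""
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    if finder.viewport is None:
        return False
    return "width=device-width" in finder.viewport.replace(" ", "").lower()
```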

After making these additions to your site, test it on actual devices or with a service like Am I Responsive?

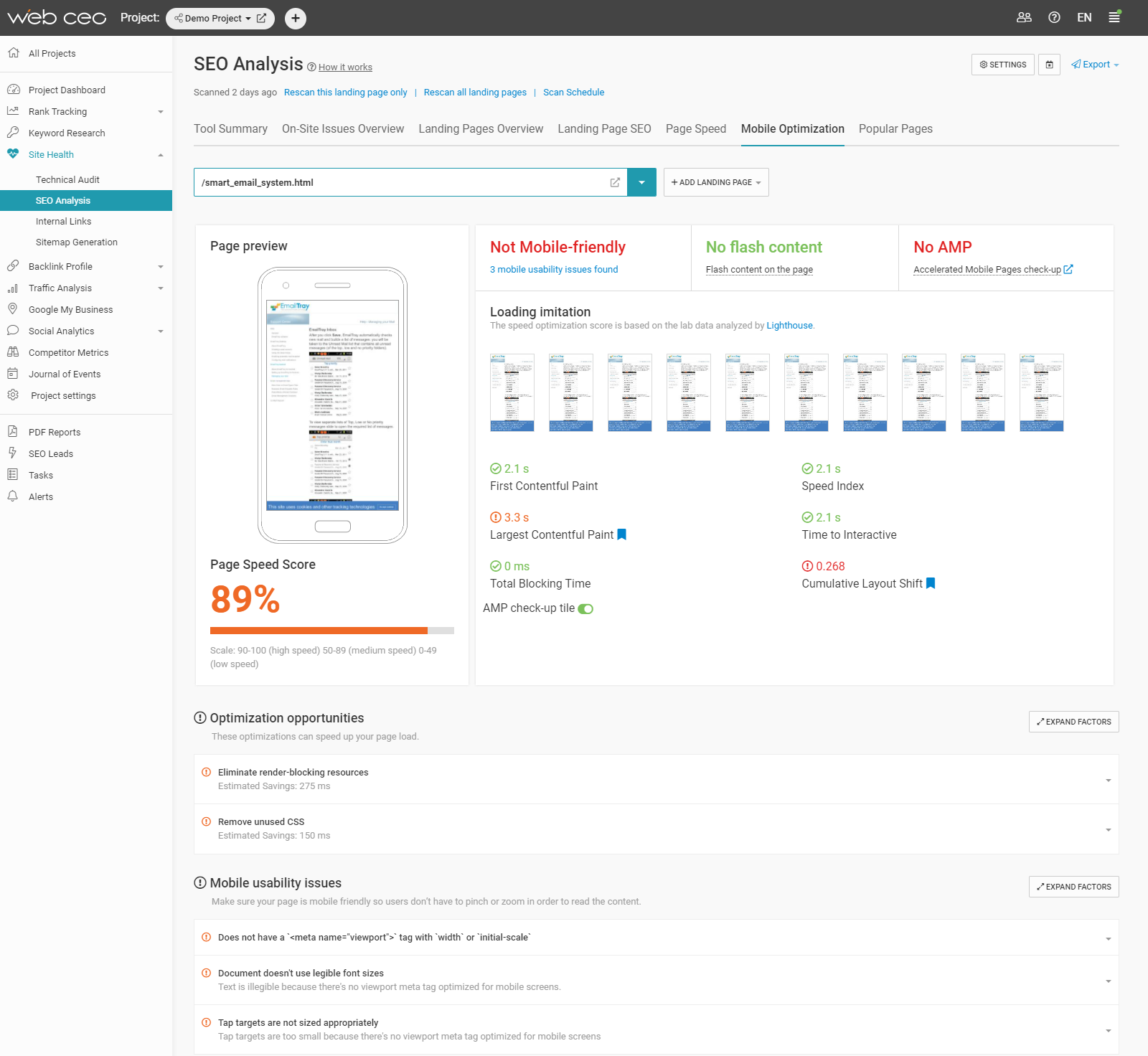

To receive a detailed analysis of your mobile UX with suggestions for solving problems, scan your site with a tool like WebCEO’s Mobile Optimization.

Afterword

Making websites user-friendly requires a deep understanding of your audience’s needs.

Then you make your site both well-functioning and simple, two traits that are not easily reconciled.

Lastly, you use the necessary tools to keep your site running like a well-oiled machine.

But all this effort pays off when your users turn into regulars.

Let’s make your job easier with the tools to improve your user experience on two fronts: technical and SEO. Scan your site and see what you can do right now to help your users.



The opinions expressed in this article are the sponsor's own.