Editor Note: Searchmetrics is our partner for our SEJ Summit conference series this year, but we were not paid or perked in any way for this post.

In a world where “content is king,” traditional keyword research is not enough, and SEOs are increasingly responsible for developing a solid content strategy. Google’s Hummingbird update in 2013 made this shift critical.

Searchmetrics’ annual Search Ranking Factors study for 2015 launched early this month, and it does much to illuminate the importance of semantically related, comprehensive website content. Its correlations suggest that the readability and length of web pages are critical to the new, holistic way of writing and presenting content.

[pullquote]In Google-speak, we need “good content,” a vague and subjective yardstick. What is “good content”?[/pullquote]

SEOs and writers need to be in step with what Google’s machine learning deems “good.” Google’s algorithms use content metrics that we as content creators should understand. This piece looks at several tools that help do just that.

Tools that score the quality of web page content help both SEOs and content creators discover content beyond their own websites that scores high in Google Search (and engages users). There are many tools for evaluating your own website, but my mission here is to help you find other high-quality web pages in your niche, scored objectively by metrics, so you can learn from their success.

Marcus Tober’s presentation at SEJ Summit Chicago identified two sites that represent the old keyword-research-only method versus the new semantic relevance approach to content creation. If you know of other websites that contrast old and new content creation strategies, keywords versus holistic content, they are fantastic for understanding Google’s new ranking, which no longer rests on keywords alone.

Tober showed how eHow dominated keywords related to “how to boil eggs” before Google introduced the Hummingbird algorithm, and how the entire site tanked in Google’s search engine results pages (SERPs) over the past two years.

In contrast, TheKitchn.com was his example of a website that meets the criteria of the new “holistic” approach to content creation. It is killing it in the SERPs for terms related to “boiled eggs,” even as eHow saw a stunning drop in rankings for the same topics.

To build on Tober’s examples, I scored ranking pages from these sites with free tools. I also use a New York Times piece for some tests and a Wikipedia page for the last three. If the automated tools do their job, we should find poor scores for eHow and good scores for all the others. Let’s see how the tools do.

Keyword Research Tools Find Related Phrases Ranking From a Webpage

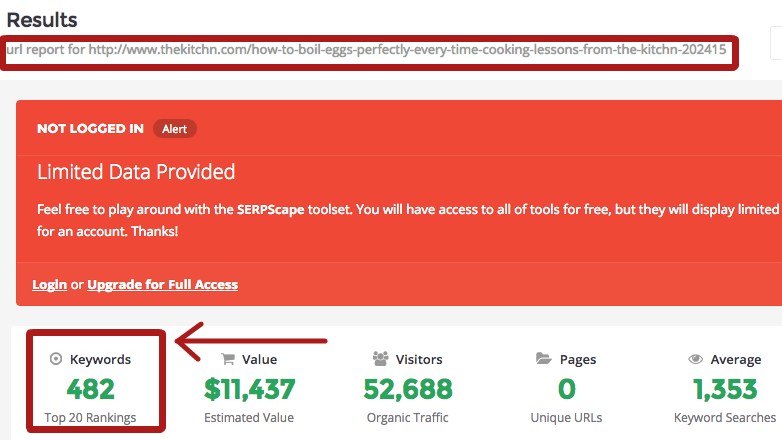

Three free tools track how tens of millions of keywords rank on Google and then report metrics for the web pages ranking on those keywords. Using their data, I can determine whether Google is willing to rank many phrases or topics from one page. All of the co-ranking phrases these tools report for a page have good search volume, since the tools track the “most popular” keyword phrases.

The Kitchn piece has 66 comments, so you need to decide whether to include comments in the analysis. Since you’re evaluating the content you have control over, it’s probably best to leave them out. Of course, more comments show more engagement, and Google will use this as a positive signal.

If many related terms rank from a single web page, it is more likely to have comprehensive content, as is the case with TheKitchn.com.

Sure, links are super important, but the Searchmetrics 2015 study shows that technical and on-page factors are just as important. If all other ranking signals are equal, a page with more comprehensive content should have more phrases ranking. When you score competitors’ holistic content by the number of related keyword phrases ranking from a page, you might also check the “link equity” score from Moz or Ahrefs to see how much links are helping multiple phrases from that page rank.
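If you export keyword rankings from one of these tools, tallying co-ranking phrases per page takes only a few lines. The Python sketch below assumes a hypothetical export of (keyword, URL, position) rows; the top-20 cutoff and the phrases-per-link-equity ratio are illustrative choices of mine, not features of SEMrush, SpyFu, SerpScape, Moz, or Ahrefs.

```python
from collections import defaultdict

# Hypothetical export format: one (keyword, ranking_url, position) row per tracked keyword.
Row = tuple[str, str, int]


def co_ranking_counts(rows: list[Row], max_position: int = 20) -> dict[str, int]:
    """Count distinct keywords for which each URL ranks at or above max_position.

    max_position=20 is an assumed cutoff (top two result pages), not a tool setting.
    """
    keywords_by_url: dict[str, set[str]] = defaultdict(set)
    for keyword, url, position in rows:
        if position <= max_position:
            # Lowercase so "Boil Eggs" and "boil eggs" count once.
            keywords_by_url[url].add(keyword.lower())
    return {url: len(kws) for url, kws in keywords_by_url.items()}


def phrases_per_link_equity(counts: dict[str, int],
                            link_equity: dict[str, float]) -> dict[str, float]:
    """Hypothetical normalization: ranking phrases per point of link-equity score.

    URLs with a missing or zero link-equity score are skipped to avoid dividing by zero.
    """
    return {
        url: counts[url] / link_equity[url]
        for url in counts
        if link_equity.get(url, 0) > 0
    }
```

A page with many co-ranking phrases but modest link equity is a candidate for having genuinely comprehensive content, though, as the study itself cautions, this remains a correlation.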

I chose one of the Wikipedia pages that drives the most traffic to the site (according to SEMrush data): its entry for “Facebook.” Here are the other three pages:

- How To Boil Eggs Perfectly Every Time – The Kitchn

- How to Boil Eggs Without Cracking Them – eHow

- How to Hard-Boil Eggs in a Microwave – eHow

SerpScape

This new-ish website says it looks at 40,000,000 SERPs on a rolling basis. That puts it in a class with only two other tools offering a “free” or “freemium” version that updates tens of millions of keywords per month. The others are SEMrush (where I previously worked) and SpyFu.

SpyFu

The company says it is “Indexing over 4 billion results across 64 million domains” in the US and UK, but it does not specify how it defines results. Free users can only see the breakdown of related results for the top ten, but SpyFu does show the number of related terms it has found for the page.

SEMrush

The software company updates 40 million keywords in the US (120 million including other country databases), also on a rolling basis over the course of the month.

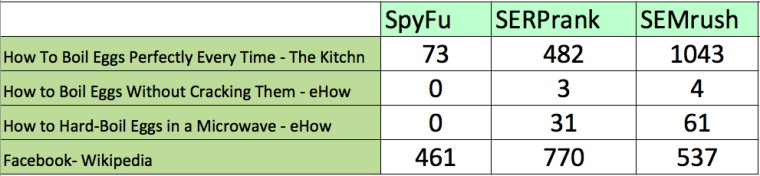

Wow, a lot of variance in these results. Keep in mind that when tools track “popular keywords,” their lists can be quite different from each other. This is the main reason for the variance.
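One way to check how much two tools’ keyword lists actually overlap is Jaccard similarity: shared keywords divided by all distinct keywords. This sketch assumes you have already exported each tool’s keyword list for the same page; a low score suggests the variance comes from which keywords each tool tracks rather than from the page itself.

```python
def keyword_overlap(tool_a: set[str], tool_b: set[str]) -> float:
    """Jaccard similarity of two keyword lists: |A & B| / |A | B|.

    Keywords are trimmed and lowercased so trivial formatting differences
    between tools do not count as disagreement.
    """
    a = {k.strip().lower() for k in tool_a}
    b = {k.strip().lower() for k in tool_b}
    union = a | b
    if not union:
        return 1.0  # two empty lists are trivially identical
    return len(a & b) / len(union)
```

A score of 1.0 means both tools track exactly the same phrases for the page; a score near 0 means their co-ranking counts are not really comparable.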

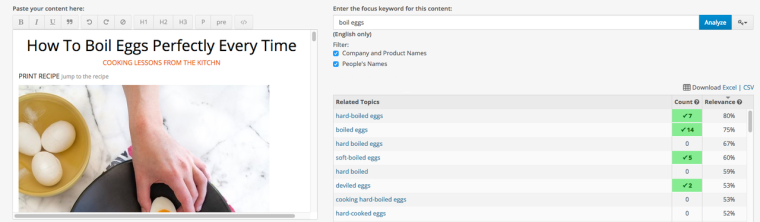

MarketMuse

This company offers a free version as well as paid plans (Note: I do consulting for this company). Its developers are experts in the machine learning space and created their own knowledge graph technology to score websites and web pages for topic relatedness and to locate gaps.

Users can simply copy a page, including images and other media, and paste it into a field in the “blog research” tool. You can also examine holistic content scores for all pages under one domain, which lets you figure out where there are “gaps” in your content.

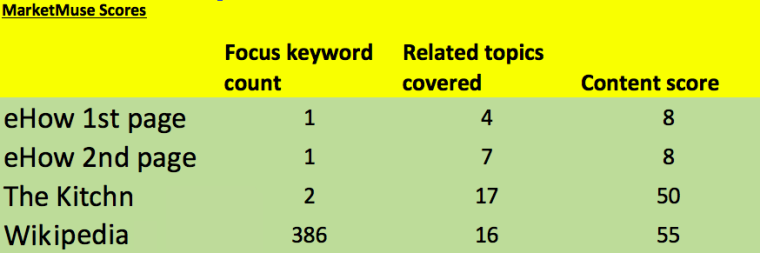

Here’s a chart of how the pages scored.

The “focus keyword” is the word or phrase you searched as the page’s main topic. For the Wikipedia page I searched “Facebook,” so its number is most useful when compared to another web page on the same topic. “Related topics” counts topics mentioned at least once; by that measure, the single Kitchn page has more than both eHow pages combined. “Content score” starts from the number of topics but also counts how often each related topic is mentioned, up to a maximum of five points per topic.
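The two MarketMuse numbers described above can be approximated in a few lines. This is a minimal sketch, assuming you already have a list of related topics to check; producing that list is where MarketMuse’s knowledge graph does the real work, and its actual scoring may differ. The function name and whole-phrase matching are my own choices.

```python
import re

MAX_POINTS_PER_TOPIC = 5  # cap described in the article


def topic_scores(text: str, related_topics: list[str]) -> tuple[int, int]:
    """Return (related topics mentioned at least once, capped content score).

    Hypothetical approximation: each topic earns one point per whole-phrase
    mention, up to MAX_POINTS_PER_TOPIC points.
    """
    lowered = text.lower()
    related = 0
    content = 0
    for topic in related_topics:
        # \b boundaries keep "egg" from matching inside "eggplant".
        pattern = r"\b" + re.escape(topic.lower()) + r"\b"
        count = len(re.findall(pattern, lowered))
        if count:
            related += 1
            content += min(count, MAX_POINTS_PER_TOPIC)
    return related, content
```

The cap is what separates the two numbers: repeating one topic many times cannot substitute for covering more topics.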

For the eHow article about microwaving eggs, the most important topical gap was a lack of topics related to eggs or cooking. Only two phrases relate to these, and “microwaves” is mentioned four times. The article’s egg-cooking method is an unorthodox use of microwaves, and its first sentence says: “boil eggs in the microwave oven at your own risk because there is a chance that the eggs can explode.”

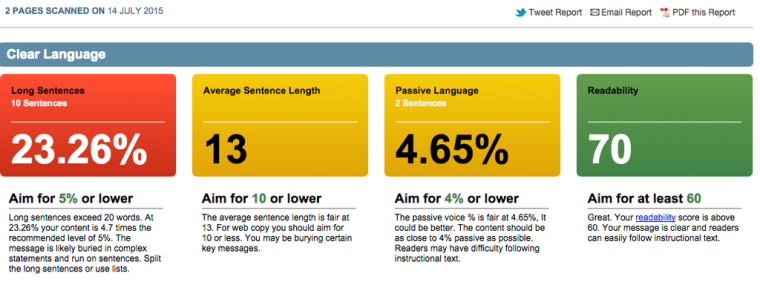

Clarity Grader

This tool judges readability. Tober points out that general-interest sites should be easier to read than academic or specialized sites, which makes sense, and all three sites we are looking at in this article are general interest. The tool bases its score largely on how many sentences are too long. That seems a bit subjective for an algorithmic determination, given the subtleties of what constitutes “general interest” versus the reading level of a site’s users.
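Sentence-length metrics like the ones Clarity Grader reports are easy to approximate. This sketch uses a naive sentence splitter and a hypothetical 20-word cutoff for a “long” sentence; Clarity Grader does not say what threshold it uses, so expect its numbers to differ.

```python
import re

LONG_SENTENCE_WORDS = 20  # hypothetical threshold; Clarity Grader's cutoff is not published here


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after ., ! or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def sentence_length_stats(text: str) -> tuple[float, float]:
    """Return (average words per sentence, percent of sentences over the threshold)."""
    sentences = split_sentences(text)
    if not sentences:
        return 0.0, 0.0
    lengths = [len(s.split()) for s in sentences]
    avg = sum(lengths) / len(lengths)
    long_pct = 100 * sum(n > LONG_SENTENCE_WORDS for n in lengths) / len(lengths)
    return avg, long_pct
```

The splitter trips on abbreviations such as “U.S. officials,” which is one reason different graders report different averages for the same page.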

Here I used a new page to see how content from another authoritative website known for comprehensive coverage would perform: a very important front-page New York Times story on the completion of the Iran nuclear deal, published the day the deal was announced.

Clarity Grader agrees with Tober: people like quick reads, so shorter is better. The top two eHow boiled-egg pages scored 23.3% for long sentences, 13 words for average sentence length, 4.7% for passive language, and 70 for readability. The 2015 Search Ranking Factors study found “the complexity of the content has decreased; according to the results of the Flesch readability analysis the texts are somewhat less demanding to read” than in 2014.

The Kitchn had similar scores, though I expected them to be better. It was about 2% lower on long sentences but 2% higher on “passive language,” and its final readability score of 69 was just one point off eHow’s.

The Times page has 2,050 words. Its average sentence length is 16 words, and 37% of its sentences are scored as long. According to the tool, 13% of the language on the page is passive. Overall readability is 49/100, which requires a reading level above 10th grade. Since even The Times did poorly on “Readability,” take these results with a grain of salt. The site says: “This means some content is complex and likely inaccessible when skimmed. Aim for 60 or above on the Flesch readability scale. Simplify by using simpler words / phrases and shorter sentences.”
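The Flesch Reading Ease formula the site refers to is 206.835 - 1.015 × (words / sentences) - 84.6 × (syllables / words). The sketch below implements it with a rough vowel-group syllable counter, which is my own assumption; dictionary-based counters are more accurate, so treat the output as approximate rather than a match for Clarity Grader.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, drop a trailing silent 'e' (but keep '-le')."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_reading_ease(text: str) -> float:
    """206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text needs at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (206.835
            - 1.015 * (len(words) / len(sentences))
            - 84.6 * (syllables / len(words)))
```

Very simple text can score above 100; with this counter, “The cat sat on the mat.” scores about 116. To apply the site’s guidance, you would check `flesch_reading_ease(text) >= 60`.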

Nibler

The tool finds the average number of words per page, a sign of comprehensive coverage noted in the Searchmetrics report. Nibler offers a plethora of metrics, but most measure technical aspects of a web page. It can’t single out one page’s length, but it is useful for getting metrics across many pages alongside a single page: it crawls a sample of 10 pages linked from the page you provide.
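To reproduce a Nibler-style average yourself, count the visible words on each sampled page and average them. This sketch works on HTML strings you have already fetched (for example, a similar sample of 10 linked pages) and uses only the standard library’s `html.parser`; the class and function names are hypothetical, and because it counts navigation and footer text too, it will overstate article length somewhat.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collect text nodes, skipping <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def word_count(html: str) -> int:
    """Whitespace-delimited word count of a page's text content."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return len(" ".join(parser.chunks).split())


def average_word_count(pages: list[str]) -> float:
    """Average words per page over a sample of already-fetched HTML pages."""
    if not pages:
        return 0.0
    return sum(word_count(p) for p in pages) / len(pages)
```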

Nibler gives The Kitchn a 9 out of 10 for “content quantity,” for an average of 785 words per page. eHow gets a 7.7; its average page has 250 words, less than a third of the content found on The Kitchn. The average NYTimes.com page has 1,371 words, giving it an 8.5 for content.

Scoring the Scorers: How Accurate Are The Tools and Techniques?

Automated grading usually serves as a quick spot check in place of hands-on reviews of web pages. I’ll break down the results into three key metrics.

Semantically Relevant and Comprehensive Wording

eHow did significantly worse with the keyword research tools for related phrases, as well as for “topic gaps” from MarketMuse. This metric is the most difficult to judge, and perhaps the most important.

Our results are correlational for the keyword tools: Google could be using other ranking signals to put many phrases from the same page high in the SERPs. There may well be a feedback loop here: sites with more comprehensive pages will tend to get more links because users see comprehensive content as good content. Searchmetrics demonstrated that this correlation is strong across hundreds of websites.

MarketMuse does not rely on correlations; its results are based on its own “knowledge graph” data. Do its machine results match a human’s more nuanced reading of a page? From the pages I’ve analyzed beyond this article, the answer is yes. Judge for yourself.

Long Form or Higher Word-Count Content

This is a straightforward score, and Nibler shows the eHow pages are too short.

Easy to Read Content

This is a bit tough to judge for accuracy. We saw the reverse of what we would expect, with eHow doing best and the New York Times doing poorly! The problem here is context and a website’s goals.

Some websites benefit from being more challenging to read. For example, complexity draws people to The Times and keeps them on the site longer, which is a good ranking signal. The heavy use of “passive language” is not what I’d expect, though; I’d want to inspect the page manually to see why it does so poorly on this metric. Further, The Kitchn should not have scored below eHow, however close the scores were.
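Before that manual inspection, a crude filter can point you to the sentences most likely driving a passive-language score. This sketch flags a form of “to be” followed by a word ending in -ed or -en (optionally after an -ly adverb); it is a rough heuristic of my own, not Clarity Grader’s method, and it misses irregular participles (“was made”) and produces some false positives (“are garden”).

```python
import re

# Crude heuristic: a "to be" form, an optional -ly adverb, then a word ending in -ed or -en.
PASSIVE_PATTERN = re.compile(
    r"\b(am|is|are|was|were|be|been|being)\s+(\w+ly\s+)?\w+(ed|en)\b",
    re.IGNORECASE,
)


def flag_passive_sentences(text: str) -> list[str]:
    """Return sentences that match the passive-voice heuristic, for manual review."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if PASSIVE_PATTERN.search(s)]
```

The output is a reading list, not a verdict: a human still has to decide whether each flagged sentence is actually passive and whether rewriting it would help.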

Final Thoughts

These increasingly crucial content scoring capabilities are behind much of Moz’s upcoming stand-alone software, “Moz Content.” The company plans the launch for December 2015, and it will be one of very few tools in this burgeoning space priced below enterprise level (actual pricing and a possible free version have not been announced yet).

Machine grading has its challenges; ultimately, evaluating the content on a website may take some very, very complex artificial intelligence. But, and it’s a big BUT, these results show how powerful automation can be for judging relevance and comprehensiveness.

Tober mentioned that the eHow pages are written around keyword placement without enough regard for usefulness. Reading one of their egg articles, I immediately frowned at its focus on cooking eggs in a microwave, where they might explode. How much thought really went into that piece?

Holistic coverage of a topic is useless if the base advice given in a web page is questionable at best. That’s when you need to stop and decide: let’s not give advice just to rank for keywords. Why encourage people to do something dangerous if your site is all about advice? Doesn’t that ruin credibility? The second sentence of the article admits that explosions could occur if you follow their advice. There are eight comments from users, and two mention disasters: [sic] “4 minutes, 2 eggs covered with water in a 2 cup Pyrex. blew the microwave to smithereens. DON”T do this. It was a rival 900 watt micro R.I.P.”

Give computer algorithms a couple of years, and I bet they will be able to spot complex problems with on-page logic. Perhaps Google can already find topic associations that are plain wrong?

Image Credits

Featured Image: Birdiegal/Shutterstock.com

Images #1 and #3: Images by Eric Van Buskirk

Screenshot by Eric Van Buskirk. Taken July 2015