PageRank was once at the very core of search – and was what made Google the empire it is today.

Even if you believe that search has moved on from PageRank, there’s no denying that it has long been a pervasive concept in the industry.

Every SEO pro should have a good grasp of what PageRank was – and what it still is today.

In this article, we’ll cover:

- What is PageRank?

- The history of how PageRank evolved.

- How PageRank revolutionized search.

- Toolbar PageRank vs. PageRank.

- How PageRank works.

- How PageRank flows between pages.

- Is PageRank still used?

Let’s dive in.

What Is PageRank?

Created by Google founders Larry Page and Sergey Brin, PageRank is an algorithm based on the combined relative strengths of all the hyperlinks on the Internet.

Most people argue that the name was based on Larry Page’s surname, whilst others suggest “Page” refers to a web page. Both positions are likely true, and the overlap was probably intentional.

When Page and Brin were at Stanford University, they wrote a paper entitled: The PageRank Citation Ranking: Bringing Order to the Web.

Published in January 1999, the paper demonstrates a relatively simple algorithm for evaluating the strength of web pages.

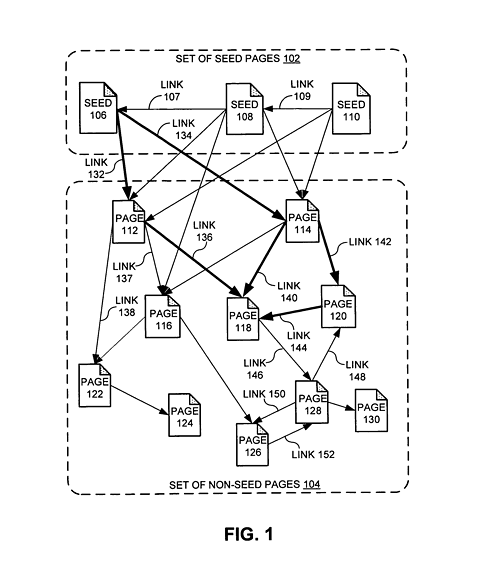

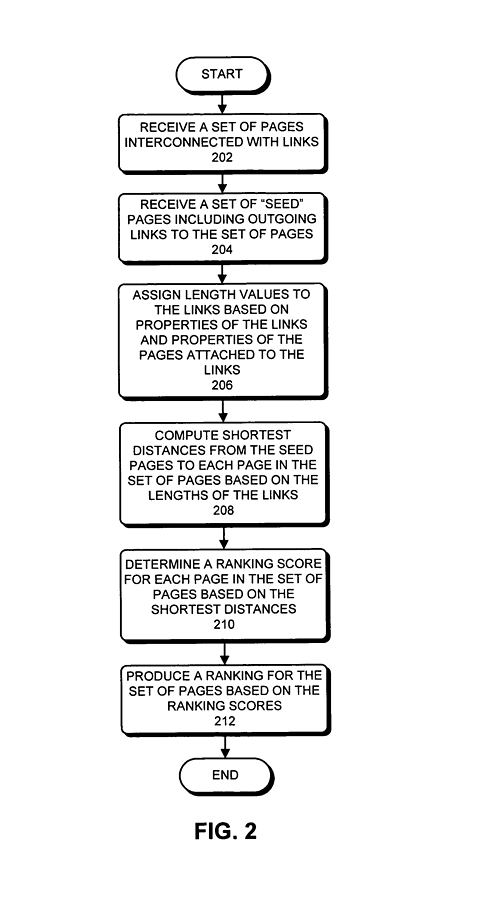

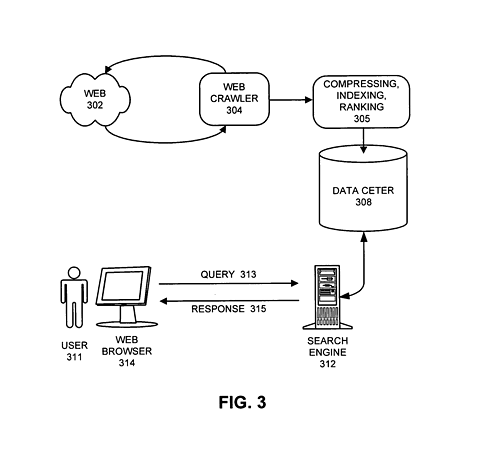

Image from patents.google.com, April 2023

The paper went on to become a patent in the U.S. (but not in Europe, where mathematical formulas are not patentable).

Stanford University owns the patent and has assigned it to Google. The patent is currently due to expire in 2027.

The History Of How PageRank Evolved

During their time at Stanford in the late 1990s, both Brin and Page were looking at information retrieval methods.

At that time, using links to work out how “important” each page was relative to another was a revolutionary way to order pages. It was computationally difficult but by no means impossible.

The idea quickly turned into Google, which at that time was a minnow in the world of search.

There was so much institutional belief in Google’s approach from some parties that the business initially launched its search engine with no ability to earn revenue.

And while Google (known at the time as “BackRub”) was the search engine, PageRank was the algorithm it used to rank pages in the search engine results pages (SERPs).

The Google Dance

One of the challenges of PageRank was that the math, whilst simple, needed to be iteratively processed. The calculation runs multiple times, over every page and every link on the Internet. At the turn of the millennium, this math took several days to process.

The Google SERPs moved up and down during that time. These changes were often erratic, as new PageRanks were being calculated for every page.

This was known as the “Google Dance,” and it notoriously stopped SEO pros of the day in their tracks every time Google started its monthly update.

(The Google Dance later became the name of an annual party that Google ran for SEO experts at its headquarters in Mountain View.)

Trusted Seeds

A later iteration of PageRank introduced the idea of a “trusted seed” set to start the algorithm rather than giving every page on the Internet the same initial value.

Reasonable Surfer

Another iteration of the model introduced the idea of a “reasonable surfer.”

This model suggests that the PageRank of a page might not be shared evenly with the pages it links out to – but could weight the relative value of each link based on how likely a user might be to click on it.
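
As a rough illustration of that idea, the sketch below splits a page's PageRank across its links in proportion to a click-likelihood weight rather than evenly. The function name `split_by_click_weight`, the link labels, and the weights are all hypothetical; the source does not say how Google actually weights links.

```python
def split_by_click_weight(page_rank, link_weights):
    """Share a page's rank across its links in proportion to assumed click weights."""
    total = sum(link_weights.values())
    return {target: page_rank * weight / total
            for target, weight in link_weights.items()}


# Hypothetical weights: a prominent in-content link is assumed to be
# clicked more often than a sidebar or footer link.
weights = {"main_content_link": 6.0, "sidebar_link": 3.0, "footer_link": 1.0}
shares = split_by_click_weight(1.0, weights)
```

With equal weights, this reduces to the even split used in the original model.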

The Retreat Of PageRank

Inside Google, the algorithm was initially believed to be “unspam-able,” since the importance of a page was dictated not just by its content but also by a sort of “voting system” generated by links to the page.

Google’s confidence did not last, however.

PageRank started to become problematic as the backlink industry grew. So Google withdrew it from public view, but continued to rely on it for its ranking algorithms.

The PageRank Toolbar was withdrawn by 2016, and eventually, all public access to PageRank was curtailed. But by this time, Majestic (an SEO tool), in particular, had been able to correlate its own calculations quite well with PageRank.

Google spent many years encouraging SEO professionals away from manipulating links, both through its “Google Guidelines” documentation and through advice from its spam team, which Matt Cutts headed up until January 2017.

Google’s algorithms were also changing during this time.

The company was relying less on PageRank and, following its 2010 purchase of Metaweb and its proprietary knowledge graph (called “Freebase”), Google started to index the world’s information in different ways.

Toolbar PageRank Vs. PageRank

Google was initially so proud of its algorithm that it was happy to publicly share the result of its calculation with anyone who wanted to see it.

The most notable representation was a toolbar extension for browsers like Firefox, which showed a score between 0 and 10 for every page on the Internet.

In truth, PageRank has a much wider range of scores, but 0-10 gave SEO pros and consumers an instant way to assess the importance of any page on the Internet.

The PageRank Toolbar made the algorithm extremely visible, which also came with complications. In particular, it made clear that links were the easiest way to “game” Google.

The more links a page had (or, more accurately, the better those links were), the better it could rank in Google’s SERPs for any targeted keyword.

This meant that a secondary market formed, in which links were bought and sold at prices based on the PageRank of the URL carrying the link.

This problem was exacerbated when Yahoo launched a free tool called Yahoo Site Explorer, which allowed anyone to start finding links into any given page.

Later, two tools – Moz and Majestic – built on the free option by building their own indexes of the Internet and separately evaluating links.

How PageRank Revolutionized Search

Other search engines relied heavily on analyzing the content on each page individually. These methods had little way to tell an influential page from one simply written with random (or manipulative) text.

This meant that the retrieval methods of other search engines were extremely easy for SEO pros to manipulate.

Google’s PageRank algorithm, then, was revolutionary.

Combined with a relatively simple concept of “nGrams” to help establish relevancy, Google found a winning formula.

It soon overtook the main incumbents of the day, such as AltaVista and Inktomi (which powered MSN, amongst others).

By operating at a page level, Google also found a much more scalable solution than the “directory” based approach adopted by Yahoo and later DMOZ – although DMOZ (also called the Open Directory Project) was able to provide Google initially with an open-source directory of its own.

How PageRank Works

The formula for PageRank comes in a number of forms but can be explained in a few sentences.

Initially, every page on the Internet is given an estimated PageRank score. This could be any number. Historically, PageRank was presented to the public as a score between 0 and 10, but in practice, the estimates do not have to start in this range.

The PageRank for that page is then divided by the number of links out of the page, resulting in a smaller fraction.

The PageRank is then distributed out to the linked pages – and the same is done for every other page on the Internet.

Then for the next iteration of the algorithm, the new estimate for PageRank for each page is the sum of all the fractions of pages that link into each given page.

The formula also contains a “damping factor,” which was described as the chance that a person surfing the web might stop surfing altogether.

Before each subsequent iteration of the algorithm starts, the proposed new PageRank is reduced by the damping factor.

This methodology is repeated until the PageRank scores reach a settled equilibrium. The resulting numbers were then generally transposed into a more recognizable range of 0 to 10 for convenience.
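
The steps above can be sketched in a few lines of Python. This is a minimal illustration on a three-page toy graph, not Google's implementation. It assumes the commonly published form of the update, in which each page receives a small baseline of `(1 - d) / n` plus `d` times the shares passed in by linking pages, and it assumes `d = 0.85`, a commonly cited value. The function name `pagerank`, the `toy_web` graph, and the stopping tolerance are all hypothetical.

```python
def pagerank(links, d=0.85, tol=1e-10, max_iter=200):
    """Iterate PageRank over a dict mapping each page to the pages it links to.

    Assumes every page has at least one outgoing link.
    """
    pages = list(links)
    n = len(pages)
    ranks = {page: 1.0 / n for page in pages}  # equal starting estimate

    for _ in range(max_iter):
        # Every page starts the round with a small baseline share.
        new_ranks = {page: (1.0 - d) / n for page in pages}
        for page, outlinks in links.items():
            share = ranks[page] / len(outlinks)  # split evenly across outlinks
            for target in outlinks:
                new_ranks[target] += d * share   # damped share flows to target
        change = sum(abs(new_ranks[p] - ranks[p]) for p in pages)
        ranks = new_ranks
        if change < tol:  # scores have settled
            break
    return ranks


toy_web = {
    "a": ["b", "c"],
    "b": ["c"],
    "c": ["a"],
}
scores = pagerank(toy_web)
```

In this toy graph, page `c` ends up with the highest score because it receives links from both other pages, while `b` receives only half of `a`'s share.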

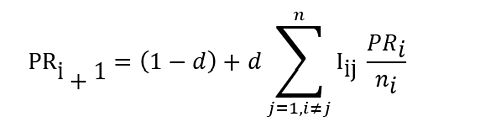

One way to represent this mathematically is:

Image from author, April 2023

Where:

- PR = PageRank in the next iteration of the algorithm.

- d = damping factor.

- j = the page number on the Internet (if every page had a unique number).

- n = total number of pages on the Internet.

- i = the iteration of the algorithm (initially set as 0).
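
For readers without the image, one widely published form of the update that uses these symbols is shown below. It is offered as an assumption, not as the author's exact equation. The extra symbols are introduced here for illustration: $P_j$ is page $j$, $B(P_j)$ is the set of pages linking to $P_j$, and $L(P_k)$ is the number of links out of page $P_k$. In this form, $d$ is the probability that the surfer keeps following links, so $1 - d$ is the chance of stopping.

$$
PR_{i+1}(P_j) = \frac{1 - d}{n} + d \sum_{P_k \in B(P_j)} \frac{PR_i(P_k)}{L(P_k)}
$$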

The formula can also be expressed in matrix form.
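
A common way to write that matrix form, given here as a sketch under stated assumptions and not taken from the source, collects all scores at iteration $i$ into a vector $\mathbf{r}_i$ of length $n$. The link matrix $M$ is an assumed notation: $M_{jk} = 1 / L_k$ if page $k$ links to page $j$ and $0$ otherwise, where $L_k$ is the number of links out of page $k$. $\mathbf{1}$ is a vector of ones and $d$ is the damping factor.

$$
\mathbf{r}_{i+1} = d \, M \, \mathbf{r}_i + \frac{1 - d}{n} \, \mathbf{1}
$$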

Problems And Iterations To The Formula

The formula has some challenges.

If a page does not link out to any other page, its PageRank has nowhere to flow, and the formula will not reach an equilibrium.

To handle this, the PageRank of such a page is distributed amongst every page on the Internet. In this way, even a page with no incoming links could get some PageRank – but it would not accumulate enough to be significant.
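
A minimal sketch of that adjustment is shown below, assuming the same baseline-plus-damped-shares update that is commonly published and a damping factor of 0.85. The function name `pagerank_with_dangling`, the page names, and the fixed iteration count are hypothetical.

```python
def pagerank_with_dangling(links, d=0.85, iterations=100):
    """PageRank where pages with no outlinks spread their rank over all pages."""
    pages = list(links)
    n = len(pages)
    ranks = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Total rank currently held by pages with no outgoing links.
        dangling = sum(ranks[p] for p in pages if not links[p])
        # Baseline plus an equal share of the dangling rank for every page.
        base = (1.0 - d) / n + d * dangling / n
        new_ranks = {page: base for page in pages}
        for page, outlinks in links.items():
            for target in outlinks:
                new_ranks[target] += d * ranks[page] / len(outlinks)
        ranks = new_ranks
    return ranks


toy_web = {
    "home": ["about", "dead_end"],
    "about": ["home"],
    "dead_end": [],        # no links out
    "unlinked": ["home"],  # no links in
}
scores = pagerank_with_dangling(toy_web)
```

Here `unlinked` still ends up with a small, non-zero score even though nothing links to it, and that score is the lowest in the graph.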

Another less documented challenge is that newer pages, whilst potentially more important than older pages, will have a lower PageRank. This means that over time, old content can have a disproportionately high PageRank.

The time a page has been live is not factored into the algorithm.

How PageRank Flows Between Pages

If a page starts with a value of 5 and has 10 links out, then every page it links to is given 0.5 PageRank (less the damping factor).
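
The arithmetic can be worked through directly. The sketch below reads “less the damping factor” as multiplying each share by `d`, and it assumes `d = 0.85`; the article does not state a value, so both are assumptions.

```python
page_rank = 5.0       # starting value from the example
outbound_links = 10   # links out of the page
d = 0.85              # assumed damping factor, not given in the article

share_per_link = page_rank / outbound_links  # even split before damping
passed_per_link = d * share_per_link         # share after applying damping
```

Under these assumptions, each linked page would receive 0.5 before damping and 0.425 after it.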

In this way, the PageRank flows around the Internet between iterations.

As new pages come onto the Internet, they start with only a tiny amount of PageRank. But as other pages start to link to these pages, their PageRank increases over time.

Is PageRank Still Used?

Although public access to PageRank was removed in 2016, it is believed the score is still available to search engineers within Google.

A leak of the factors used by Yandex showed that PageRank remained as a factor that it could use.

Google engineers have suggested that the original form of PageRank was replaced with a new approximation that requires less processing power to calculate. Whilst the formula is less important in how Google ranks pages, it remains a constant for each web page.

And regardless of what other algorithms Google might choose to call upon, PageRank likely remains embedded in many of the search giant’s systems to this day.


Original Patents And Papers For More In-Depth Reading:

- Method for node ranking in a linked database

- The PageRank Citation Ranking: Bringing Order to the Web

- The Anatomy of a Large-Scale Hypertextual Web Search Engine


Featured Image: VectorMine/Shutterstock
