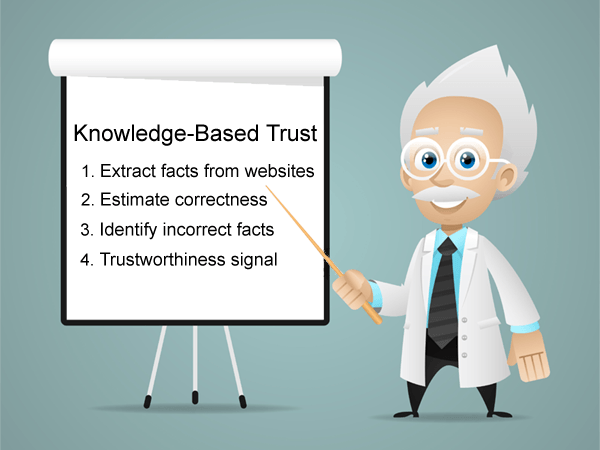

By now you may have read about Knowledge-Based Trust, a Google research paper that describes a method of scoring web documents according to the accuracy of the facts they contain. Knowledge-Based Trust has been referred to as the Truth Algorithm, a way to assign a Trust Score to weed out sites that contain wrong information.

According to the title of an article in New Scientist, “Google wants to rank websites based on facts not links.” The idea is to identify key facts in a web page and score them for their accuracy by assigning a trust score.

The researchers are careful to note in the paper that the algorithm does not penalize sites for a lack of facts. The study also shows that the approach can discover relevant web pages with low PageRank that current technology would otherwise overlook.

In current algorithms, links are a signal of popularity that implies authority in a particular topic. But popularity does not always mean a web page contains accurate information. A good example may be celebrity gossip websites. Getting past simple popularity signals and creating an algorithm that can understand what a website is about is a direction that search technology is moving in today, underpinned by research in artificial intelligence.

Ray Kurzweil, a Director of Engineering at Google, has been tasked with creating an artificial intelligence that can understand content itself without relying on third-party signals like links. Knowledge-Based Trust, a way to determine the accuracy of facts, appears to be part of this trend of moving away from link signals and toward understanding the content itself.

There’s only one problem: the research paper itself states that there are at least five issues to overcome before Knowledge-Based Trust is ready to be applied to billions of web pages.

Is Knowledge-Based Trust coming soon? Or will we see it integrated into current algorithms?

I asked Dr. Pete Meyers of Moz.com, and his opinion was:

“We tend to see each new ranking factor as replacing the old ones. We jump at everything as if it’s going to uproot links. I think the reality is that more and more factors are corroborating, and the system is becoming more complex.”

I agree with Dr. Meyers. KBT may be better viewed as a corroborating factor than as a replacement for current algorithms. What matters about KBT is that it demonstrates Google is researching technologies that focus on understanding content, rather than relying on second-hand signals like links. Links measure popularity, but they reflect relevance and accuracy only indirectly, and sometimes erroneously.

This research demonstrates that an accuracy score is possible, and it shows that this approach can discover useful web pages with low PageRank scores. But the question remains: is Knowledge-Based Trust coming soon? The self-assessment in the conclusion of the paper notes several achievements, but it also lists five issues that need to be overcome. Let’s review these issues so you can make up your own mind.

Issue #1: Irrelevant Noise

The algorithm identifies a fact by examining three elements, which the paper calls “Knowledge Triples”: a subject, a predicate, and an object. A subject is a “real-world entity” such as a person, place or thing. A predicate describes an attribute of that entity. According to the research paper, an object is “an entity, a string, a numerical value, or a date.”

Those three elements together form a fact, which the research paper calls a Knowledge Triple and which is often referred to simply as a triple. An example of a triple is: Barack Obama was born in Honolulu. Here, Barack Obama is the subject, the place of birth is the predicate, and Honolulu is the object. The problem with this method is that extracting triples from websites also yields irrelevant triples, ones that diverge from the topic of the web page. The research study concludes:

“To avoid evaluating KBT on topic irrelevant triples, we need to identify the main topics of a website, and filter triples whose entity or predicate is not relevant to these topics.”

The paper does not describe how difficult it would be to weed out irrelevant triples. So the difficulty and time frame for addressing this issue remain open to speculation.
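To make the triple structure and the proposed topic filter concrete, here is a minimal Python sketch. The `Triple` type, the `filter_on_topic` helper, and the hand-written topic sets are illustrative assumptions, not anything taken from the paper, which leaves open how a site's main topics would be identified.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    """A knowledge triple: subject, predicate, object."""
    subject: str    # real-world entity, e.g. a person, place or thing
    predicate: str  # attribute of that entity
    obj: str        # entity, string, numerical value, or date


def filter_on_topic(triples, topic_entities, topic_predicates):
    """Drop triples whose entity or predicate is not relevant to the site's topics.

    How the topic sets would be derived is left open; here they are
    supplied by hand (an assumption for illustration only).
    """
    return [
        t for t in triples
        if t.subject in topic_entities and t.predicate in topic_predicates
    ]


# Hypothetical triples extracted from a site about U.S. presidents.
extracted = [
    Triple("Barack Obama", "born in", "Honolulu"),
    Triple("Example Movie", "filmed in language", "Hindi"),  # off-topic noise
]

on_topic = filter_on_topic(
    extracted,
    topic_entities={"Barack Obama"},
    topic_predicates={"born in"},
)
print(on_topic)
```

The filter itself is trivial once the topic sets exist. The open problem the researchers point to is producing those sets reliably for every website.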

Issue #2: Trivial Facts

KBT does not adequately filter out trivial facts, so they end up being used as a scoring signal. The research paper uses the example of a Bollywood site that states on nearly every page that a movie is filmed in the Hindi language. That’s identified as a trivial fact that should not be used for scoring trustworthiness. Trivial facts lower the accuracy of the KBT score because a web page can earn an unnaturally high trust score from them.

As with the first issue, the researchers describe possible solutions to the problem but are silent as to how difficult those solutions may be to create. The important fact is that this second issue must be solved before KBT can be applied to the Internet, pushing back the date of implementation even further.
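One way to picture the trivial-fact problem is a frequency heuristic: a predicate and object pair that shows up on nearly every page of a site says little about that site's accuracy. The sketch below is an assumed heuristic for illustration, not the solution the researchers propose; the function names and the 0.8 cutoff are hypothetical.

```python
from collections import Counter


def find_trivial_pairs(pages, max_share=0.8):
    """Return (predicate, object) pairs found on more than max_share of pages.

    pages: one list of (subject, predicate, object) tuples per page.
    """
    if not pages:
        return set()
    page_counts = Counter()
    for triples in pages:
        # Count each pair at most once per page.
        page_counts.update({(pred, obj) for _subj, pred, obj in triples})
    return {pair for pair, n in page_counts.items() if n / len(pages) > max_share}


def drop_trivial(triples, trivial_pairs):
    """Keep only triples whose (predicate, object) pair is not trivial."""
    return [t for t in triples if (t[1], t[2]) not in trivial_pairs]


# Hypothetical Bollywood site: every page repeats the same language fact.
pages = [
    [("Movie A", "language", "Hindi"), ("Movie A", "release year", "2001")],
    [("Movie B", "language", "Hindi"), ("Movie B", "release year", "2007")],
    [("Movie C", "language", "Hindi"), ("Movie C", "release year", "2012")],
]

trivial = find_trivial_pairs(pages)
scorable = drop_trivial(pages[0], trivial)
print(trivial, scorable)
```

In this toy data the language fact is set aside and only the release year remains as a candidate for scoring.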

Issue #3: Extraction Technology Needs Improvement

KBT is unable to extract data in a meaningful way from websites outside of a controlled environment without being inundated with noise. The technology referred to here is called an extractor. An extractor is a system that identifies triples within a web page and assigns confidence scores to those triples. This section of the document does not explicitly state what the problem with the extractors is; it only cites “limited extraction capabilities”. In order to apply KBT to the web, extractors need to be able to identify triples with a high degree of accuracy. This is an important part of the algorithm that will need to be improved if it’s ever going to see the light of day. Here is what the research document says:

“Our extractors (and most state-of-the-art extractors) still have limited extraction capabilities and this limits our ability to estimate KBT for all websites.”

This is a significant hurdle. The limitations of current extractor technology add a third issue that must be solved before Knowledge-Based Trust can be applied to the World Wide Web.
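A rough sketch of why extractor quality matters: if only high-confidence extractions are usable, a weak extractor leaves few triples to score. The `ExtractedTriple` type, the `keep_confident` helper, the 0.9 threshold, and the confidence values are all hypothetical and chosen only to illustrate the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExtractedTriple:
    subject: str
    predicate: str
    obj: str
    confidence: float  # extractor's confidence, assumed to lie in [0, 1]


def keep_confident(extractions, threshold=0.9):
    """Keep only extractions the extractor is reasonably sure about."""
    return [e for e in extractions if e.confidence >= threshold]


# Hypothetical extractor output for a single page.
extractions = [
    ExtractedTriple("Barack Obama", "born in", "Honolulu", 0.97),
    ExtractedTriple("Barack Obama", "born in", "1961", 0.35),  # misparsed object
]

usable = keep_confident(extractions)
coverage = len(usable) / len(extractions)  # share of extractions left to score
print(usable, coverage)
```

The lower the share of confident extractions, the fewer pages have enough usable triples for a trust score to be estimated at all.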

Issue #4: Duplicate Content

The KBT algorithm cannot sort out sites containing facts copied from other sites. If KBT cannot detect duplicate content, it could be spammed by copying facts from “trusted” sources such as Wikipedia, Freebase, and other knowledge sources. Here is what the researchers state:

“Scaling up copy detection techniques… has been attempted…, but more work is required before these methods can be applied to analyzing extracted data from billions of web sources….”

The researchers tried to apply scaled copy detection as part of the Knowledge-Based Trust algorithm, but it’s simply not ready. This is a fourth issue that will delay the deployment of KBT to Google’s search results pages.
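To illustrate what copy detection over extracted facts might look like at its simplest, the sketch below compares two sites by the overlap of their triple sets (Jaccard similarity). This is a naive stand-in chosen for illustration, not the technique referenced in the paper, and the `triple_overlap` name and sample triples are hypothetical.

```python
def triple_overlap(site_a, site_b):
    """Jaccard similarity between the sets of triples found on two sites."""
    a, b = set(site_a), set(site_b)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


# Hypothetical triples: the suspect site repeats two of the reference's three facts.
reference = [
    ("Entity A", "attribute 1", "value x"),
    ("Entity A", "attribute 2", "value y"),
    ("Entity B", "attribute 1", "value z"),
]
suspect = [
    ("Entity A", "attribute 1", "value x"),
    ("Entity A", "attribute 2", "value y"),
]

overlap = triple_overlap(reference, suspect)
print(round(overlap, 2))
```

A pairwise comparison like this needs one check per pair of sources, so the number of comparisons grows quadratically with the number of sources. That gives a sense of why the researchers say more work is required before such methods can handle billions of them.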

Issue #5: Accuracy

In section 5.4.1 of the document, researchers examined one hundred random sites with low PageRank but high Knowledge-Based Trust scores. The purpose of this examination was to determine how well Knowledge-Based Trust performed in identifying high quality sites over PageRank, particularly low PageRank sites that would have otherwise been overlooked.

Among the one hundred random high-trust sites picked for review, 15 (15%) were errors. Two sites were topically irrelevant, twelve scored high because of trivial triples, and one website had both kinds of errors (topically irrelevant and a high number of trivial triples). This means that in a random sample of high-trust sites with low PageRank, KBT’s false positive rate is on the order of 15%.
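The arithmetic behind that figure is simple enough to check directly. The variable names below are my own; the counts are the ones reported above.

```python
sample_size = 100              # low-PageRank, high-KBT sites reviewed
topically_irrelevant_only = 2  # sites with off-topic triples only
trivial_triples_only = 12      # sites scoring high on trivial triples only
both_errors = 1                # site with both kinds of error

false_positives = topically_irrelevant_only + trivial_triples_only + both_errors
false_positive_rate = false_positives / sample_size

print(f"{false_positives} of {sample_size} = {false_positive_rate:.0%}")
# prints "15 of 100 = 15%"
```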

Research papers whose methods eventually make it into a production algorithm usually demonstrate a vast improvement over previous efforts. That is not the case with Knowledge-Based Trust. While a Truth Algorithm makes an alarming headline, the truth is that there are five important issues that need to be solved before it makes it to an algorithm near you.

What Experts Think About Knowledge-Based Trust

I asked Bill Slawski of GoFishDigital.com about Knowledge-Based Trust, and he said:

“The Knowledge-Based Trust approach is one that seems to focus upon attempting to verify the correctness of content that might be used for direct answers, knowledge panel results and other ‘answers’ to questions by using approaches uncovered during the author’s research while working upon Google’s Knowledge Vault. It doesn’t appear to be attempting to replace either link-based analysis such as PageRank or Information Retrieval scores for pages returned at Google in response to a query.”

Dr. Pete Meyers shares a similar outlook on the future of Knowledge-Based Trust:

“This will be very important for 2nd-generation answer boxes (scraped from the index), because Google has to have some way to grow the Knowledge Graph organically and still keep the data as reliable as possible. I think KBT will be critical to the growth of the Knowledge Graph, and that may start to cross over into organic rankings to some degree. This is going to be a fairly long process, though.”

Is a Truth Algorithm Coming Soon?

Knowledge-Based Trust is an exciting new approach. There are several opinions on where it will be applied, with Dr. Pete observing that it may play a role in growing the Knowledge Graph organically. But on the question of whether Knowledge-Based Trust is coming to a search results page soon, we know there are issues that need resolving. Less clear is how long it will take to resolve those issues.

Now that you have more facts, what is your opinion? Is a truth algorithm coming soon?

Image credit: Shutterstock.com. Used under license.
