One of the most thought-provoking presentations at TechSEO Boost was the keynote by Dr. Ricardo Baeza-Yates, the CTO of NTENT. It was entitled, “Biases in Search and Recommender Systems.”

Spoiler alert: Bias is bad; delivering and monetizing the most relevant content based on user intent is good.

Now, prior to joining NTENT in June 2016, Dr. Baeza-Yates spent 10 years at Yahoo Labs as Vice President of Research, ultimately rising to Chief Research Scientist.

He is an ACM and IEEE Fellow with over 500 publications, tens of thousands of citations, multiple awards and several patents.

He has also co-authored several books including “Modern Information Retrieval”, the most widely used textbook on search.

So, his presentation wasn’t insubstantial, unsupported, or sensational arm-waving. It was a careful examination by an expert in the field of most of the biases that affect search and recommender systems.

This includes biases in the data, the algorithms, and user interaction – with a focus on the ones related to relevance feedback loops (e.g., ranking).

And instead of accusing Google, YouTube, and Amazon of being biased and urging fair, impartial, and unbiased politicians to take drastic action, Dr. Baeza-Yates methodically covered the known techniques to ameliorate most biases – including ones in site search and recommender systems that can cost ecommerce businesses some serious money.

What Is Bias?

Dr. Baeza-Yates started by defining three different types of bias:

- Statistical: Significant systematic deviation from a prior (possibly unknown) distribution.

- Cultural: Interpretations and judgments acquired throughout our lives.

- Cognitive: Systematic pattern of deviation from norm or rationality in judgment.

Now, most critics of search and recommender systems focus on cultural biases, including: gender, racial, sexual, age, religious, social, linguistic, geographic, political, educational, economic, and technological.

But, many people extrapolate results of a sample to the whole population – without considering statistical biases, including the gathering process, sampling process, validity, completeness, noise, or spam.

In addition, there is cognitive bias when measuring bias.

For example, one type of cognitive bias is confirmation bias, which is the tendency to search for, interpret, favor, and recall information in a way that affirms one’s prior beliefs or hypotheses.

So, how does this impact search and recommender systems?

Well, most web systems are optimized by using implicit user feedback. However, user data is partly biased by the choices that these systems make.

For example, we can only click on things that are shown to us.

Because these systems are usually based on machine learning, they learn to reinforce their own biases, yielding self-fulfilling prophecies and/or sub-optimal solutions.

For example, personalization and filter bubbles for users can create echo chambers for recommender systems.
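To see how quickly such a loop can lock in, here is a toy simulation. It is only a sketch and was not part of the keynote: the function name `simulate_feedback_loop`, the number of items, and the click probability are all assumptions made up for illustration. Every item is equally appealing, yet the system only shows the items that already have the most clicks.

```python
import random


def simulate_feedback_loop(n_items=10, k=3, rounds=1000, click_prob=0.3, seed=0):
    """Toy model: every item is equally appealing, but only the k items
    with the most clicks so far are ever shown to users."""
    rng = random.Random(seed)
    clicks = [0] * n_items
    for _ in range(rounds):
        # Rank by clicks so far (ties broken by item index) and show the top k.
        shown = sorted(range(n_items), key=lambda i: (-clicks[i], i))[:k]
        for item in shown:
            # Hypothetical user model: each shown item is clicked with the
            # same probability, so no item is intrinsically better.
            if rng.random() < click_prob:
                clicks[item] += 1
    return clicks


clicks = simulate_feedback_loop()
print(clicks)
```

In this sketch, the items that happen to be shown first collect every click, and the rest never get a chance to prove themselves, because users can only click on what they are shown.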

In addition, these systems sometimes compete among themselves. So, an improvement in one system (e.g., user experience) might be just a degradation in another system (e.g., monetization) that uses a different (even inversely correlated) optimization function.

What Is Being Fair?

Dr. Baeza-Yates also tackled the question, “What is being fair?”

This is a non-technical question.

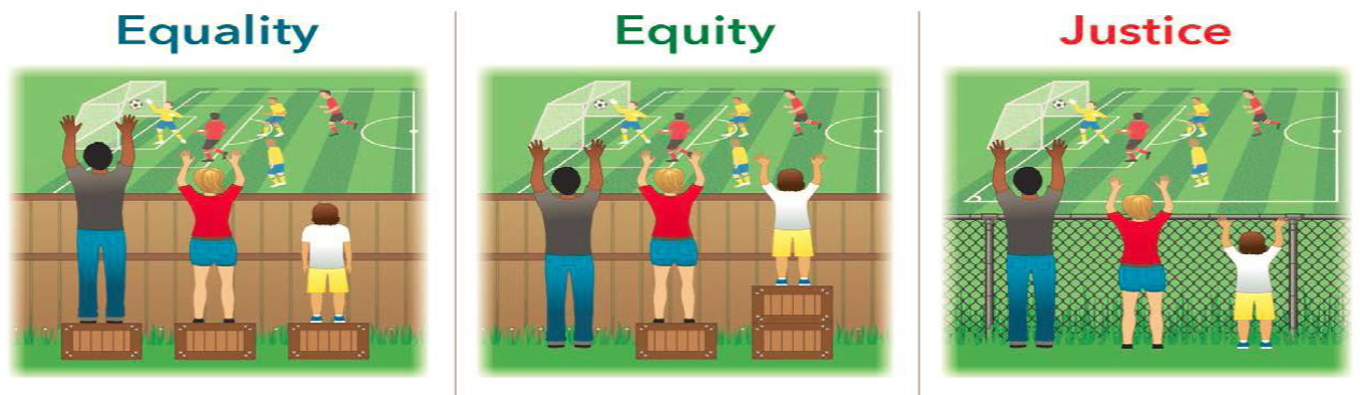

He used images of three kids watching a soccer match to illustrate the difference between:

- Equality, which assumes that everyone benefits from standing on boxes of the same height. This is the concept of equal treatment.

- Equity, which argues that each kid should get the box that they need to see over the fence. This is the concept of “affirmative action.”

- Justice, which enables all three kids to see the game without boxes because the cause of the inequity (the wooden fence) was addressed. This is the concept of removing the systemic barrier(s).

So, the users of search and recommender systems need to realize that removing bias involves more than just making engineers tune their algorithms. It also requires users to be aware of their own cultural and cognitive biases.

And this also means that search and recommender systems don’t need to be perfect; they just need to be better than humans who aren’t aware of their biases.

Biases Are Everywhere!

Then, Dr. Baeza-Yates shared some research that found bias in places that most of us wouldn’t expect. These findings would have made great headlines, if he’d been interested in generating clickbait.

But, a large part of the content of his presentation is available in his article, “Bias on the Web,” which was published in Communications of the ACM in June 2018.

And, in the context of his keynote presentation, these findings served as additional case studies that supported his analysis.

For example, one study by Baeza-Yates, Castillo & López, which was published in Cybermetrics in 2005, found economic bias in links. (Specifically, it found that countries with more economic ties to Spain had more links to websites in Spain.)

Another study, which was published on the Language Connect blog in 2012, found a language bias in web content. (Although about 27% of internet users speak English, 55.4% of the web content on the top 1 million websites is in English.)

And a third study by Baeza-Yates & Saez-Trumper, which was published in ACM Hypertext in 2015, found activity bias in user-generated content. (Forget the “wisdom of crowds.” Just 7% of Facebook users generated 50% of the posts in a small sample taken in 2008, 4% of Amazon users generated 50% of the movie reviews up to 2013, 2% of Twitter users generated 50% of the tweets in 2009, and only 0.04% of Wikipedia editors generated 50% of the English entries.)
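If you want to check for activity bias in your own user-generated content, the statistic quoted above is easy to compute. The following is a minimal sketch, not the method used in the study: the function name `share_of_users_for_half` and the sample numbers are invented for illustration.

```python
def share_of_users_for_half(posts_per_user, target=0.5):
    """Return the smallest fraction of users whose combined posts
    reach `target` (default 50%) of all content."""
    counts = sorted(posts_per_user, reverse=True)
    total = sum(counts)
    if total == 0:
        raise ValueError("no content to analyze")
    running = 0
    for n_users, count in enumerate(counts, start=1):
        running += count
        if running >= target * total:
            return n_users / len(counts)
    return 1.0  # only reached if target is greater than 1


# Made-up sample: 2 heavy posters and 18 occasional ones.
sample = [60, 40] + [5] * 18
print(share_of_users_for_half(sample))  # 0.1
```

In this invented sample, 10% of the users account for half of the posts. The smaller that fraction is for your own reviews or comments, the less your "crowd" resembles your actual customer base.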

How Does Bias in Search & Recommender Systems Impact You?

Now, some of this research is older than dirt. So, if you use site search and recommender systems, then how does bias impact you today?

Well, Dr. Baeza-Yates provided a couple of real-world examples.

First, he looked at the “popularity bias” in many site search and recommender systems.

What’s that?

Well, if you only recommend a few of your most popular items on your website, then you are likely to undercut the sales of new items that haven’t had time to become popular yet – which is the ecommerce equivalent of eating your seed corn.

Or, if you have a long-tail of other items for sale that are individually less popular, but collectively generate the majority of your revenue, then the self-fulfilling prophecy of “popularity bias” in your site search and recommender system will make you a much smaller company with far fewer items for sale.

Dr. Baeza-Yates said there were several partial solutions for “popularity bias” – particularly in systems that use personalization. This includes replacing one or more of the popular items that you’re presenting today with other items that improve the diversity, novelty, and serendipity of what gets presented.

But, whatever you do, you want to avoid the echo chamber by empowering the long tail. And you want to avoid the “rich get richer and poor get poorer” syndrome.
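One simple way to picture the "replace a popular item" idea is to reserve the last slot or two of a recommendation list for long-tail items. The sketch below is an assumption-laden illustration, not the approach Dr. Baeza-Yates presented: `recommend_with_exploration`, its parameters, and the product data are all hypothetical, and a real system would choose the long-tail items more carefully than at random.

```python
import random


def recommend_with_exploration(popularity, k=5, explore_slots=1, seed=None):
    """Return k item ids: the most popular ones, with the last
    `explore_slots` positions given to randomly chosen less popular items."""
    rng = random.Random(seed)
    ranked = sorted(popularity, key=popularity.get, reverse=True)
    head = ranked[: k - explore_slots]
    tail = ranked[k - explore_slots:]
    # Fill the reserved slots by sampling from everything below the head.
    explore = rng.sample(tail, min(explore_slots, len(tail)))
    return head + explore


# Hypothetical sales counts per product.
popularity = {"A": 900, "B": 700, "C": 500, "D": 40, "E": 12, "F": 3}
print(recommend_with_exploration(popularity, k=4, explore_slots=1, seed=42))
```

The first three slots still go to the bestsellers, while the fourth rotates among the products that popularity alone would have kept hidden.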

Next, he looked at the cascade of biases on web interaction, particularly ecommerce. Among the data and algorithmic biases were:

- Presentation bias (i.e., which items get exposure).

- Position bias (which items are presented in the upper left corner of the page).

- Social bias (which items include four- or five-star reviews).

- And other interaction biases (i.e., which items are only seen by scrolling).

But wait, there’s more! There are also self-selection biases, including:

- Ranking bias (users assume that higher-ranked items are better choices).

- Click bias (clicks on an item are considered positive user feedback).

- Mouse movement bias (hovering over an item is considered positive user feedback).
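Position bias and click bias interact: an item in a prominent spot collects more clicks simply because more people look at it. One commonly used correction, which is not necessarily the one covered in the keynote, is inverse propensity weighting, where each click is divided by the probability that its position was examined at all. The sketch below assumes you already have such probabilities; the function name `debiased_click_scores` and every number in it are hypothetical.

```python
def debiased_click_scores(impressions, examine_prob):
    """impressions: list of (item, position, clicked) tuples.
    examine_prob: dict mapping position -> assumed probability that a
    user actually looks at that position.
    Returns a dict mapping item -> propensity-weighted click rate."""
    weighted_clicks = {}
    shown = {}
    for item, position, clicked in impressions:
        shown[item] = shown.get(item, 0) + 1
        if clicked:
            # A click in a rarely examined position counts for more.
            weight = 1.0 / examine_prob[position]
            weighted_clicks[item] = weighted_clicks.get(item, 0.0) + weight
    return {item: weighted_clicks.get(item, 0.0) / n for item, n in shown.items()}


# Hypothetical log: "top" sits in position 1, "lower" in position 2.
log = [
    ("top", 1, True), ("top", 1, True), ("top", 1, False), ("top", 1, False),
    ("lower", 2, True), ("lower", 2, False), ("lower", 2, False), ("lower", 2, False),
]
examine_prob = {1: 1.0, 2: 0.5}  # assumed, not measured
print(debiased_click_scores(log, examine_prob))
```

Judged by raw click-through rate, "top" looks twice as good as "lower" (50% versus 25%). Once the assumed examination probabilities are factored in, the two items score the same, which suggests that position, not quality, explained the gap.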

Now, Dr. Baeza-Yates used Amazon as an example in his keynote presentation, but this cascade of biases occurs on other sites.

Meanwhile, Amazon now offers a number of self-service advertising solutions, including Sponsored Products, Sponsored Brands, Sponsored Display (which is in beta), Stores, Display Ads, Video Ads, Custom Ads, and Amazon DSP solutions.

So, considering all of the biases in their search and recommender system, should you advertise on Amazon?

Well, even though Dr. Baeza-Yates didn’t tackle this topic, you should probably test and measure a variety of keywords and targeting options if you do start advertising on Amazon to ensure that you are reaching the right person with the right message at the right time as he or she navigates a veritable corn maze of biases.

Key Takeaways

Dr. Baeza-Yates ended his keynote presentation with two key takeaways.

The first set was for the designers of search and recommender systems. It covered:

- Data

  - Analyze for known and unknown biases, debias, or mitigate when possible/needed.

  - Recollect more data for difficult/sparse regions of the problem.

  - Delete attributes associated directly/indirectly with harmful bias.

- Interaction

  - Make sure that the user is aware of the biases all the time.

  - Give more control to the user.

- Design and Implementation

  - Let experts/colleagues/users contest every step of the process.

- Evaluation

  - Do not fool yourself!

And for everyone in the audience at TechSEO Boost, he shared these messages:

- Systems are a mirror of us – the good, the bad, and the ugly.

- The Web amplifies everything, but always leaves traces.

- We need to be aware of our own biases.

- We must be aware of the biases and counteract them to stop the vicious bias cycle.

- There are plenty of open (research) problems!

Dr. Baeza-Yates then quipped, “Any biased questions?”

Image Credits

In-Post Image #1: Taken by author, December 2019

In-Post Images #2-3: Dr. Ricardo Baeza-Yates