A year ago, I didn’t know there was a Whole Page Algorithm.

The concept had never occurred to me.

Turns out that search engines like Bing have whole page algorithms that filter the candidate sets and decide the content and layout of the SERP before displaying it to the user.

For me, that is the single most stunning insight since Gary Illyes told me about how ranking rich elements (a.k.a., SERP features) works.

If you don’t know what I mean by “candidate set”, that article is a must-read since that concept is a must-know for appreciating the rest of this article. 🙂

And, as with a lot of other insights I heard in this series, once I had heard it, it was logical and now seems obvious.

Darwinism in Search Is a Good Way to Look at How a Modern, Rich SERP Is Built

Nathan Chalmers is the Program Manager for Bing’s Search Relevance Team. (And he thinks my theory about Darwinism in search is “really cool.”)

Chalmers confirmed I had got the overall ideas and concepts right, but that the process is less mechanical than I was suggesting and that I was missing some important details.

Details he proceeded to explain to me.

Candidate Sets Are Bidding for a Place on the SERP

There are multiple teams who manage the candidate sets that bid for a place on the Bing SERP.

Candidate sets simply mean SERP features (or rich elements as I will call them) that are bidding for a place on the final SERP.

Those candidate sets / rich elements (video boxes, image boxes, recipes, knowledge cards, people also ask, etc.) get a place on the SERP if they can bring more value to the user than the incumbent blue links.

Up to there, all very Darwinian.

But then the Whole Page Algorithm sits on top, acting as a kind of “last-step referee” to filter out, define SERP layout, and maintain balance.

In short, it molds the SERP to best serve the user on a query-by-query basis.

It is building the product.

What do I mean?

If you bear in mind that each and every search engine results page (SERP) is a product that Bing and Google are selling to their users (albeit free to the user, paid for directly or indirectly by advertising), then the question the Whole Page Algorithm answers can be summarized as:

“What product does Bing want to provide to its customers?”

The Whole Page algorithm’s role is to produce the best product possible from the available parts proposed by the candidate sets.

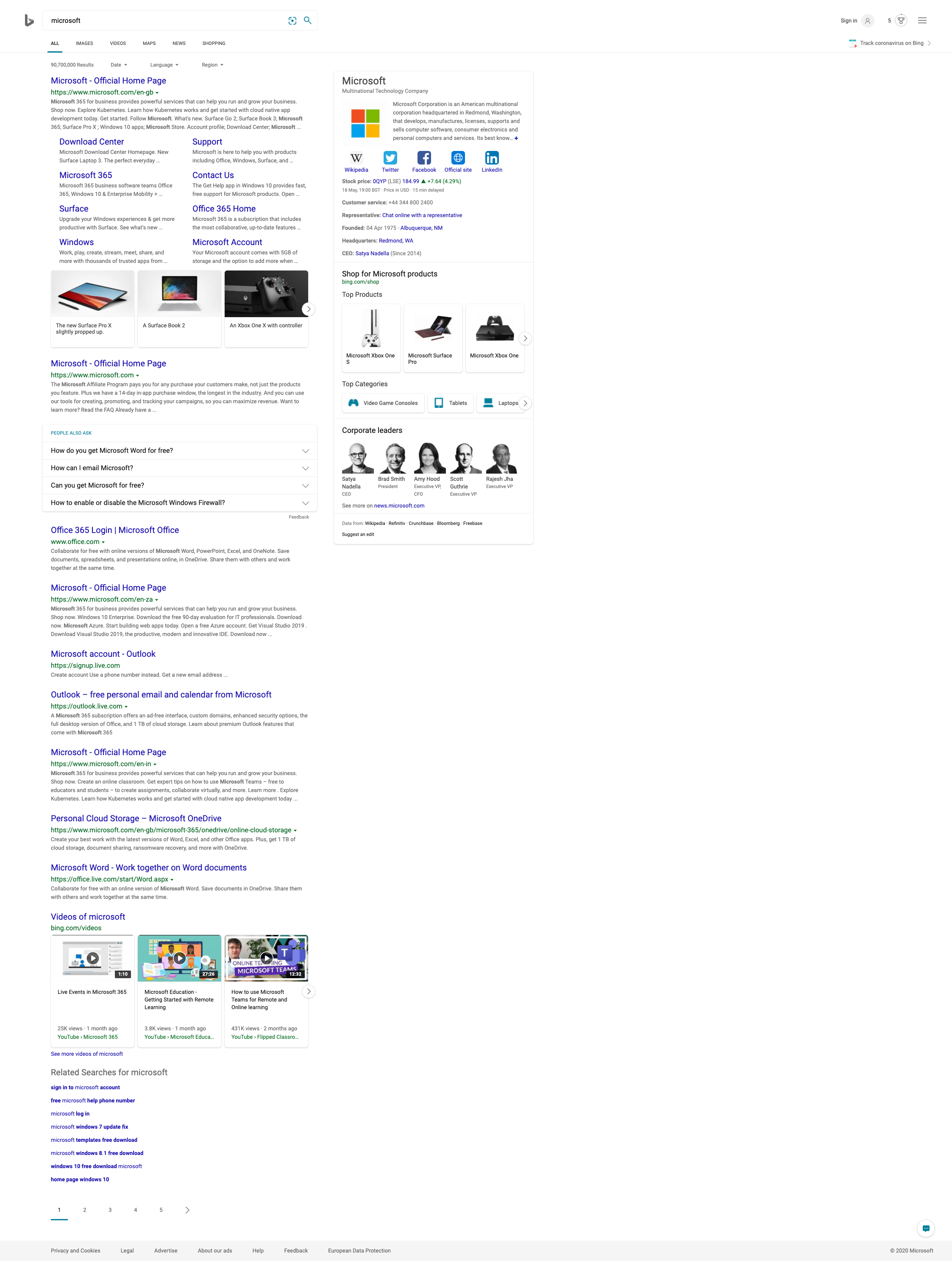

This is a nice, rich, and well-balanced SERP that serves the user very well. As luck would have it, it is a Brand SERP – my pet topic at Kalicube.pro

Let’s Break It Down

Blue Links Always Have a Place

The blue links are the foundation upon which everything else is built and the standard to which every candidate / rich element is compared.

For any other rich element to take the place of a blue link, it has to provide the user with a “better” experience than the blue link.

Chalmers phrases it this way: “get the user to satisfaction more efficiently.”

Importantly, blue links always have a place.

Perhaps as few as 3, perhaps as many as 10 or 12, but they are always there, and will be for the foreseeable future since they are the foundation.

A Winning Bid From a Candidate Set Can Be Ignored

Once all the candidate sets had submitted their bids, I had assumed that any candidate / rich element that put in a winning bid (i.e., proved that it provides a better experience for the searcher than a blue link) got its place. But not necessarily.

Chalmers reveals that the whole page algo makes the final decision on whether a rich element gets a place on the SERP.

Its algorithms (there are multiple Whole Page teams and algorithms) aim to sculpt the ideal user experience from these bids.

When it receives winning bids from rich elements, the algorithm can choose to cull them. So even a winning bid can be rejected.

Several reasons spring to mind for culling a rich element that has darwinistically “won” a place on the SERP:

- They would not truly satisfy the user’s intent.

- Another rich element does the job better (perhaps a Q&A / featured snippet would be culled in favor of a knowledge panel).

- The SERP is getting too crowded.

- The SERP is getting too long.

- It would damage the profitability of the SERP.
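To make the culling step concrete, here is a minimal sketch in Python. It is my own illustration, not Bing’s implementation: the `Bid` fields, the thresholds, and the `cull` function are all hypothetical. It covers four of the reasons above and leaves out profitability, since nothing in the conversation says how that is measured.

```python
from dataclasses import dataclass


@dataclass
class Bid:
    """A winning bid from a candidate set (every field is hypothetical)."""
    element: str               # e.g. "video_box", "knowledge_panel"
    intent_match: float        # 0..1, fit with the understood intent
    value: float               # predicted value to the user
    height: int                # vertical space the element would take
    superseded_by: tuple = ()  # elements assumed to do the same job better


def cull(bids, max_elements=4, max_page_height=2000, min_intent_match=0.5):
    """Return (kept, culled); culled pairs each element with a reason."""
    kept, culled = [], []
    # Only elements that fit the intent can supersede another element.
    present = {b.element for b in bids if b.intent_match >= min_intent_match}
    used_height = 0
    # Look at the most valuable bids first, so weaker ones are culled first.
    for bid in sorted(bids, key=lambda b: b.value, reverse=True):
        if bid.intent_match < min_intent_match:
            culled.append((bid.element, "does not satisfy intent"))
        elif any(better in present for better in bid.superseded_by):
            culled.append((bid.element, "another element does the job better"))
        elif len(kept) >= max_elements:
            culled.append((bid.element, "SERP too crowded"))
        elif used_height + bid.height > max_page_height:
            culled.append((bid.element, "SERP too long"))
        else:
            kept.append(bid.element)
            used_height += bid.height
    return kept, culled
```

The point of the sketch is simply that a bid can clear the bar against the blue links and still be rejected once the page is considered as a whole.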

My idea that pure Darwinism would work its magic and create the perfect page turns out to be naive.

“Darwinian culling” is part of the deal.

Positions for Rich Elements on the SERP

One question Illyes didn’t address was “how do you decide where each rich element ranks / is positioned on the SERP at Google?” (I didn’t ask him, so that is not a criticism. :))

Chalmers provides the answer (for Bing, at least). They have an algorithm named “Darwin” that decides on the placement of elements on that SERP.

Once a rich element (e.g., a video box or an image box) gets its place on the SERP, the position in which it is shown depends on its probable usefulness to the user.

That is based on metrics for user satisfaction given the understood intent.

Something like “Weather in Seattle” is unambiguous and the weather box goes right at the top. That’s a no-brainer.

But “Seattle” is ambiguous and could bring up weather, images, videos… and that, according to Chalmers, is where the whole page team proves its worth.

By segmenting historical data it benchmarks each candidate against the blue links and positions the rich element according to the probability it will outperform the blue link in a head-to-head.

If it wins in this predictive head-to-head battle, it takes that place, pushing the blue link down.

And the fundamental question the algo is trying to answer?

According to Chalmers, “is a user going to be successful when they engage with this result… If the answer’s no, then we don’t show it on the page or we rank it lower.”
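The head-to-head idea can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the scores stand in for whatever predicted-satisfaction estimate Bing derives from historical data, and `place_rich_elements` and `min_score` are hypothetical names.

```python
def place_rich_elements(blue_links, rich_elements, min_score=0.0):
    """Merge rich elements into the ranked blue links.

    blue_links: list of (name, score), already ranked best-first.
    rich_elements: list of (name, score), in any order.
    Scores are hypothetical predicted-satisfaction estimates.
    """
    page = list(blue_links)
    # Place the strongest rich elements first.
    for name, score in sorted(rich_elements, key=lambda e: e[1], reverse=True):
        if score <= min_score:
            continue  # not expected to help the user, so it is not shown
        # Take the first slot whose incumbent this element outperforms,
        # pushing that incumbent (and everything below it) down.
        for i, (_, incumbent_score) in enumerate(page):
            if score > incumbent_score:
                page.insert(i, (name, score))
                break
        # An element that beats no incumbent is left off the page here.
    return [name for name, _ in page]


blue = [("blue1", 0.6), ("blue2", 0.4), ("blue3", 0.2)]
rich = [("weather_box", 0.9), ("video_box", 0.3), ("image_box", 0.1)]
print(place_rich_elements(blue, rich))
# ['weather_box', 'blue1', 'blue2', 'video_box', 'blue3']
```

In this toy example the weather box beats the top blue link and goes first, the video box only beats the third blue link, and the image box beats nothing and is dropped. Leaving it off the page is one of the two outcomes Chalmers mentions; ranking it lower would be the other.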

Ads Are a Special Case

In an ideal (Peter-Pan-esque) world, ads would be “just another candidate set bidding for a place.”

They would submit a bid, just like the others. And they would be victims of Darwinian culling based on merit, just like the others.

Chalmers says this is true up to a point.

They are present only when they demonstrate to the Whole Page Algorithm that they help get that user to satisfaction more efficiently than the organic blue links.

But he also suggests that they get special treatment.

My guess is that ads are given a lower bar to hit in the bidding than the other candidate sets and that they have a lower likelihood of falling victim to the culling.

That said, it’s vital that the Whole Page Algorithm maintains the right balance. If Bing allows ads to dominate and damage the user experience, that ruins the product and puts the survival of the search engine in danger.

Page Length – Ambiguity & Ads

Some SERPs are very long and some are very short.

That is mostly down to ambiguity: more ambiguous queries make for longer pages, and vice versa.

As Frédéric Dubut explains in the first part of the series:

“For unambiguous intent, SERPs have tended to become richer and shorter. The total number of results tends to drop as the number of rich elements rises. Since the intent is clear – shorter, richer, more focused results do the job of satisfying their user best.

For ambiguous intent, SERPs have tended to become richer and longer – rich elements tend to add to the page of results and remove little. Since the intent is unclear – more results with a range of intents will do the job of satisfying their user best.”

But Chalmers indicates that the presence of ads can play a role as well.

A SERP may be artificially shortened by the Whole Page algo in order to increase clicks on the ads and drive revenue.

What Are the Metrics for the Whole Page Algorithms?

Something that comes back in every conversation in this series is that the entire process – from crawling to indexing to ranking to building the whole page – is (almost) end-to-end machine learning.

Frédéric Dubut suggested right at the start that the key to all these algorithms is metrics, and not ranking factors.

And here we have an algorithm that is very clearly driven by metrics.

Online

On-SERP user behavior is a very, very important metric for the Whole Page Algorithm.

The success or failure of any combination of blue links and rich elements is measured by how the user interacts with it.

So, click-through rate doesn’t affect rankings in the ‘traditional’ sense that we have tended to understand it.

On-SERP behavior doesn’t affect the blue link rankings, nor the bids submitted by the candidate sets aiming to replace them.

But it is a very big part of how that whole page is organized.

User behavior on the SERP is fed back into the algorithm (Darwin), which gives the machine the corrective or reinforcement signals it needs to improve its performance.

Its goal is, as Chalmers says, getting the user to satisfaction as efficiently as possible.
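Chalmers did not describe how Darwin learns from this feedback, so the following is only a guess at the general shape of such a loop: a predicted-satisfaction estimate that is nudged up when a user is satisfied and down when they are not. The `update_estimate` function and the `learning_rate` value are hypothetical.

```python
def update_estimate(estimate, satisfied, learning_rate=0.1):
    """Move a predicted-satisfaction estimate toward the observed outcome.

    satisfied=True acts as a reinforcement signal, False as a corrective one.
    """
    observed = 1.0 if satisfied else 0.0
    return estimate + learning_rate * (observed - estimate)


# Hypothetical estimate for showing a video box on an ambiguous query.
estimate = 0.5
for satisfied in [True, True, False, True]:
    estimate = update_estimate(estimate, satisfied)
print(round(estimate, 3))
```

An estimate like this is the kind of number a whole page algorithm could then use when deciding whether an element is shown and where.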

Offline

As with all the other algorithms, the Whole Page team has human judges (the equivalent of Google quality raters) who give feedback about the quality and relevancy of a SERP – in terms of the relevancy of the content, its quality and its position on the page.

That is fed back into the algorithm, along with the on-SERP signals.

The Whole Page quality guidelines seek to answer this question: “How good does this page look as a solution to the problem the user expressed?”

The fact that each team at Bing has its own team of human judges, each with a different set of guidelines that are specific to the needs of the algorithm, strongly suggests that there are other quality rater guidelines at Google that we have not seen.

Bing certainly has them. Google probably has them, too – specific quality guidelines for different parts of the overall search product, including blue links, rich elements, and (this is the one I love) the Whole Page.

The Role of the Whole Page Algorithm in a Nutshell

My summary of this conversation was:

The aim is to take in the bids from the different candidate sets, filter according to whether that result type is appropriate to the intent of the user, then evaluate the probable performance against your benchmark (the blue link that currently occupies each position).

Chalmers confirms with “In a nutshell. Exactly.”

Watch the video this article is based on: How the Whole Page Algorithm Works at Bing.

Conclusion: Darwinism in Search Works in Three Simple Steps

- Darwinian survival of the fittest based on value the content brings to the user for their query.

- Darwinian culling based on best-fit-for-intent in the context of the SERP.

- Darwinian shaping of the anatomy of the SERP based on user satisfaction.

It seems to me that only the first of those is how we have traditionally approached SEO – blue links competing for a place at the top by proving to the algorithm that they are better than their peers.

This series, from Illyes in May 2019 to Chalmers in May 2020, has expanded my view: I now understand not only how rich elements fit into that initial survival-of-the-fittest step but, just as importantly, how the anatomy of the final SERP is defined.

Darwinism turns out to be a very, very good analogy.

Everything on the SERP will live, fade, and die by its performance.

Ultimately, for our content (and the rich elements themselves), there is no hiding from a bad performance.

Failure to get the user to satisfaction efficiently for a given query intent will surface sooner or later, and extinction “naturally” follows.

Darwinism in search

Thank You for Reading This Series

I am absolutely stunned and happy to have been able to produce this series.

I learned more than I could possibly have hoped about how search works, and how the different elements all fit together.

Everything I learned has given me a better understanding as to what I am trying to achieve when optimizing for Bing and Google, and in turn that has made my approach to my job as a consultant more intelligent.

I hope it has done the same for you.

Read the Previous Articles in the Bing Series

- How Ranking Works at Bing – Frédéric Dubut, Senior Program Manager Lead, Bing

- Discovering, Crawling, Extracting and Indexing at Bing – Fabrice Canel, Principal Program Manager, Bing

- How the Q&A / Featured Snippet Algorithm Works – Ali Alvi, Principal Lead Program Manager AI Products, Bing

- How the Image and Video Algorithm Works – Meenaz Merchant, Principal Program Manager Lead, AI and Research, Bing

Image Credits

Featured & In-Post Images: Véronique Barnard, Kalicube.pro
