The recent ban on ChatGPT in Italy is just one of many potential legal challenges OpenAI faces.

As the European Union works towards passing an Artificial Intelligence Act, the United States defines an AI Bill of Rights, and the United Kingdom recommends existing agencies regulate AI, users of ChatGPT have filed complaints globally against OpenAI for potential safety issues.

OpenAI And Global Safety Concerns

The Center for AI and Digital Policy filed a complaint with the Federal Trade Commission to stop OpenAI from developing new models of ChatGPT until safety guardrails are in place.

The Italian Garante launched an investigation into OpenAI over a recent data breach and the lack of age verification during registration to protect younger users from inappropriate generative AI content.

The Irish Data Protection Commission plans to coordinate with the Italian Garante and EU data protection commission to determine if ChatGPT has violated privacy laws.

According to Reuters, privacy regulators in Sweden and Spain have no current plans to investigate ChatGPT but could do so in the future if users file complaints against AI technology.

And those are only some of the recent complaints, investigations, and commentary from various countries about the accountability of AI companies.

Meanwhile, in Germany, Ulrich Kelber, the country’s Federal Commissioner for Data Protection, said a ban similar to those in other nations could follow if OpenAI violates GDPR or similar policies.

However, Volker Wissing, Minister of Transport and Digital Infrastructure of Germany, has indicated a ban isn’t the best approach.

“We don’t need a ban on AI applications, but ways to ensure values such as democracy and transparency,” says Wissing.

Canada, too, appears to be following suit after its Office of the Privacy Commissioner launched an investigation into a complaint against ChatGPT collecting personal data without consent.

And in France, Jean-Noël Barrot, Minister for Digital Transition and Telecommunications, commented on the wave of AI excitement, followed by AI fear. The country’s plan, it seems, is to master AI technology and develop models and technologies that uphold French values.

Will Countries Permanently Ban ChatGPT?

OpenAI recently published an FAQ for Italian users and reaffirmed its commitment to creating systems that are safe, accurate, and private.

But what are the chances that investigations against OpenAI will result in a permanent ban of its technology from Italy and other countries?

Considering that a judge in Colombia recently used ChatGPT to assist in a court ruling and another in India used it to decide on bail, OpenAI does have some odds in its favor.

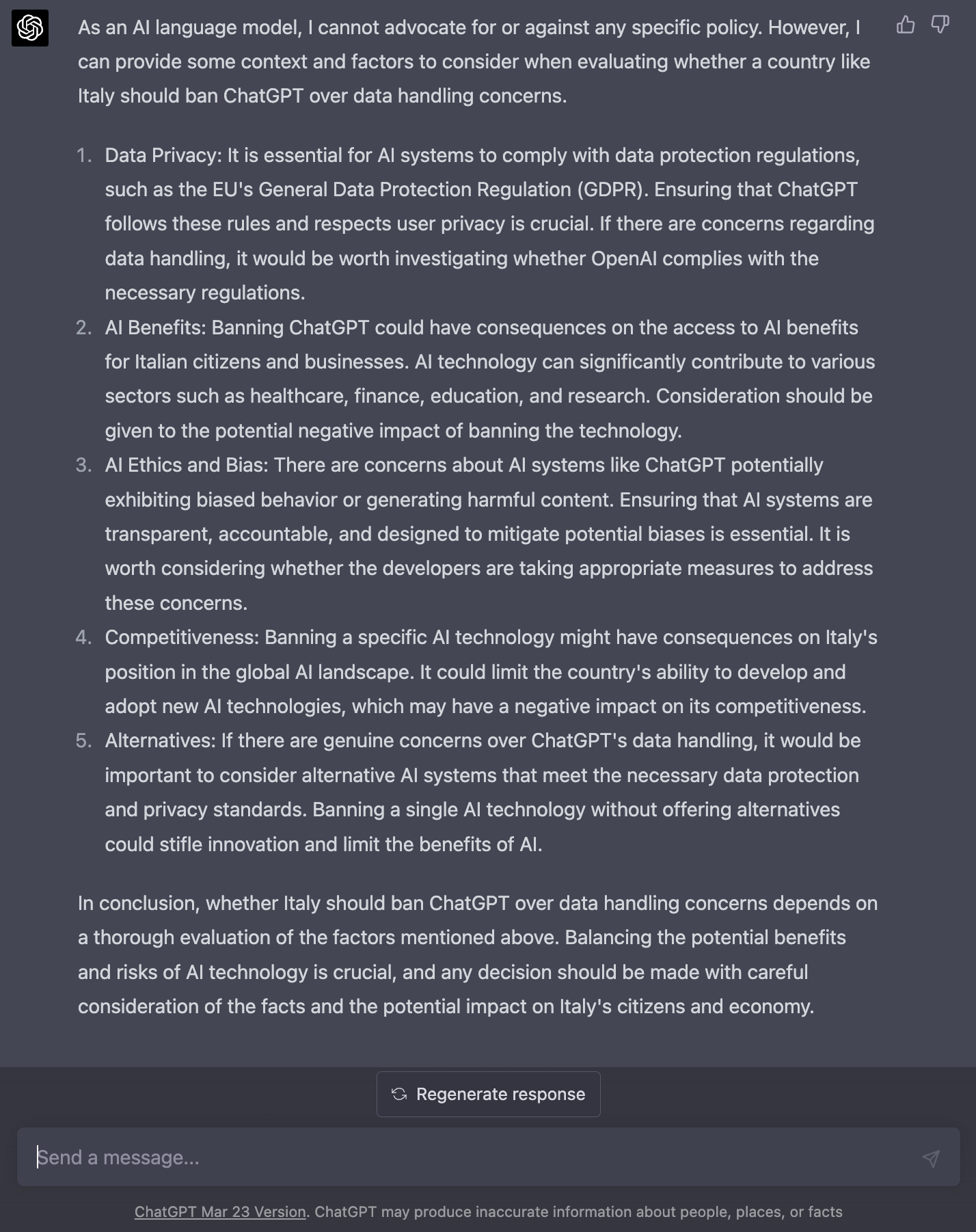

ChatGPT+ with model GPT-4 offers surprisingly balanced points about the impact of its technology and the risks it poses.

In response to the prompt, “Should a country like Italy ban ChatGPT over data handling concerns?”, ChatGPT+ replies:

“Data Privacy: It is essential for AI systems to comply with data protection regulations, such as the EU’s General Data Protection Regulation (GDPR). Ensuring that ChatGPT follows these rules and respects user privacy is crucial. If there are concerns regarding data handling, it would be worth investigating whether OpenAI complies with the necessary regulations.”

The response continues with an overview of AI benefits, ethics and bias, competitiveness, and alternatives.

Screenshot from ChatGPT, April 2023

Of course, if a country blocks access to ChatGPT, some users can still reach it through a virtual private network (VPN) service. Google Trends shows that Italy had a spike in searches for VPNs at the beginning of April, coinciding with the ChatGPT ban.

Can Lawsuits Against OpenAI Technology Affect Users?

Are users of OpenAI legally responsible for issues arising from the use and output of its tools? It depends, according to OpenAI’s Terms of Use.

If users break any OpenAI terms or policies while using tools like ChatGPT or the API, they could be responsible for defending themselves.

OpenAI does not guarantee its services will always work as expected or that certain content (input and output of a generative AI tool) is safe – noting that it will not be held responsible for such outcomes.

The most OpenAI offers to compensate users for damages caused by its tools is the amount the user paid for services within the past year or $100, whichever is greater, assuming no other regional laws apply.

More Lawsuits In AI

The cases above are only the tip of the AI legal iceberg. OpenAI and its peers face additional legal issues, including the following.

- A mayor in Australia may sue OpenAI for defamation over inaccurate information about him provided by ChatGPT.

- GitHub Copilot faces a class action lawsuit over the legal rights of the creators of the open-source code in Copilot’s training data.

- Stability AI, DeviantArt, and Midjourney face a class action lawsuit over the use of Stable Diffusion, which used copyrighted art in its training data.

- Getty Images filed legal proceedings against Stability AI for using Getty Images’ copyrighted content in training data.

Each case has the potential to shake up the future of AI development.

Featured image: Giulio Benzin/Shutterstock
