How does a typical ecommerce business measure online campaign performance?

It’s simple.

They track users who click their ads and measure how many of them make a purchase within a certain period of time, called the conversion window.
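As a rough sketch of that measurement, the snippet below counts users who purchased within the conversion window after clicking an ad. The data format, the function name `count_post_click_conversions`, and the 30-day default are assumptions made for illustration, not the behavior of any particular analytics tool.

```python
from datetime import datetime, timedelta

def count_post_click_conversions(clicks, purchases, window_days=30):
    """Count users whose purchase falls within the window after an ad click.

    clicks and purchases are lists of (user_id, datetime) tuples
    (a hypothetical format chosen for this example).
    """
    window = timedelta(days=window_days)
    converted = set()
    for user_id, purchased_at in purchases:
        for click_user, clicked_at in clicks:
            delay = purchased_at - clicked_at
            if click_user == user_id and timedelta(0) <= delay <= window:
                converted.add(user_id)
                break
    return len(converted)

clicks = [
    ("u1", datetime(2019, 3, 1)),
    ("u2", datetime(2019, 3, 1)),
    ("u3", datetime(2019, 3, 2)),
]
purchases = [
    ("u1", datetime(2019, 3, 10)),  # 9 days after the click: counted
    ("u2", datetime(2019, 4, 15)),  # 45 days after the click: outside a 30-day window
    ("u4", datetime(2019, 3, 5)),   # never clicked an ad: not counted
]

post_click = count_post_click_conversions(clicks, purchases, window_days=30)
print(post_click)
```

Note that widening the window changes the result, which is one reason the reported number depends on a setting rather than on the ad alone.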

It works particularly well for SEM campaigns.

We believe that the correlation between click and conversion is hard proof of effectiveness and justifies virtually any budget spent on search engine ads.

Post-Click & Post-View

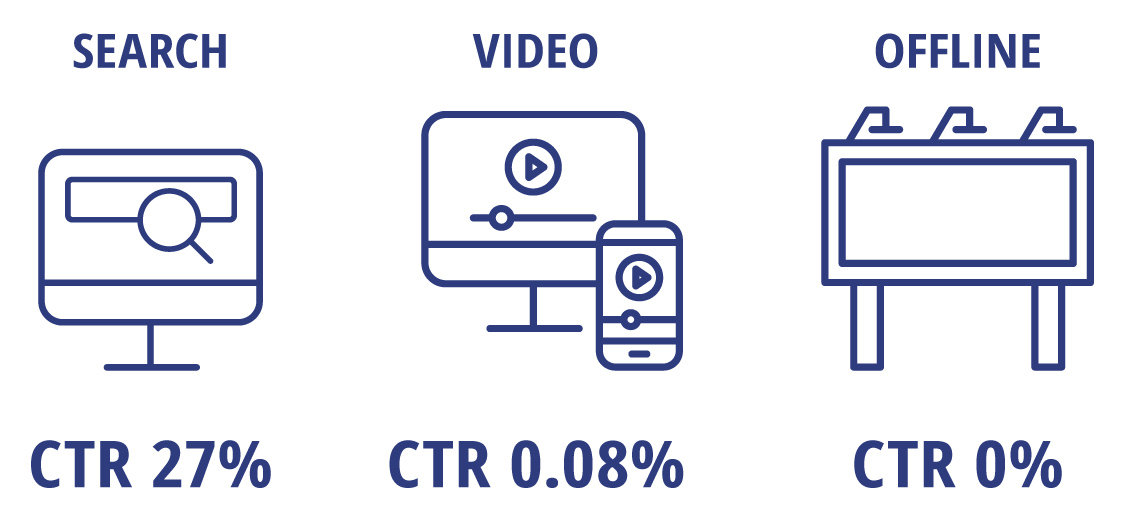

The post-click performance of YouTube ads is usually low. Users simply don’t click this type of ad.

On the other hand, nobody clicks radio ads, TV ads, billboards on the roads, or press advertisements – but we know they work.

Indeed, in the case of display and video ads, we observe a high number of post-view conversions, but somehow we don’t trust them as much as we trust the post-click data.

Yes, we know there was an impression of a display ad. We even know it was in the viewable area.

But how do we know that the ad was actually seen? How do we know that it attracted interest?

If there is any other interaction on the path, we attribute the conversion to clicks rather than to the fact that the user potentially saw our ad.

Attribution Modeling

We already know about attribution modeling.

We are aware that there may be many interactions on the conversion path.

There are also a number of tools on the market to track impressions and viewability.

We can create custom attribution models where we can treat clicks, views, and impressions differently.

The problem with these models is that they require arbitrary decisions regarding the importance of traffic sources on the conversion path and weights assigned to particular interactions (clicks, impressions, or video views).
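To make the arbitrariness concrete, here is a minimal sketch of a weighted attribution model. The function `attribute`, the path, and both sets of weights are invented for illustration; they are not taken from any real tool.

```python
def attribute(path, weights):
    """Split one conversion across touchpoints in proportion to chosen weights.

    path is a list of (channel, interaction_type) tuples; weights maps an
    interaction type to its assumed importance.
    """
    total = sum(weights[interaction] for _, interaction in path)
    credit = {}
    for channel, interaction in path:
        credit[channel] = credit.get(channel, 0.0) + weights[interaction] / total
    return credit

# One hypothetical conversion path.
path = [("display", "impression"), ("youtube", "view"), ("search", "click")]

# Two equally defensible (and equally arbitrary) weightings.
model_a = attribute(path, {"click": 1.0, "view": 0.5, "impression": 0.1})
model_b = attribute(path, {"click": 1.0, "view": 1.0, "impression": 1.0})

print(model_a)
print(model_b)
```

The same path gives search 62.5 percent of the credit under the first weighting and one third under the second. Nothing in the data tells us which weighting is right.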

There were high hopes for algorithmic models such as the data-driven model or Markov chain analysis.

The biggest problem with these algorithms is that they interpret the correlation of interactions as causation, which can sometimes lead to wrong conclusions.

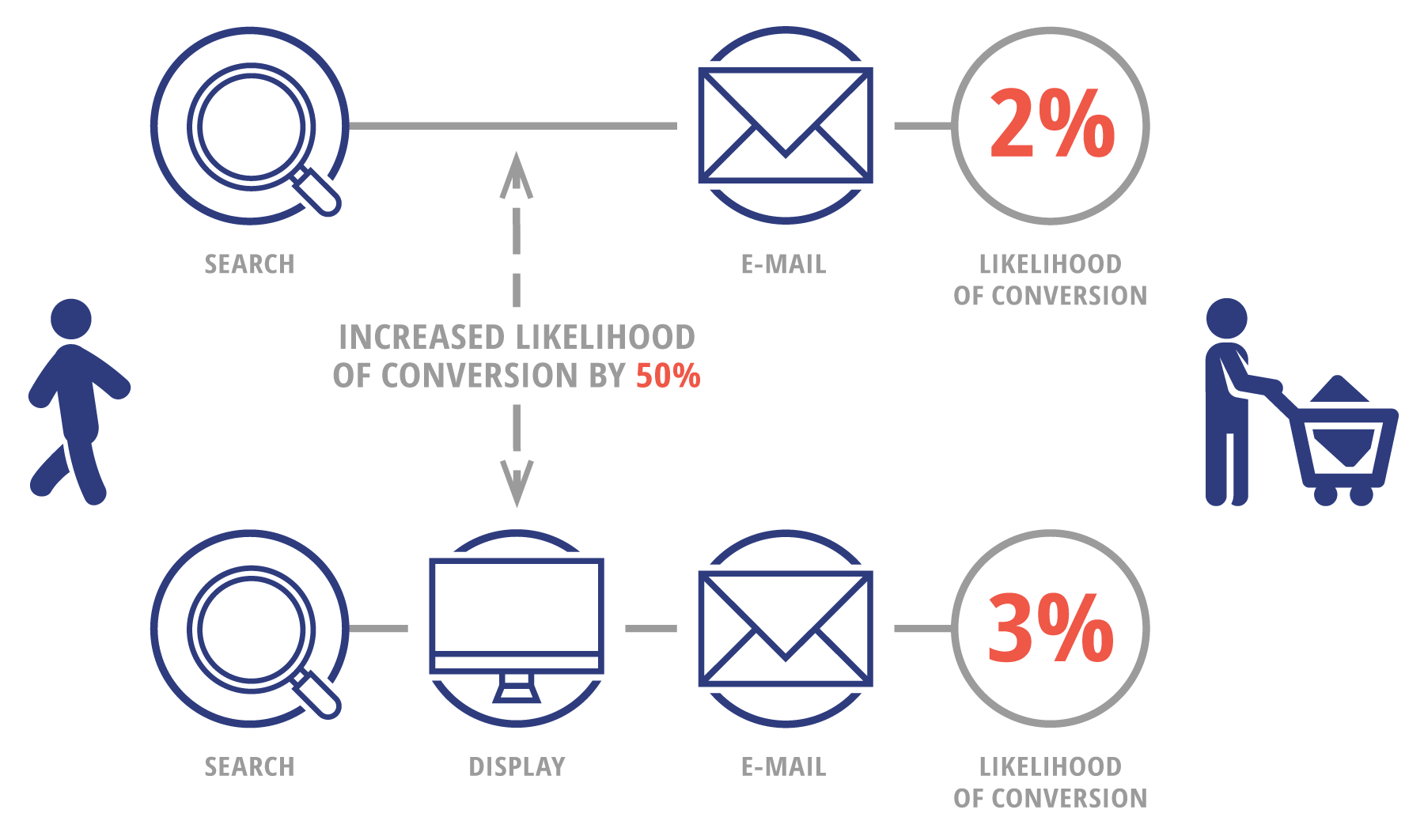

Let’s have a look at Google’s data-driven attribution methodology. The algorithm compares paths to conversion.

For example, say that the combination of search and email touchpoints leads to a 2 percent probability of conversion.

If there is also display on the path, the probability increases to 3 percent. The observed 50 percent increase serves as the basis for attribution of the display channel.

Illustration based on Google’s Analytics Help article, Data-Driven Attribution Methodology.

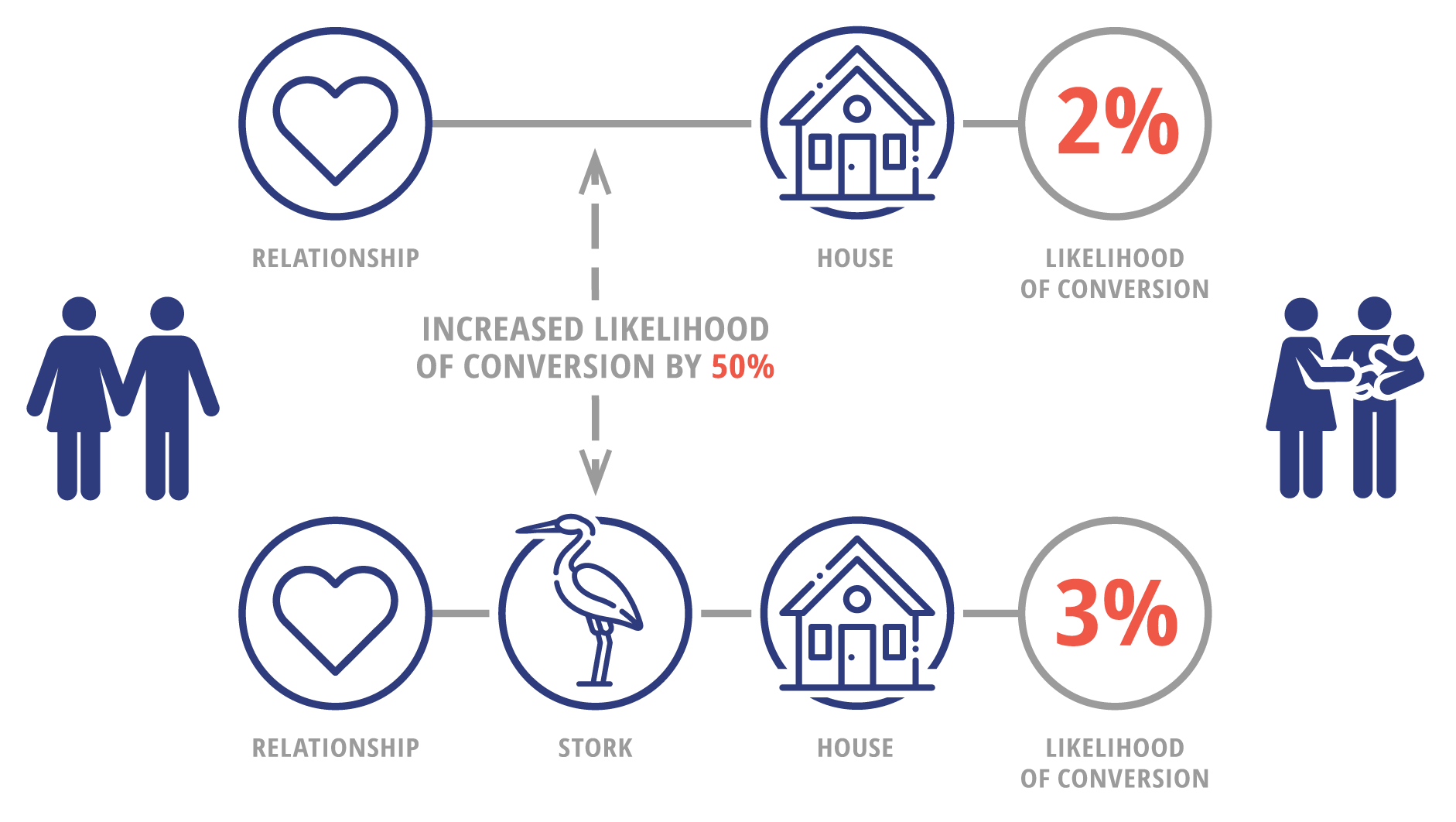

Let’s look at another example.

We have observed that people who enter a relationship and find a house have a 2 percent probability of having a baby within two years. However, if there are storks in the neighborhood, the probability increases to 3 percent.

We conclude that storks increase the chance of pregnancy by 50 percent. This would mean that these birds can be credited with at least some of the childbirths.

Naturally, we do not believe that storks bring babies or have any impact on fertility.

The reason for this observation is different. For many other reasons, the birth rate is higher in rural areas, and storks rarely nest in cities.
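The stork effect can be reproduced with a few made-up numbers. In the sketch below, all counts are invented so that the overall rates match the 3 percent and 2 percent from the example, while the birth rate inside each area is identical with and without storks. The names `observations` and `rate_where` are hypothetical.

```python
# (area, storks_nearby) -> (couples, babies); invented counts for illustration
observations = {
    ("rural", True): (9000, 288),
    ("urban", True): (1000, 12),
    ("rural", False): (4000, 128),
    ("urban", False): (6000, 72),
}

def rate_where(area=None, storks=None):
    """Birth rate over all rows matching the given area and/or stork flag."""
    rows = [
        counts
        for (row_area, row_storks), counts in observations.items()
        if (area is None or row_area == area)
        and (storks is None or row_storks == storks)
    ]
    couples = sum(c for c, _ in rows)
    babies = sum(b for _, b in rows)
    return babies / couples

# Comparing "storks" with "no storks" while ignoring the area.
naive_lift = rate_where(storks=True) / rate_where(storks=False) - 1

# The same comparison inside each area separately.
rural_lift = rate_where("rural", True) / rate_where("rural", False) - 1
urban_lift = rate_where("urban", True) / rate_where("urban", False) - 1

print(f"naive lift: {naive_lift:.0%}")
print(f"rural lift: {rural_lift:.0%}, urban lift: {urban_lift:.0%}")
```

The naive comparison reports a 50 percent lift, yet within rural and within urban areas the lift is zero. The whole effect comes from where storks live, not from what they do.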

Of course, data-driven attribution algorithms are more complex than the examples above, but they still often misinterpret signals, especially if there are both inbound and outbound marketing touchpoints on the path (for example, clicks on branded search terms, remarketing ads, and regular prospecting campaigns).

Conversion Lift

In recent years, Facebook and Google have made conversion lift tests available. This feature makes it possible to conduct a controlled experiment and to measure the incremental value of marketing campaigns.

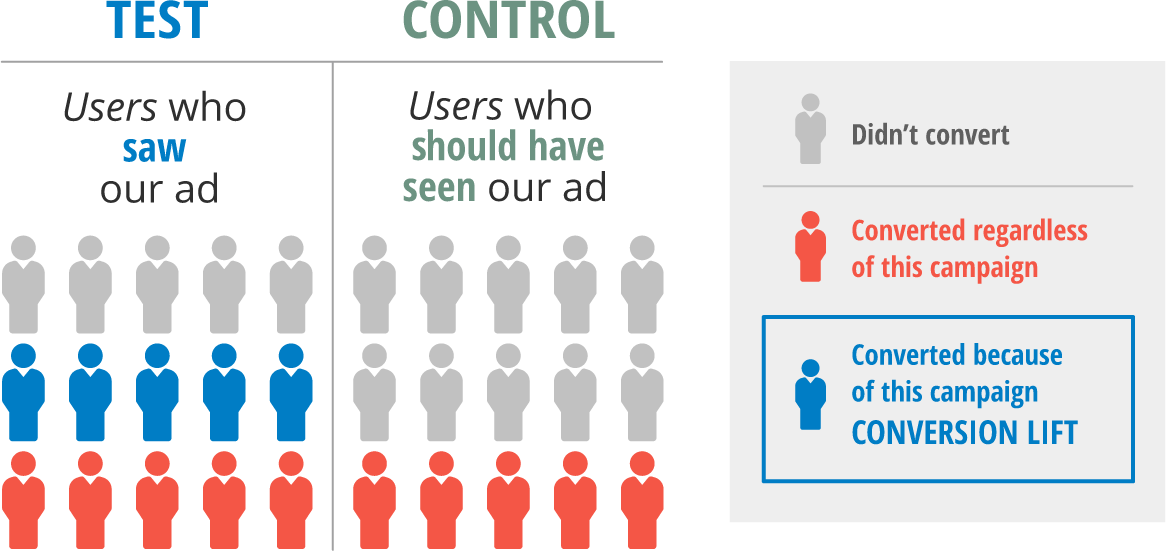

A conversion lift test creates a control group out of the pool of users who would have seen our ad (because we won the auction). Instead of showing them our ad, the platform shows the next ad in the ranking.

Afterward, you can track and compare the downstream behavior of the users who actually saw your ad (test group) and those who should have, but didn’t (control group).

A simple comparison of conversions in these two groups shows the incremental value of the tested campaign.
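A minimal sketch of that comparison follows. The function name `conversion_lift` and the group sizes are invented for illustration; the only assumption beyond the text is that the control group is scaled to the size of the test group when the two differ.

```python
def conversion_lift(test_users, test_conversions, control_users, control_conversions):
    """Return (incremental conversions, relative lift) for a lift test."""
    # Conversions the test group would have produced at the control group's rate,
    # i.e. without seeing the ad.
    baseline = control_conversions * test_users / control_users
    incremental = test_conversions - baseline
    relative_lift = incremental / baseline if baseline else float("nan")
    return incremental, relative_lift

# Hypothetical test: 100,000 users saw the ad, 50,000 were held out.
incremental, lift = conversion_lift(
    test_users=100_000,
    test_conversions=1_200,
    control_users=50_000,
    control_conversions=500,
)
print(f"incremental conversions: {incremental:.0f}, relative lift: {lift:.0%}")
```

In this example only 200 of the 1,200 conversions in the test group are incremental. The other 1,000 would most likely have happened anyway.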

This concept is so simple and obvious.

It’s hard to believe that the online marketing industry has had to wait for this feature for so long.

Case Study: The Value of Video Views

My company ran a conversion lift test of a YouTube campaign for an online travel agency.

The test showed that the real impact of the campaign was significantly lower than the number of post-view conversions.

It also revealed the inaccuracy of algorithmic attribution models.

However, it also proved that video ads drive sales well beyond the post-click effect.

User Buckets

The conversion lift experiment is not yet available for all advertisers and all types of campaigns.

However, a long time ago, Google Analytics introduced user buckets. This feature makes it possible to conduct controlled experiments for remarketing campaigns.

Google Analytics randomly assigns each of your users to one of 100 buckets. The User Bucket dimension (values 1 to 100) indicates the bucket to which the user has been assigned.

A User Bucket range can be used as a condition in audience definitions, and these audiences can be used as remarketing lists in Google Ads.

For example, the audience in the 1-50 range can serve as the control group and the audience in the 51-100 range as the experiment group. You can then compare the effects of remarketing displayed only to the experiment audience.
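The idea of bucketing can be illustrated with a short sketch. Google Analytics performs the assignment itself, and how it does so is not described here. The hash-based `user_bucket` function below is only a hypothetical stand-in that shows how a stable 1 to 100 bucket yields a roughly even control and experiment split.

```python
import hashlib

def user_bucket(client_id: str) -> int:
    """Map an ID to a stable bucket in 1..100 (illustrative, not GA's method)."""
    digest = hashlib.sha256(client_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100 + 1

def group_for(bucket: int) -> str:
    """Buckets 1-50 form the control group, 51-100 the experiment group."""
    return "control" if bucket <= 50 else "experiment"

# Hypothetical client IDs.
ids = [f"client-{n}" for n in range(10_000)]
groups = [group_for(user_bucket(cid)) for cid in ids]
control_share = groups.count("control") / len(groups)
print(f"control share: {control_share:.1%}")
```

The property that matters is that the assignment does not depend on user behavior, so the two audiences differ only in whether they are shown the remarketing ads.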

Testing Remarketing Effectiveness

To conduct a conversion lift experiment for remarketing campaigns, you simply have to:

- Add the control list (a User Bucket range) as a negative audience.

- Observe the differences in conversions between the control and experiment segments.

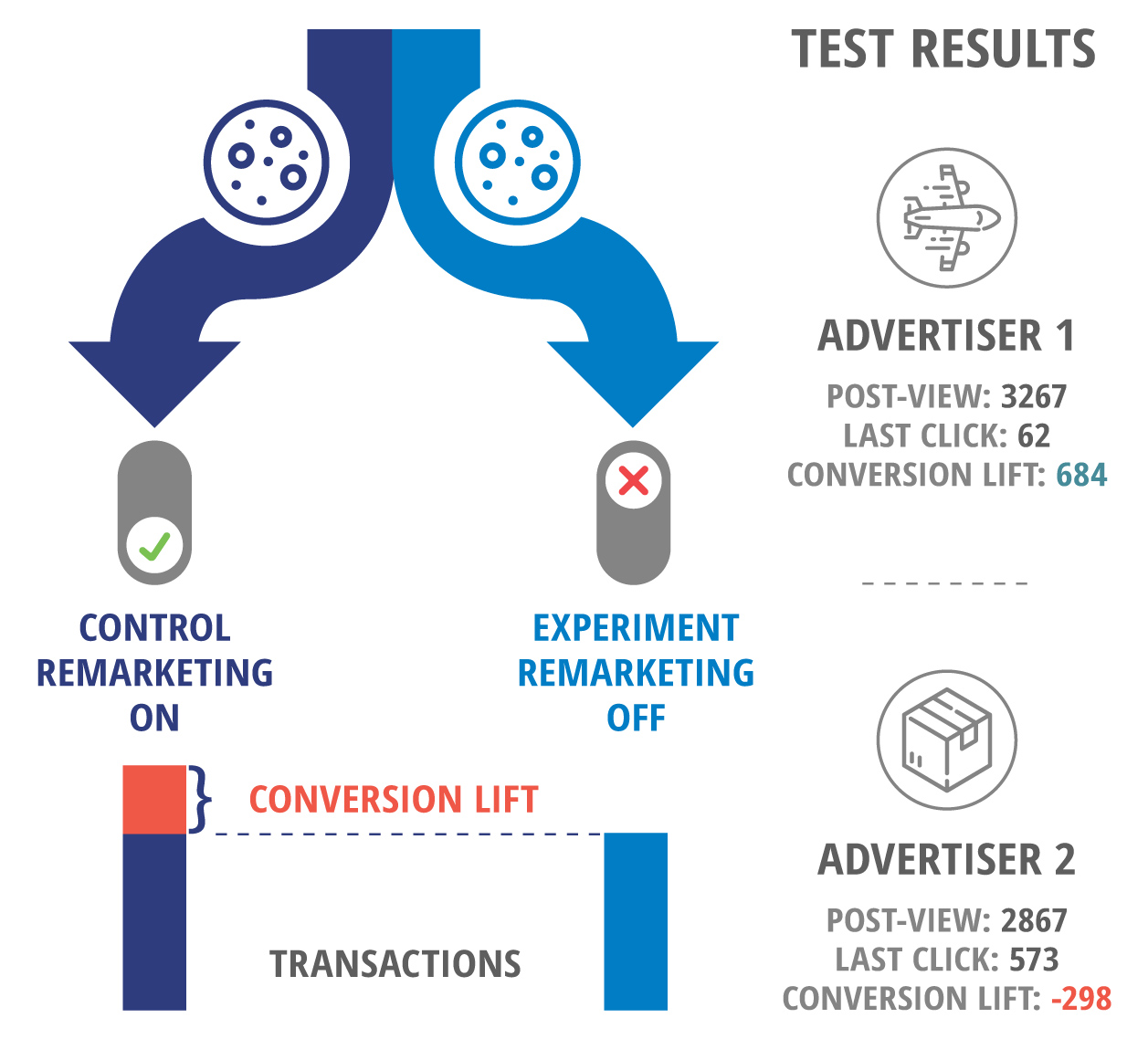

Depending on the advertiser and the campaign, this test can produce completely different results.

For example:

- In the case of the online travel agency, remarketing generated a high number of incremental conversions, more than 10 times higher than the post-click conversions.

- A similar experiment made by a parcel service business showed that the users who saw remarketing ads had even fewer conversions than the control group, despite a significant number of post-click conversions.

So, is remarketing effective?

These results clearly show that there is no universal answer to this question.

Although it’s unlikely that the ads have a negative effect on the conversion rate, observing a post-click conversion does not always mean that the sale wouldn’t have happened without the click.

Is SEM PPC Worth Its Cost?

Branded term campaigns (i.e., when an advertiser uses its own brand name as a keyword in search engine ads) often have excellent KPIs.

The CTR is high and the conversion rate is usually above average.

However, what would happen if we didn’t pay for this ad?

Our website should normally be listed in the first position in organic search results for our own name. It will be placed below our competitors’ ads if they appear there.

Will the average user click the competitor’s ad instead? Or maybe rather scroll a couple of lines down to find our organic listing?

If not, will this user buy from the competitor? Or rather go back to the search engine and try to find what he or she was looking for?

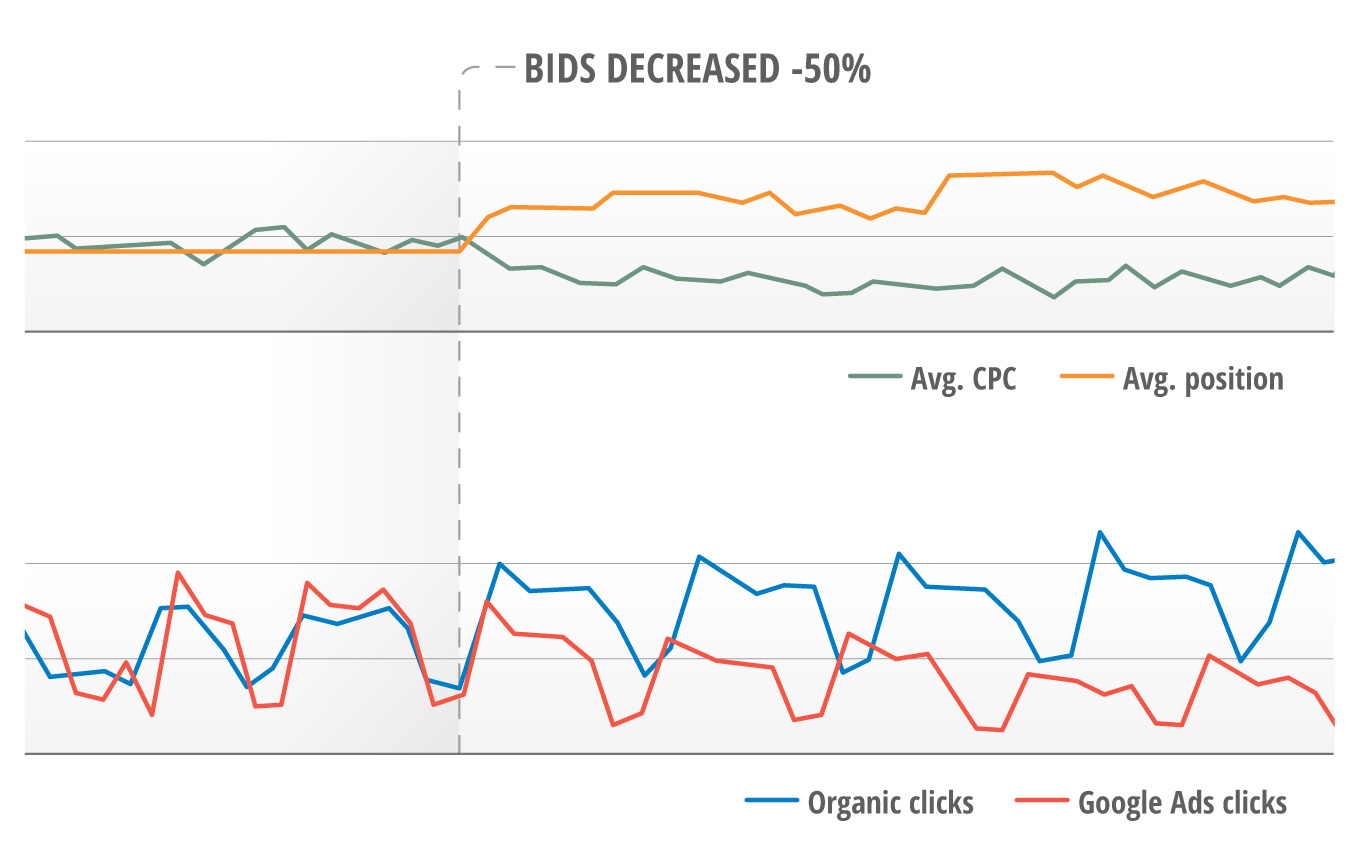

If we turn off or limit the branded term campaign, we can often observe an increased number of organic clicks for branded search terms.

In the example below, the bids in the branded term campaign have been decreased by approximately 50 percent.

So, how many of the users who clicked our branded term ads would convert even if we didn’t pay for our own brand keywords?

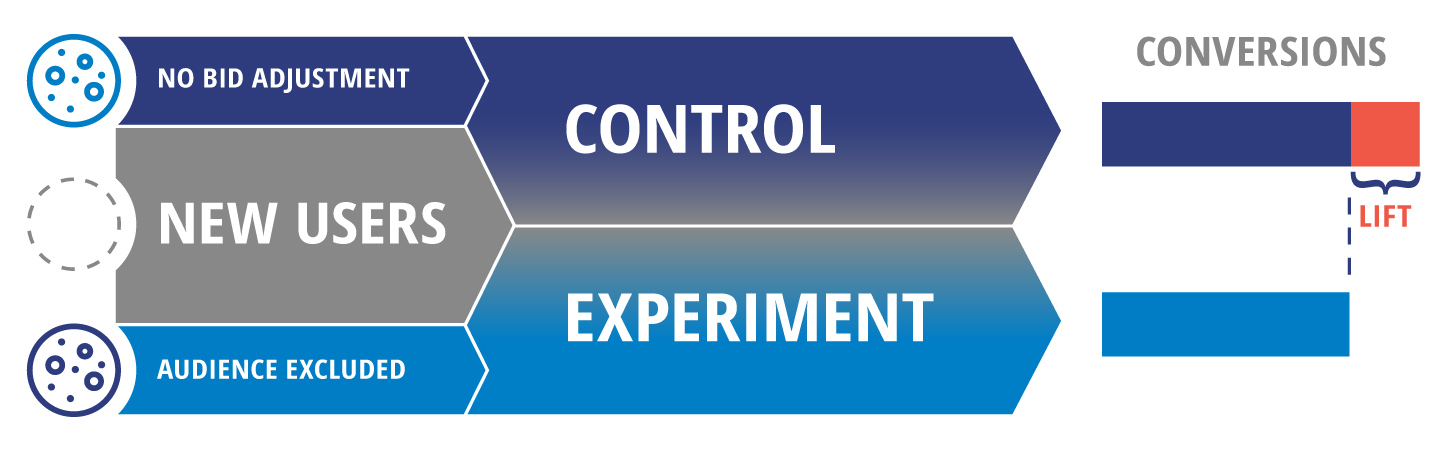

Conversion lift experiments are not yet available for search campaigns. However, user buckets can be used to create remarketing lists for search ads (RLSA) and at least test the incremental impact on returning users.

This experiment is a little more complicated. Unlike remarketing, search engine ads also target new users.

These users have not visited our website before and, for this reason, are not in any of the User Buckets yet. Immediately after the click, however, they will be assigned to a random User Bucket.

Therefore, in this test, we should exclude the experiment remarketing lists and use the control list in the observation setting.

- The experiment list: Excluded.

- The control list: Observation, no bid adjustment.

The result of this experiment for branded term campaigns usually shows that the actual incremental value of the campaign is significantly lower than the value of post-click conversions.

Quite often you may see that the actual lift is statistically insignificant. So, do you still believe in post-click conversions?

You can also try to test any SEM campaign using this method. Please note that it only tests the behavior of the returning users.

The impact of the campaign on new users can be different. However, based on the results, you may at least decide to modify your RLSA bid adjustments.
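To judge whether a lift measured this way is statistically significant, one common option is a two-proportion z-test. The sketch below is one way to do it under the normal approximation, not necessarily the method used in the experiments described here. The function name and all counts are hypothetical.

```python
from math import erf, sqrt

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference of two conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_a - rate_b) / std_error
    # Two-sided p-value from the standard normal CDF.
    normal_cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - normal_cdf)
    return z, p_value

# Hypothetical returning users: ads shown vs. ads withheld.
z_small, p_small = two_proportion_z_test(520, 20_000, 500, 20_000)
z_large, p_large = two_proportion_z_test(700, 20_000, 500, 20_000)

print(f"520 vs 500 conversions: z={z_small:.2f}, p={p_small:.3f}")
print(f"700 vs 500 conversions: z={z_large:.2f}, p={p_large:.6f}")
```

With these made-up counts, 520 versus 500 conversions cannot be distinguished from noise at the usual 5 percent level, while 700 versus 500 clearly can.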

Do We Need Attribution Modeling?

Attribution modeling isn’t art for art’s sake. The purpose of these calculations is to determine the incremental value of each channel.

Marketers need to know how many customers they gain by adding a given campaign to their marketing plan. It makes it possible to calculate how much they can spend on this campaign.

This is exactly what the results of a conversion lift experiment show. So why are we still building complicated models if we can actually measure it?

Unfortunately, we can’t use conversion lift to measure the efficiency of all types of ads yet.
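Where a lift measurement is available, the budget arithmetic can be sketched roughly as follows. All figures and function names are hypothetical, and the break-even rule (spend up to the margin earned on incremental conversions) is a simplifying assumption that ignores lifetime value and other effects.

```python
def cost_per_conversion(spend, conversions):
    """Plain cost per acquisition for a given conversion count."""
    return spend / conversions

def break_even_spend(incremental_conversions, margin_per_conversion):
    """Largest spend that the incremental conversions still pay for."""
    return incremental_conversions * margin_per_conversion

# Hypothetical campaign figures.
spend = 5_000.0
post_click_conversions = 1_000
incremental_conversions = 200   # taken from a lift test
margin_per_conversion = 20.0

reported_cpa = cost_per_conversion(spend, post_click_conversions)
incremental_cpa = cost_per_conversion(spend, incremental_conversions)
max_spend = break_even_spend(incremental_conversions, margin_per_conversion)

print(f"reported CPA: {reported_cpa:.2f}, incremental CPA: {incremental_cpa:.2f}")
print(f"break-even spend: {max_spend:.2f}")
```

In this example the campaign looks profitable on post-click numbers (a CPA of 5 against a margin of 20) but loses money once only incremental conversions are counted.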

Why Do We Trust in Clicks?

Numerous conversion lift experiments have shown that the post-view effect exists. We have also observed that certain clicks have almost no impact on the overall result.

Our strong belief in post-click conversions is being massively abused by some performance marketers and affiliates.

They use branded term ads, discount coupons, or other conversion hijacking techniques in order to swindle CPA commissions.

Hopefully, conversion lift experiments will become a standard in the online advertising industry in the future.

If we are able to conduct a controlled experiment for any campaign, keyword, or audience, there will be less guesswork in estimating the actual incremental value of advertising.

Image Credits

In-Post Images: Created by author, March 2019