The official documentation on how Core Web Vitals are scored was recently updated with new insights into how the Interaction to Next Paint (INP) scoring thresholds were chosen, offering a better understanding of the metric.

Interaction to Next Paint (INP)

Interaction to Next Paint (INP) is a relatively new metric that officially became a Core Web Vital in the spring of 2024. It measures how long a site takes to respond to interactions such as clicks, taps, and key presses on a physical or onscreen keyboard.

The official Web.dev documentation defines it:

“INP observes the latency of all interactions a user has made with the page, and reports a single value which all (or nearly all) interactions were beneath. A low INP means the page was consistently able to respond quickly to all—or the vast majority—of user interactions.”

INP measures the latency of all interactions on the page, unlike the now-retired First Input Delay (FID) metric, which only measured the delay of the first interaction. INP is considered a better measurement than FID because it gives a more accurate picture of the actual user experience.
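To make the "single value which all (or nearly all) interactions were beneath" idea concrete, here is a minimal sketch in Python. It assumes, based on my reading of the web.dev description, that the reported value is the slowest interaction, with one of the slowest interactions ignored for every 50 interactions on the page. The function name `estimate_inp` and the sample latencies are hypothetical, and this is an approximation rather than Chrome's actual implementation.

```python
def estimate_inp(latencies_ms):
    """Pick one representative latency from all interactions on a page.

    Assumption: the slowest interaction is reported, but one outlier is
    skipped for every 50 interactions so a single hiccup on a busy page
    does not define the whole score.
    """
    if not latencies_ms:
        return None  # a page with no interactions has no INP value

    ranked = sorted(latencies_ms, reverse=True)  # slowest first
    skip = len(ranked) // 50  # number of outliers to ignore
    return ranked[skip]


# Four interactions: the first one was fast, a later one was slow.
interactions = [40, 350, 90, 120]
print(estimate_inp(interactions))
```

A metric that only looked at the first interaction would see the 40 ms interaction and miss the 350 ms one, which is the gap INP was designed to close.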

INP Core Web Vitals Score Thresholds

The main change to the documentation is an explanation of how the performance thresholds for “poor,” “needs improvement,” and “good” were chosen.

One of the decisions was how to handle device differences: a good INP score is easier to achieve on desktop than on mobile because external factors like network speed and device capabilities heavily favor desktop environments.

But users’ expectations are not device dependent, so rather than create different thresholds for different kinds of devices, they settled on a single set of thresholds based on mobile devices.

The new documentation explains:

“Mobile and desktop usage typically have very different characteristics as to device capabilities and network reliability. This heavily impacts the “achievability” criteria and so suggests we should consider separate thresholds for each.

However, users’ expectations of a good or poor experience is not dependent on device, even if the achievability criteria is. For this reason the Core Web Vitals recommended thresholds are not segregated by device and the same threshold is used for both. This also has the added benefit of making the thresholds simpler to understand.

Additionally, devices don’t always fit nicely into one category. Should this be based on device form factor, processing power, or network conditions? Having the same thresholds has the side benefit of avoiding that complexity.

The more constrained nature of mobile devices means that most of the thresholds are therefore set based on mobile achievability. They more likely represent mobile thresholds—rather than a true joint threshold across all device types. However, given that mobile is often the majority of traffic for most sites, this is less of a concern.”

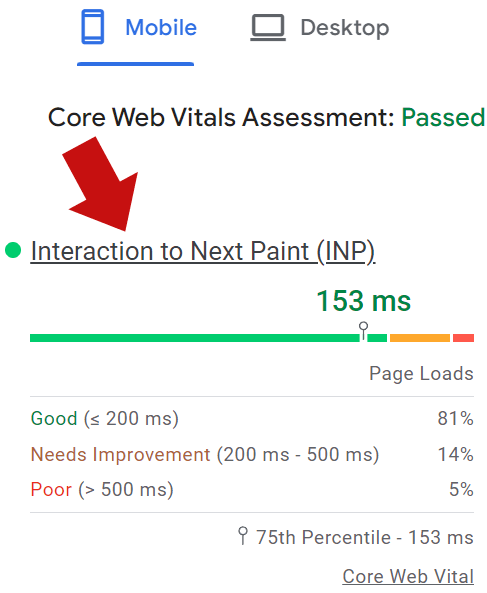

These are the thresholds Chrome settled on:

- An INP of 200 ms (milliseconds) or less represents a “good” score.

- An INP above 200 ms and up to 500 ms represents a “needs improvement” score.

- An INP above 500 ms represents a “poor” score.
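The three bands above can be sketched as a small Python helper. The function and constant names are hypothetical. The boundary handling (exactly 200 ms counts as “good” and exactly 500 ms as “needs improvement”) is an assumption based on my reading of the web.dev thresholds.

```python
GOOD_MAX_MS = 200  # at or below this, INP is rated "good"
POOR_MIN_MS = 500  # above this, INP is rated "poor"


def rate_inp(inp_ms):
    """Map an INP value in milliseconds to its rating band."""
    if inp_ms <= GOOD_MAX_MS:
        return "good"
    if inp_ms <= POOR_MIN_MS:
        return "needs improvement"
    return "poor"


for value in (120, 200, 350, 501):
    print(value, "ms ->", rate_inp(value))
```

Because the same thresholds apply to every device type, the helper takes no device argument.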

Screenshot Of An Interaction To Next Paint Score

Lower End Devices Were Considered

Chrome focused on choosing achievable thresholds. That’s why the INP thresholds had to be realistic for lower-end mobile devices, which so many people use to access the Internet.

They explained:

“We also spent extra attention looking at achievability of passing INP for lower-end mobile devices, where those formed a high proportion of visits to sites. This further confirmed the suitability of a 200 ms threshold.

Taking into consideration the 100 ms threshold supported by research into the quality of experience and the achievability criteria, we conclude that 200 ms is a reasonable threshold for good experiences”

Most Popular Sites Influenced INP Thresholds

Another interesting insight in the new documentation is that real-world achievability was a further consideration for the INP thresholds, which are measured in milliseconds (ms). To dial in the right threshold for poor scores, they examined the performance of the top 10,000 websites, because those sites make up the vast majority of website visits.

What they discovered is that the top 10,000 websites struggled to meet a 300 ms threshold. The CrUX data, which reports real-world user experience, showed that 55% of visits to the most popular sites were at the 300 ms threshold. That meant the Chrome team had to choose a higher “poor” threshold that was achievable by the most popular sites.

The new documentation explains:

“When we look at the top 10,000 sites—which form the vast majority of internet browsing—we see a more complex picture emerge…

On mobile, a 300 ms “poor” threshold would classify the majority of popular sites as “poor” stretching our achievability criteria, while 500 ms fits better in the range of 10-30% of sites. It should also be noted that the 200 ms “good” threshold is also tougher for these sites, but with 23% of sites still passing this on mobile this still passes our 10% minimum pass rate criteria.

For this reason we conclude a 200 ms is a reasonable “good” threshold for most sites, and greater than 500 ms is a reasonable “poor” threshold.”
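The achievability reasoning in that passage can be illustrated with a rough Python sketch. It checks a candidate pair of thresholds against the two criteria quoted above: at least 10% of sites should pass the “good” threshold, and roughly 10-30% of sites should land in “poor.” The function name `achievability` and the ten sample values are made up for illustration. They are not CrUX data, and this is not how the Chrome team's analysis was actually run.

```python
def achievability(site_inp_ms, good_max=200, poor_min=500):
    """Report how many sites would rate good or poor under given thresholds."""
    if not site_inp_ms:
        raise ValueError("need at least one site to evaluate")

    total = len(site_inp_ms)
    good_share = sum(v <= good_max for v in site_inp_ms) / total
    poor_share = sum(v > poor_min for v in site_inp_ms) / total
    return {
        "good_share": good_share,
        "poor_share": poor_share,
        # Quoted criterion: a minimum pass rate of 10% for "good".
        "good_meets_minimum": good_share >= 0.10,
        # Quoted criterion: "poor" should cover roughly 10-30% of sites.
        "poor_in_range": 0.10 <= poor_share <= 0.30,
    }


# Hypothetical per-site INP values in milliseconds.
sample = [150, 180, 240, 260, 310, 330, 420, 480, 520, 640]

report = achievability(sample)               # 200 ms / 500 ms thresholds
strict = achievability(sample, poor_min=300)  # stricter 300 ms "poor" cutoff
print(report)
print(strict)
```

With this made-up sample, the 500 ms cutoff keeps the “poor” share inside the target range, while a 300 ms cutoff would label most of the sample “poor,” which mirrors the pattern the documentation describes for popular sites on mobile.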

Barry Pollard, a Web Performance Developer Advocate on Google Chrome who is a co-author of the documentation, added a comment to a discussion on LinkedIn that offers more background information:

“We’ve made amazing strides on INP in the last year. Much more than we could have hoped for. But less than 200ms is going to be very tough on low-end mobile devices for some time. While high-end mobile devices are absolute power horses now, the low-end is not increasing at anywhere near that rate…”


A Deeper Understanding Of INP Scores

The new documentation offers a better understanding of how Chrome chooses achievable thresholds and takes some of the mystery out of the relatively new INP Core Web Vital.

Read the updated documentation:

How the Core Web Vitals metrics thresholds were defined

Featured Image by Shutterstock/Vectorslab
