Every so often the SEO community will erupt into an uproar at the publication of a new ranking factors study.

The usual cry – “correlation is not the same as causation!”

You may be familiar with the terms.

Correlation is the “mutual relation of two or more things” and causation is “the action of causing or producing.”

Essentially, is something genuinely the cause of a result, or does it just happen to change in line with the result?

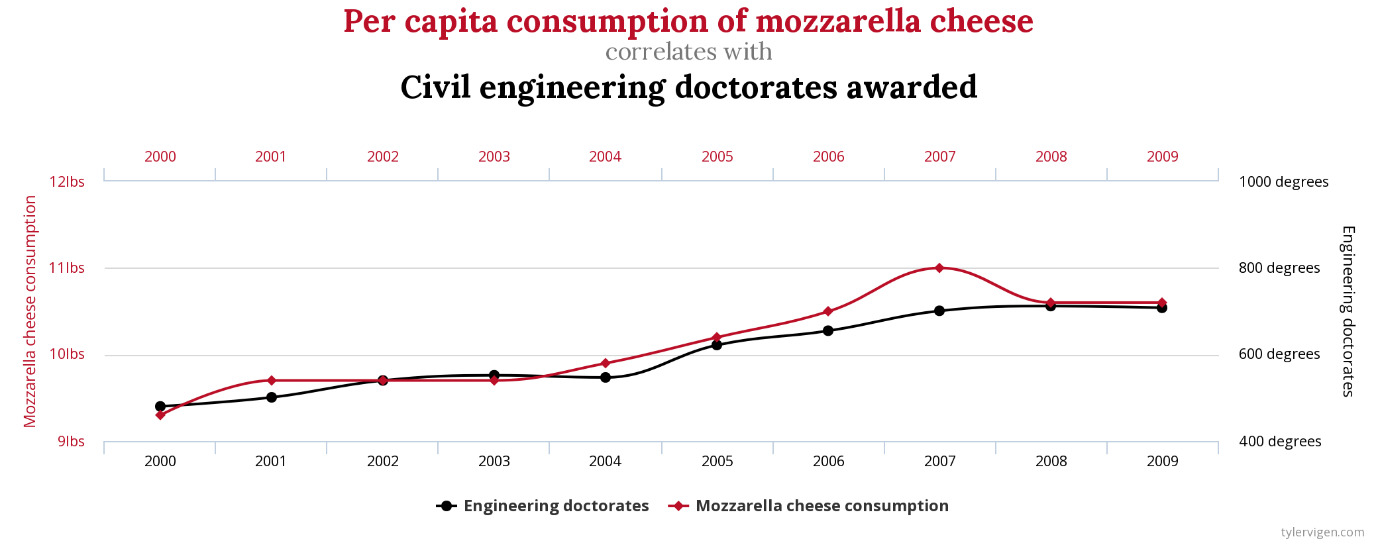

To illustrate, here is an unusual example of correlation.

According to the data gathered by Tylervigen.com from the U.S. Department of Agriculture and National Science Foundation, there is a direct correlation between the number of Civil Engineering doctorates awarded in the U.S. and the per-person consumption of mozzarella cheese.

That’s right.

Want more civil engineers to graduate in the U.S.?

You’d better start eating more cheese.

We can all quickly identify that this is likely a coincidence rather than a causal link.

This is a good example of correlation not being the same as causation.
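To see how easily two unrelated trends can line up, here is a minimal sketch that computes a Pearson correlation coefficient by hand. The two series are invented for illustration (they are not the Tylervigen.com figures); the only thing they share is that both happen to rise over time.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    return covariance / sqrt(spread_x * spread_y)

# Hypothetical yearly values, made up for this example only.
cheese_eaten = [10, 12, 13, 15, 18, 20]
doctorates_awarded = [100, 108, 115, 130, 142, 160]

r = pearson_r(cheese_eaten, doctorates_awarded)
print(f"r = {r:.2f}")  # close to 1, yet neither series causes the other
```

A coefficient near 1 only says the two series move together. It says nothing about why.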

Why Are Correlation & Causation a Concern in SEO?

A lot of SEO activity is based on trial and error, experience, and statements from search engine representatives.

Due to this, there are often assertions made like “SEO activity X has a positive effect on your webpage rankings.”

For example: “links from authoritative websites will improve your website’s SERP rankings.”

Sometimes, these will be accurate – the stated activity will be what has caused the ranking increase.

Other times, it is purely coincidental.

The issue with this is that there can be substantial time and money invested in carrying out SEO activities that will never pay off.

For instance, what if there was an SEO study that suggested the number of JPEGs on a page was a ranking factor?

This hypothetical study suggested that the more JPEGs a page has, the higher it is likely to rank in Google.

This might cause SEO professionals to start adding images to their pages regardless of whether they would benefit the end-user.

And paying for photographs or a premium image service could be expensive.

Not to mention that the time taken to upload the images to every page could also be costly.

How to Avoid Mistaking Correlation for Causation

How then do you decide if Y is affected by X, or if changes are mere coincidences?

Consider the Claim

First of all, consider what is being claimed.

Sometimes a common-sense check of what is being discussed will be enough to determine whether the correlation is a coincidence.

The following two questions can go a long way to working this out:

- How could the search engines measure this?

- How would it benefit the end user and therefore the search engines?

This is not an exhaustive list.

The cynical among us might ask, “would this financially benefit the search engine?”

Or you might wonder, “would this be the case for my industry?”

It might be that a supposed ranking factor would not make sense for the industry you are in.

For instance, “your money or your life” (YMYL) pages, ecommerce sites, and entertainment sites might be subject to different weightings for different ranking factors.

What Does Your Experience Say?

Have you experienced SEO results in a way that rings true with the causation statement made?

Your experience is just as valid as anyone else’s.

If you have seen the opposite happen – for instance, removing unnecessary JPEGs from a page caused your page’s ranking to increase – then that is reason enough to investigate the claim further.

Identify Other Factors

With the example given above, there might be other reasons why adding JPEGs to a page correlated with an increase in SERP rank for a page.

For instance, maybe the images included alt attributes and that was actually what made the difference in rank.

Perhaps the images were part of a page’s redesign and other elements like unique copy were also introduced at the same time.

It’s also hard with studies run by third parties to know the true methodology.

Perhaps there were other variables in play that the researchers did not account for.

That alone could call into question the validity of the experiment.
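A minimal sketch of how a hidden variable can manufacture a correlation is shown here. The pages, field names, and ranking gains are all hypothetical; in this made-up data the new copy drives the gains, and JPEGs simply tend to arrive alongside it.

```python
from statistics import mean

# Hypothetical pages: "gain" is the number of positions gained in the SERPs.
pages = [
    {"jpegs": True,  "new_copy": True,  "gain": 5},
    {"jpegs": True,  "new_copy": True,  "gain": 4},
    {"jpegs": True,  "new_copy": True,  "gain": 6},
    {"jpegs": True,  "new_copy": False, "gain": 0},
    {"jpegs": False, "new_copy": True,  "gain": 5},
    {"jpegs": False, "new_copy": False, "gain": 0},
    {"jpegs": False, "new_copy": False, "gain": 1},
    {"jpegs": False, "new_copy": False, "gain": -1},
]

def mean_gain(rows, jpegs):
    """Average ranking gain for rows that did (or did not) add JPEGs."""
    return mean(row["gain"] for row in rows if row["jpegs"] == jpegs)

# Naive comparison: pages with JPEGs vs. pages without.
overall_gap = mean_gain(pages, True) - mean_gain(pages, False)

# Same comparison, but only between pages with the same copy status.
gaps_by_copy = {}
for new_copy in (True, False):
    group = [p for p in pages if p["new_copy"] == new_copy]
    gaps_by_copy[new_copy] = mean_gain(group, True) - mean_gain(group, False)

print(overall_gap)   # JPEG pages look better overall
print(gaps_by_copy)  # the gap disappears once copy changes are held constant
```

Comparing like with like is the simplest way to check whether another factor is doing the real work. A study that does not report this kind of breakdown leaves the question open.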

How Big Is the Sample?

When running statistical experiments, a researcher will be looking for results that have reached statistical significance.

That is, evidence that the relationship between X and Y is unlikely to have occurred through chance.

In order to achieve a reliable level of statistical significance, your sample size has to be large enough.

If your sample size is not large enough, your experiment may be subject to sampling error.

A correlation may emerge that would simply not be there in a larger sample size.

In our example, what if only three websites were used as part of the study into how JPEGs affect rankings?

If two of them had seen ranking increases when adding JPEGs to a page and one of them did not, you might be tempted to conclude that JPEGs have a positive effect on rankings.

However, what if you added a further seven websites to your study, and none of those showed an increase in rankings when JPEGs were added to the page?

That would bring the result to two sites that showed rank increases and eight that did not.

What would happen to the results with another 10 websites?

Three is simply too small a sample size to make declarations about all the websites in the search engine index.
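To put rough numbers on this, here is a small sketch using the binomial distribution. It rests on a loud assumption that is not part of any real study: that a page's ranking is as likely to rise as not in a given period whether or not JPEGs are added (a 50% baseline). The function name is hypothetical.

```python
from math import comb

def chance_of_at_least(successes: int, sites: int, baseline: float = 0.5) -> float:
    """Probability of `successes` or more ranking increases across `sites`
    if each site rises with probability `baseline` regardless of JPEGs."""
    return sum(
        comb(sites, k) * baseline**k * (1 - baseline) ** (sites - k)
        for k in range(successes, sites + 1)
    )

three_site_study = chance_of_at_least(2, 3)   # two of three sites rose
ten_site_study = chance_of_at_least(2, 10)    # two of ten sites rose

print(f"{three_site_study:.3f}")  # 0.500
print(f"{ten_site_study:.3f}")    # 0.989
```

Under that assumed baseline, two increases out of three sites would happen by chance half the time, so the three-site result is no evidence at all. Two increases out of ten is almost guaranteed by chance alone.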

How Varied Is the Sample?

Similarly, if your sample is not a diverse enough representation of the entire data set then you risk sampling errors.

For instance, what if the websites chosen for our hypothetical study were all ecommerce sites?

Would it be reliable to apply those findings to information-only sites?

Could they be applied to YMYL sites?

What if the websites sampled were all built on WordPress?

Would it be a fair conclusion to assume that websites running on Magento would rank in a similar way?

A Caution on Third-Party Studies

It is very easy to see studies shared around the likes of Twitter and LinkedIn and assume they are thorough.

Similarly, listening to a speaker share their case study at a conference may inspire confidence that their conclusions are valid.

You can’t be sure of this, however.

A third-party study may have experimental flaws.

One case study is too small a sample size.

Conclusion

Whenever you hear a claim about what is or isn’t important in SEO and there is data used to back it up, make sure you bring yourself back to your school science experiments.

Was there a large enough sample used?

Were all the variables accounted for and controlled?

Would you get an “A” grade for that study?

If there is any doubt, do not take the conclusions as fact.

Instead, continue to experiment on your own.

Monitor the effect of any changes you make to your sites to see if they have the result you expected.


Image Credits

Screenshot taken by author, October 2020