It’s all about happiness metrics

A while back I wrote here about how search engines go about discovery, the act of finding your pages. I thought we should continue that with a look at how they crawl and index them as well. With a twist, we’re going to discuss the uber fast world of real time.

Over the last while we’ve seen a distinct interest in search for social and so-called real time search. Actually, even efforts to index the web at ever increasing rates has been a mainstay for many years now (remember when Google refreshed once/month? Unheard of these days). To that end (infrastructure) we can look at the latest effort; Caffeine.

There is a decided need for speed that is evident in many areas including;

- Caffeine

- Pubsubhubub

- Social (for discovery)

- RSS from any page

- Page load speed (?)

You get the idea. One thing that one has to consider when it comes to processing is to enable systems that are optimally efficient. The real problem comes in deciding what is and isn’t important to people in ‘real time’.

Search engines need to look at;

- Cost of crawling

- Re-fresh rates

- Query space freshness requirements

- Value of changes

- Content type

It is certainly an interesting discussion and one that is part of, if we realize it or not, our collective futures (as search marketers and people). One problem we often see in the search space I that we’re more reactive than pro-active. We see all of this evolution around us, but seldom go below the surface.

Start your engines

Now, since we’re warmed up, let’s take a quick look at Greg Linden’s post; Re-crawling and keeping search results fresh – and in particular;

“The core idea here is that people care a lot about some changes to web pages and don’t care about others, and search engines need to respond to that to make search results relevant.”

So, search engine folks need to consider not only the ‘if’ and ‘when’ it is crawled/indexed, but where and how they are returned, re-crawled etc… (especially in heavy universal situations). But at the same time crawling all the pages of the web is inefficient and costly.

In the case of real time, or even the regular index, the update frequency is going to be a huge consideration. Websites that are active, tend to have higher crawl rates. This means we should have a strong content strategy in place if we want to make the most from the new speeder search engines.

The real time web puts a premium on discoverability and indexation. This means, as discussed before, adopting more push technology in your efforts is likely a good idea. A few of the aspects that search engines (such as Google) look at are;

- Trigger Events – this would be elements such as hot news, query analysis that set off the actual event for indexing/displaying results.

- Quality Signals – establishing a Trust/Harmonic Rank type of concept for links in social (trusted domains etc a la OneRiot)

- Update Frequency – obviously consistency would play a roll in how often a page is visited (and hopefully indexed).

It is certainly evident that search engines are going to have to find not only better signals to make decisions that produce a valuable result, but find ways to create inclusion/interfaces that better attract users.

The future of real time search

This all does seem to point to the fact that we’re not quite there yet. But let’s continue the trail and see where it leads.

The next consideration we need to take in is that efficacy of the information being presented. The obvious element here is going to be the more fluid data points. What one Googler said they refer to as ‘Happiness Metrics’. These are the user metrics such as query analysis, click data and other forms of implied user feedback.

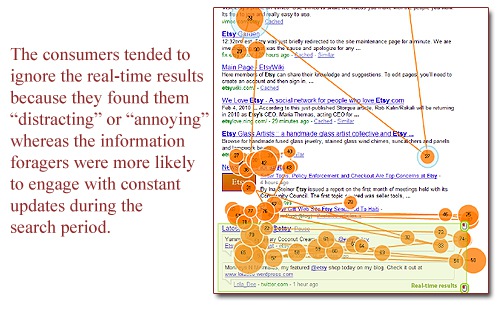

But the question remains, how much value to RTS results have? In a recent study done by One Up Web, they discovered;

- 73% had never heard of real-time results before participating in this study

- Only a quarter of the consumers cared for the real-time results compared to 47% of the information foragers

- The majority of the participants surveyed were indifferent to the real-time results

- Yet, we also know that consumer choices are influenced by their social graph. But interestingly enough, it was the consumers that were least interested in the social component of the real-time results.

- The consumers tended to ignore the real-time results because they found them “distracting” or “annoying” whereas the information foragers were more likely to engage with constant updates during the search period.

One thing that comes to mind is finding some type of popularity measure for the social world. Or, taking it to a more granular level with personalization. It stands to reason that users that are like-minded will produce more usable results. At this point the real time and social search realms are divided. I’d have to believe some type of hybrid, inclusive of a personalization aspect, would be the future of RTS.

To some degree at least (combining user feedback signals with the social graph).

Grab and Hold

If it’s for real time search, news or just a faster regular index search, speed is a concept worth noting for SEOs.

If you’ve ever played ‘grab and hold’ with the QDF (query deserves freshness) then you would be familiar with velocity concepts. If you haven’t, let’s review. This analogy comes from military concepts of taking a piece of land and holding it. For our purposes, we’re looking to get a foot hold with temporal advantages, then maintain the ground we’ve gained.

If you’ve ever noticed a relatively new page that ranks early one, but degrades over (a short period of time), then you’ve seen it in action.

Getting the most from RTS is often much the same, though not as stable. You will want to have all your ducks in a row from SEOs to PR peeps and Social Media depts. Given the flux and temporal nature to RTS, this is at a premium. That combined with what we know of trust signals, is a natural part of any RTS targeting component.

It’s a pushy kind of world

And so we, as SEOs, need to start to consider not only how push technologies work, but the (potential) evolution of the world of real time search. It is part and parcel to any universal search strategy. We need to get in tune with the PR and social peeps. But do we need to do it now??

Nope… not really. In the current incarnation it is the least of my worries as far as universal search strategies are concerned. We’re much better off looking at verticals such as news, video and shopping. I do think it is something we should be watching as it has the potential to become a valued segment in the near future. Besides, understanding how to make the most of push technologies, syndication and visibility in the modern age of need for speed, will only help us in the long run.

On a side note, as I tried to actually get some RTS results on Google as I wrote this last night, it seems to have been scaled way back. It took some Twitter trending terms to actually produce one. This seems to speak to how valued it is in the current SERP landscape.

Anyway, I hope you enjoyed this little adventure, and if you have questions on crawling or thoughts on real time search – please do sound off in the comments.

Resources

As always, I have some interesting patents for those looking to dig deeper into the various methods used in crawling/indexing;

Googly Patents

- Duplicate content search

- Method and system for query data caching and optimization in a search engine system

- Search result ranking based on trust

- Multiple index based information retrieval system

- Systems and methods of synchronizing indexes

- Duplicate document detection in a web crawler system

Microsoft Patents

- Using core word to extract key phrases from documents

- Mining information based on relationships

- Acquiring web page information without commitment to downloading the web page

- Classifying search query traffic

Yahoo Patents

- Segmentation of search topics in query logs

- Systems and methods for search query processing using trend analysis

- Optimizing ranking functions using click data

- System for refreshing cache results

![AI Overviews: We Reverse-Engineered Them So You Don't Have To [+ What You Need To Do Next]](https://www.searchenginejournal.com/wp-content/uploads/2025/04/sidebar1x-455.png)