Getting deindexed by Google can hit you like a bolt from the blue.

One day everything is fine. The next, all your organic traffic has vanished.

Why does this happen?

What can you do to fix it – fast?

The first order of business is to take a deep breath. Try not to panic.

Your site can bounce back from being deindexed, although it might take a while to recover your previous rankings.

Start the recovery process by figuring out why your site was deindexed in the first place.

Why Did Google Deindex Your Site?

A website is typically deindexed for one of two reasons. Either:

- Google has taken a manual action on your site.

- Someone accidentally introduced a mistake in the website’s code or settings that caused the deindexation.

If a manual action has been applied, you will have received a notification in Search Console detailing the infraction.

The most common reason for a manual action is that your site has broken Google’s webmaster quality guidelines.

If you aren’t already familiar with Google’s guidelines, now is a good time to look them over.

You should follow these guidelines to the letter – they’re the key to maintaining a good standing with Google.

Maddy Osman provided a wonderful write-up of 16 possible reasons your site might have become deindexed.

This article provides solutions for some of the major causes of deindexation and then shows you the steps to recovery.

Unnatural Links to/from Your Website

Unnatural links can come from a number of practices:

- Low-quality guest posting.

- Acquiring too many inbound links in a short timeframe. To Google, this can look like you’re buying links.

- Spam blog commenting.

- Participating in link exchanges or link farms.

Solution

If you received a manual action for unnatural links, the first thing you need to do is perform an audit on your backlink profile.

During this process, you need to identify which links are relevant and natural, and which ones are unnatural or spammy.

This analysis should leave you with a list of links that need to be disavowed.

After submitting your disavow file, file a reconsideration request explaining what happened and what steps you have taken to rectify the violation.
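A disavow file is a plain text file with one entry per line: either a full URL or a whole domain prefixed with `domain:`. Lines starting with `#` are comments. As a rough sketch of how the audit results could be turned into that file, the Python below groups flagged URLs by host. The `build_disavow` helper, its threshold, and the sample URLs are all hypothetical and only meant for illustration.

```python
from urllib.parse import urlparse


def build_disavow(flagged, whole_domain_threshold=2):
    """Return disavow-file text for a list of flagged backlink URLs.

    Hosts with at least `whole_domain_threshold` flagged URLs are
    disavowed as a whole domain; the rest are listed URL by URL.
    The threshold is an arbitrary choice for this sketch.
    """
    by_host = {}
    for url in flagged:
        host = urlparse(url).netloc.lower()
        by_host.setdefault(host, []).append(url)

    lines = ["# Disavow file generated from backlink audit"]
    for host in sorted(by_host):
        urls = by_host[host]
        if len(urls) >= whole_domain_threshold:
            lines.append(f"domain:{host}")
        else:
            lines.extend(sorted(urls))
    return "\n".join(lines) + "\n"


# Hypothetical audit output, for illustration only.
flagged = [
    "http://spam-directory.example/links/page1.html",
    "http://spam-directory.example/links/page2.html",
    "http://blog.example/comments/123",
]

if __name__ == "__main__":
    print(build_disavow(flagged), end="")
```

Review the generated file by hand before you upload it, since disavowing a good link by mistake can hurt more than it helps.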

Content Concerns: Duplicate, Thin, Auto Generated & Non-Original

These are all violations of Google’s quality guidelines.

Spun or scraped content, spammy content, and thin content all fall under the low-quality umbrella.

Bad spelling and grammar can negatively impact your rankings.

Solution

If you are publishing auto-generated or non-original content, you probably know which pages are affected.

On the other hand, it can be a little more difficult to identify thin or duplicate content pages.

Use an SEO crawler (e.g., DeepCrawl, Screaming Frog, Sitebulb) to uncover these types of pages quickly.

Once identified, you either need to spend the time and resources to develop real value-driven copy, or you need to remove the low-quality pages.

Finally, file a reconsideration request.
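If you already have the text of your pages exported from a crawl, a first pass at spotting thin and duplicate pages can be as simple as the Python sketch below. It is not how any of the crawlers above work internally. The `audit_pages` helper and the 300-word cut-off are assumptions for this example, and the duplicate check only catches pages whose text is identical after lowercasing and stripping punctuation.

```python
import hashlib
import re


def audit_pages(pages, min_words=300):
    """Split pages into thin ones and groups of exact duplicates.

    `pages` maps URL -> visible body text. `min_words` is an arbitrary
    cut-off chosen for this sketch, not a threshold published by Google.
    """
    thin = []
    by_digest = {}
    for url, text in pages.items():
        words = re.findall(r"\w+", text.lower())
        if len(words) < min_words:
            thin.append(url)
        # Hash the normalized word sequence so identical copy collides.
        digest = hashlib.sha256(" ".join(words).encode("utf-8")).hexdigest()
        by_digest.setdefault(digest, []).append(url)
    duplicates = [sorted(urls) for urls in by_digest.values() if len(urls) > 1]
    return sorted(thin), duplicates
```

Calling `audit_pages({"/a": text_a, "/b": text_b})` returns a list of thin URLs and a list of duplicate groups, which you can then rewrite or remove.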

Cloaking

If you show search engines and users two different sets of content or URLs, that is cloaking, and it can result in a manual action against your website.

Solution

Sometimes this can occur through no fault of your own.

For example, if your website has content behind a paywall for subscribers, it might look like cloaking. In this case, you need to add JSON-LD structured data to your pages that marks the paywalled content. Google has provided detailed instructions for this type of website.
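To give a sense of what that markup involves, here is a minimal Python sketch that builds the JSON-LD for one article. The property names (`isAccessibleForFree`, `hasPart`, `cssSelector`) are the ones used in Google’s paywalled content documentation. The `paywall_markup` helper, the headline, and the `.paywall` class name are assumptions for this example, so check Google’s current instructions before relying on it.

```python
import json


def paywall_markup(headline, css_selector):
    """Build a JSON-LD script tag marking one page section as paywalled."""
    data = {
        "@context": "https://schema.org",
        "@type": "NewsArticle",
        "headline": headline,
        "isAccessibleForFree": False,
        "hasPart": {
            "@type": "WebPageElement",
            "isAccessibleForFree": False,
            # Must match the class wrapping the subscriber-only content.
            "cssSelector": css_selector,
        },
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    # Hypothetical headline and class name, for illustration only.
    print(paywall_markup("Example subscriber article", ".paywall"))
```

The resulting script tag goes in the HTML of the paywalled page, and the selector has to point at the element that actually wraps the hidden content.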

Another cause through no fault of your own could be that your website has been hacked. Hackers commonly use cloaking as a way to direct users to spammy websites.

This can usually be fixed pretty quickly by running a scan on your website to identify and fix the vulnerable pages. Another option is to use a service like Sucuri and let them clean up the malware on your behalf.

After the clean-up, you will need to file a reconsideration request describing the steps that were taken to resolve the issues.

Spammy Structured Markup

As with the other issues mentioned, there are general guidelines that apply to structured data.

Failure to follow the guidelines can most certainly result in a manual action and possible deindexing of a website.

Solution

If you received a manual action, start with the message provided in Search Console and compare it against the common causes of structured data manual actions to see where the issues lie.

Another way of identifying potential issues is to use the Structured Data Testing Tool to see what errors show up.

After fixing the items in question, file a reconsideration request, as with any manual action.

Other Reasons Your Site May Have Been Deindexed

If none of the above issues apply to your site, it may have been deindexed because of a technical mishap rather than a penalty.

Ask yourself:

Did You Tell Google to Deindex Your Site?

If you accidentally use the noindex directive on any of your pages, Google will remove them.

You can check this by looking in the <head> section of each page on your site.

If you’ve used the noindex directive, the code will look like this:

<meta name="robots" content="noindex">
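Checking every page by hand gets tedious on a large site. As a rough sketch, the Python below scans a page’s HTML for a robots meta tag containing noindex. The `RobotsMetaFinder` class and `has_noindex` function are hypothetical helpers written for this example, and they only look at meta tags, not at other places where a noindex can be set.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots"> and "googlebot" tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            content = attr.get("content", "")
            self.directives.extend(
                part.strip().lower() for part in content.split(",")
            )


def has_noindex(html):
    """Return True if the HTML contains a noindex robots meta directive."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives
```

Feed it the HTML source of each URL you care about, and investigate any page where it returns `True` unexpectedly.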

Bonus Tip for WordPress Sites

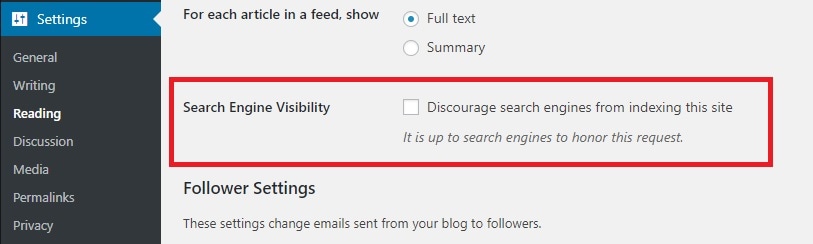

Check your settings to make sure Google is given access to crawl your site.

Click Settings in the left sidebar and go to Reading.

About halfway down the page, you’ll see a Search Engine Visibility option. Make sure the box is unchecked.

Make sure your WordPress settings allow Google to crawl your site.

Did Your Domain Expire?

If your domain name expires and you don’t renew it, your site will inevitably vanish from Google’s results.

Solution

Either set up a calendar reminder for a few weeks before your expiration date, or use a service like StatusCake’s domain monitoring, which alerts you when your domain is about to expire.

Did Your Server Crash?

If your site goes down for an extended period of time, it may disappear from Google’s index.

Solution

Invest in a website monitoring service that alerts you if your website goes down.

Although there are many options, I have found that StatusCake is great for the price and, as mentioned above, it gives you website monitoring and domain monitoring in one solution.
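To show what such a service does at its simplest, here is a minimal Python sketch of a single uptime check using only the standard library. It is no substitute for a real monitoring service. The `check_site` helper and its `opener` parameter (there to make the function easy to test) are assumptions for this example.

```python
import urllib.error
import urllib.request


def check_site(url, opener=urllib.request.urlopen, timeout=10):
    """Return (is_up, detail) for a single HTTP GET of `url`."""
    try:
        with opener(url, timeout=timeout) as response:
            status = response.status
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status such as 500.
        return False, f"HTTP {err.code}"
    except (urllib.error.URLError, OSError) as err:
        # DNS failure, refused connection, timeout, and similar.
        return False, f"unreachable: {err}"
    return 200 <= status < 400, f"HTTP {status}"
```

Run something like `check_site("https://www.example.com/")` on a schedule from a machine outside your own hosting, and send yourself an alert whenever the first value is `False`.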

Has Google Changed Their Algorithm Lately?

If Google has recently released a new algorithm update, that could have something to do with the problem.

Check whether the update came with any new guidelines that your site isn’t following.

Solution

The best way to identify this is to compare the dates of your traffic declines with the dates of known algorithm updates.

The Panguin Tool is a great free resource for this.
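If you would rather do the comparison yourself from an analytics export, the idea can be sketched in a few lines of Python. This is not how the Panguin Tool works. The `updates_near_drop` helper, the 50% drop rule, and the seven-day window are all assumptions for this example, and you would need to supply your own session counts and update dates.

```python
from datetime import timedelta


def updates_near_drop(daily_sessions, updates, window_days=7, drop_ratio=0.5):
    """Return (drop_date, nearby_update_names), or None if no sharp drop.

    `daily_sessions` maps date -> organic sessions; `updates` maps an
    update name -> date. A "drop" is the first day whose sessions fall
    below `drop_ratio` times the average of the previous seven recorded days.
    """
    days = sorted(daily_sessions)
    for i in range(7, len(days)):
        baseline = sum(daily_sessions[d] for d in days[i - 7:i]) / 7
        if baseline > 0 and daily_sessions[days[i]] < drop_ratio * baseline:
            drop = days[i]
            window = timedelta(days=window_days)
            nearby = [name for name, when in updates.items()
                      if abs(when - drop) <= window]
            return drop, sorted(nearby)
    return None
```

An empty list of nearby updates suggests the decline has another cause, such as one of the technical issues covered earlier.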

Next Steps After Fixing the Above-Mentioned Issues

- Submit a reconsideration request (if a manual action). If your site got deindexed for not following quality guidelines, you’ll need to submit a reconsideration request for Google to reindex it. Make sure that you’ve cleaned up all the issues (and documented how you did so) before you take this step.

- Submit sitemaps to Google. If your site got deindexed for another reason, such as a server crash, resubmit your sitemaps to Google to start the reindexation process.

- Look for alternate ways to get traffic to your site in the meantime. Social media can be a good way to drive traffic to your site while your organic traffic is still growing – plus, if you build a strong social media presence now, it will only help your site rank better after you’ve made a full recovery.

Summary

Getting deindexed by Google is bad news, but it’s not the end of the world.

If you take action quickly, your site can recover.

Start by identifying and fixing the issue that caused your site to be deindexed.

Then ask Google to reindex your site while doubling down on your SEO efforts.

More SEO Resources:

- 16 Ways to Get Deindexed by Google

- Your Indexed Pages Are Going Down – 5 Possible Reasons Why

- The Complete List of Google Penalties & How to Recover

Image Credits

Featured Image: DepositPhotos.com

All screenshots taken by Adam Heitzman, June 2018
