SEO can be quite tricky.

Today, your sites could deliver impeccable results in terms of traffic and CTR.

But your Google rankings could drop overnight, leaving you to wonder:

- Is it a Google algorithm update or a manual action?

- Did you lose backlinks?

- Is it possible that someone plagiarized your content?

- Is it your developers’ problem?

A sudden rankings drop can be caused by anything, from poorly written content to a hacked website.

As the owner of a digital marketing agency, I realize how important maintaining high (or at least stable) search rankings is to an SEO professional’s self-esteem.

Basically: If you can’t increase rankings and drive traffic, what kind of an SEO expert are you?

This simple but efficient seven-step SEO guide will help you find out what you should do to survive and recover from a drop in rankings and traffic.

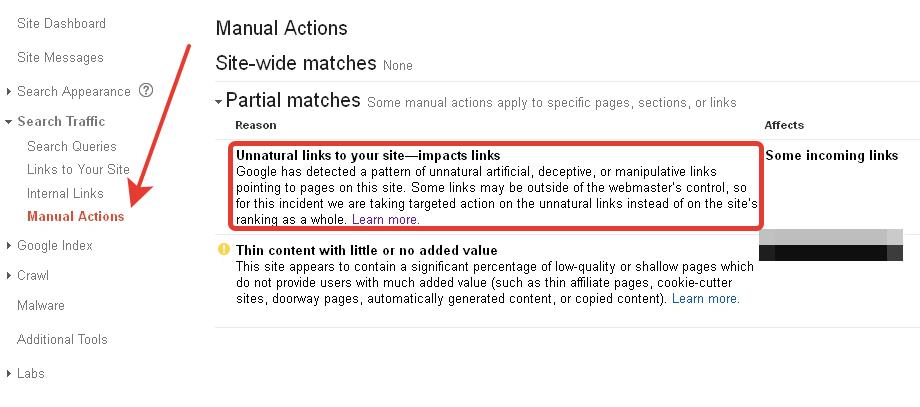

1. Check for a Manual Action

When Google takes manual action on a specific page or your entire site, you will receive a notice in Google Search Console, like this one:

Google is pretty descriptive about the causes of this particular manual penalty. In this case, figuring out why your site has received worse rankings or been entirely deindexed won’t be a problem.

The bad news is: manual action penalties can be hard to get rid of. Sometimes, it makes more sense to delete the pages in question than to fight the penalty. This is the case if your site has been hit by a Pure Spam penalty.

If you don’t see any Manual Action messages in Search Console, there are only two options left:

- Google has updated its algorithm.

- You or someone from your team is responsible for the rankings drop.

2. Was Your Site Hit by an Algorithm Update?

Before you start to panic and revamp your entire SEO strategy or analyze on-page, off-page, and technical SEO factors, identify whether Google is responsible for the rankings drop.

Basically, come up with a well-thought-out answer to the question: Was it a global algorithm update?

I can’t stress enough how important the right answer is. If you misdiagnose the cause, you could spend dozens of work hours and thousands of dollars to no avail.

Everybody makes mistakes. A single error in your rankings tracker may have caused the problem.

Alternatively, Google might have picked your site for ranking experiments. An ordinary server error could also be the answer.

Your site might be perfectly fine from an SEO perspective, but if you fail to locate the true cause of a rankings drop, you risk ruining your SERPs for real.

Here is what you can do to avoid mistakes:

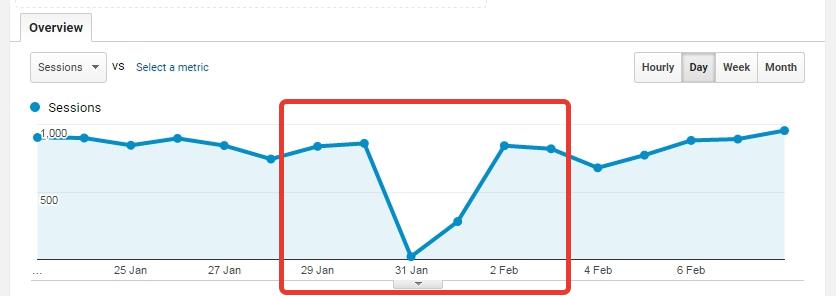

- Go to Google Analytics.

- Sift out pages that have suffered a loss of organic traffic.

- Analyze traffic dynamics for these pages (week by week and day by day).

- Review selected pages to find similarities (or differences).

- Create a list of hypothetical reasons for why your SERPs suffered.
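If you export page-level data, the "sift out" and "week by week" steps above can be partly automated. Below is a minimal Python sketch, assuming you have rows of (page path, week start date, organic sessions) exported from Google Analytics. The row format, the `flag_traffic_drops` name, the 30% threshold, and the sample numbers are all hypothetical.

```python
from collections import defaultdict
from datetime import date


def flag_traffic_drops(rows, drop_start, threshold=0.3):
    """Flag pages whose average weekly organic sessions fell by at least
    `threshold` (0.3 = 30%) from before `drop_start` to after it.

    rows: iterable of (page_path, week_start, organic_sessions) tuples,
    assumed to come from a Google Analytics export (hypothetical format).
    Returns {page_path: fractional_decline}, worst-hit pages first.
    """
    before = defaultdict(list)
    after = defaultdict(list)
    for page, week, sessions in rows:
        bucket = after if week >= drop_start else before
        bucket[page].append(sessions)

    flagged = {}
    for page, values in before.items():
        avg_before = sum(values) / len(values)
        if avg_before == 0:
            continue  # no baseline traffic to lose
        post = after.get(page, [])
        # A page with no rows after the drop is treated as having lost all traffic.
        avg_after = sum(post) / len(post) if post else 0.0
        decline = (avg_before - avg_after) / avg_before
        if decline >= threshold:
            flagged[page] = round(decline, 2)
    return dict(sorted(flagged.items(), key=lambda item: item[1], reverse=True))


# Made-up sample data: /blog/a collapses after Feb 20, /blog/b stays flat.
rows = [
    ("/blog/a", date(2017, 2, 6), 400), ("/blog/a", date(2017, 2, 13), 420),
    ("/blog/a", date(2017, 2, 20), 150), ("/blog/a", date(2017, 2, 27), 130),
    ("/blog/b", date(2017, 2, 6), 200), ("/blog/b", date(2017, 2, 13), 210),
    ("/blog/b", date(2017, 2, 20), 205), ("/blog/b", date(2017, 2, 27), 195),
]
print(flag_traffic_drops(rows, date(2017, 2, 20)))  # {'/blog/a': 0.66}
```

The flagged pages are the ones worth reviewing side by side for similarities when you build your list of hypotheses.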

Once you have a list of possible reasons why your rankings dropped, turn to Google Search Console to compare the data. Pay particular attention to clicks and impressions. Checking the average position for a selected group of keywords is also recommended.

After that, analyze how your targeted keywords behave in search results. Type in targeted keywords and phrases one by one to gain a clear picture of what’s going on with the rankings.

Is there a mismatch between the Google Analytics, Google Search Console, and Google search results?
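One way to answer that question systematically is to compute the percentage change for each data source over the same pages and date ranges, then see whether the sources agree. This is a hedged sketch: the source names, the -20% cutoff, and the sample figures are made up for illustration. Average position is left out on purpose, because a lower position number is better, so a percentage "drop" there would mean the opposite.

```python
def pct_change(before, after):
    """Percentage change from `before` to `after`; None if there is no baseline."""
    if before == 0:
        return None
    return round((after - before) / before * 100, 1)


def diagnose_drop(sources, threshold=-20.0):
    """Check whether independent data sources agree that traffic fell.

    sources: {source_name: (value_before, value_after)}, for example organic
    sessions from Google Analytics and clicks or impressions from Search
    Console for the same pages and date ranges. The names and the -20%
    threshold are illustrative assumptions.
    """
    changes = {name: pct_change(b, a) for name, (b, a) in sources.items()}
    dropped = [name for name, c in changes.items() if c is not None and c <= threshold]
    if dropped and len(dropped) == len(changes):
        verdict = "all sources agree: likely a genuine rankings/traffic drop"
    elif dropped:
        verdict = "sources disagree: rule out tracking or reporting errors first"
    else:
        verdict = "no significant drop in any source"
    return changes, verdict


changes, verdict = diagnose_drop({
    "analytics_sessions": (10000, 6200),
    "search_console_clicks": (9000, 5500),
    "search_console_impressions": (150000, 98000),
})
print(changes)
print(verdict)
```

If only one source shows the decline, a broken tracking code or reporting glitch is a more likely culprit than an algorithm update.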

You can use the following tools to assess and analyze your data more efficiently:

- Fruition’s Google Penalty Checker

- Barracuda

- Stat

If your rankings are declining substantially across Google Analytics, Google Search Console, and the search results themselves, a major algorithm shift may be underway.

Consider adjusting your SEO strategy but don’t rush. I strongly recommend allocating at least a couple of hours to conducting a more detailed analysis. Who knows? Maybe Google is not to blame.

Bonus tip! Ask fellow SEO pros whether they have noticed any changes in rankings. If they have, this is a strong indicator that Google has updated its algorithm. To confirm, check trustworthy SEO resources such as Search Engine Journal, Search Engine Land, or Moz.

3. Run a Detailed Backlink Analysis

Links from trustworthy websites are one pillar that enables a site to perform well in search results.

However, if a high-quality resource stops linking to your site or nofollows what was previously a followed link, a considerable drop in rankings is possible.

To figure out if that’s the case, use one of these link analysis tools:

- Ahrefs

- Majestic SEO

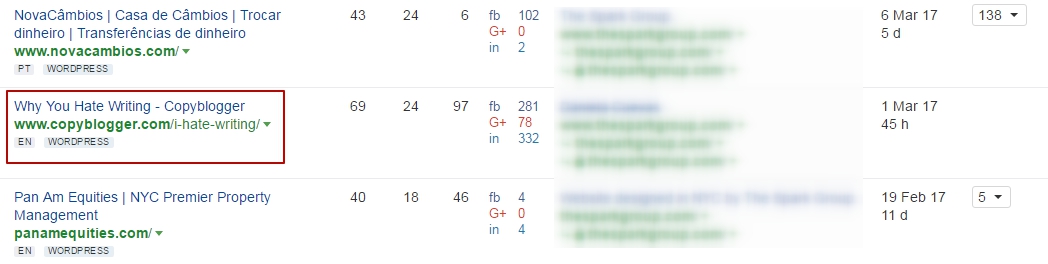

The screenshot below demonstrates the dynamics of referring domains in Ahrefs. The arrow marks the period when rankings started to drop. If lost links are the cause, the decline in referring domains should line up with the decline in rankings.

You can also combine results from Ahrefs and Majestic to pinpoint every problem in your backlink profile. Your goal is to check whether:

- Your site has suffered a sitewide link drop.

- A link drop has affected a group of pages or a specific page.

- Links have been removed from a particular site or several related sites.
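Once you have backlink exports from before and after the drop, those three checks boil down to a set difference plus two tallies. The sketch below assumes each export has been reduced to (source URL, target URL) pairs; that format, the function name, and the example domains are hypothetical and not tied to the actual Ahrefs or Majestic export layout.

```python
from collections import Counter
from urllib.parse import urlparse


def summarize_lost_links(old_links, new_links):
    """Compare two backlink snapshots taken before and after a rankings drop.

    Each snapshot is an iterable of (source_url, target_url) pairs, e.g. rows
    from two exports of your backlink tool (hypothetical format).
    """
    old, new = set(old_links), set(new_links)
    lost = old - new
    return {
        # Share of previously known links that disappeared (sitewide drop?)
        "lost_share": len(lost) / len(old) if old else 0.0,
        # Which of your pages lost links (one page or a group of pages?)
        "by_target": Counter(target for _, target in lost),
        # Which referring sites removed links (one site or several related ones?)
        "by_source_domain": Counter(urlparse(source).netloc for source, _ in lost),
    }


old = [
    ("https://news.example.com/a", "https://mysite.example.com/guide"),
    ("https://news.example.com/b", "https://mysite.example.com/guide"),
    ("https://blog.example.org/x", "https://mysite.example.com/pricing"),
    ("https://forum.example.net/t", "https://mysite.example.com/"),
]
new = old[2:]  # the two news.example.com links are gone
report = summarize_lost_links(old, new)
print(report["lost_share"])                       # 0.5
print(report["by_source_domain"].most_common(1))  # [('news.example.com', 2)]
```

A high `lost_share` points to a sitewide problem, while losses concentrated on one target page or one referring domain narrow the investigation.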

After you locate the problem(s), analyze every page that has lost backlinks. Consider their content, structure, visual elements, and so on.

More importantly, list the pages on your site that link internally to the affected ones. The Screaming Frog SEO Spider can help you do this.

Now that you have two lists of pages (those that lost links and the ones that are internally connected with them), analyze your backlink sources. You need to figure out why they stopped pointing to your site.

- Were the original pages deleted?

- Did they change URLs?

- Was their content updated?

- Were their design and structure enhanced?

From there, start working to regain the lost backlinks:

- Contact site owners or webmasters and ask them to link back to you (in case links were removed by mistake).

- Update the affected pages to convince site owners or webmasters that they should place links to your site (this will work if links have been deleted intentionally).

- Replace lost backlinks with new ones through other link building methods.

Bonus tip! Make sure your site hasn’t been attacked with spammy backlinks. This negative SEO method can be effective: competitors simply purchase tons of low-quality links and point them toward your site. As a result, your link profile gets worse, and Google may downgrade your entire website. If that’s the case, check Moz’s Domain Authority (DA) and Spam Score metrics to weed out low-quality backlinks.
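As a rough illustration of that filtering step, the sketch below shortlists referring domains that pair a low Domain Authority with a high Spam Score. It assumes Spam Score is expressed as a 0–100 percentage, as in Moz’s current version of the metric; the thresholds, the `Backlink` class, and the domains are illustrative assumptions. A low DA on its own is deliberately not flagged, since many small but legitimate sites have low DA.

```python
from dataclasses import dataclass


@dataclass
class Backlink:
    source_domain: str
    domain_authority: int  # Moz DA, 0-100
    spam_score: int        # assumed: Moz Spam Score as a 0-100 percentage


def suspicious_domains(links, max_da=10, min_spam=60):
    """Return referring domains that combine low authority with a high spam score.

    Thresholds are illustrative assumptions; treat the result as a shortlist
    for manual review, not an automatic verdict.
    """
    return sorted({
        link.source_domain
        for link in links
        if link.domain_authority <= max_da and link.spam_score >= min_spam
    })


links = [
    Backlink("spammy-directory.example", 3, 85),
    Backlink("casino-links.example", 8, 70),
    Backlink("industry-news.example", 55, 4),
    Backlink("small-blog.example", 6, 10),  # low DA but clean: not flagged
]
print(suspicious_domains(links))  # ['casino-links.example', 'spammy-directory.example']
```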

Although revamping your entire backlink profile can be a daunting task, don’t turn a blind eye. Keep building new backlinks until you offset the effects of the rankings drop. The faster you do it, the better.

4. Audit Your Content

Content has a major influence on the SERPs of any website.

First, it feeds search engines data about your site. The better search spiders understand how your site fits into a specific niche, the higher they are likely to rank it.

Second, content is what brings visitors to your site. They need information, and the higher the quality of that information, the better your engagement metrics will be. CTR, bounce rate, and average time on page can impact rankings.

Useful and valuable content can also earn backlinks from trustworthy resources, and dozens of trustworthy backlinks typically lead to higher rankings.

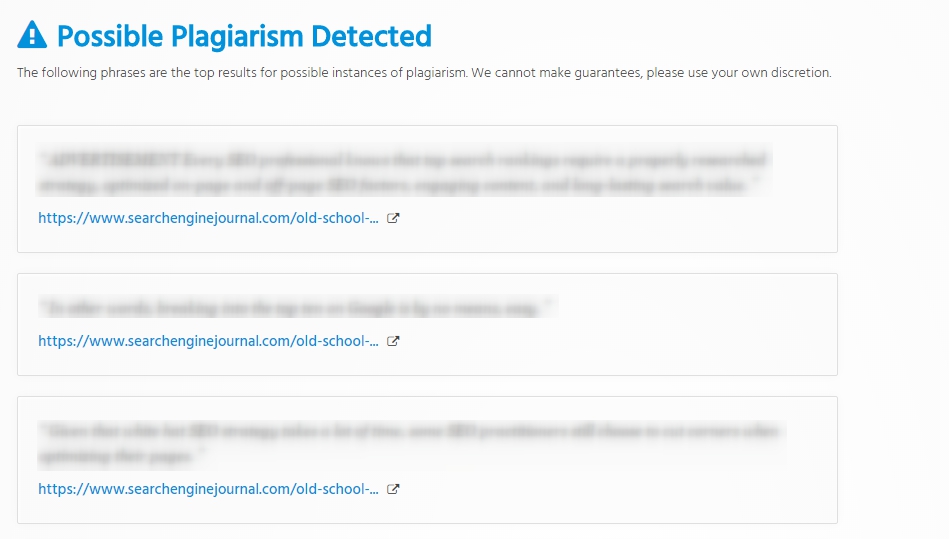

My point is that content is pretty important, and you have to analyze it to figure out if it might have somehow triggered a ranking drop. Specifically, check to make sure your content is unique.

To ensure uniqueness, simply run your site through a plagiarism scanner such as Viper, Quetext, or Plagiarisma. You can also use a built-in plagiarism checker at the Small SEO Tools website.
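Commercial scanners don’t fully document how they work, but a common way to measure how much text two pages share is to split each into overlapping five-word sequences ("shingles") and compute their Jaccard similarity. A minimal sketch, assuming you already have the plain text of both pages; the function names and the shingle length are illustrative choices.

```python
import re


def shingles(text, k=5):
    """Set of overlapping k-word sequences from lowercased text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard_similarity(text_a, text_b, k=5):
    """Shared shingles divided by all distinct shingles (0.0 to 1.0)."""
    a, b = shingles(text_a, k), shingles(text_b, k)
    if not a or not b:
        return 0.0  # too short to compare meaningfully
    return len(a & b) / len(a | b)


original = ("Links from trustworthy websites are one pillar that enables "
            "a site to perform well in search results")
suspect = original + " plus a few extra words at the end"
print(round(jaccard_similarity(original, suspect), 2))  # 0.62
```

Scores close to 1.0 suggest a near-verbatim copy; where you draw the line for "plagiarized" is a judgment call.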

The example below displays results for one of my articles at SEJ. These URLs all point to the originally posted article so I know everything is fine.

However, if you find your content on third-party sites, act immediately. Here is what you can do:

- Reach out to the site owner and ask them to delete the content that is used without your permission.

- Contact a hosting provider to report plagiarized content and request that they remove it.

If this doesn’t help, you can take the legal route (e.g., sue the site owner) or simply update the content on your site. Reporting the violation to Google is also an option: just use the Webspam report form.

Dealing with plagiarized content is fairly simple. Neither site owners nor hosting providers want you to take legal action against them.

And yet, sometimes you will have to give in and just update the content on your own website. This could be the case if you target markets where reaching out to the authorities is futile.

5. Analyze Your Site Structure & Usability

Nobody likes clumsy websites — neither users nor search crawlers.

Outdated visual elements, mile-long conversion forms, confusing CTAs, links that lead nowhere, and pages that take forever to load: users come across these problems every single day, and they hate them.

These elements all lead to poor engagement and lower rankings.

As an SEO professional, you should work with designers, developers, and usability experts to make sure every change and fix in a site’s design is justified from an SEO perspective.

Your top-priority task is to ensure a site is redesigned in such a way that both users and search crawlers can navigate it easily.

Remember: Website structure impacts your SEO.

A site’s structure isn’t your only concern, though.

Implementing any change on a website (even if it is just content) without an SEO process behind it is a big no-no. This is because SEO is responsible for:

- Ensuring every page can be crawled and indexed.

- Maintaining proper interlinking of inner pages (e.g. anchor text, page depth, link paths).

- Keeping high-quality backlinks pointing to necessary pages.

- Dealing with duplicate pages and redirects.

- Optimizing URL structure and length.

- Making content SEO-friendly.
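Page depth, one of the interlinking factors above, is the number of clicks needed to reach a page from the homepage, which a breadth-first search over your internal links computes directly. The sketch assumes you have already turned a crawl (for example, from a tool like Screaming Frog) into a mapping of each page to the pages it links to; that format and the sample paths are hypothetical.

```python
from collections import deque


def click_depths(links, home="/"):
    """Breadth-first search from the homepage over an internal link graph.

    links: {page: [pages it links to]} (hypothetical crawl-derived format).
    Returns {page: minimum number of clicks from home}.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depths:  # first visit in BFS = shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


links = {
    "/": ["/blog", "/pricing"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/blog/post-2"],
    "/old-landing": ["/pricing"],  # nothing links here
}
depths = click_depths(links)
orphans = set(links) - set(depths)
print(depths)   # {'/': 0, '/blog': 1, '/pricing': 1, '/blog/post-1': 2, '/blog/post-2': 3}
print(orphans)  # {'/old-landing'}
```

Pages that never appear in the result are orphans: nothing reachable from the homepage links to them. Comparing depths before and after a redesign is one quick way to spot broken link paths.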

Your site’s structure and usability are tricky. They can trigger a rankings drop at any time, and the cause is not always obvious. So if anything goes wrong, contact your designers and UX pros immediately to analyze what happened to the affected pages. For instance, someone from your team might have:

- Changed a page’s URL.

- Added an intrusive pop-up that triggered a high bounce rate.

- Removed targeted keywords from content and tags.

- Unintentionally merged several pages.

- Placed content that has not been optimized.

- Tweaked a page’s design, resulting in code errors.

- Messed up the internal linking structure and backlinks.

- Deleted a crucial piece of content.

Keep your finger on the pulse of everything that happens or is due to happen on your site. Designers and usability professionals may not know much about search engine optimization, so you should coordinate with them to avoid SEO-related mistakes.

6. Review Your Site Code

Your developers could also be responsible for poor search rankings. Mistakes can happen, such as:

A site is hidden from indexation in robots.txt

Every site that is updated on a regular basis should have a dev version. Basically, this is a copy of the site that is used to implement and test new features before moving them to the live website.

A dev site is typically blocked in .htaccess (e.g., with password protection) and robots.txt so that search engines don’t crawl and index its duplicate pages.

Mistakes sometimes occur when developers move new functionality to the live site and forget to update robots.txt to allow crawling.

A specific page or even an entire section of the website can remain hidden from search bots, eventually leading to a rankings drop.

To prevent a mistake like that, pay attention to what your developers do. Check that .htaccess and robots.txt files are set up correctly after your developers implement new features on the site.
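For the robots.txt part of that check, Python’s standard library includes `urllib.robotparser`, which reports whether a given URL is disallowed for a user agent. A minimal sketch with made-up URLs and robots.txt contents; it does not cover .htaccess rules such as password protection, which have to be checked separately.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt content disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


urls = [
    "https://www.example.com/",
    "https://www.example.com/new-feature/",
    "https://www.example.com/admin/login",
]
# robots.txt accidentally carried over from the dev site: blocks everything
print(blocked_urls("User-agent: *\nDisallow: /\n", urls))
# intended production robots.txt: only the admin area is blocked
print(blocked_urls("User-agent: *\nDisallow: /admin/\n", urls))
```

Python’s parser applies rules in file order (first match wins), while Google uses the most specific matching rule, so results can differ for files that mix overlapping Allow and Disallow lines.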

A site’s pages are tagged with “noindex, nofollow”

The same scenario is often triggered by “noindex, nofollow” meta tags.

Developers sometimes add a “noindex, nofollow” tag to a page while releasing new functionality and then forget to remove it (or switch it to “index, follow”). Search bots are told not to index the page, and eventually your site drops significantly in search results.

The solution is fairly simple.

Check to ensure that your developers haven’t accidentally made any SEO-specific mistakes in your website’s code after every update or fix.

Make it a rule that your developers should notify you to look through the updated pages every time a change is made.
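Part of that review can be automated by scanning each updated page’s HTML for a robots meta tag. The sketch below uses Python’s built-in `html.parser`; the class and function names and the sample markup are illustrative. It only reads `<meta name="robots">` and `<meta name="googlebot">` tags, so a noindex sent via the `X-Robots-Tag` HTTP header would need a separate check.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # html.parser lowercases tag and attribute names; values may be None
        values = {name: (value or "") for name, value in attrs}
        if values.get("name", "").strip().lower() in {"robots", "googlebot"}:
            for directive in values.get("content", "").split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def robots_directives(html):
    """Return the set of lowercased robots directives found in the HTML."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives


page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
if "noindex" in robots_directives(page):
    print("Warning: page is set to noindex")
```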

301 redirects are placed incorrectly

Google and other search engines should be instructed to treat the www and non-www versions of a site as one, using a 301 redirect from one to the other. If you are into technical SEO, you can try to do it on your own, but I strongly advise that you ask a certified developer for help.

The problem is that even developers sometimes place 301 redirects incorrectly. As a result, you can end up with duplicate pages, which search engines may quickly downgrade.

Make sure your developers are properly instructed on how to set up 301 redirects. A single error can ruin it all for your site, so be careful.
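A quick way to verify the www/non-www setup is to follow each variant’s redirect chain and confirm that it reaches the preferred URL through a single 301. The sketch below works on recorded responses rather than live requests; it assumes you collected each URL’s status code and `Location` header with an HTTP client that does not follow redirects automatically. The URLs, the `responses` mapping, and the function names are hypothetical, and the example also includes http/https variants in the check.

```python
REDIRECT_CODES = {301, 302, 303, 307, 308}


def follow_redirects(start, responses, max_hops=10):
    """Follow a redirect chain through recorded responses.

    responses: {url: (status_code, location_or_None)} (hypothetical data).
    Returns a list of (url, status_code) hops; the last hop is the final URL.
    """
    hops = []
    url = start
    for _ in range(max_hops):
        status, location = responses[url]
        hops.append((url, status))
        if status not in REDIRECT_CODES:
            return hops
        url = location
    raise RuntimeError(f"redirect loop or chain longer than {max_hops} hops from {start}")


def check_canonical_host(variants, canonical, responses):
    """Report variants that don't reach `canonical` via exactly one 301."""
    problems = {}
    for variant in variants:
        hops = follow_redirects(variant, responses)
        final_url, final_status = hops[-1]
        codes = [status for _, status in hops[:-1]]
        if final_url != canonical or final_status != 200:
            problems[variant] = f"ends at {final_url} with status {final_status}"
        elif variant != canonical and codes != [301]:
            problems[variant] = f"expected a single 301, got {codes}"
    return problems


responses = {
    "http://example.com/": (301, "https://example.com/"),
    "https://example.com/": (302, "https://www.example.com/"),  # temporary redirect: a mistake
    "http://www.example.com/": (301, "https://www.example.com/"),
    "https://www.example.com/": (200, None),
}
print(check_canonical_host(list(responses), "https://www.example.com/", responses))
```

Here the non-www variants are flagged: one goes through a 302 instead of a 301, and the other takes two hops to get there.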

7. Conduct a Competitor Analysis

Then again, you and your team might not be to blame for a rankings drop.

Sometimes your competitors do such a good job with their site, content, UX, and SEO that your website drops in search results simply because of the fierce competition.

When your competitors grow stronger, conducting a competitive SEO analysis is the next logical step.

Here is what you should do:

- Use SimilarWeb to see where your traffic and engagement stand.

- Analyze backlinks (new and lost) and content with Ahrefs.

- Check your social media stats in BuzzSumo.

Bonus tip! To avoid unpleasant surprises, I recommend monitoring competitor sites on a regular basis. You can do it manually or use Versionista, which unfortunately isn’t free but is an efficient tool for tracking changes on web pages.
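If you go the manual route, one low-tech option is to save the visible text of key competitor pages on a schedule and diff consecutive snapshots. A minimal sketch using Python’s standard `difflib`; the snapshot text and the function name are invented for illustration, and extracting clean text from a live page is left out.

```python
import difflib


def summarize_changes(old_text, new_text):
    """Return (added_lines, removed_lines) between two text snapshots of a page."""
    delta = difflib.ndiff(old_text.splitlines(), new_text.splitlines())
    added, removed = [], []
    for line in delta:
        # ndiff prefixes: "+ " added, "- " removed, "  " unchanged, "? " hints
        if line.startswith("+ "):
            added.append(line[2:])
        elif line.startswith("- "):
            removed.append(line[2:])
    return added, removed


old_snapshot = "Best CRM tools\nIntro paragraph\nComparison table of 5 tools"
new_snapshot = ("Best CRM tools for 2017\nIntro paragraph\n"
                "Comparison table of 10 tools\nFAQ section")
added, removed = summarize_changes(old_snapshot, new_snapshot)
print("Added:", added)
print("Removed:", removed)
```

A growing list of added lines (new sections, longer tables, an FAQ) is often the first sign that a competitor has expanded or re-optimized a page.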

Discover and analyze their top-performing traffic sources. Most likely, a competitor earned a high-quality link from a trustworthy resource, or one of their posts became a hit on social networks and now drives droves of new visitors.

Figure out if your competition has updated their pages (e.g., design, usability, content structure, crawlability).

- Has content become longer or better optimized?

- Are there any changes in their internal link structure?

- What about their engagement metrics?

Now that you have located and analyzed what pages work for your competitors, emulate their success.

Be careful, though.

If a change has paid off for your competitor, the same tactic might not guarantee instant success for you.

Magic tricks are always kept hidden, but if you and your team work hard enough, you will be able to figure them out and eventually beat your competition.

Conclusion

A drop in rankings is a challenge to every SEO professional. However, there are sure-fire methods to pinpoint and eradicate the issues that tanked your site’s performance in the search results.

Analyze your SEO campaign step by step, and I guarantee that you will locate the problem and put yourself back on the path to success.

Image Credits

Featured Image: garagestock/DepositPhotos

Screenshots by Sergey Grybniak. Taken March 2017.
