Google is rolling out what it says is the biggest step forward for search in the past 5 years, and one of the biggest steps forward in the history of Search altogether.

Google is using a new technology it introduced last year, called BERT, to understand search queries.

BERT stands for Bidirectional Encoder Representations from Transformers. Transformers are models that process each word in relation to all the other words in a sentence.

That means BERT models can interpret the appropriate meaning of a word by looking at the words that come both before and after it. This should lead to a better understanding of queries than processing words one by one in order.
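As a rough illustration only, the toy Python sketch below contrasts which words of a query are available when reading strictly left to right and which are available to a bidirectional model. It does not show how BERT is actually implemented. The function names are hypothetical, and splitting on whitespace is a simplifying assumption, since BERT really works on WordPiece subword tokens. The query is the "2019 brazil traveler to usa need a visa" example that Google shared.

```python
def left_to_right_context(tokens: list[str], i: int) -> list[str]:
    """Hypothetical helper: words a strictly left-to-right reader has seen before position i."""
    return tokens[:i]


def bidirectional_context(tokens: list[str], i: int) -> list[str]:
    """Hypothetical helper: words a bidirectional model can use for position i (before AND after)."""
    return tokens[:i] + tokens[i + 1:]


# Whitespace tokenization is a simplification; BERT itself uses WordPiece subwords.
query = "2019 brazil traveler to usa need a visa".split()
i = query.index("to")  # the preposition whose meaning depends on context

print(left_to_right_context(query, i))
# ['2019', 'brazil', 'traveler']
print(bidirectional_context(query, i))
# ['2019', 'brazil', 'traveler', 'usa', 'need', 'a', 'visa']
```

Only the bidirectional view includes "usa", the word after "to" that signals the direction of travel.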

What Does This Mean for SEOs and Site Owners?

Google's use of BERT models to understand queries will affect both search rankings and featured snippets. However, BERT will not be applied to every search.

For now, BERT will be used on 1 in 10 searches in the US in English. Google says BERT is so complex that it pushes the limits of Google’s hardware, which is probably why it’s only being used on a limited number of searches.

Google search users in the US should start to see more useful information in search results:

“Particularly for longer, more conversational queries, or searches where prepositions like ‘for’ and ‘to’ matter a lot to the meaning, Search will be able to understand the context of the words in your query. You can search in a way that feels natural for you.”

For featured snippets, Google is using a BERT model to improve results in all of the two dozen countries where featured snippets are available.

Google says BERT went through rigorous testing to ensure that the changes are actually more helpful for searchers. You can see some before and after examples in the next section.

Examples of BERT in Action

In testing, Google found that BERT helped its algorithms better grasp the nuances of queries and understand connections between words that it previously couldn’t.

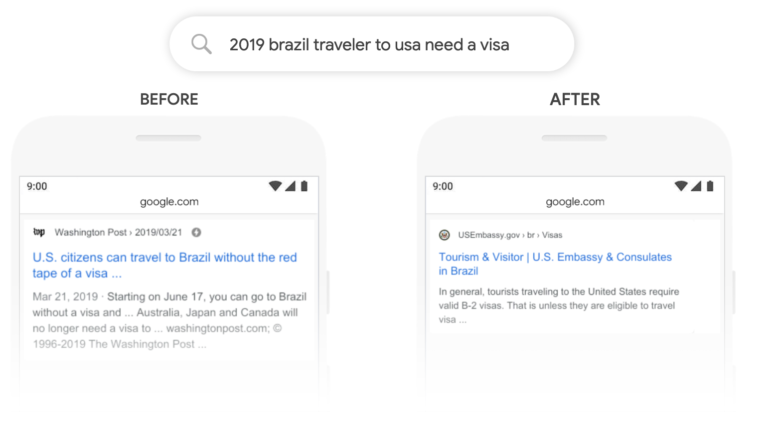

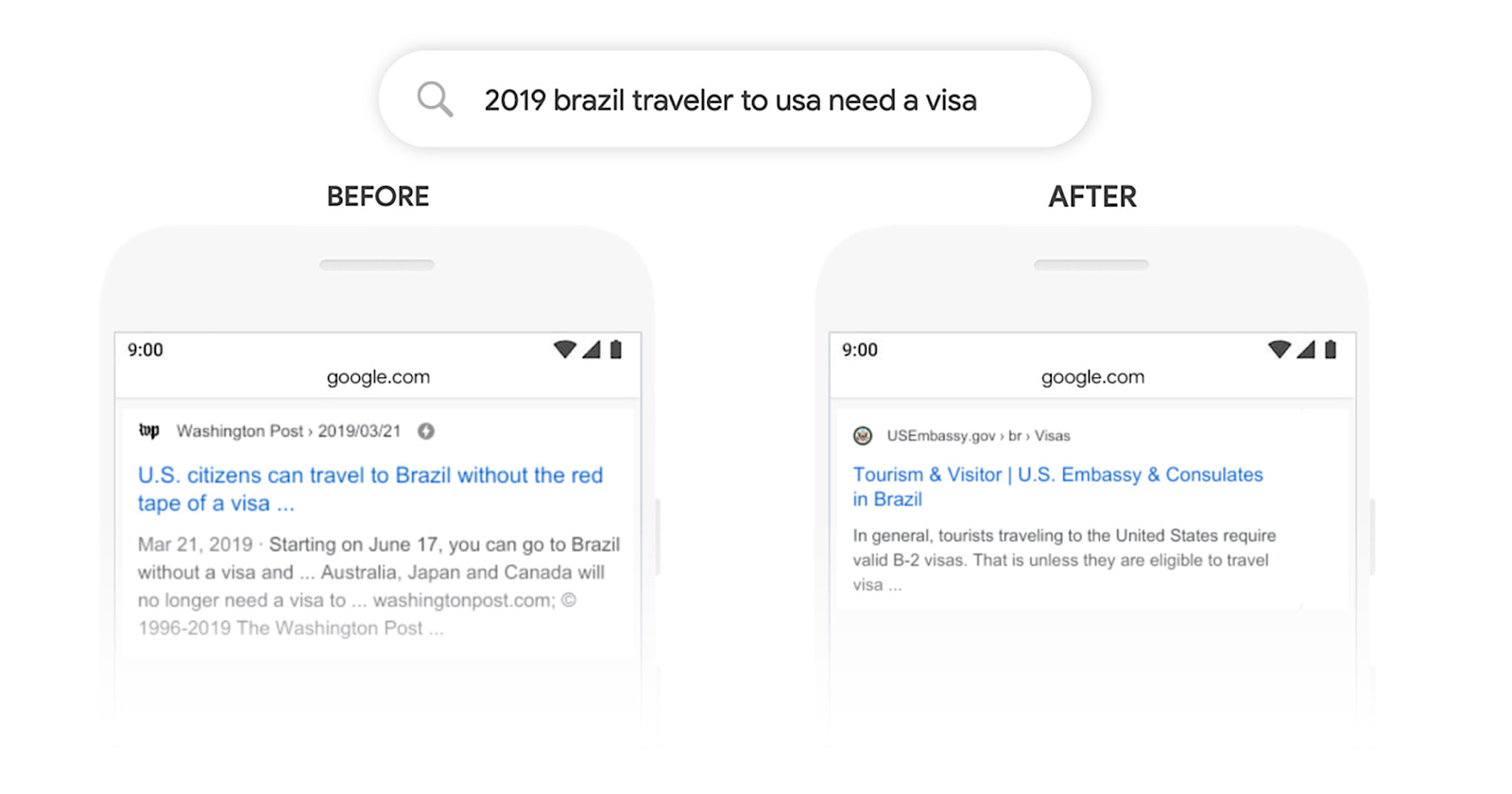

Here’s a search for “2019 brazil traveler to usa need a visa”. You can see how BERT helped Google understand that the query is about a Brazilian traveling to the USA, not the other way around.

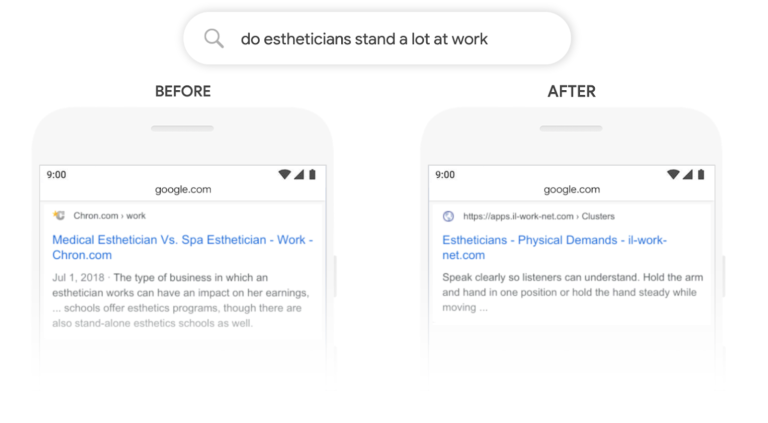

Here’s another example using the query “do estheticians stand a lot at work”. Previously, Google would interpret the words “stand” and “stand-alone” as meaning the same thing, which led to irrelevant search results.

Using BERT, Google can better interpret how the word “stand” is being used and understand that the query is related to the physical demands of being an esthetician.

Here are some more before/after examples of queries with and without BERT.

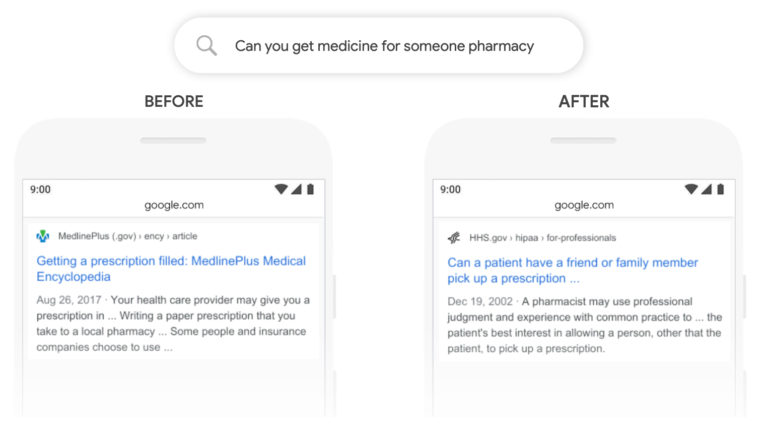

“Can you get medicine for someone pharmacy”: With the BERT model, we can better understand that “for someone” is an important part of this query, whereas previously we missed the meaning, showing general results about filling prescriptions.

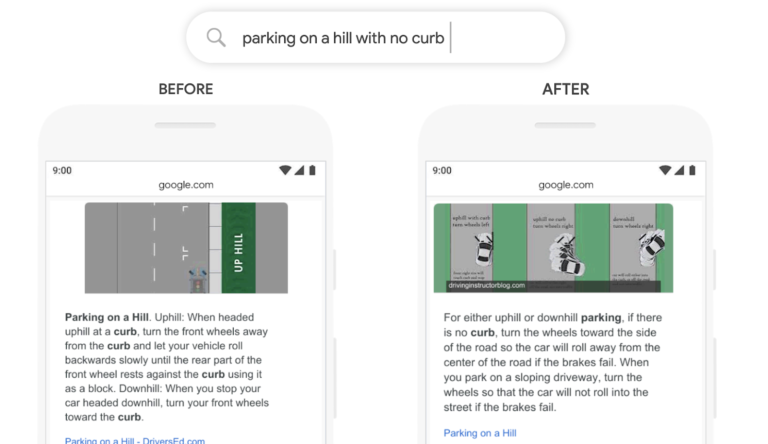

“Parking on a hill with no curb”: In the past, a query like this would confuse our systems–we placed too much importance on the word “curb” and ignored the word “no”, not understanding how critical that word was to appropriately responding to this query. So we’d return results for parking on a hill with a curb!

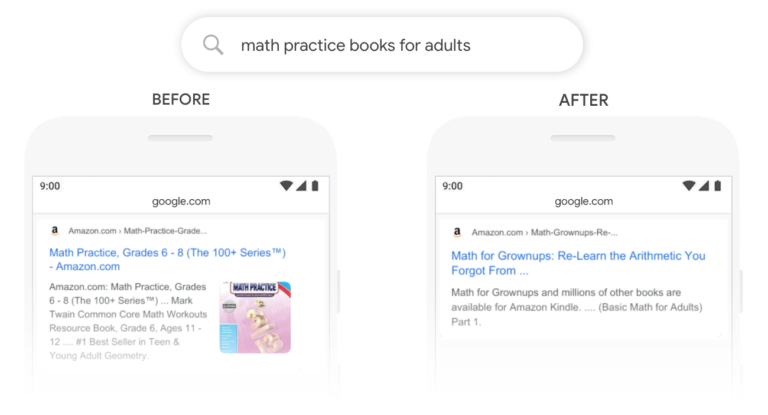

“math practice books for adults”: While the previous results page included a book in the “Young Adult” category, BERT can better understand that “adult” is being matched out of context, and pick out a more helpful result.

One thing to note about these examples is that they come from Google’s evaluations and might not exactly mirror what is displayed in live search results.

A Google spokesperson tells me the examples are simply meant to illustrate the types of language understanding challenges that BERT helps with, but there are of course many other queries where BERT will have an impact.

Looking Ahead

With this change Google aims to improve the understanding of queries, deliver more relevant results, and get searchers used to entering queries in a more natural way.

Google did not say to what extent this change will affect search rankings. Given that BERT is only being used on 10% of English queries in the US, the impact should be minimal compared to a full-scale algorithm update.

Understanding language is an ongoing challenge, and Google admits that, even with BERT, it may not get everything right. However, the company says it is committed to getting better at interpreting the meaning of queries.