Today at Google I/O, the company announced new Google Search features that use Google Lens and augmented reality (AR).

The new features combine the camera, computer vision, and AR to overlay information and content onto the user’s physical surroundings.

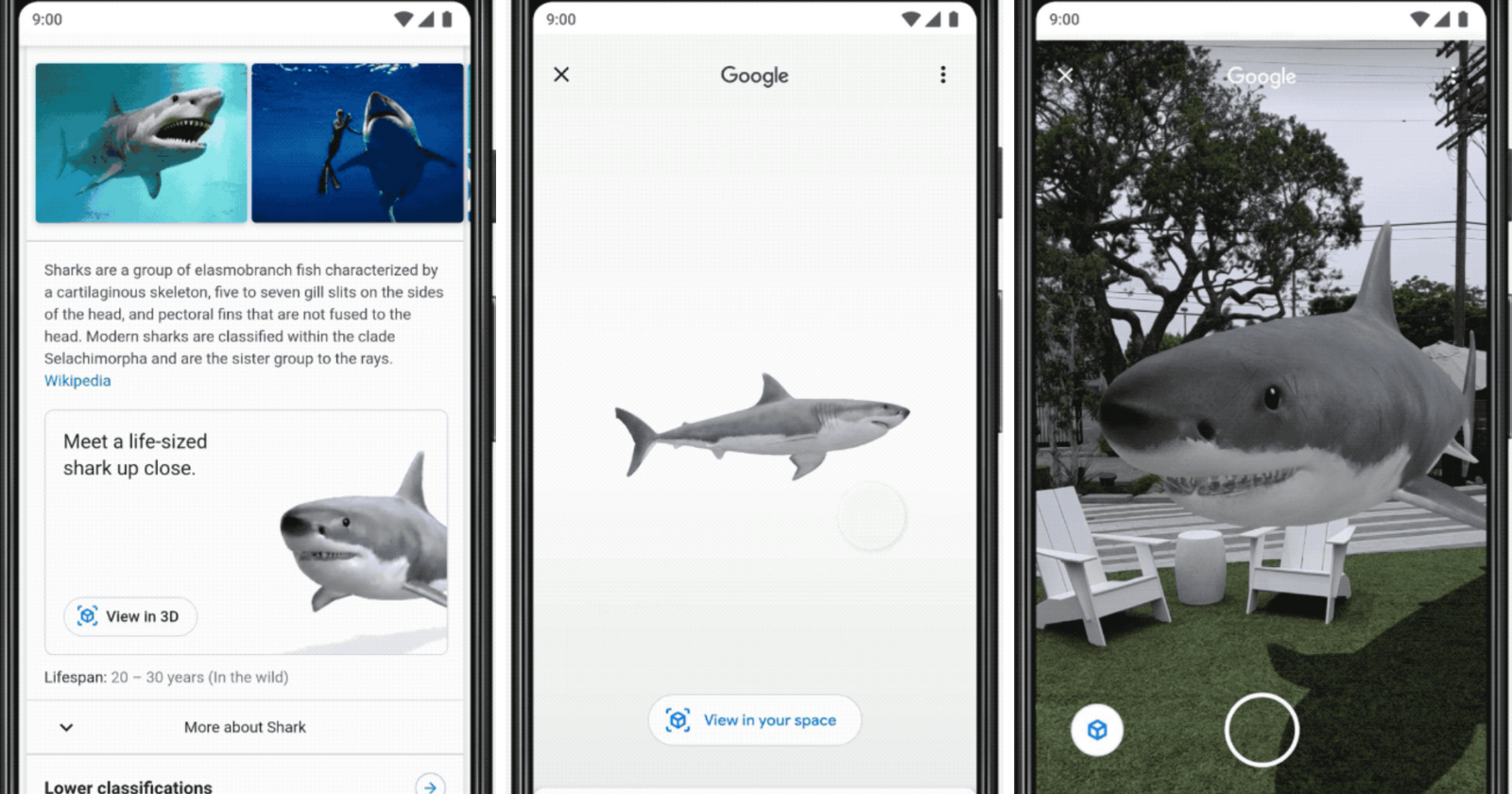

Later this month, AR features will roll out in search results, allowing users to view and interact with 3D objects.

Using a smartphone, people can search for a 3D model and place it in their physical space with their phone’s viewfinder.

Searching for an animal, for instance, brings up an option to view it in 3D and AR.

There are also practical uses for this technology. For example, the retailer Wayfair uses AR in its app to let shoppers see how furniture would look in their home.

In fact, Wayfair is one of several partners working directly with Google to have its 3D models surfaced in search results.

Other partners include:

- NASA

- New Balance

- Samsung

- Target

- Visible Body

- Volvo

- and more

New Google Lens Features

Google Lens, accessible from the search bar in Google’s mobile app, is getting an upgrade to provide more visual answers to visual questions.

Here are a few of the updates to Google Lens that are on the way:

- Restaurant menu search: Point Google Lens at a menu and get information about dishes, including reviews and photos.

- Automatic translations: Point Google Lens at text and translate it into over 100 languages.

- Text-to-speech: Point Google Lens at text to have it read aloud.

Google notes that the text-to-speech feature will launch first in the scaled-down Google Go app before coming to the main app.