Can images become more semantic?

Perhaps the selection of images shown in a set of image search results can be, guided by the image search labels that are associated with them.

In these days of Knowledge Graphs and entities, we are seeing Google search moving toward using information about entities to power search, and this is true with image search as well as text.

We can read about this in blog posts from Google, as well as patents from the search giant. One of those patents was updated recently, and it is worth looking at what has changed in the patent.

I found myself wondering how much meaning we might get from Google’s association of entities with images.

As I wrote this post and did some image searches, I found a lot of meaning, and a lot of history revealed in labels chosen for image searches.

I think the changes to the patent make sense in that light, too. I’ve included some examples of labels in this post to show what I mean.

On June 12, 2013, Chuck Rosenberg wrote a post on the Google Blog, Improving Photo Search: A Step Across the Semantic Gap.

In the post, he was identified as being part of the Image Search Team. He was probably selected to write that post because his name is also on an updated continuation patent from Google that covers some similar ground.

It is rare that we have a paper or a blog post to compare with a patent that has been filed, so you may want to stop and read that Google Blog post and then return to this one.

I like that the blog post talks about using Freebase-style machine IDs for the entities that appear in images for image search. The post tells us:

As in ImageNet, the classes were not text strings, but are entities, in our case we use Freebase entities which form the basis of the Knowledge Graph used in Google search. An entity is a way to uniquely identify something in a language-independent way. In English when we encounter the word “jaguar”, it is hard to determine if it represents the animal or the car manufacturer. Entities assign a unique ID to each, removing that ambiguity, in this case “/m/0449p” for the former and “/m/012x34” for the latter. In order to train better classifiers we used more training images per class than ImageNet, 5000 versus 1000. Since we wanted to provide only high precision labels, we also refined the classes from our initial set of 2000 to the most precise 1100 classes for our launch.
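To make the “jaguar” example concrete, here is a minimal Python sketch of how one text string can point to more than one entity, each with its own machine ID. The two IDs come from the quoted passage; the `Entity` class, the lookup table, the `candidates` function, and the descriptions are my own assumptions for illustration, not anything Google has published.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    mid: str          # Freebase-style machine ID
    description: str  # human-readable gloss (assumed, for illustration only)


# Hypothetical lookup table: one surface string can map to several entities.
ENTITIES_BY_NAME = {
    "jaguar": [
        Entity("/m/0449p", "jaguar (animal)"),
        Entity("/m/012x34", "Jaguar (car manufacturer)"),
    ],
}


def candidates(text: str) -> list[Entity]:
    """Return every entity that the given text string could refer to."""
    return ENTITIES_BY_NAME.get(text.strip().lower(), [])


for entity in candidates("Jaguar"):
    print(entity.mid, entity.description)
```

The word alone is ambiguous, but each machine ID is not, which is why a classifier that predicts IDs rather than words can label an image of the animal without confusing it with the car maker.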

The new version of the patent is:

System and method for associating images with semantic entities

Inventors: Maks Ovsjanikov, Yuan Li, Hartwig Adam and Charles Joseph Rosenberg;

Assignee: Google LLC

US Patent: 10,268,703

Granted: April 23, 2019

Filed: December 8, 2016

Abstract

A system and computer-implemented method for associating images with semantic entities and providing search results using the semantic entities. An image database contains one or more source images associated with one or more images labels. A computer may generate one or more documents containing the labels associated with each image. Analysis may be performed on the one or more documents to associate the source images with semantic entities. The semantic entities may be used to provide search results. In response to receiving a target image as a search query, the target image may be compared with the source images to identify similar images. The semantic entities associated with the similar images may be used to determine a semantic entity for the target image. The semantic entity for the target image may be used to provide search results in response to the search initiated by the target image.

The earlier version of this patent, of which this one is identified as a continuation, was filed on January 16, 2013, and is also titled System and method for associating images with semantic entities.

Comparing the Claims

With continuation patents, the body and the title of the patent usually remain the same, but the claims differ from one version to the other.

It’s usually worth comparing the two to see what has changed. The claims in a patent are what a patent examiner from the USPTO looks at when deciding whether to grant a patent.

The original first claim from the version of the patent filed in 2013 tells us:

1. A computer-implemented method comprising: receiving, using one or more computing devices, an input image as a search query; determining, using the one or more computing devices, reference images that are identified as matching the input image, each image of the reference images being associated with at least one entity including text information related to that image; selecting, using the one or more computing devices, from among multiple entities that are collectively associated with the reference images, one or more particular entities to associate with the input image; identifying, using the one or more computing devices, a particular entity from amongst the one or more particular entities based on a number of the reference images that are associated with one or more entities that include the text information of the identified particular entity, wherein the text information of the identified particular entity is configured to disambiguate from other different entities that include common text information; and storing, using the one or more computing devices, data that associates the input image with the identified particular entity.
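One way to read the “based on a number of the reference images” part of that claim is as a simple vote: each reference image that matches the input image votes for the entities it is associated with, and the entity with the most votes wins. The Python sketch below assumes that reading. The function name `pick_entity` and the input format are hypothetical, and the visual matching step that finds the reference images is assumed to have already happened.

```python
from collections import Counter


def pick_entity(reference_entities):
    """Pick the entity backed by the largest number of reference images.

    reference_entities: a list with one collection of entity IDs per
    matching reference image (an assumed input format).
    Returns None when there are no reference images to vote.
    """
    votes = Counter()
    for entities in reference_entities:
        # Each reference image votes at most once per entity.
        votes.update(set(entities))
    if not votes:
        return None
    # Highest vote count wins; ties are broken by ID so the result is stable.
    return min(votes, key=lambda entity_id: (-votes[entity_id], entity_id))


matches = [
    {"/m/0449p"},
    {"/m/0449p", "/m/012x34"},
    {"/m/012x34"},
    {"/m/0449p"},
]
print(pick_entity(matches))
```

Here three of the four reference images are associated with “/m/0449p”, so that entity would be stored for the input image. A real system would presumably weight votes by how closely each reference image matches, which this sketch leaves out.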

The first claim from the newer version of the patent is a little different:

1. A method for associating source images with semantic entities and providing search results, the method comprising: associating, by one or more computing devices having one or more processors, labels to a plurality of source images based on a frequency with which a source image of the plurality of source images appears in search results for a text string, the one or more labels corresponding to the text string; identifying, by one or more computing devices, additional labels for a particular source image of the plurality of source images based on a comparison between features of the particular source image and other source images of the plurality of source images; associating, by the one or more computing devices, the additional labels with the particular source image; generating, by the one or more computing devices, a document representing the particular source image using the particular source image and any labels associated with the particular semantic entity; and analyzing, by the one or more computing devices, the document to identify one or more semantic entities for each of the particular source images, wherein each semantic entity defines a concept with a particular ontology.

I’m not going to break down the patents themselves, especially since the blog post does such a good job of capturing the ideas behind the semantic associations taking place in image search.

I do think it is interesting to see what changed from the earlier version of the patent to the version that was just granted.

We see some new approaches and ideas appearing in the updated version of the first claim.

- Labels may be associated with images based on how often those images appear in search results for a text string, with the labels corresponding to that text string.

- Each semantic entity defines a concept with a particular ontology.
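The first of those ideas, labeling images by how often they show up in results for a text string and then building a document from the labels, can be sketched in a few lines of Python. This is only a rough illustration under my own assumptions: the log format, the `min_count` threshold, and both function names are hypothetical, and the claim’s step of adding more labels by comparing image features is left out.

```python
from collections import Counter, defaultdict


def labels_from_search_logs(results_by_query, min_count=2):
    """Label each image with the queries it appears under often enough.

    results_by_query: iterable of (query_text, [image_id, ...]) pairs,
    one pair per set of search results (an assumed log format).
    min_count: hypothetical threshold for "appears frequently".
    """
    counts = defaultdict(Counter)
    for query, image_ids in results_by_query:
        for image_id in set(image_ids):
            counts[image_id][query.lower()] += 1
    return {
        image_id: sorted(q for q, n in per_query.items() if n >= min_count)
        for image_id, per_query in counts.items()
    }


def build_document(image_id, labels):
    """Join an image's labels into a text document for later entity analysis."""
    return " ".join(labels.get(image_id, []))


logs = [
    ("george washington", ["img1", "img2"]),
    ("George Washington", ["img1"]),
    ("mount vernon", ["img1"]),
    ("mount vernon", ["img1", "img3"]),
]
labels = labels_from_search_logs(logs)
print(build_document("img1", labels))
```

In this toy log, only `img1` appears often enough under both queries to earn labels, so its document becomes the text that a later step would analyze to find semantic entities.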

When I do an image search in Google for George Washington, the top of the search results shows a carousel of topics related to the images from that search, and those topics look like they could be described as concepts from a particular ontology.

Image Search Labels

By organizing images by the entities that they may identify, and then providing labels that identify concepts from a particular ontology, we see images being organized semantically.

Some of the image search labels that are associated with a search for George Washington include:

- painting

- president

- Portrait

- mount vernon

- quotes

- Gilbert Stuart

- Hamilton

- American Revolution

- farewell address

- battle

- Alexander Hamilton

- family

- Cartoon

- Abraham Lincoln

- venn diagram

- Clip art

- President’s day

- heaven

- Silhouette

- coloring

- apotheosis

- animated

- Thomas Jefferson

These cover a mix of image types, events from George Washington’s life, places associated with him, and people he is shown with in images.

It’s possible that these labels come from text strings that produce search results for George Washington.

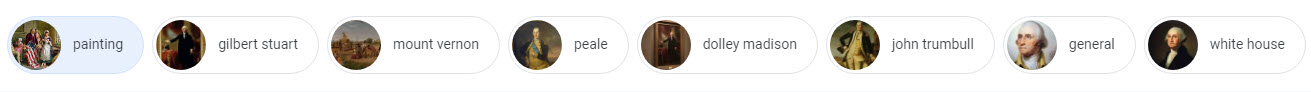

If you perform an image search for Donald Trump, you see very different labels than the ones for George Washington, including one for Twitter.

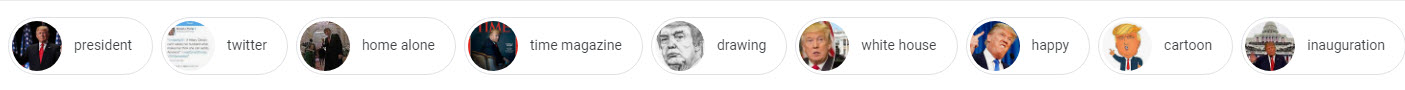

The same is true of a search for John F. Kennedy.

Looking up different Presidents and seeing the labels that have been selected for each is something of a history lesson, which to me fulfills the idea of “associating images with semantic entities.”

Image Credits

All screenshots taken by author, April 2019