Every webmaster’s and SEO professional’s nightmare is seeing a sudden plunge in those hard-earned Google rankings. The chaos that ensues in trying to pinpoint just what happened is never a welcome experience.

The good news is that Google has been more transparent than ever in recent months. It offers new information, tools and methods to help webmasters beg for mercy, such as Webmaster Tools alerts, re-inclusion requests and the Link Disavow tool.

Begging for mercy isn’t always the best course of action, however; it depends on what caused the drop in ranking. It should be a last resort, used only once you have identified the cause with a great deal of certainty, done everything possible to resolve it and still don’t see a positive result. To take the right course of action after a Google manual review, the first step is to know whether a manual review actually took place.

Google Transparency

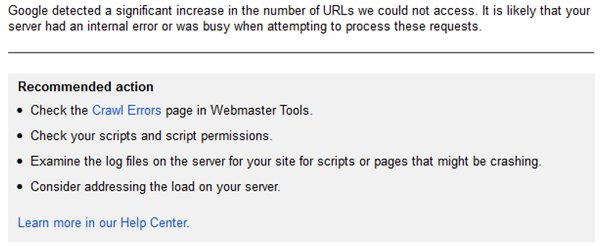

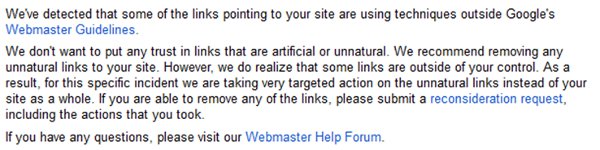

Within Google Webmaster Tools, Google has been providing alerts when it detects issues that might impact site performance. Anything from server errors to important pages being blocked in a robots.txt file to noticeable traffic drops to the dreaded detection of unnatural inbound links is reported to those with owner-level access to the Webmaster Tools account. These alerts give webmasters insight into whether a manual review actually occurred.

To put the level of transparency Google began to project in 2012 into perspective, the number of messages sent through Webmaster Tools in January and February of that year alone surpassed all messages from 2011.

Algorithm Update vs. Manual Review

When a website experiences a sudden drop in rankings, the first reaction of those responsible for the site is often to try to make an immediate case to Google. SEO pros assume that a manual review took place, submit a re-inclusion request to Google, tell them how great their site is and expect Google to agree.

This is a dangerous practice. In many cases, the webmaster fails to understand that a routine algorithm update caused the blip in rankings, which a re-inclusion request will not remedy. By submitting one anyway, the webmaster unintentionally invites Google to scrutinize the website and its inbound-link profile. This gives Google an opening to find something more damaging on the site than the bot initially found.

When experiencing a drop in search rankings, it’s necessary to research whether there were any impactful changes in Google’s algorithm and to map the timeline of the ranking drop against the recent updates. Jumping ahead to a re-inclusion request can lead to additional penalties, de-indexing, etc. In the worst-case scenario, it can lead to an actual manual review of your site.
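The timeline mapping described above can be sketched in a few lines of Python. This is only an illustration: the update names and dates below are hypothetical placeholders rather than real Google updates, and the seven-day window is an assumed value you would tune to your own data.

```python
from datetime import date, timedelta

# Hypothetical update log. Names and dates are placeholders for illustration;
# replace them with the update dates you have actually researched.
KNOWN_UPDATES = {
    "Example update A": date(2012, 3, 5),
    "Example update B": date(2012, 8, 14),
}


def updates_before_drop(drop_date, updates, window_days=7):
    """Return (name, date) pairs for updates that landed on the drop date
    or up to window_days before it, oldest first."""
    window = timedelta(days=window_days)
    matches = [
        (name, when)
        for name, when in updates.items()
        if timedelta(0) <= drop_date - when <= window
    ]
    return sorted(matches, key=lambda pair: pair[1])


if __name__ == "__main__":
    # A drop noticed two days after the first placeholder update.
    for name, when in updates_before_drop(date(2012, 3, 7), KNOWN_UPDATES):
        print(f"{name} rolled out on {when.isoformat()}")
```

If the function returns an empty list, no logged update closely precedes the drop, which is a hint (not proof) that something other than a routine algorithm change is involved.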

The correct plan of action is to understand which of the site’s practices the recent algorithm updates target and take the necessary steps to comply.

There is no benefit to submitting a re-inclusion request if your website wasn’t manually reviewed by Google. If a request is submitted anyway, Google responds with a Webmaster Tools notification stating, “There’s no need to file a reconsideration request for your site, because any ranking issues you may be experiencing are not related to a manual action taken by the webspam team.”

Submitting a Re-Inclusion Request

Before submitting a re-inclusion request, it must be established that a manual review occurred and that everything possible has been done to clean up the website.

The easiest, and most certain, way to know if your site has been manually reviewed is to receive a Google Webmaster Tools alert. The best case in a bad scenario is that Google will inform you why your website is no longer ranking. If that doesn’t happen, deeper digging is necessary.

Below is an example of the type of communications you should expect to receive from Google when submitting a re-inclusion request.

Perhaps, for example, there have been no known relevant algorithm updates but the site was engaged in unnatural linking practices. Because the most common reason for a manual review is the detection of unnatural links, the link profile of the website must be analyzed to identify potential spam links or trends that would appear unnatural.
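One trend that can look unnatural is a single keyword-rich anchor text dominating the link profile. The sketch below measures each anchor text's share of inbound links and flags any that exceed a cutoff. The 30 percent cutoff and the sample anchors are assumptions made for illustration; Google does not publish such a threshold, so treat the output as a prompt for manual review and nothing more.

```python
from collections import Counter


def anchor_text_shares(anchors):
    """Map each normalized anchor text to its share of all inbound links."""
    normalized = [text.strip().lower() for text in anchors]
    total = len(normalized)
    if total == 0:
        return {}
    counts = Counter(normalized)
    return {text: count / total for text, count in counts.items()}


def flag_overused_anchors(anchors, max_share=0.3):
    """Return anchor texts whose share exceeds max_share (an assumed cutoff)."""
    shares = anchor_text_shares(anchors)
    return sorted(text for text, share in shares.items() if share > max_share)


if __name__ == "__main__":
    # Hypothetical anchor texts exported from a backlink report.
    sample = ["cheap widgets"] * 6 + ["Acme Widgets"] * 2 + ["acme.example"] * 2
    print(flag_overused_anchors(sample))
```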

Once links that violate Google’s guidelines are identified, every attempt must be made to remove all questionable links. This includes reaching out to the webmaster(s) hosting the links and requesting removal. All attempts to remove links should be documented so they can be included in the re-inclusion request.

Google further assisted webmasters by releasing the Link Disavow tool in October 2012. This handy tool can be found in Webmaster Tools when logged in. It is a way to tell Google about any low-quality links you have attempted to have removed to no avail. It does not guarantee that Google will disregard those links. Instead, Google’s Link Disavow tool should be used as a means of informing Google that a removal attempt has been made and failed.

The Link Disavow tool should be used with caution. Webmasters need a high level of certainty that the links are harming the site and that all attempts have been made to remove them.
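The Link Disavow tool takes a plain text file with one entry per line: either a full URL, or `domain:` followed by a domain name to cover every link from that domain. Lines beginning with `#` are treated as comments. A minimal sketch of generating such a file from a log of removal attempts follows. The `RemovalAttempt` record, its field names and the sample entries are hypothetical and exist only for this example.

```python
from dataclasses import dataclass


@dataclass
class RemovalAttempt:
    """One documented outreach attempt (hypothetical record for this sketch)."""
    target: str   # full URL, or "domain:example.com" for a whole domain
    note: str     # what was tried and when
    removed: bool  # True if the link was actually taken down


def build_disavow_file(attempts):
    """Build disavow file text listing only links that are still live,
    each preceded by a comment documenting the failed removal attempt."""
    lines = []
    for attempt in attempts:
        if attempt.removed:
            continue  # nothing to disavow once the link is gone
        lines.append(f"# {attempt.note}")
        lines.append(attempt.target)
    return ("\n".join(lines) + "\n") if lines else ""


if __name__ == "__main__":
    log = [
        RemovalAttempt("domain:spam.example", "Emailed owner twice, no reply", False),
        RemovalAttempt("http://blog.example/post", "Removed on request", True),
    ]
    print(build_disavow_file(log), end="")
```

Skipping links that were successfully removed keeps the file limited to links where outreach genuinely failed, which matches the cautious use described above.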

Only after all issues that violate Google’s quality guidelines have been identified and cleaned up should a re-inclusion request be filed. This can be done by logging into Google Webmaster Tools and accessing this page: http://bit.ly/TMGDoX. Be sure to detail within the request all attempts made to clear up any issues. Once it is submitted, it can take weeks or months before rankings improve or Google responds.

In the meantime, the best thing to do is continue creating quality content for the site and staying in line with Google’s quality guidelines.