This is a sponsored post written by Botify. The opinions expressed in this article are the sponsor’s own.

One of the greatest challenges of SEO is to make sure search engines have visibility over your newest content. Otherwise, they may only display older content in search result pages.

You need to be aware that search engines probably don’t explore your entire website, because of crawl budget limitations.

Also, if your website publishes new content regularly (as e-commerce marketplaces, news publishers, classifieds websites and forums do), your most important content may not be discovered fast enough, or in full, or both.

Here are some important questions you should ask yourself to make sure you are not missing key traffic opportunities on your new pages:

How Much of Your New Content Does Google Explore?

To get an answer, we need to do two things:

- Get the inventory of new pages, with a crawler set to explore the website periodically (weekly or daily, for instance) to track changes.

- Find out which of these new pages are explored by search engines. Web server log files are the only source for this information: they record every single hit on the website, wherever it comes from – a user or a search engine robot.

A report that combines both aspects sheds light on Google’s behavior on your new pages.
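As a rough illustration of how the two sources can be combined, here is a minimal Python sketch. It assumes the crawl inventory is available as a set of URL paths, that the server writes logs in the common "combined" format, and that matching the string `Googlebot` in the user agent is good enough for a first pass (user agents can be spoofed, so a real report would also verify the requesting IP). The function names and sample data are hypothetical.

```python
import re

# Combined Log Format:
# ip ident user [time] "METHOD path PROTOCOL" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "(?:GET|HEAD) (?P<path>\S+) [^"]*" '
    r'\d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def new_pages(current_crawl, previous_crawl):
    """URLs found in the current crawl but not in the previous one."""
    return set(current_crawl) - set(previous_crawl)

def googlebot_paths(log_lines):
    """Paths requested by a client whose user agent mentions Googlebot."""
    paths = set()
    for line in log_lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            paths.add(match.group("path"))
    return paths

# Hypothetical sample data: two weekly crawls and two log lines.
previous = {"/", "/shoes/", "/shoes/red-sneaker"}
current = previous | {"/shoes/blue-sneaker", "/bags/tote"}
logs = [
    '66.249.66.1 - - [10/Mar/2015:06:25:11 +0000] '
    '"GET /shoes/blue-sneaker HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Mar/2015:06:26:40 +0000] '
    '"GET /bags/tote HTTP/1.1" 200 4096 "-" '
    '"Mozilla/5.0 (Windows NT 6.1) Firefox/36.0"',
]

fresh = new_pages(current, previous)
crawled = fresh & googlebot_paths(logs)   # new pages Google explored
not_crawled = fresh - crawled             # new pages Google has not seen yet
print(sorted(crawled), sorted(not_crawled))
```

The set difference gives the inventory of new pages, and the intersection with the log-derived paths gives the subset that Google actually requested.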

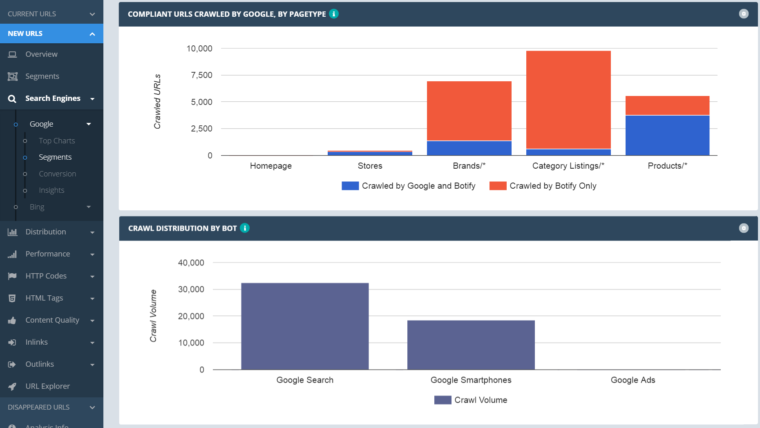

Let’s take a look at the example of an e-commerce website.

The first chart details the website as discovered by the website crawler, showing only new pages (those not found in the previous report, one week earlier). Only those in blue were explored by Google. The search engine’s behavior will vary depending on the type of page (product lists, product pages, etc.), so each type of page is shown in a separate bar.

Does your website aim to generate significant traffic from mobile devices? Then, you may also be interested in finding out which bot explored your new pages (Google’s generic bot used for desktop search traffic or its mobile bot), as shown in the bottom chart.

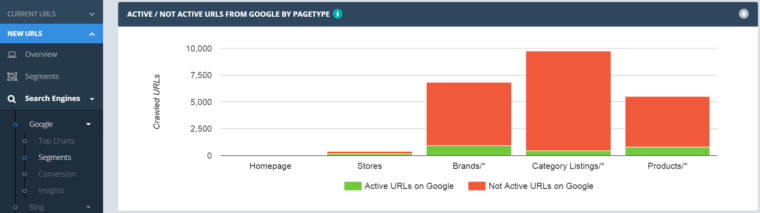
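To tell the two bots apart in the logs, one simple heuristic is to look at the user agent string. The sketch below assumes that Google's mobile crawler includes the token `Mobile` in its user agent while the generic crawler does not. The helper names and the sample hits are hypothetical.

```python
def googlebot_kind(user_agent):
    """Return 'mobile', 'desktop', or None if the hit is not from Googlebot."""
    if "Googlebot" not in user_agent:
        return None
    # Assumption: only the mobile crawler's user agent carries "Mobile".
    return "mobile" if "Mobile" in user_agent else "desktop"

def bots_per_page(hits):
    """Map each path to the set of Googlebot kinds that requested it."""
    seen = {}
    for path, user_agent in hits:
        kind = googlebot_kind(user_agent)
        if kind:
            seen.setdefault(path, set()).add(kind)
    return seen

DESKTOP_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
MOBILE_UA = (
    "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2272.96 "
    "Mobile Safari/537.36 (compatible; Googlebot/2.1; "
    "+http://www.google.com/bot.html)"
)

# Hypothetical (path, user agent) pairs extracted from the log files.
hits = [
    ("/shoes/blue-sneaker", DESKTOP_UA),
    ("/shoes/blue-sneaker", MOBILE_UA),
    ("/bags/tote", MOBILE_UA),
    ("/bags/tote", "Mozilla/5.0 (Windows NT 6.1) Firefox/36.0"),
]
print(bots_per_page(hits))
```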

Do New Pages Start Generating Traffic Right Away?

Among new pages explored by Google, some start generating traffic while others don’t. Those that do, a subset of the pages crawled by Google, are called active pages in the chart below (in green).

If the website crawler has visibility over the date the page was added to the website, for instance by extracting the publication date from article pages on a publishing website, then you can get even more insight. Look at the delay between each step in the organic traffic conversion funnel: publication date vs. date of first crawl by Google vs. date of first visit generated from Google results.
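The funnel delays come down to simple date arithmetic once the three dates are known for each page. The following sketch assumes you already have, per URL, the publication date (from the crawler), the first Googlebot hit and the first visit from Google results (both from the logs). The function name and the sample dates are hypothetical.

```python
from datetime import datetime

def funnel_delays(published, first_crawl, first_visit):
    """Return (publication-to-crawl, crawl-to-visit) delays.

    A step that has not happened yet is reported as None.
    """
    to_crawl = first_crawl - published if first_crawl else None
    to_visit = first_visit - first_crawl if first_crawl and first_visit else None
    return to_crawl, to_visit

# Hypothetical pages: (published, first Google crawl, first organic visit).
pages = {
    "/news/a": (datetime(2015, 3, 1, 8), datetime(2015, 3, 1, 14), datetime(2015, 3, 2, 9)),
    "/news/b": (datetime(2015, 3, 1, 9), datetime(2015, 3, 4, 9), None),
    "/news/c": (datetime(2015, 3, 2, 9), None, None),
}

delays = {url: funnel_delays(*dates) for url, dates in pages.items()}
for url, (to_crawl, to_visit) in delays.items():
    print(url, "crawl after:", to_crawl, "| first visit after crawl:", to_visit)
```

Pages with `None` in the first position were never crawled, and pages with `None` only in the second position were crawled but are not yet active.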

Does Your Website Structure Promote Your New Pages the Way They Deserve?

Some of your new pages weren’t even crawled by Google. Why is that?

Their depth in the website structure (how many clicks are necessary to reach them from the home page) and the number of internal links they receive heavily influence search engine crawl.
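Both metrics can be derived from the internal link graph that a crawler collects. Here is a minimal sketch, assuming the graph is stored as a dictionary mapping each page to the pages it links to. Depth is computed with a breadth-first search from the home page, and incoming links are counted once per linking page. The sample graph and function names are hypothetical.

```python
from collections import Counter, deque

def page_depths(links, home="/"):
    """Minimum number of clicks needed to reach each page from the home page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depths:          # first time reached = shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def inlink_counts(links):
    """Number of distinct pages linking to each page (self-links ignored)."""
    counts = Counter()
    for source, targets in links.items():
        for target in set(targets):
            if target != source:
                counts[target] += 1
    return counts

# Hypothetical internal link graph: page -> pages it links to.
links = {
    "/": ["/shoes/", "/bags/"],
    "/shoes/": ["/", "/shoes/red-sneaker", "/shoes/blue-sneaker"],
    "/bags/": ["/", "/bags/tote"],
    "/shoes/red-sneaker": ["/shoes/", "/shoes/blue-sneaker"],
}

depths = page_depths(links)
inlinks = inlink_counts(links)
print(depths["/bags/tote"], inlinks["/shoes/blue-sneaker"])
```

Pages that never appear in `depths` cannot be reached by following links from the home page at all.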

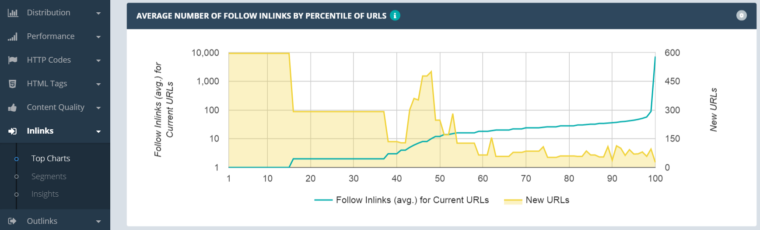

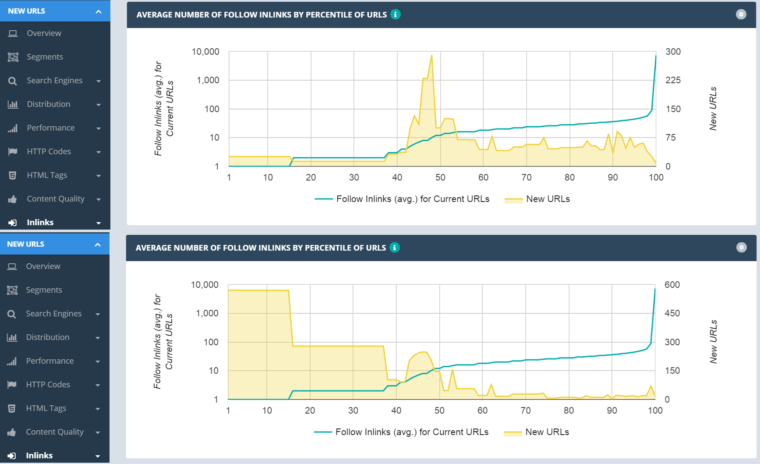

Here is, for our previous example, the distribution of incoming links to new pages. The blue line indicates the number of links by percentile for the whole website (pages in the top percentile receive on average close to 10,000 links each, while those in the lower percentiles receive a single link on average). The distribution of new pages in the different percentiles is shown in yellow.

If we look only at pages that were crawled by Google (top chart), and only at pages that were not crawled (bottom chart), the impact of internal linking is pretty obvious: most pages that receive a significant number of links are crawled, while most of those with few incoming links are ignored.
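A similar comparison can be approximated without charts by grouping new pages by their number of incoming links and computing the share of each group that Google crawled. The sketch below uses made-up bucket boundaries and made-up sample data, purely to show the calculation.

```python
# Hypothetical inlink ranges: (low, high), with None meaning "no upper bound".
BUCKETS = [(1, 1), (2, 9), (10, None)]

def bucket_of(count):
    """Return a label such as '1', '2-9' or '10+' for an inlink count."""
    for low, high in BUCKETS:
        if count >= low and (high is None or count <= high):
            if high is None:
                return f"{low}+"
            return str(low) if low == high else f"{low}-{high}"
    return "0"

def crawl_rate_by_bucket(inlinks, crawled):
    """Share of pages crawled by Google within each inlink bucket."""
    totals, hits = {}, {}
    for url, count in inlinks.items():
        label = bucket_of(count)
        totals[label] = totals.get(label, 0) + 1
        hits[label] = hits.get(label, 0) + (url in crawled)
    return {label: hits[label] / totals[label] for label in totals}

# Made-up data: inlink counts for new pages, and those seen in Googlebot logs.
new_page_inlinks = {"/a": 1, "/b": 1, "/c": 4, "/f": 3, "/d": 25, "/e": 40}
crawled_pages = {"/c", "/d", "/e"}

rates = crawl_rate_by_bucket(new_page_inlinks, crawled_pages)
print(rates)
```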

What About Content?

Internal linking is about page accessibility to crawlers. Another potential reason for pages not being crawled may be poor content quality. If a certain type of page tends to have thin content or little unique content, which Google will know based on pages of the same type crawled previously, Google has little incentive to crawl more of these pages at every opportunity.

Poor content quality may also be one of the reasons why some new pages are crawled by Google, but don’t generate traffic: they are not good enough to rank.
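How Google assesses content quality is not public, but you can get a rough signal of your own for thin or duplicated content. The sketch below is one possible approach and an assumption on our part, not a description of Google's method: it counts words and measures what share of a page's three-word sequences (shingles) do not appear on sibling pages of the same type. The function names and sample texts are hypothetical.

```python
def shingles(text, size=3):
    """Set of consecutive word sequences of the given size."""
    words = text.lower().split()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}

def unique_share(page_text, sibling_texts, size=3):
    """Share of the page's shingles that appear on none of the sibling pages."""
    own = shingles(page_text, size)
    if not own:
        return 0.0
    seen = set()
    for text in sibling_texts:
        seen |= shingles(text, size)
    return len(own - seen) / len(own)

# Hypothetical product pages that share the same template text.
template = "free shipping on all orders over fifty dollars"
page = template + " red canvas sneaker"
sibling = template + " blue leather tote"

word_count = len(page.split())
share = unique_share(page, [sibling])
print(word_count, round(share, 2))
```

A low word count combined with a low unique share suggests the page is mostly template, which is the kind of page type worth reviewing first.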

Botify provides full visibility on your new pages, as well as the rest of your website. It is the only SEO tool in the industry that combines a website crawler and web server log analysis along with segmented views of your website and tons of other key metrics, offering unparalleled insights into SEO priorities. With Botify, you get everything you need to make the right decisions and get results.

Image Credits

Featured Image: Image by Botify. Used with permission.

In-post Photos: All images by Botify. Used with permission.