Google Search Console’s new crawl stats report is thoroughly explained by Search Advocate Daniel Waisberg in a new training video.

The crawl stats report in Search Console received a major update a few months ago. If you haven’t had a chance to look at the new report, now is a good time to get familiar with all the insights that have been added.

Google’s new video breaks down every section of the crawl stats report and explains how the data can be used to determine how well Googlebot is able to crawl a particular site.

When Googlebot can crawl a site efficiently, new content gets indexed in search results quickly, and Google discovers changes made to existing content.

Here’s a recap of the video, starting with the absolute basics: what is crawling?

What is Web Crawling?

The crawling process begins with a list of URLs from previous crawls and sitemaps provided by site owners.

Google uses web crawlers to visit URLs, read the information in them, and follow links on those pages.

The crawlers revisit pages already in the list to check whether they have changed, and also crawl new pages they discover.

During this process, the crawlers have to make important decisions, such as what to crawl and when, while making sure the website can handle the server requests made by Google.

Successfully crawled pages are processed and passed to Google’s indexing systems, which prepare the content for serving in Google Search results.
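To make the process above concrete, here is a toy breadth-first crawler in Python. It is a simplified sketch, not how Googlebot actually works: the `crawl` function, the `fetch` callback, and the in-memory `FAKE_SITE` dictionary are hypothetical stand-ins so the example runs without network access. The seed URLs play the role of previous crawls and sitemaps, links found on each page are queued for crawling, and only successfully fetched pages are returned for the next stage.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed_urls, fetch, max_pages=100):
    """Breadth-first crawl from seed URLs (think: previous crawls plus sitemaps).

    `fetch(url)` must return the page HTML, or None if the request failed.
    Returns {url: html} for successfully crawled pages only.
    """
    frontier = deque(seed_urls)   # URLs waiting to be crawled
    seen = set(seed_urls)         # avoid queueing the same URL twice
    crawled = {}
    while frontier and len(crawled) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:
            continue              # failed fetch: nothing to pass on
        crawled[url] = html
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:  # follow links found on the page
            absolute = urljoin(url, href)
            if absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return crawled


# Hypothetical in-memory "website" so the example runs offline.
FAKE_SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/">Home</a>',
    "https://example.com/b": '<a href="/missing">Broken link</a>',
}

pages = crawl(["https://example.com/"], FAKE_SITE.get)
# /missing is discovered but fails to fetch, so it is not returned
print(sorted(pages))
```

A queue gives breadth-first order, so pages close to the seeds are crawled first; a real crawler would replace that simple ordering with the prioritization decisions described above.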

Google wants to make sure it doesn’t overload your servers, so how often it crawls depends on three things:

- Crawl rate: The maximum number of concurrent connections a crawler may use to crawl a site.

- Crawl demand: How much Google wants to crawl the content.

- Crawl budget: The number of URLs Google can and wants to crawl, which combines crawl rate and crawl demand.
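A limit on concurrent connections, like the crawl rate described above, is commonly enforced in crawlers with a semaphore. The sketch below assumes Python's `asyncio` and a hypothetical `fake_fetch` that only simulates latency; it caps in-flight requests at `max_connections` (an arbitrary 3 here). It illustrates the concept only, since Google has not published how Googlebot enforces its own limit.

```python
import asyncio


async def fetch_all(urls, fetch, max_connections=3):
    """Fetch every URL, but never with more than `max_connections` requests in flight."""
    semaphore = asyncio.Semaphore(max_connections)

    async def limited_fetch(url):
        async with semaphore:  # waits while the connection limit is reached
            return await fetch(url)

    return await asyncio.gather(*(limited_fetch(url) for url in urls))


# Hypothetical fetch that only simulates network latency and records concurrency.
stats = {"in_flight": 0, "peak": 0}


async def fake_fetch(url):
    stats["in_flight"] += 1
    stats["peak"] = max(stats["peak"], stats["in_flight"])
    await asyncio.sleep(0.01)  # pretend the server takes 10 ms to respond
    stats["in_flight"] -= 1
    return f"fetched {url}"


results = asyncio.run(fetch_all([f"/page-{i}" for i in range(10)], fake_fetch))
print(len(results), stats["peak"])  # 10 3
```

All ten pages are fetched, but the server never sees more than three simultaneous requests, which is the trade-off a crawl rate limit makes between crawling speed and server load.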

What is the Search Console Crawl Stats Report?

The crawl stats report in Search Console helps you understand and optimize Googlebot crawling. It provides statistics about Google’s crawling behavior, such as how often Google crawls a site and what responses it received.

Waisberg says the report is most relevant if you work with a large website, and less of a concern if your site has fewer than 1,000 pages.

Here are some questions you can answer with the data provided in the crawl stats report:

- What is your site’s general availability?

- What is the average page response time for a crawl request?

- How many requests were made by Google to your site in the last 90 days?

How to Access the Crawl Stats Report

Site owners can find the crawl stats report by logging into Search Console and going to the Settings page, where they can open the crawl stats report.

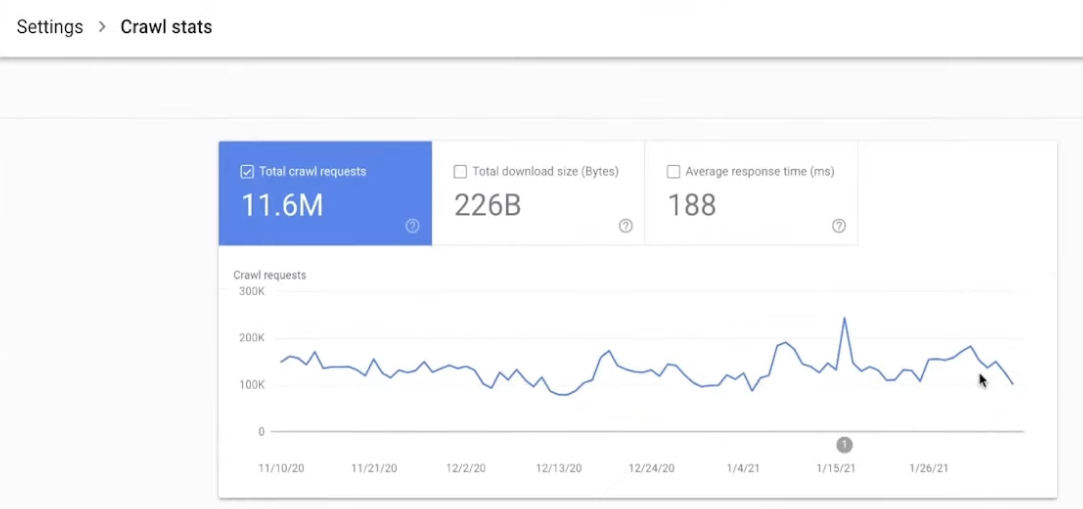

Upon opening the report you’ll see a summary page which includes a crawling trends chart, host status details, and a crawl request breakdown.

Crawling Trends Chart

The crawling trends chart reports on these three metrics:

- Total crawl requests for URLs on your site (whether successful or not).

- Total download size from your site during crawling.

- Average page response time for a crawl request to retrieve the page content.
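If you want to compare the chart against your own server logs, the same three metrics can be computed per day. This sketch assumes you have already filtered your logs down to Googlebot requests and parsed each one into a hypothetical `CrawlRequest` record holding a date, the response size in bytes, and the response time in milliseconds. Expect some differences from Search Console, which reports Google’s own measurements.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class CrawlRequest:
    day: str            # e.g. "2021-03-01"
    bytes_sent: int     # size of the response body in bytes
    response_ms: float  # time taken to serve the response


def daily_metrics(requests):
    """Return {day: (total_requests, total_bytes, avg_response_ms)}, sorted by day."""
    totals = defaultdict(lambda: [0, 0, 0.0])  # count, bytes, summed response time
    for req in requests:
        t = totals[req.day]
        t[0] += 1
        t[1] += req.bytes_sent
        t[2] += req.response_ms
    return {
        day: (count, size, time_sum / count)
        for day, (count, size, time_sum) in sorted(totals.items())
    }


sample = [
    CrawlRequest("2021-03-01", 1000, 200),
    CrawlRequest("2021-03-01", 3000, 400),
    CrawlRequest("2021-03-02", 500, 100),
]
print(daily_metrics(sample))
# {'2021-03-01': (2, 4000, 300.0), '2021-03-02': (1, 500, 100.0)}
```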

When analyzing this data, look for major spikes, drops, and trends over time.

For example, if you see a significant drop in total crawl requests, Google suggests making sure no one added a new robots.txt to your site.
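One quick way to confirm whether a robots.txt change is blocking Googlebot is to test the file against a few important URLs. This sketch uses Python’s standard `urllib.robotparser` module; the `googlebot_allowed` helper and the sample rules are hypothetical. Python’s parser applies the first matching rule and does not support `*` or `$` wildcards inside paths, so treat it as a rough sanity check rather than a replica of Google’s own robots.txt handling.

```python
from urllib.robotparser import RobotFileParser


def googlebot_allowed(robots_txt, url, user_agent="Googlebot"):
    """Return True if `user_agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly, no network fetch
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt where someone accidentally blocked Googlebot entirely.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Disallow: /
"""

print(googlebot_allowed(ROBOTS_TXT, "https://example.com/blog/post"))  # False
print(googlebot_allowed(ROBOTS_TXT, "https://example.com/blog/post", "Bingbot"))  # True
```

Testing with more than one user agent helps catch a group that targets Googlebot specifically, as in this example.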

You may also find that your site is responding slowly to Googlebot. That might be a sign your server cannot handle all the requests.

Watch out for a consistent increase in average response time. Google says it might not affect crawl rate immediately, but it’s a good indicator that your servers might not be handling all the load.
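To spot spikes, drops, and gradual trends in a series of daily values, two simple checks go a long way: compare each day against the median of the preceding week, and fit a straight line to recent response times to see whether they are climbing. The function names, the 7-day window, and the 50% threshold below are arbitrary assumptions for illustration, not values Google recommends.

```python
from statistics import median


def flag_anomalies(values, window=7, threshold=0.5):
    """Return (index, relative_change) for days that differ from the
    median of the previous `window` days by more than `threshold` (0.5 = 50%)."""
    flagged = []
    for i in range(window, len(values)):
        baseline = median(values[i - window:i])
        if baseline == 0:
            continue  # avoid dividing by zero when there is no baseline traffic
        change = (values[i] - baseline) / baseline
        if abs(change) > threshold:
            flagged.append((i, round(change, 2)))
    return flagged


def slope(values):
    """Least-squares slope of values against day index (needs at least 2 values)."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


# Hypothetical daily crawl requests with a sudden drop on day 7...
crawl_requests = [1000, 1020, 980, 1010, 990, 1005, 995, 400, 1000]
print(flag_anomalies(crawl_requests))  # [(7, -0.6)]

# ...and average response times (ms) that creep up every day.
response_ms = [210, 220, 235, 250, 260, 280, 300]
print(round(slope(response_ms), 1))  # 14.8 (ms per day, rising)
```

Using the median rather than the mean keeps a single bad day from distorting the baseline for the following week.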

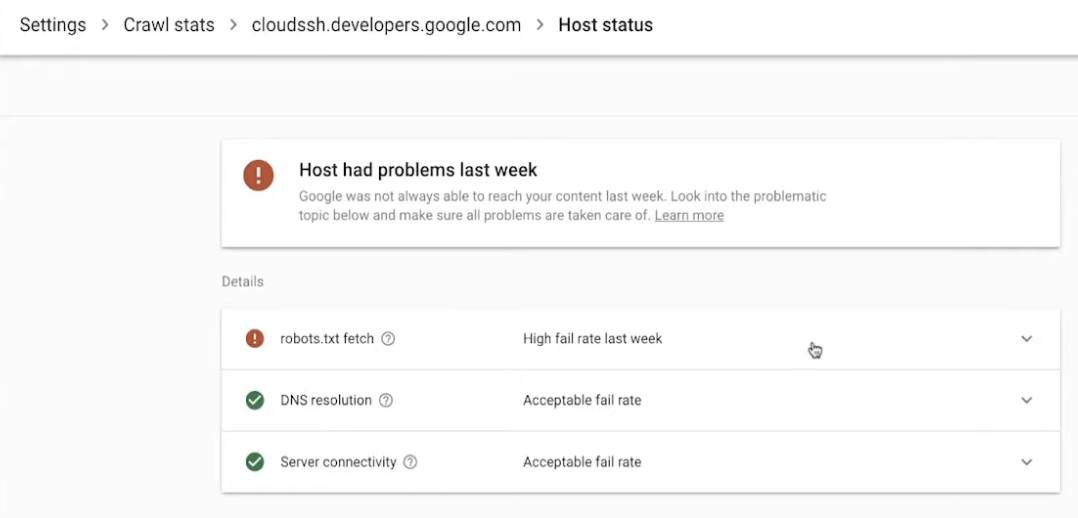

Host Status Details

Host status data lets you check a site’s general availability in the last 90 days. Errors in this section mean Google cannot crawl your site for a technical reason.

When you click into the host status details, you will find three categories:

- Robots.txt fetch: The failure rate when crawling your robots.txt file.

- DNS resolution: Shows when the DNS server didn’t recognize your host name or didn’t respond during crawling.

- Server connectivity: Shows when your server was unresponsive or did not provide the full response for your URL during a crawl.
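The DNS resolution and server connectivity categories can be roughly approximated from your own machine with Python’s `socket` module. This hypothetical `check_host` helper only tells you whether the host name resolves and a TCP connection opens from wherever you run it; Googlebot connects from Google’s network, so a passing result here does not guarantee Google sees the same thing. It does not cover the robots.txt fetch category.

```python
import socket


def check_host(host, port=443, timeout=5.0):
    """Check DNS resolution, then whether a TCP connection can be opened."""
    result = {"dns_ok": False, "connect_ok": False, "error": None}
    try:
        socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
        result["dns_ok"] = True
    except socket.gaierror as exc:
        result["error"] = f"DNS resolution failed: {exc}"
        return result
    try:
        # create_connection tries each resolved address until one succeeds
        with socket.create_connection((host, port), timeout=timeout):
            result["connect_ok"] = True
    except OSError as exc:  # refused, timed out, unreachable, etc.
        result["error"] = f"Server connectivity failed: {exc}"
    return result
```

Calling `check_host("www.example.com")` returns a dictionary with `dns_ok` and `connect_ok` set to `True` when both checks pass, and an `error` message naming the step that failed otherwise.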

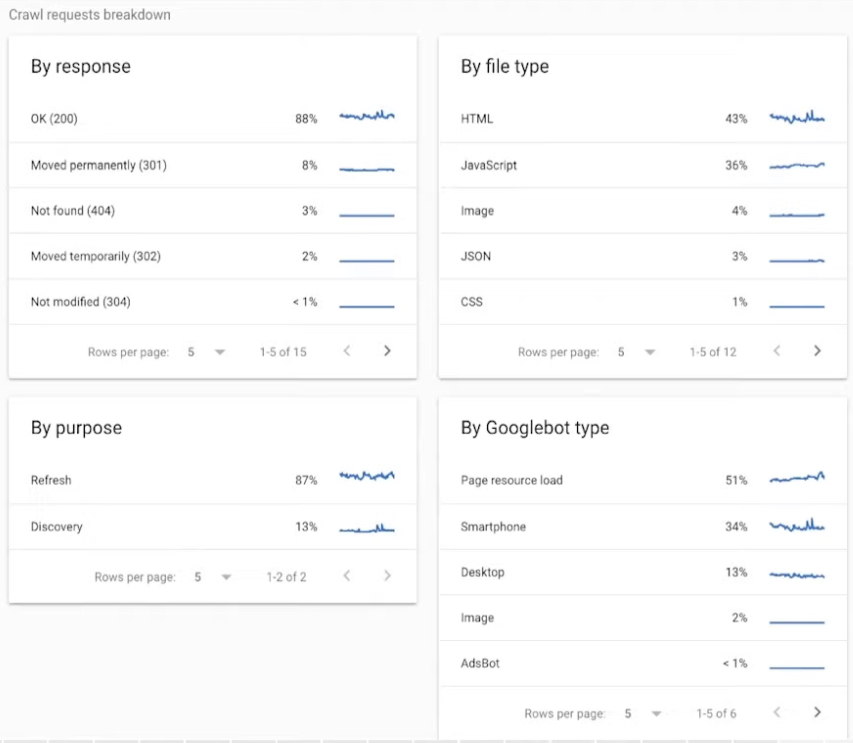

Crawl Request Cards

Crawl request cards show several breakdowns to help you understand what Google’s crawlers found on your website.

There are four available breakdowns:

- Crawl response: Responses Google received when crawling your site.

- Crawl file type: Shows file types returned by the request.

- Crawl purpose: Shows the reason for crawling your site.

- Googlebot type: Shows the user agent used by Google to make the crawl request.
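A similar breakdown can be pulled from your server’s access logs. This sketch assumes logs in the common Apache/Nginx combined format and uses hypothetical helpers (`breakdowns`, `googlebot_type`). It groups by file extension rather than the response type the report shows, identifies Googlebot with a simple user-agent heuristic, and leaves out crawl purpose because that is not visible in a log line. User-agent strings can be spoofed, so Google recommends verifying real Googlebot traffic with a reverse DNS lookup.

```python
import re
from collections import Counter
from os.path import splitext
from urllib.parse import urlsplit

# Combined log format: ip ident user [time] "request" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) (?P<size>\S+) '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_type(agent):
    """Rough, heuristic mapping of a user-agent string to a Googlebot type."""
    if "Googlebot-Image" in agent:
        return "Image"
    if "Googlebot" in agent:
        return "Smartphone" if "Mobile" in agent else "Desktop"
    return None  # not Googlebot


def breakdowns(lines):
    """Count Googlebot requests by response code, file extension, and bot type."""
    by_response, by_file_type, by_bot = Counter(), Counter(), Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines in other formats
        bot = googlebot_type(match["agent"])
        if bot is None:
            continue  # ignore non-Googlebot traffic
        by_response[match["status"]] += 1
        ext = splitext(urlsplit(match["path"]).path)[1].lower()
        by_file_type[ext or "(no extension)"] += 1
        by_bot[bot] += 1
    return by_response, by_file_type, by_bot


SAMPLE_LOG = [
    '66.249.66.1 - - [10/Mar/2021:13:55:36 +0000] "GET /blog/post.html HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.2 - - [10/Mar/2021:13:56:01 +0000] "GET /logo.png HTTP/1.1" 404 0 "-" "Googlebot-Image/1.0"',
    '203.0.113.9 - - [10/Mar/2021:13:57:12 +0000] "GET / HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

for counts in breakdowns(SAMPLE_LOG):
    print(dict(counts))
# {'200': 1, '404': 1}
# {'.html': 1, '.png': 1}
# {'Desktop': 1, 'Image': 1}
```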

In Summary

Those are the basics of using Search Console’s crawl stats report to ensure Googlebot can efficiently crawl your site for search.

Takeaways:

- Use the summary page chart to analyze crawling volume and trends.

- Use the host status details to check your site’s general availability.

- Use the crawl request breakdown to understand what Googlebot is finding when crawling your website.

See the full video below: