You can’t call yourself a technical SEO if you aren’t using the Google Search Console Index Coverage report.

It’s an invaluable tool for understanding:

- Which URLs have been crawled and indexed by Google and which have not.

- And, more importantly, why the search engine has made that choice about a URL.

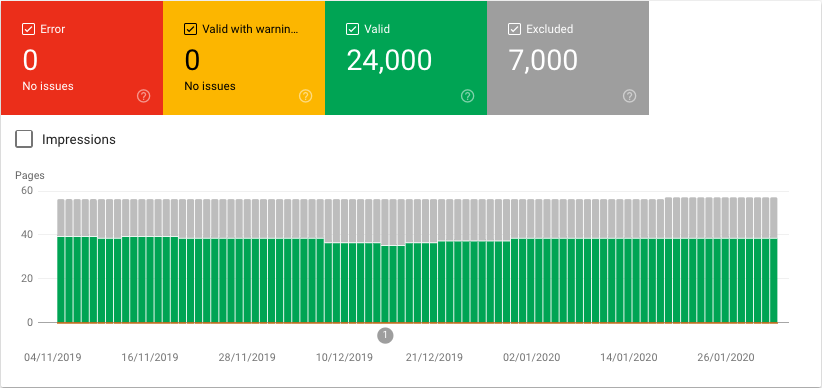

The report seems relatively simple to follow with its traffic light color scheme.

- Red (Error): Stop! The pages are not indexed.

- Yellow (Valid with warnings): If you have time to spare, stop; otherwise, hit the gas and go! The pages may be indexed.

- Green (Valid): All is well. The pages are indexed.

Problem is, there’s a big grey zone (Excluded).

And when you read down into the details, the road rules seem to be written in a foreign language. Googlish.

So today, we will translate the status types in the Index Coverage Report into SEO action items you should take to improve indexing and drive up organic performance.

Here for a specific violation? Feel free to jump straight to it:

SEO impacting: Address these offenses as a priority.

- Discovered – currently not indexed

- Crawled – currently not indexed

- Duplicate without user-selected canonical

- Duplicate, submitted URL not selected as canonical

- Duplicate, Google chose different canonical than user

- Submitted URL not found (404)

- Redirect error

- Server error (5xx)

- Crawl Anomaly

- Indexed, though blocked by robots.txt

Further thought required: These may or may not require action, depending on your SEO strategy.

- Indexed, not submitted in sitemap

- Blocked by robots.txt

- Submitted URL blocked by robots.txt

- Submitted URL marked ‘noindex’

- Submitted URL returns unauthorized request (401)

- Submitted URL has crawl issue

- Submitted URL seems to be a Soft 404

- Soft 404

Natural status: No action needed.

- Submitted and indexed

- Alternate page with proper canonical tag

- Excluded by ‘noindex’ tag

- Page with redirect

- Not found (404)

- Blocked by page removal tool

SEO Impacting Issues in the Index Coverage Report

Don’t focus only on fixing the errors. Often the larger SEO wins are actually buried in the grey zone of excluded.

Below are the Index Coverage report issues that truly matter for SEO, listed in priority order, so you know where to address your attention first.

Discovered – Currently Not Indexed

Cause: The URL is known to Google, often through links or an XML sitemap, and is in the crawl queue, but Googlebot hasn’t gotten around to crawling it yet. This usually indicates a crawl budget issue.

How to fix it: If it’s only a handful of pages, trigger a crawl manually by requesting indexing for each URL with the URL Inspection tool in Google Search Console.

If there is a significant number, invest time in a long-term fix of the website architecture (including URL structure, site taxonomy, and internal linking) to solve the crawl budget issues at their source.
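Internal linking problems behind this status often show up as pages buried many clicks from the homepage. As a rough diagnostic, the sketch below computes click depth with a breadth-first search over an internal link graph. The `links` mapping is a hypothetical stand-in for an export from your own crawler, and what depth counts as "too deep" is a judgment call, not a Google threshold.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], start: str) -> dict[str, int]:
    """Return the minimum number of clicks from `start` to each reachable URL.

    `links` maps each URL to the internal URLs it links to (a hypothetical
    crawl export). URLs that cannot be reached from `start` are omitted.
    """
    depths = {start: 0}
    queue = deque([start])
    while queue:
        url = queue.popleft()
        for target in links.get(url, []):
            if target not in depths:  # first visit in BFS is the shortest path
                depths[target] = depths[url] + 1
                queue.append(target)
    return depths
```

URLs missing from the result entirely are orphans with no internal path from the start page, which makes them prime candidates for new internal links.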

Crawled – Currently Not Indexed

Cause: Googlebot crawled the URL and found the content not worthy to be included in the index. This is most commonly due to quality issues such as thin content, outdated content, doorway pages or user-generated spam. If the content is worthy but not being indexed, you’re likely being tripped up by rendering.

How to fix it: Review the content of the page.

If you understand why Googlebot has deemed the page’s content not valuable enough to index, then ask yourself a second question. Does this page need to exist on my website?

If the answer is no, 301 or 410 the URL. If yes, add a noindex tag until you can solve the content issue. Or, if it is a parameter-based URL, you can prevent the page from being crawled with best practice parameter handling.

If the content seems to be of acceptable quality, check what renders without JavaScript. Google is capable of indexing JavaScript-generated content, but it is a more complex process than for plain HTML because there are two waves of indexing whenever JavaScript is involved.

The first wave indexes a page based on the initial HTML from the server. This is what you see when you right-click and view page source.

The second indexes based on the DOM, which includes both the HTML and the rendered JavaScript from the client-side. This is what you see when you right-click & inspect.

The challenge is that the second wave of indexing is deferred until Google has the rendering resources available. This means JavaScript-reliant content takes longer to index than HTML-only content: anywhere from days to a few weeks after it was crawled.

To avoid delays in indexing, use server-side rendering so that all essential content is present in the initial HTML. This should include your hero SEO elements like page titles, headings, canonicals, structured data and of course your main content and links.
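To check this without a browser, you can test the raw server response (the "view source" version) for those elements before any JavaScript runs. This is a minimal sketch using Python's standard-library `html.parser`; the four elements it looks for are an assumption based on the paragraph above, and fetching the HTML is left out so the check stays self-contained.

```python
from html.parser import HTMLParser


class SEOElementFinder(HTMLParser):
    """Record which SEO-critical elements appear in raw (unrendered) HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.found = {"title": False, "h1": False, "canonical": False, "structured_data": False}

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self.found["title"] = True
        elif tag == "h1":
            self.found["h1"] = True
        elif tag == "link" and (attributes.get("rel") or "").lower() == "canonical":
            self.found["canonical"] = True
        elif tag == "script" and attributes.get("type") == "application/ld+json":
            self.found["structured_data"] = True


def missing_from_initial_html(html: str) -> list[str]:
    """List SEO elements absent from the server response, i.e. ones that
    could only appear after client-side JavaScript runs (if at all)."""
    finder = SEOElementFinder()
    finder.feed(html)
    finder.close()
    return [name for name, present in finder.found.items() if not present]
```

An element that is missing here but visible when you right-click and inspect is being injected client-side, which makes it a candidate for server-side rendering.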

Duplicate Without User-Selected Canonical

Cause: The page is considered by Google to be duplicate content, but isn’t marked with a clear canonical. Google has decided this page should not be the canonical and as such has excluded it from the index.

How to fix it: Explicitly mark the correct canonical, using rel=canonical links, for every crawlable URL on your website. You can understand which page Google chose as the canonical by inspecting the URL in Google Search Console.
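Marking a canonical on every crawlable URL is easiest when one function decides the canonical form of any URL variant. The sketch below assumes hypothetical site rules (HTTPS, lowercase host, no trailing slash, tracking and sort parameters dropped, remaining parameters sorted); your own rules depend on which parameters actually change page content.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: query parameters that never change page content on this site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "sort"}


def canonical_url(url: str) -> str:
    """Map a crawlable URL variant to its preferred canonical form."""
    parts = urlsplit(url)
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS
    )
    path = parts.path.rstrip("/") or "/"  # hypothetical rule: no trailing slash
    return urlunsplit(("https", parts.netloc.lower(), path, urlencode(query), ""))


def canonical_link_tag(url: str) -> str:
    """Build the rel=canonical element to place in the page <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}">'
```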

Duplicate, Submitted URL Not Selected as Canonical

Cause: Same as above, except in this case, you explicitly asked for this URL to be indexed, for example by submitting it in your XML sitemap.

How to fix it: Explicitly mark the correct canonical, using rel=canonical links, for every crawlable URL on your website and be sure to include only canonical pages in your XML sitemap.
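One way to keep duplicates out of the sitemap is to generate it only from pages whose canonical points to themselves. In this sketch each page is a dictionary with hypothetical `url`, `canonical` and `noindex` keys; the output uses the standard sitemaps.org namespace.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[dict]) -> str:
    """Return sitemap XML listing only self-canonical, indexable pages."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if page["url"] != page["canonical"] or page.get("noindex", False):
            continue  # duplicates and noindexed pages stay out of the sitemap
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = page["url"]  # ElementTree escapes "&"
    return ET.tostring(urlset, encoding="unicode")
```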

Duplicate, Google Chose Different Canonical Than User

Cause: The page has a rel=canonical link in place, but Google disagrees with this suggestion and has chosen a different URL to index as the canonical.

How to fix it: Inspect the URL to see the Google-selected canonical URL. If you agree with Google, change the rel=canonical link. Else, work on your website architecture to reduce the amount of duplicate content and send stronger ranking signals to the page you wish to be the canonical.
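If you have URL Inspection data for many pages, a quick comparison shows where Google overrode your choice. The key names `userCanonical` and `googleCanonical` are modeled on the Search Console URL Inspection API's index status result; the `url` key and the shape of the export are hypothetical.

```python
def canonical_mismatches(results: list[dict]) -> list[tuple[str, str, str]]:
    """Return (url, user_canonical, google_canonical) where Google disagreed.

    Rows missing either canonical are skipped rather than guessed at.
    """
    mismatches = []
    for row in results:
        user = row.get("userCanonical")
        google = row.get("googleCanonical")
        if user and google and user != google:
            mismatches.append((row["url"], user, google))
    return mismatches
```

Grouping the output by Google's chosen canonical is a quick way to see which pages are absorbing the duplicates.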

Submitted URL Not Found (404)

Cause: The URL you submitted, likely via your XML sitemap, doesn’t exist.

How to fix it: Create the URL or remove it from your XML sitemap. You can systematically avoid this error by following the best practice of dynamic XML sitemaps.
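Between regenerations, you can also audit an existing sitemap for URLs that no longer return 200. This sketch parses the `<loc>` entries with `xml.etree.ElementTree` and sends a HEAD request to each without following redirects, so a 301 or 404 is reported rather than hidden. The status checker is pluggable so the audit can be tested offline; note that some servers answer HEAD differently from GET.

```python
import urllib.error
import urllib.request
import xml.etree.ElementTree as ET
from typing import Callable

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    """Surface 3xx responses instead of silently following them."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the status code of a HEAD request to `url`.

    Network failures (URLError) propagate to the caller.
    """
    opener = urllib.request.build_opener(_NoRedirect)
    request = urllib.request.Request(url, method="HEAD")
    try:
        with opener.open(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 3xx, 4xx and 5xx responses all land here


def sitemap_urls(sitemap_xml: str) -> list[str]:
    """Extract <loc> values from a urlset sitemap."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text]


def audit_sitemap(sitemap_xml: str, fetch_status: Callable[[str], int] = http_status) -> dict[str, int]:
    """Map each sitemap URL that does not return 200 to its status code."""
    problems = {}
    for url in sitemap_urls(sitemap_xml):
        status = fetch_status(url)
        if status != 200:
            problems[url] = status
    return problems
```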

Redirect Error

Cause: Googlebot took issue with the redirect. This is most commonly caused by redirect chains five or more URLs long, redirect loops, an empty URL or an excessively long URL.

How to fix it: Use a debugging tool such as Lighthouse or a status code tool such as httpstatus.io to understand what is breaking the redirect and thus how to address it.

Ensure your 301 redirects always point directly to the final destination, even if this means editing old redirects.
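Flattening redirects can be scripted if your rules live in a simple source-to-target map (a hypothetical format here; real rules may live in server config or a CMS). The sketch follows each chain to its final destination, raises an error on loops, and rewrites every rule to point straight at the end of its chain. The ten-hop cap is an arbitrary safety limit, not a Google figure.

```python
def resolve_redirect(redirects: dict[str, str], url: str, max_hops: int = 10) -> tuple[str, int]:
    """Follow a redirect map from `url`; return (final_url, hop_count).

    Raises ValueError on a redirect loop or if the chain exceeds `max_hops`.
    """
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen:
            raise ValueError(f"redirect loop at {url}")
        if hops > max_hops:
            raise ValueError("redirect chain too long")
        seen.add(url)
    return url, hops


def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Rewrite every rule so it points directly at its final destination."""
    return {source: resolve_redirect(redirects, source)[0] for source in redirects}
```

A hop count of four or more corresponds to the chains of five or more URLs mentioned above.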

Server Error (5xx)

Cause: Servers return a 5xx HTTP response code (most commonly 500, a.k.a. Internal Server Error) when they’re unable to load a page. It could be caused by wider server issues, but more often than not it is caused by a brief server disconnection that prevents Googlebot from crawling the page.

How to fix it: If it’s a ‘once in a blue moon’ thing, don’t stress. The error will go away by itself after some time. If the page is important, you can recall Googlebot to the URL by requesting indexing within URL inspection. If the error is recurring, speak with your system engineer / tech lead / hosting company to improve the server infrastructure.
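Before escalating, it helps to know whether Googlebot is hitting 5xx errors on the same URLs repeatedly. This sketch counts 5xx responses per path in access log lines, assuming the Apache/Nginx "combined" log format; adjust the pattern to match your server.

```python
import re
from collections import Counter

# Assumed Apache/Nginx "combined" log format; adjust to your server's format.
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "\S+ (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_server_errors(lines) -> Counter:
    """Count 5xx responses served to requests claiming to be Googlebot, per path."""
    errors = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match["agent"] and match["status"].startswith("5"):
            errors[match["path"]] += 1
    return errors
```

Because the user-agent string can be spoofed, confirm that the requests really come from Googlebot (for example with a reverse DNS lookup) before drawing conclusions.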

Crawl Anomaly

Cause: Something prevented the URL from being crawled, but even Google doesn’t know what it is exactly.

How to fix it: Fetch the page using the URL Inspection tool to see if any 4xx or 5xx level response codes are returned. If that gives no clues, send the URLs to your development team.

Indexed, Though Blocked by robots.txt

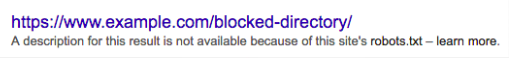

Cause: Think of robots.txt as the digital equivalent of a “no entry” sign on an unlocked door. While Googlebot does obey these instructions, it does it to the letter of the law, not the spirit.

So you may see pages that are specifically disallowed in robots.txt showing up in the search results. If a blocked page has other strong ranking signals, such as links, Google may deem it relevant to index despite never having crawled it.

Because the content of that URL is unknown to Google, the search result looks something like the screenshot above.

How to fix it: To definitively block a page from appearing in SERPs, don’t use robots.txt. You need to use a noindex tag or prohibit anonymous access to the page using auth.

Know that URLs with a noindex tag will also be crawled less frequently. If the tag is present for a long time, Google will eventually treat the page’s links as nofollow too, which means it won’t add those links to the crawl queue and ranking signals won’t be passed to the linked pages.
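For pages whose HTML you can't easily edit, or for non-HTML files such as PDFs, the noindex directive can also be sent as an `X-Robots-Tag` HTTP header. Here is a minimal sketch as Python WSGI middleware; the path prefixes are hypothetical, and those URLs must not be disallowed in robots.txt, since Googlebot has to crawl a page to see its noindex.

```python
from typing import Callable, Iterable

# Hypothetical path prefixes that should stay out of search results.
NOINDEX_PREFIXES = ("/internal/", "/checkout/")


def noindex_middleware(app: Callable) -> Callable:
    """Wrap a WSGI app so matching paths send `X-Robots-Tag: noindex`."""

    def wrapped(environ: dict, start_response: Callable) -> Iterable[bytes]:
        path = environ.get("PATH_INFO", "")

        def start_with_header(status, headers, exc_info=None):
            if path.startswith(NOINDEX_PREFIXES):
                headers = list(headers) + [("X-Robots-Tag", "noindex")]
            return start_response(status, headers, exc_info)

        return app(environ, start_with_header)

    return wrapped
```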

Further Thought Required

Many Google Search Console Index Coverage report issues are caused by conflicting directives.

It’s not that one is right and the other wrong. You just need to be clear on your goal and ensure all site signals support that goal.

Indexed, Not Submitted in Sitemap

Cause: The URL was discovered by Google, likely through a link, and indexed. But it wasn’t submitted in the XML sitemap.

What to do: If the URLs are SEO relevant, add them to your XML sitemap. This helps Google pick up new content and updates to existing content faster.

Else, consider if you want the URLs to be indexed. URLs are not ranked solely on their own merits. Every page indexed by Google impacts how the quality algorithms evaluate domain reputation.

Having pages indexed but not submitted in the sitemap is often a sign that the site is suffering from index bloat, where an excessive number of low-value pages have made it into the index.

This is commonly caused by auto-generated pages such as filter combinations, archive pages, tag pages, user profiles, pagination or rogue parameters. Index bloat hurts the domain’s ability to rank all its URLs.
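To size up index bloat, you can bucket the URLs from this status by the kind of auto-generated page they resemble. The URL patterns below are hypothetical examples of tag archives, author profiles and pagination; replace them with the patterns your CMS actually produces.

```python
from collections import Counter
from urllib.parse import urlsplit

# Hypothetical URL patterns that often signal auto-generated, low-value pages.
BLOAT_PATTERNS = {
    "tag archive": "/tag/",
    "author/profile": "/author/",
    "pagination": "/page/",
}


def classify_bloat(urls: list[str]) -> Counter:
    """Count indexed URLs by the kind of auto-generated page they look like."""
    counts = Counter()
    for url in urls:
        parts = urlsplit(url)
        if parts.query:
            counts["parameter"] += 1  # filters, sorting, tracking, etc.
            continue
        for label, marker in BLOAT_PATTERNS.items():
            if marker in parts.path:
                counts[label] += 1
                break
        else:
            counts["other"] += 1
    return counts
```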

Blocked by robots.txt

Cause: Googlebot won’t crawl the URL as it is blocked by robots.txt. But this does not mean that the page won’t be indexed by Google. When that happens, you will begin to see ‘Indexed, though blocked by robots.txt’ warnings within the Index Coverage Report.

What to do: To ensure the page is not indexed by Google, remove the robots.txt block and use a noindex directive. Googlebot can only obey a noindex on a page it is allowed to crawl.

Submitted URL Blocked by robots.txt

Cause: The URL you submitted, likely via the XML sitemap, is also blocked by your robots.txt file.

What to do: Either remove the URL from the XML sitemap, if you do not wish it to be crawled and indexed, or the blocking rule from the robots.txt file, if you do. If you are using a hosting service that doesn’t allow you to modify this file, change web hosts.
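To catch these conflicts before Google reports them, you can test your sitemap URLs against robots.txt with Python's standard-library `urllib.robotparser`. Treat this as an approximation: that parser applies the first matching rule and does not support wildcard patterns, while Google uses the most specific matching rule and supports `*` and `$`, so results can differ for complex files.

```python
from urllib.robotparser import RobotFileParser


def blocked_for_googlebot(robots_txt: str, urls: list[str]) -> list[str]:
    """Return the URLs that the given robots.txt disallows for Googlebot."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch("Googlebot", url)]
```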

Submitted URL Marked ‘Noindex’

Cause: The URL you submitted, likely via the XML sitemap, is marked noindex, either via a robots meta tag or via the X-Robots-Tag HTTP header.

What to do: Either remove the URL from the XML sitemap, if you do not wish it to be crawled and indexed, or remove the noindex directive, if you do.
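When a submitted URL is flagged but the noindex isn't obvious, check both places it can live. This sketch looks for `noindex` in the `X-Robots-Tag` header and in robots (or googlebot) meta tags; the regular expression is a simplification that assumes `name` appears before `content`, so a proper HTML parser is safer for production use.

```python
import re

# Simplified pattern: assumes the name attribute precedes content.
META_ROBOTS = re.compile(
    r'<meta[^>]+name=["\'](?:robots|googlebot)["\'][^>]*content=["\']([^"\']*)["\']',
    re.IGNORECASE,
)


def noindex_sources(headers: dict[str, str], html: str) -> list[str]:
    """Report where (if anywhere) a noindex directive is set for a response."""
    sources = []
    for name, value in headers.items():
        if name.lower() == "x-robots-tag" and "noindex" in value.lower():
            sources.append("X-Robots-Tag header")
    for content in META_ROBOTS.findall(html):
        if "noindex" in content.lower():
            sources.append("robots meta tag")
    return sources
```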

Submitted URL Returns Unauthorized Request (401)

Cause: Google is not authorized to crawl the URL you submitted, for example because the page is password-protected.

What to do: If there is no reason to protect the content from being indexed, remove the authorization requirement. Else, remove the URL from the XML sitemap.

Submitted URL Has Crawl Issue

Cause: Something is causing a crawling issue, but even Google can’t put a name to it.

What to do: Try debugging the page using the URL Inspection tool. Check page load times, blocked resources and whether there is any needless JavaScript code.

If this doesn’t turn up useful results, resort to the old-fashioned way of loading the URL on your mobile and seeing what happens on the page and in the code.

Submitted URL Seems to Be a Soft 404

Cause: Google has deemed the URL you submitted, likely via your XML sitemap, to be a soft 404 – i.e., the server responds with a 200 success code but the page:

- Doesn’t exist.

- Has little to no content (aka thin content), such as vacant category pages.

- Has a redirect in place to an irrelevant destination URL, such as the homepage.
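Google's soft 404 classifier isn't public, but you can approximate the three signals listed above when auditing your own pages. The sketch below flags 200 responses where a deeper URL redirected to the homepage, the text contains a "not found" style phrase, or there is very little text at all. The phrases and the 150-word threshold are hypothetical and should be tuned to your templates.

```python
import re
from urllib.parse import urlsplit

# Hypothetical heuristics; tune them for your own templates.
MIN_WORDS = 150
NOT_FOUND_PHRASES = ("page not found", "no longer available", "no results found")


def looks_like_soft_404(status: int, visible_text: str, requested_url: str, final_url: str) -> bool:
    """Flag 200 responses whose content suggests a missing or empty page."""
    if status != 200:
        return False  # a real 404/410 is not a *soft* 404
    if final_url != requested_url and urlsplit(final_url).path in ("", "/"):
        return True  # deeper URL redirected to the homepage
    text = visible_text.lower()
    if any(phrase in text for phrase in NOT_FOUND_PHRASES):
        return True
    return len(re.findall(r"\w+", text)) < MIN_WORDS  # thin content
```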

What to do: If the page truly doesn’t exist and was intentionally removed, return a 410 for the fastest deindexing, and be sure to display a custom ‘not found’ page to the user. If there is similar content on another URL, implement a 301 redirect to it instead to pass on the ranking signals.

If the page appears to have lots of content, check that Google can render all of it. If it truly suffers from thin content and has no reason to exist, 410 or 301 it. If it does need to exist, remove it from your XML sitemap so you don’t draw Google’s attention to it, add a noindex tag, and work on a longer-term solution to beef up the page with valuable content.

If there is a redirect in place to a non-relevant page, change it to point to a relevant one or, if this is not possible, replace it with a 410.
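As a sketch of the 410 and 301 options, here is hypothetical WSGI middleware that answers intentionally removed paths with a 410 plus a custom page for users, and moved paths with a 301 to the similar content. The path lists are placeholders; in practice these rules often live in your web server or CMS configuration.

```python
from typing import Callable, Iterable

# Hypothetical examples: content removed for good, and content that moved.
GONE = {"/discontinued-widget"}
MOVED = {"/old-guide": "/new-guide"}


def removal_middleware(app: Callable) -> Callable:
    """Answer moved URLs with a 301 and removed URLs with a 410 before the app runs."""

    def wrapped(environ: dict, start_response: Callable) -> Iterable[bytes]:
        path = environ.get("PATH_INFO", "")
        if path in MOVED:
            start_response("301 Moved Permanently", [("Location", MOVED[path])])
            return [b""]
        if path in GONE:
            # Still show users a helpful custom "not found" page.
            body = b"<h1>This page has been removed</h1><p><a href='/'>Browse our catalogue</a></p>"
            start_response("410 Gone", [("Content-Type", "text/html; charset=utf-8")])
            return [body]
        return app(environ, start_response)

    return wrapped
```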

Soft 404

Cause: Same as above, but you haven’t specifically requested the page to be indexed.

What to do: Similar to above, either show Google more content, 301 or 410 as appropriate.

Natural Statuses in the Index Coverage Report

The goal is not to get every URL of your site indexed, aka valid, although the number should climb up steadily as your site grows.

The goal is to get the canonical version of SEO relevant pages indexed.

It is not only natural but beneficial to SEO to have a number of pages labeled as excluded in the Index Coverage report.

This shows you are mindful that Google judges your domain reputation based on all indexed pages, and that you have taken appropriate action to exclude pages that must exist on your website but do not need to be factored into Google’s view of your content.

Submitted and Indexed

Cause: You submitted the page by an XML sitemap, API or manually within Google Search Console and Google has indexed it.

No fix needed: Unless you don’t want those URLs to be in the index.

Alternate Page With Proper Canonical Tag

Cause: Google successfully processed the rel=canonical tag.

No fix needed: The page already correctly indicates its canonical. There is nothing else to do.

Excluded by ‘Noindex’ Tag

Cause: Google crawled the page and honored the noindex tag.

No fix needed: Unless you do want those URLs to be in the index, in which case, remove the noindex directive.

Page With Redirect

Cause: Your 301 or 302 redirect was successfully crawled by Google. The destination URL was added to the crawl queue and the original URL removed from the index.

Ranking signals will be passed on without dilution once Google crawls the destination URL and confirms that it has similar content.

No fix needed: This exclusion will decline naturally over time as the redirects are processed.

Not Found (404)

Cause: Google discovered the URL by a method other than the XML sitemap, such as a link from another website. When it was crawled, the page returned a 404 status code. As a result, Googlebot will crawl the URL less frequently over time.

No fix needed: If the page truly doesn’t exist because it was intentionally removed, there is nothing wrong with returning a 404. There is no Google penalty for amassing 404 codes. That is a myth.

But that’s not to say they are always best practice. If the URL had any ranking signals, these are lost to the 404 void. So if you have another page with similar content, consider changing to a 301 redirect.

Blocked by Page Removal Tool

Cause: A URL removal request was submitted within Google Search Console.

No fix needed: The removal request will naturally expire after 90 days. After that period, Google may reindex the page.

To Sum Up

Overall, prevention is better than cure. A well-thought-out website architecture and robots handling will often lead to a clean and clear Google Search Console Index Coverage report.

But as most of us inherit the work of others, rather than build from scratch, it is an invaluable tool to help you focus attention where it is most needed.

Be sure to check back in on the report every month to monitor Google’s progress on crawling and indexing your site and document the impact of SEO changes.
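To document month-over-month changes, you can diff the number of URLs per status between two exports. This sketch assumes a hypothetical CSV export with a `Status` column; adjust the column name to whatever your export actually contains.

```python
import csv
import io


def status_counts(csv_text: str) -> dict[str, int]:
    """Count URLs per status in an export with a (hypothetical) 'Status' column."""
    counts: dict[str, int] = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        counts[row["Status"]] = counts.get(row["Status"], 0) + 1
    return counts


def month_over_month(previous_csv: str, current_csv: str) -> dict[str, int]:
    """Return the change in URL count for every status seen in either month."""
    before, after = status_counts(previous_csv), status_counts(current_csv)
    statuses = sorted(before.keys() | after.keys())
    return {status: after.get(status, 0) - before.get(status, 0) for status in statuses}
```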

More Resources:

- How Search Engines Crawl & Index: Everything You Need to Know

- Your Indexed Pages Are Going Down – 5 Possible Reasons Why

- 11 SEO Tips & Tricks to Improve Indexation

Image Credits

Featured Image: Created by author, February 2020

Screenshots taken by author, February 2020