Google is emailing webmasters via Search Console, telling them to remove noindex statements from their robots.txt files.

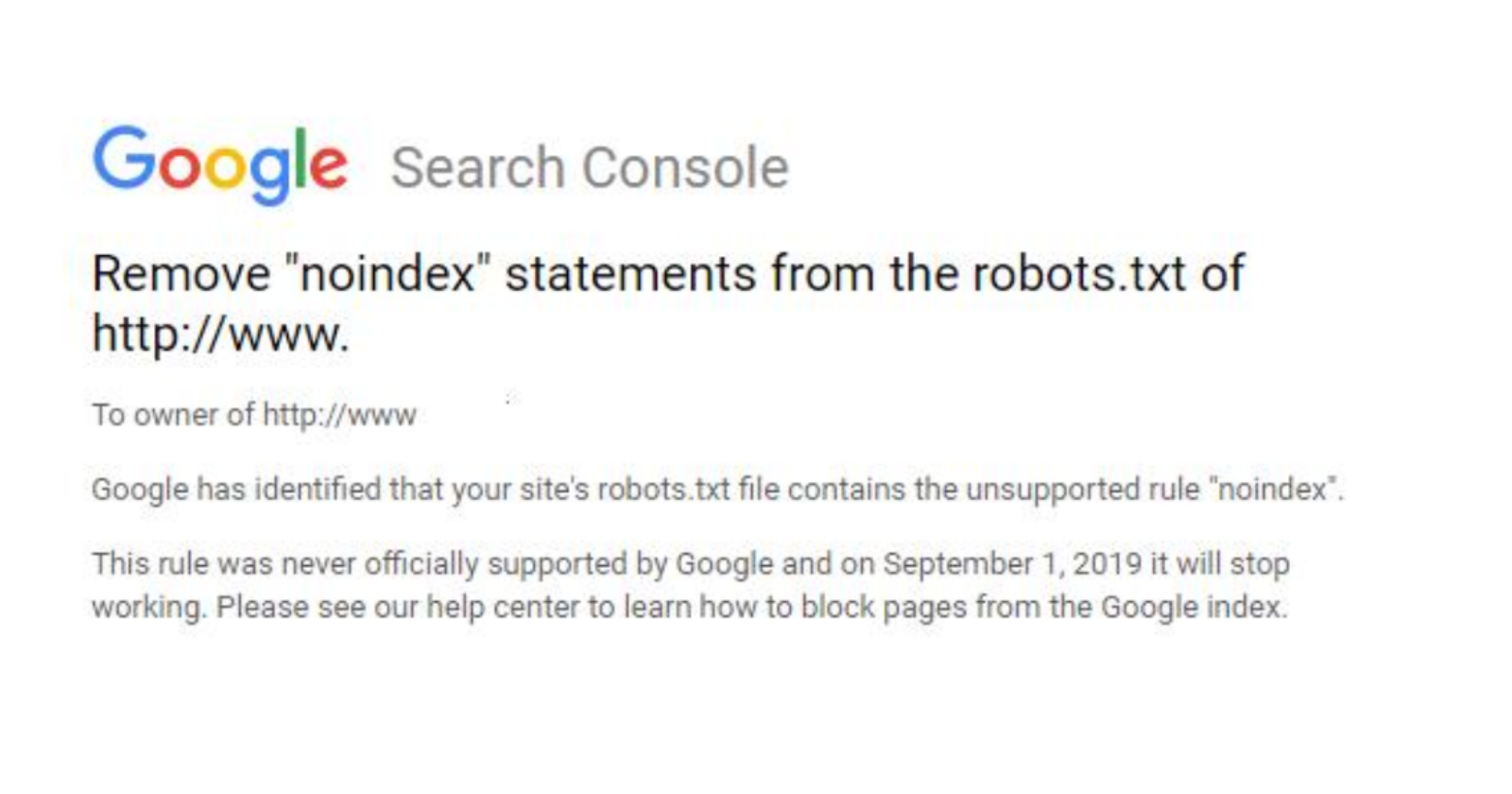

The email reads:

“Google has identified that your site’s robots.txt file contains the unsupported rule “noindex”. This rule was never officially supported by Google and on September 1, 2019 it will stop working. Please see our help center to learn how to block pages from the Google index.”

These notices come just weeks after Google announced that it would end support for the noindex rule.

For now, Googlebot still obeys the noindex directive and will continue to do so until September 1, 2019. After that date, site owners will need to use an alternative.
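Site owners who are unsure whether their robots.txt still contains the rule can check for it with a few lines of code. The following is a minimal Python sketch, not an official tool: the function name `find_noindex_rules` and the sample file contents are made up for illustration, and it assumes the rule is written as a `Noindex:` line, the form commonly used in robots.txt files.

```python
import re

# Matches a rule such as "Noindex: /private/" (case-insensitive, leading spaces allowed).
NOINDEX_RULE = re.compile(r"^\s*noindex\s*:", re.IGNORECASE)


def find_noindex_rules(robots_txt: str) -> list[tuple[int, str]]:
    """Return (line_number, line) pairs for every noindex rule in the text."""
    hits = []
    for number, line in enumerate(robots_txt.splitlines(), start=1):
        # Drop anything after "#" so commented-out rules are not flagged.
        rule = line.split("#", 1)[0]
        if NOINDEX_RULE.match(rule):
            hits.append((number, line.strip()))
    return hits


# Hypothetical robots.txt contents, for illustration only.
sample = """User-agent: *
Disallow: /tmp/
Noindex: /drafts/
# Noindex: /old/ (commented out)
"""

for number, line in find_noindex_rules(sample):
    print(f"line {number}: {line}")
```

Any line the sketch reports is one that would need to be replaced with a supported alternative before the September 1 cutoff.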

Technically, as the email states, Google was never obligated to support the noindex directive in the first place. It is an unofficial rule that Google began honoring once site owners started using it widely.

The lack of a standardized set of rules for robots.txt is a separate issue, and one that Google is working to resolve.

Until a standard list of rules is established, it’s probably best not to rely solely on unofficial rules.

Here are some other options for blocking a page from being indexed:

- A noindex meta tag directly in the page’s HTML code

- 404 and 410 HTTP status codes

- Password protection

- Disallow in robots.txt

- Search Console Remove URL tool
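To make the first two options concrete, here is a minimal Python sketch of how a site might serve them. It is an illustration under stated assumptions, not a recommended implementation: the `respond` function and the page paths are hypothetical, and a real site would set these responses through its web server or CMS.

```python
# The robots meta tag that asks search engines not to index a page.
NOINDEX_META = '<meta name="robots" content="noindex">'

# Hypothetical paths, for illustration only.
HIDDEN_PAGES = {"/drafts/post.html"}   # live pages that should stay out of the index
REMOVED_PAGES = {"/old/promo.html"}    # pages that have been deleted for good


def respond(path: str) -> tuple[int, str]:
    """Return an (HTTP status code, response body) pair for the requested path."""
    if path in REMOVED_PAGES:
        # 410 Gone signals that the page was removed deliberately.
        return 410, "Gone"
    if path in HIDDEN_PAGES:
        # The page is still served, but its <head> carries the noindex meta tag.
        html = (
            f"<html><head>{NOINDEX_META}<title>Draft</title></head>"
            "<body>Draft</body></html>"
        )
        return 200, html
    # Anything else in this sketch is treated as not found.
    return 404, "Not Found"


status, body = respond("/drafts/post.html")
print(status, NOINDEX_META in body)
```

The meta tag only works if the crawler is allowed to fetch the page, so a page relying on it should not also be disallowed in robots.txt.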