Split tests (A/B tests) are crucial to long-term PPC success because they show you which changes are actually driving your results and profit.

Structuring a test correctly is just as important as giving it enough time to generate data you can act on. A reader asks:

“How do you set up a split test? Do you recommend only testing one variable (i.e., creative or copy or where the ads are placed)? Anything else you think could help to go from 0>1 would be awesome!”

In this Ask the PPC post, we’ll go over:

- What Are Split Tests?

- Tips for Structuring Successful A/B Tests.

- How to Evaluate & Act on Tests.

While this post will approach split testing from a PPC mindset, the ideas discussed can be applied to all digital marketing channels.

What Are Split Tests?

Split tests (or A/B tests) compare two versions of a single campaign element to see which one performs better.

These tests can focus on:

- Ad copy creative.

- Audience targets.

- Landing pages.

- Calls to action.

- Visual elements on a page/ad.

- Email/call script.

- User workflow.

- Any other element of the acquisition/retention process.

You’ll need to decide what will remain consistent and which element will be your variable.

The variable is the single element you are testing. It should be the only thing that differs between your test and your control.

The control is your current campaign setup, and it should run at the same time as your test so that both versions face the same conditions.
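Many ad platforms include experiment tools that split traffic for you. If you split traffic yourself, for example between two landing-page versions, one common approach is to bucket each visitor deterministically so they always see the same version. A minimal sketch, assuming you already have a stable visitor ID (such as a first-party cookie value); the `assign_variant` helper, the experiment name, and the 50/50 default are illustrative:

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically assign a visitor to 'control' or 'treatment'.

    Hashing the experiment name together with the visitor ID keeps each
    visitor in the same group on every visit, while different experiments
    get independent splits. (Hypothetical helper for illustration.)
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 8 hex characters (32 bits) to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "treatment" if bucket < treatment_share else "control"


if __name__ == "__main__":
    for vid in ["visitor-001", "visitor-002", "visitor-003"]:
        print(vid, assign_variant(vid, "landing-page-cta-test"))
```

Because the assignment is computed from a hash, no lookup table is needed, and including the experiment name in the hash keeps the splits for different tests independent of each other.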

Tips for Structuring A/B Tests

The most difficult part of split testing is setting it up so you can get actionable insights.

Common pitfalls include:

- Too many variables: Changing more than one variable at once makes it impossible to tell which change caused the result, which casts doubt on the validity of the test.

- Ending tests too early: Split testing only works if you reach statistical significance, which rarely happens in a day.

- No success/failure measures from the outset: If you don’t know what you’re hoping to achieve, the test will be meaningless and likely a waste of time and money.

Many PPC professionals treat 10,000 sessions as the minimum for statistical significance, but some brands won’t hit that in a quarter, much less in 30 days.

That’s why it’s important to set realistic timeframes and milestones for your business.

Getting at least 1,000 sessions is a reasonable threshold, as is letting a test run for 30 to 60 days.
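These session counts are rules of thumb; how many sessions a test actually needs depends on your baseline conversion rate and the smallest lift you care about detecting. As a rough check, the sketch below uses a common normal-approximation formula for comparing two conversion rates. The `sessions_per_variant` helper is hypothetical, and the 3% baseline, 20% relative lift, 5% significance level, and 80% power are illustrative assumptions rather than recommendations from this post.

```python
import math
from statistics import NormalDist


def sessions_per_variant(baseline_rate: float, relative_lift: float,
                         alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate sessions needed in EACH arm of a two-sided A/B test on
    conversion rate, using the normal approximation for two proportions."""
    if relative_lift == 0:
        raise ValueError("relative_lift must be non-zero")
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)       # rate we hope the variant reaches
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


if __name__ == "__main__":
    # Hypothetical example: 3% baseline conversion rate, hoping for a 20% relative lift.
    print(sessions_per_variant(0.03, 0.20))
```

With these illustrative inputs the function returns roughly 13,900 sessions per variant, so low baseline conversion rates and small expected lifts can push the required traffic beyond simple rules of thumb, while larger expected lifts need far fewer sessions.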

Once you have your data, you can act on it: keep the current baseline, or evolve your campaign by fully adopting the variable.
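Before choosing between the baseline and the variant, it helps to check whether the observed difference is larger than random noise. A minimal sketch, assuming conversion rate is your success metric, is a two-sided two-proportion z-test; the `two_proportion_z_test` helper, the 0.05 threshold, and the session and conversion counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference in conversion rates.

    Returns (z, p_value). Uses the pooled rate under the null hypothesis
    that control (A) and variant (B) convert at the same rate.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: control 300/10,000 sessions, variant 360/10,000 sessions.
    z, p = two_proportion_z_test(300, 10_000, 360, 10_000)
    decision = "adopt the variant" if p < 0.05 and z > 0 else "keep the baseline"
    print(f"z = {z:.2f}, p = {p:.4f} -> {decision}")
```

This check assumes you fix the sample size in advance and evaluate once at the end; repeatedly checking and stopping as soon as p dips below 0.05 inflates false positives. A small p-value also says nothing on its own about whether the lift is big enough to matter for your business.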

Meaningful tests have success/failure measures that ensure you get value from the testing period. These might be:

- Time on site.

- Average order value.

- Conversion rate.

- ROI.
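Each of these measures is a simple ratio, so it is worth agreeing on the exact definitions before the test starts. The sketch below shows one common way to compute three of them per variant; the `VariantResults` record, its fields, and the figures are hypothetical, and ROI is assumed here to be (revenue minus ad spend) divided by ad spend, which may differ from how your business calculates it.

```python
from dataclasses import dataclass


@dataclass
class VariantResults:
    sessions: int
    orders: int
    revenue: float
    ad_spend: float

    def conversion_rate(self) -> float:
        return self.orders / self.sessions

    def average_order_value(self) -> float:
        return self.revenue / self.orders

    def roi(self) -> float:
        # Assumed definition: (revenue - ad spend) / ad spend; ignores product and other costs.
        return (self.revenue - self.ad_spend) / self.ad_spend


if __name__ == "__main__":
    control = VariantResults(sessions=5_000, orders=150, revenue=9_000.0, ad_spend=4_000.0)
    variant = VariantResults(sessions=5_000, orders=175, revenue=9_800.0, ad_spend=4_000.0)
    for name, r in [("control", control), ("variant", variant)]:
        print(f"{name}: CR={r.conversion_rate():.2%}, "
              f"AOV=${r.average_order_value():.2f}, ROI={r.roi():.0%}")
```

In this made-up example the variant converts more often but at a lower average order value, which is exactly why committing to one primary metric up front matters.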

Whatever metric you pick, stick with it and own the outcome, whether the test succeeded or failed. Getting emotionally attached to creative or strategies before the data vets them can corrupt the test, so stay objective.

How to Evaluate & Act on Tests

Judging whether a change is ready to ship based on an A/B test can get complicated. It involves digging through a wide range of metrics (some helpful, some not) to understand how users are experiencing your changes.

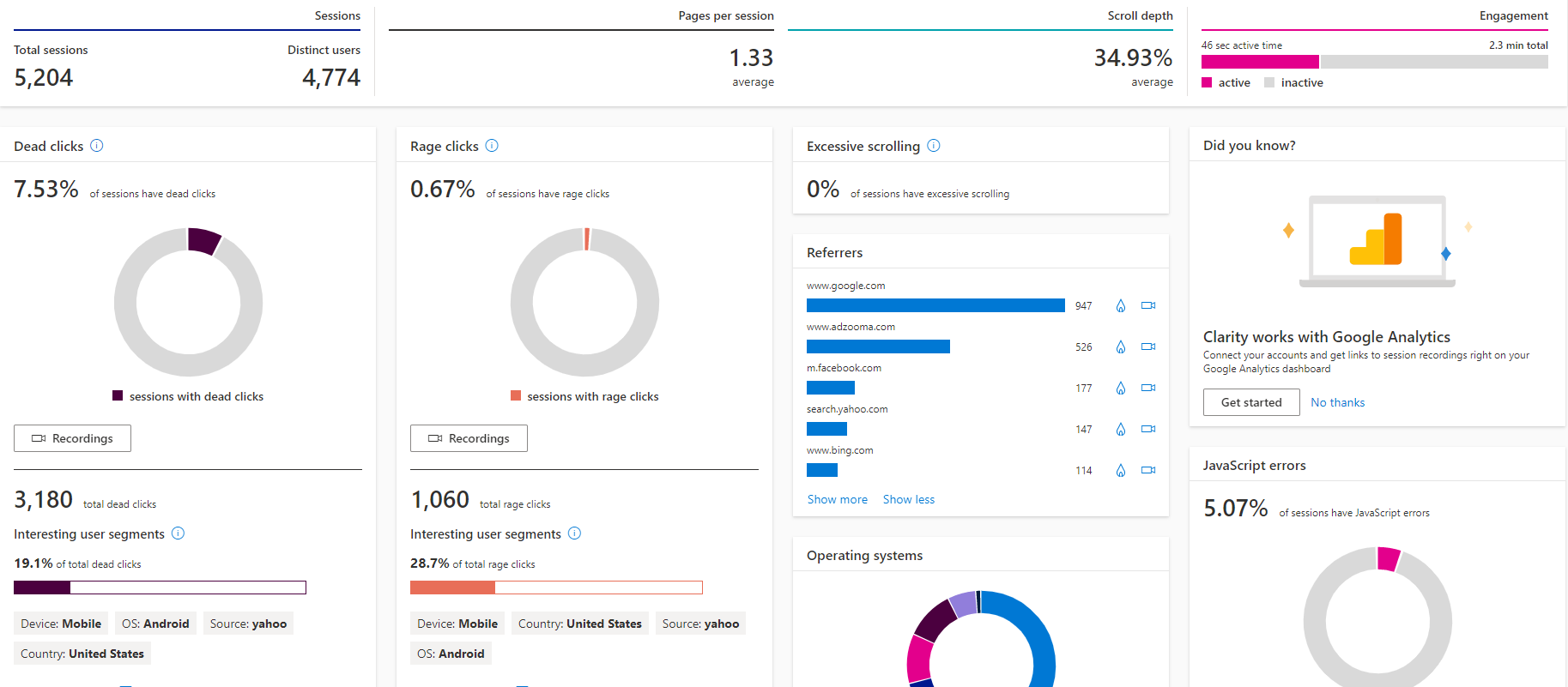

Behavioral analytics streamlines this process by turning raw data into a visual picture of how users interact with your site.

It helps you understand the results of your A/B test more efficiently: whether your hypothesis was validated, what surprises emerged, whether the treatment is good enough to ship, or whether more iteration is needed.

Behavioral analytics tools such as Microsoft Clarity show you why your metrics moved, not just how.

How to Use Clarity Features in A/B Testing

Session Recordings

A/B tests will show metrics moving in both directions, and session recordings can help explain why by letting you watch how real users behaved.

Screenshot from Microsoft Clarity, September 2021

Heatmaps

Use the aggregate view to compare treatment and control and see whether key metrics for specific parts of the page are moving as intended.

Click heatmaps

Understand click engagement on your treatment vs. control.

- Compares where users direct their attention among CTAs in each version.

- Surfaces distracting content and unanticipated areas of confusion (like clicks on static content).

- Summarizes overall interaction patterns with the new feature.

Scroll heatmaps

Understand scroll depth on your treatment vs. control.

- Compares readership: how much of your page users are likely reading.

- Helps troubleshoot discovery questions (e.g., what percentage of users actually saw a specific CTA or paragraph).
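Scroll heatmaps answer the discovery question visually, but the underlying calculation is simple. Assuming you have each session's maximum scroll depth as a percentage of page height (a hypothetical export format, not a specific Clarity feature), the share of sessions that reached a CTA is the fraction whose depth is at or beyond the CTA's position. The `share_reaching` helper and the sample depths are illustrative.

```python
def share_reaching(max_scroll_depths: list[float], cta_position: float) -> float:
    """Fraction of sessions whose maximum scroll depth (0-100% of page height)
    reached at least the CTA's position on the page."""
    if not max_scroll_depths:
        return 0.0
    reached = sum(1 for depth in max_scroll_depths if depth >= cta_position)
    return reached / len(max_scroll_depths)


if __name__ == "__main__":
    # Hypothetical per-session max scroll depths for each version of the page.
    control = [20, 35, 50, 60, 90, 100, 45, 30]
    treatment = [40, 65, 70, 80, 95, 100, 55, 75]
    # In this example the CTA sits 60% of the way down the page in both versions.
    for name, depths in [("control", control), ("treatment", treatment)]:
        print(f"{name}: {share_reaching(depths, 60):.0%} of sessions reached the CTA")
```

If the CTA sits at a different depth in each version, pass each version its own position rather than a shared one.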

Screenshot from Microsoft Clarity, September 2021

Rage Clicks

- When you introduce a new UX, do users understand how to use the feature, and does it work as expected across all edge cases?

- Identify whether further iteration is needed (are users unexpectedly frustrated with some part of the new experience?)

- Identify if learnability is an issue (are users not understanding how to use the new feature?)
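Clarity flags rage clicks for you. To make the idea concrete, here is a rough heuristic of the same kind under assumed thresholds: treat three or more clicks within one second, all near the same spot, as a rage-click burst. The `Click` record, the thresholds, and the `find_rage_clicks` helper are hypothetical and are not Clarity's actual detection logic.

```python
import math
from dataclasses import dataclass


@dataclass
class Click:
    t: float  # seconds since session start
    x: float  # page coordinates in pixels
    y: float


def find_rage_clicks(clicks: list[Click], min_clicks: int = 3,
                     window_s: float = 1.0, radius_px: float = 30.0) -> list[list[Click]]:
    """Return bursts of rapid, repeated clicks in roughly the same spot.

    Hypothetical heuristic: at least `min_clicks` consecutive clicks within
    `window_s` seconds, each within `radius_px` pixels of the burst's first click.
    """
    clicks = sorted(clicks, key=lambda c: c.t)
    bursts = []
    i = 0
    while i < len(clicks):
        first = clicks[i]
        burst = [first]
        j = i + 1
        while (j < len(clicks)
               and clicks[j].t - first.t <= window_s
               and math.hypot(clicks[j].x - first.x, clicks[j].y - first.y) <= radius_px):
            burst.append(clicks[j])
            j += 1
        if len(burst) >= min_clicks:
            bursts.append(burst)
            i = j  # skip past the burst so it is counted once
        else:
            i += 1
    return bursts


if __name__ == "__main__":
    session = [Click(1.0, 200, 300), Click(1.2, 202, 298), Click(1.5, 205, 301),
               Click(5.0, 600, 100)]
    print(len(find_rage_clicks(session)))  # 1
```

Comparing the number of sessions with at least one burst in treatment vs. control is one way to spot a new experience that frustrates users.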

Helpful Filters for A/B Testing

UTM filters: slice results by traffic source.

- Is traffic from certain referrers more successful under one treatment than another (e.g., more reading, longer session duration, higher CTR, more overall conversions)?

- View entire sessions for each treatment by source: are users exploring different pages or using your treatments differently?
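If you export sessions along with their landing URLs and test group, the same "slice by source" question can be answered with a few lines of code. This is a sketch under assumptions: the session records, their field names, and the `utm_source` and `conversion_by_source` helpers are hypothetical; `utm_source` is the standard UTM parameter name, and the parsing uses Python's standard `urllib.parse`.

```python
from collections import defaultdict
from urllib.parse import parse_qs, urlparse


def utm_source(landing_url: str) -> str:
    """Read the utm_source parameter from a landing-page URL ('(none)' if absent)."""
    params = parse_qs(urlparse(landing_url).query)
    return params.get("utm_source", ["(none)"])[0]


def conversion_by_source(sessions: list[dict]) -> dict[tuple[str, str], float]:
    """Conversion rate for each (traffic source, variant) pair."""
    totals = defaultdict(lambda: [0, 0])  # key -> [sessions, conversions]
    for s in sessions:
        key = (utm_source(s["landing_url"]), s["variant"])
        totals[key][0] += 1
        totals[key][1] += int(s["converted"])
    return {key: conv / n for key, (n, conv) in totals.items()}


if __name__ == "__main__":
    sessions = [
        {"landing_url": "https://example.com/?utm_source=google&utm_medium=cpc", "variant": "A", "converted": True},
        {"landing_url": "https://example.com/?utm_source=google&utm_medium=cpc", "variant": "B", "converted": False},
        {"landing_url": "https://example.com/?utm_source=bing&utm_medium=cpc", "variant": "B", "converted": True},
        {"landing_url": "https://example.com/", "variant": "A", "converted": False},
    ]
    for (source, variant), rate in sorted(conversion_by_source(sessions).items()):
        print(f"{source:>8} | {variant} | {rate:.0%}")
```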

Custom tags: distinguish control sessions from treatment sessions.

- Add tags that record which treatment each session saw.

- Stack additional filters, e.g., see sessions where treatment = A AND the user did XYZ (such as clicking a specific button or visiting the contact page).
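Under the hood, a stacked filter is just a conjunction of conditions. Assuming you have session records that carry their custom tags (the `Session` record, its fields, and the `filter_sessions` helper are hypothetical illustrations, not Clarity's data model or API), "treatment = A AND the user visited the contact page" might look like this:

```python
from collections.abc import Callable
from dataclasses import dataclass, field


@dataclass
class Session:
    session_id: str
    tags: dict[str, str]                            # custom tags, e.g. {"experiment_group": "A"}
    clicked: set[str] = field(default_factory=set)  # IDs of elements the user clicked
    pages: list[str] = field(default_factory=list)  # page paths visited, in order


def filter_sessions(sessions: list[Session], tag_key: str, tag_value: str,
                    condition: Callable[[Session], bool]) -> list[Session]:
    """Keep sessions tagged tag_key == tag_value AND satisfying an extra condition."""
    return [s for s in sessions
            if s.tags.get(tag_key) == tag_value and condition(s)]


def visited_contact_page(s: Session) -> bool:
    return "/contact" in s.pages


if __name__ == "__main__":
    sessions = [
        Session("s1", {"experiment_group": "A"}, {"cta-button"}, ["/", "/contact"]),
        Session("s2", {"experiment_group": "B"}, set(), ["/", "/contact"]),
        Session("s3", {"experiment_group": "A"}, set(), ["/", "/pricing"]),
    ]
    matches = filter_sessions(sessions, "experiment_group", "A", visited_contact_page)
    print([s.session_id for s in matches])  # ['s1']
```

Swapping in a different condition, such as a check on `clicked`, stacks a different behavior onto the same treatment filter.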

Takeaway

Split tests are a critical element of running successful PPC campaigns.

They’ll yield the best results when you go in with a clear idea of what you want to test and what success/failure looks like.


Have a question about PPC? Submit via this form or tweet me @navahf with the #AskPPC tag. See you next month!

Featured Image: Paulo Bobita/Search Engine Journal
