Advertisers looking to optimize their performance have long used A/B testing to improve their ads. It’s well understood how this is done with expanded text ads (ETAs).

But recently, Google has announced it will sunset ETAs, making responsive search ads (RSAs) the main text ad format from July 1, 2022 onward.

It’s time to revisit our ad testing best practices.

What Is A/B Ad Testing?

Even novice PPC advertisers with no clear ad testing goal have probably already done quite a bit of A/B ad testing by taking advantage of the ability to add multiple ads to an ad group.

This is done so that Google can show the better-performing one more often.

Optimized rotation is, after all, the default setting for ad rotation.

Screenshot from Google, December 2021

More advanced advertisers usually choose the option to rotate ads indefinitely and use their own methodology to pick winners and losers. Rather than focusing on CTR, these advertisers tend to care more about conversion rate.

But solely focusing on conversion rate ignores the possibility that an ad with a high conversion rate may get very few clicks due to a low CTR and hence leave the advertiser with fewer total conversions.

By combining CTR and conversion rate into a metric called conversions per impression (CTR × conversion rate = conv/imp), advertisers get a better measure of which ad best meets one of their goals: maximizing conversions.

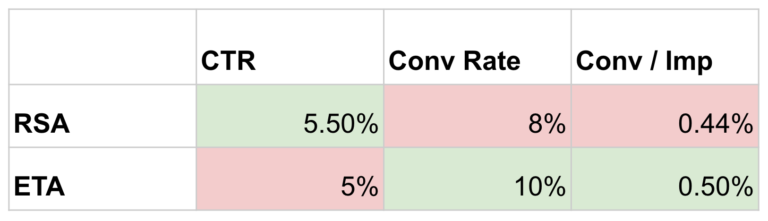

In the following example, you can see a traditional A/B test between an RSA and an ETA.

Based on performance data from Optmyzr’s recent RSA study (disclosure: my company), the average RSA has a 10% better CTR but a 20% lower conversion rate than an ETA in the same ad group.

Combining these two metrics, the RSA is 12% worse than the ETA at turning an impression into a conversion.

Image by author, December 2021

This finding helps explain why some advertisers remain hesitant to go all-in on RSAs: by traditional A/B measurement standards, they appear to perform worse.
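The 12% figure above can be reproduced with a few lines of Python. This is a minimal sketch, not a description of how the study was computed: the function names are our own for illustration, and the only inputs are the two study averages quoted earlier (CTR 10% better, conversion rate 20% lower).

```python
def conversions_per_impression(impressions: int, clicks: int, conversions: int) -> float:
    """Return conversions per impression, i.e. CTR * conversion rate."""
    if impressions == 0 or clicks == 0:
        return 0.0
    ctr = clicks / impressions
    conv_rate = conversions / clicks
    return ctr * conv_rate


def relative_conv_per_imp(ctr_change: float, conv_rate_change: float) -> float:
    """Relative change in conv/imp given relative changes in CTR and conversion rate.

    Changes are fractions: +0.10 means 10% better, -0.20 means 20% worse.
    """
    return (1 + ctr_change) * (1 + conv_rate_change) - 1


# Study averages quoted above: RSA CTR +10%, conversion rate -20% vs. the ETA.
rsa_vs_eta = relative_conv_per_imp(0.10, -0.20)
print(f"RSA conv/imp vs. ETA: {rsa_vs_eta:+.0%}")  # prints "RSA conv/imp vs. ETA: -12%"
```

The first helper works from raw counts exported from an account. The second works from relative differences, which is all the study averages give us.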

Why Traditional A/B Testing Methods Don’t Work For RSAs

But just as conversion rate alone paints an incomplete picture of which ad performs best, conv/imp provides an incomplete picture when RSAs are added to the mix.

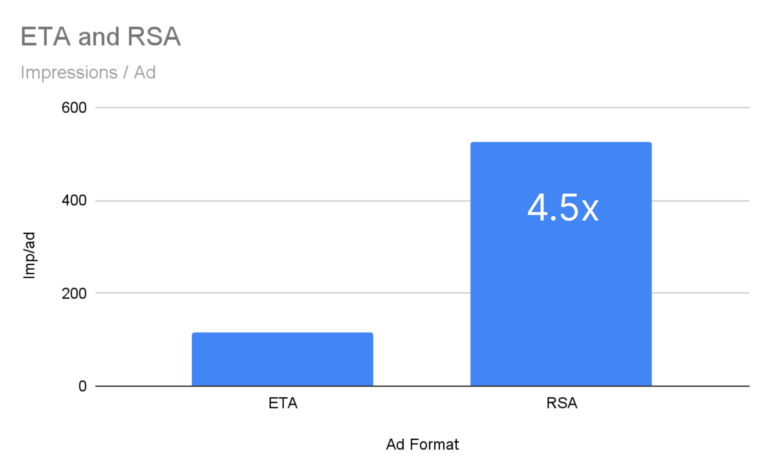

Here’s the problem. RSAs qualify for far more impressions than ETAs. Based on our study, RSAs are so much better at getting a good Ad Rank that they can show for about 4 times as many queries.

All else being equal, an RSA gets about 4 times the impressions of an ETA.

RSAs get far more impressions than ETAs because they get a better Ad Rank for more queries.

Image by author, December 2021

The metric of ‘impressions per ad’ was calculated by taking the total impressions of a particular ad type and dividing them by the number of ads of that type.
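As a rough illustration of that calculation, here is a short Python sketch. The rows are made-up numbers chosen to mirror the roughly 4x gap described above. They are not data from the study, and the function name is our own.

```python
from collections import defaultdict

# Hypothetical rows: (ad_type, impressions) for each ad in the sample.
ads = [
    ("RSA", 4000),
    ("RSA", 3600),
    ("ETA", 1000),
    ("ETA", 900),
    ("ETA", 950),
]


def impressions_per_ad(rows):
    """Total impressions of each ad type divided by the number of ads of that type."""
    totals = defaultdict(int)
    counts = defaultdict(int)
    for ad_type, impressions in rows:
        totals[ad_type] += impressions
        counts[ad_type] += 1
    return {ad_type: totals[ad_type] / counts[ad_type] for ad_type in totals}


per_ad = impressions_per_ad(ads)
print(per_ad)  # {'RSA': 3800.0, 'ETA': 950.0}
```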

In the study, we separately analyzed ad groups with only RSAs, those with only ETAs, and those with both ad types.

The number in the chart above is based on over 157,000 ad groups that contained both ad formats. Even when we looked at ad groups with just one ad format, the ad groups with RSAs had more than twice as many impressions per ad group as those with only ETAs.

So our old assumption that RSAs and ETAs compete for the same pool of impressions based solely on the targeted keywords in an ad group is wrong.

Since RSAs get far more impressions than ETAs, even with slightly worse performance on the conversions per impression metric, they are still much more likely to drive more conversions.

RSA A/B Tests Must Include Impressions In The Analysis

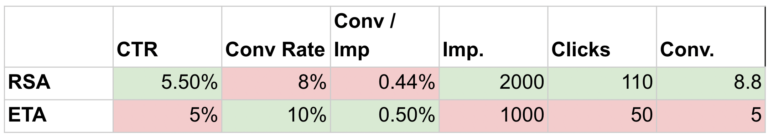

A more accurate way of determining the winning ad when RSAs are part of the mix is to incorporate impressions into the analysis as shown in this graphic.

Image by author, December 2021

Any A/B ad test including RSAs should include impression data to be able to measure incrementality.

As explained before, the average RSA has a 12% worse conversions-per-impression rate than an ETA. If we conservatively assume the RSA gets double the impressions of the ETA, it nets 76% more conversions (0.88 × 2 = 1.76).

So RSAs are the real winner if you care about getting lots of conversions.
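The same arithmetic in Python, as a sketch you could adapt to your own numbers. The function name is ours. The two inputs are the figures used above: a conv/imp ratio of 0.88 and an assumed 2x impression ratio.

```python
def relative_conversions(conv_per_imp_ratio: float, impression_ratio: float) -> float:
    """Conversions of the challenger relative to the baseline (1.0 means equal)."""
    return conv_per_imp_ratio * impression_ratio


# From the text above: RSA conv/imp is 12% worse (0.88x) and its impressions
# are conservatively assumed to be double (2x) those of the ETA.
ratio = relative_conversions(0.88, 2.0)
print(f"RSA conversions vs. ETA: {ratio - 1:+.0%}")  # prints "RSA conversions vs. ETA: +76%"
```

In your own tests, replace both ratios with values measured over the same date range for the two ads being compared.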

When shifting your A/B ad tests from ETAs to RSAs, it’s critical that you include impression volumes to be able to get at the true incrementality of RSAs.

Side note: Conversions are usually not the true goal of advertisers as profits matter, too. As more advertisers are now using automated bidding, this article won’t cover how to ensure these additional conversions are profitable.

That should be handled automatically by setting correct goals and feeding correct conversion data into the ad platform.

Even if you are A/B testing only ETAs, consider impressions. Historically, the reason was to ensure that the ads being compared had an equal opportunity to prove themselves.

If one ad was much newer than the other, it would have far fewer impressions, which would invalidate the test. Or if one ad was running only on weekends and the other only on weekdays, there would be a similar discrepancy in impression volume that would invalidate the test.

Now, even when two RSAs run on the same days and have been active equally long, they may still produce discrepancies in impressions because one is better than the other at qualifying itself for more auctions.

Be sure to consider impression volume in all of your evaluations.

Now that we’ve established a better way to measure the results, let’s look at some ways to find what to test when creating new ad variations to challenge existing ads.

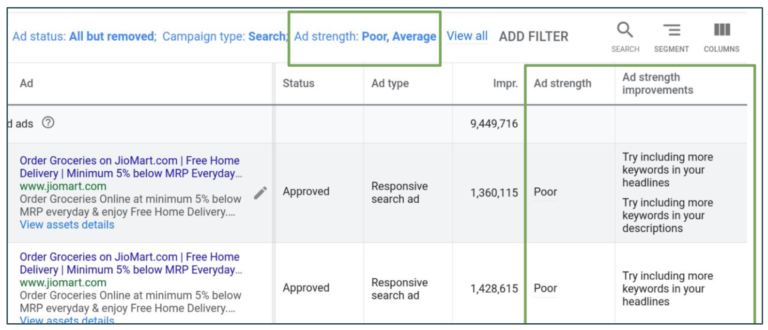

Ad Strength Indicates Whether You Follow Best Practices

As you write a new RSA, Google instantly provides feedback in the form of an Ad Strength indicator.

Ad strength is a best-practice score that measures the relevance, quantity, and diversity of your responsive search ad content even before your RSAs serve.

According to presentations by Google, each one-step increase in ad strength corresponds to approximately a 3% uplift in clicks.

So going from ‘poor’ to ‘average’ should lead to about 3% more clicks, and going from ‘average’ to ‘good’ will drive another 3% in clicks.

Screenshot from Google, December 2021

Ad strength is most useful while you are creating a new RSA. The key point is that it is a ‘best practice’ score: it measures how closely you follow Google’s guidelines, not how the ad actually performs.

Just as it’s possible to have a keyword with a low Quality Score that drives valuable conversions for your account, you can have an ad with poor ad strength that is winning against other ads with better scores.

Try to follow Google’s guidelines so that you start your next test with the best chances of winning.

But don’t get stuck trying to get the perfect score. The actual performance will be reflected in your asset performance grouping labels, so let’s take a look at those next.

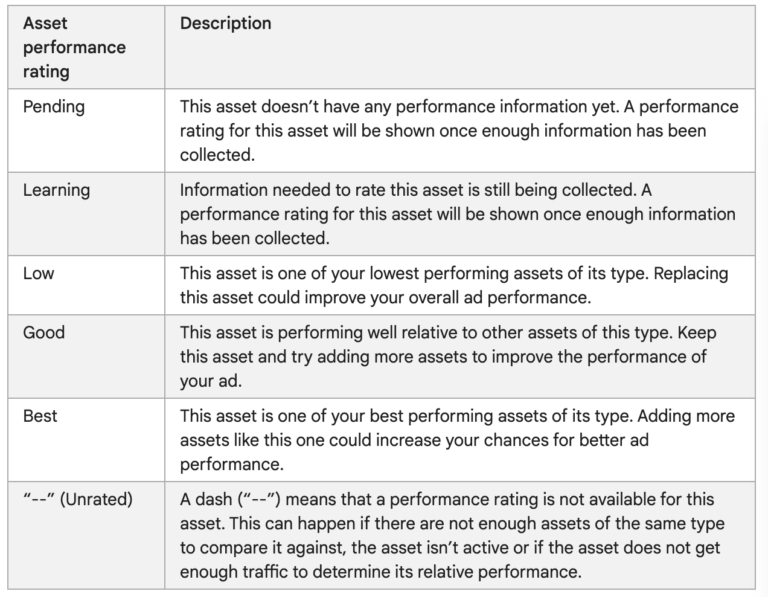

Asset Performance Labels Reflect Your Performance

Once your RSA has accrued about 5,000 impressions at the top of the search pages over the past 30 days, asset labels give you guidance on which pieces of text are performing well and which you should consider optimizing.

Google publishes a useful table of what the different performance grouping labels mean:

Screenshot from Google, December 2021

What’s most important is to replace any assets with the ‘Low’ label and to also replace assets that haven’t gotten any impressions after more than two weeks.

Remember, what you see in this assets report is based on actual performance. It’s more important to optimize based on this than on the ad strength score.
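If you export your asset report, the replacement rule above (replace ‘Low’ assets and assets with no impressions after more than two weeks) is easy to automate. The sketch below assumes a hypothetical `Asset` record with a label, an impression count, and the number of days the asset has been live. The field names and sample rows are ours for illustration and are not a Google Ads export format.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    text: str
    label: str        # performance label from the assets report, e.g. "Low"
    impressions: int
    days_live: int


def assets_to_replace(assets, min_days_without_impressions=14):
    """Return assets labeled 'Low' or with zero impressions after more than two weeks."""
    flagged = []
    for asset in assets:
        is_low = asset.label.lower() == "low"
        never_served = (
            asset.impressions == 0 and asset.days_live > min_days_without_impressions
        )
        if is_low or never_served:
            flagged.append(asset)
    return flagged


# Hypothetical sample rows.
sample = [
    Asset("Huge Selection Of Shoes", "Best", 4200, 30),
    Asset("Shop Shoes Online", "Low", 900, 30),
    Asset("Free Returns For 60 Days", "Learning", 0, 21),
    Asset("New Arrivals Weekly", "Learning", 0, 5),
]
flagged = assets_to_replace(sample)
print([a.text for a in flagged])  # ['Shop Shoes Online', 'Free Returns For 60 Days']
```

The last asset is not flagged because it has been live for fewer than two weeks and may simply not have had a chance to serve yet.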

Testing Ideas

Many ads won’t accrue enough top impressions in a 30-day window to show performance data, but there are still ways to optimize if this is the case for you.

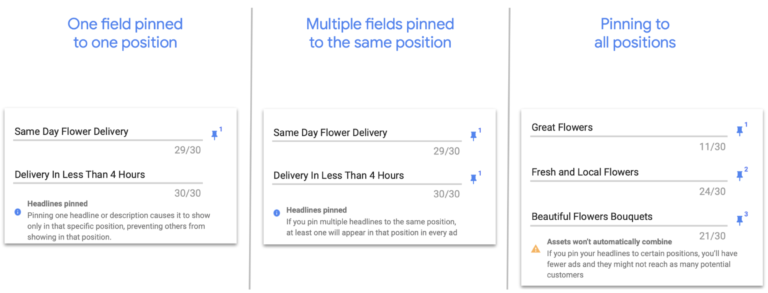

Remember, Google allows up to three RSAs per ad group and lets you pin specific pieces of text to particular positions in the ad.

With this, you can construct some useful experiments.

Screenshot from Google, December 2021

Advertisers can now pin multiple assets to a single location in an RSA.

The first experiment to try is based on messaging.

Create multiple RSAs, each with a different core message. The key messaging elements of any ad, whether RSA or ETA, consist of the company name, a unique value proposition, and a call to action.

The value proposition and call to action are the best ones to experiment with since your brand is your brand and for most advertisers, there’s not a whole lot of room to get creative with that.

For example, the first RSA could be more focused on the large selection your site offers, while the second could focus on another value proposition like how highly your site is rated by past customers.

Then, when you run your A/B testing process, you will find which message resonates more and leads to more conversions.

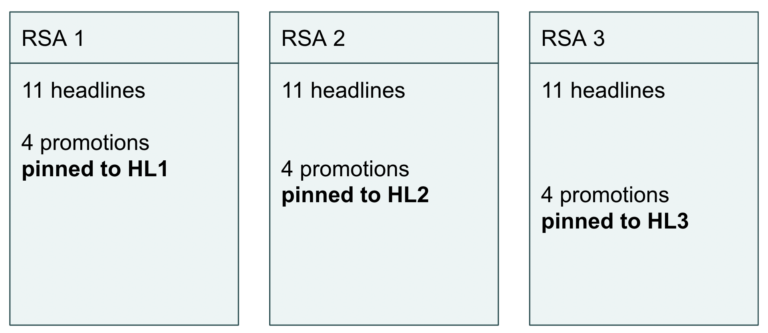

A second experiment takes the messages that worked well and tests them in different positions.

You can do this by pinning a group of assets to different positions like a particular headline or description.

Google now allows multiple assets to be pinned to a location, so if you have 4 assets for your value proposition, you can pin all 4 to the desired location. Then, when you run your A/B test, you may find that the value proposition performs best when pinned to the headline 2 position.

Image by author, December 2021

Pinning

Whether to pin or not is one of the biggest debates in RSAs. Once ETAs are sunset and only RSAs can be created (starting July 1, 2022), advertisers could pin one piece of text to each location to effectively turn an RSA into an ETA.

But that may defeat the whole purpose of RSAs, which is to get the machines to help construct the best possible ad for every user on the fly, thereby making the ad more relevant and hence eligible to appear for more searches.

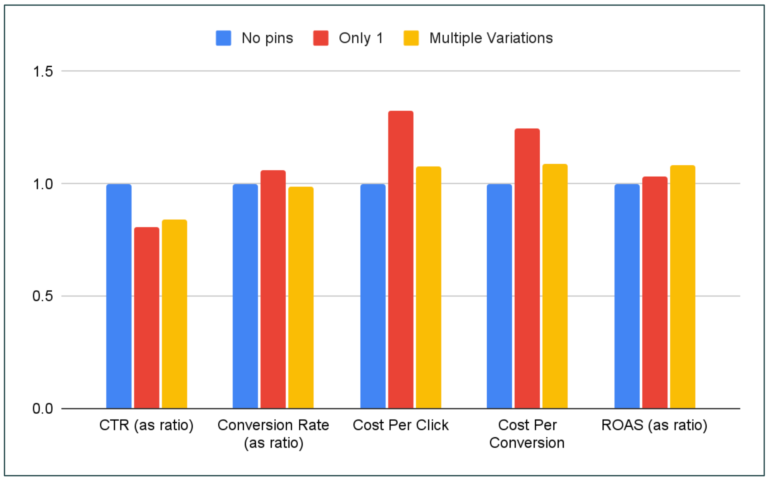

There are industries where pinning is a legal requirement. But besides those, here’s an analysis of pinning and whether it helps (or hurts) performance.

Image by author, December 2021

Pinning is ultimately about advertiser control. And what the data shows us is that the machine drives the best CTR when advertisers let the algorithms do their thing without interfering. CPC and CPA are also lower in this case.

But when advertisers want control, they are better off giving the machine at least 2 variations of a text for each pinned position.

Pinning a single piece of text to a location has the biggest negative impact on CTR, CPC, and CPA.

Pinning multiple assets seems to offer a good balance of control and performance.
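One practical way to act on this is to check your pinned RSAs for positions that have only a single asset pinned. The sketch below assumes you have your pin configuration in a simple mapping of position name to pinned assets. That structure, the position names, and the ad copy are hypothetical and do not come from any Google Ads API.

```python
def single_pinned_positions(pins):
    """Return pinned positions that have exactly one asset pinned to them."""
    return [position for position, assets in pins.items() if len(assets) == 1]


# Hypothetical pin configuration: position name -> assets pinned there.
pins = {
    "headline_1": ["Acme Shoes"],
    "headline_2": ["Over 5,000 Styles In Stock", "Rated 4.8 Stars By Customers"],
    "description_1": ["Free shipping on every order.", "Order today, free returns."],
}
print(single_pinned_positions(pins))  # ['headline_1']
```

Any position this returns is a candidate for a second pinned variation, unless a legal requirement dictates the exact text.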

Headline Variations

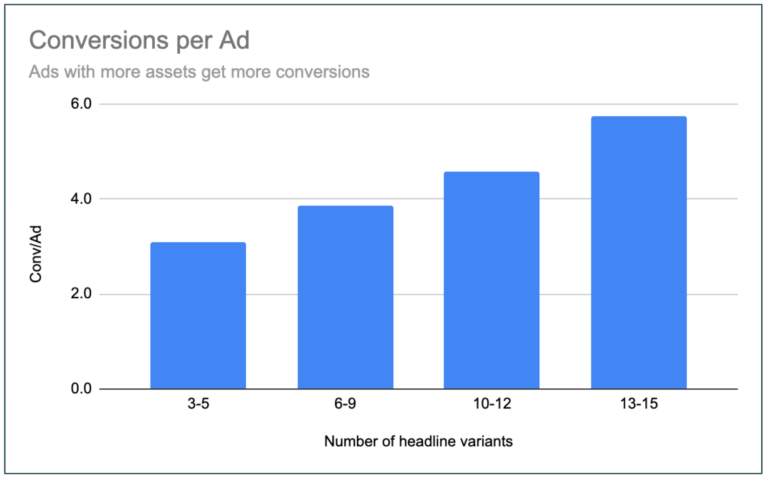

The Ad Strength indicator takes into account how many variations you provide for headlines and descriptions. Since coming up with 15 headlines can be daunting, we analyzed whether it’s really worth adding more than a couple of headlines for each RSA.

Image by author, December 2021

What we found is that ads with more headline variations drove a higher number of conversions per ad. So when A/B testing RSAs, more headlines are indeed better.

Conclusion

If you remember only one thing from this post, it’s that a fundamental assumption about ad testing must change. Impressions now also depend greatly on the ad, and no longer just the keywords of an ad group.

When A/B testing RSAs, it’s critical to account for this potential difference in impressions and try to find the ad that drives the biggest incrementality.

Over time, we expect Google will introduce more metrics for RSA assets and this will help all advertisers further build on the optimization techniques covered in this post.

Featured Image: Jane0606/Shutterstock
