If you ignore technical SEO when planning a link building campaign, you may not be reaping the full benefits of your SEO efforts.

The best results happen when you consider all the points of your website’s SEO:

- Technical SEO.

- Content.

- Links.

In fact, there are situations when you must tackle technical SEO before ever thinking about getting links.

If your website is weak in technical SEO areas, or extremely confusing for search engines, it won’t perform as well regardless of the quality and quantity of backlinks you have.

Your top goals with technical SEO are to make sure that your site:

- Is easily crawled by search engines.

- Has top cross-platform compatibility.

- Loads quickly on both desktop and mobile.

- Employs efficient implementation of WordPress plugins.

- Does not have any issues with misconfigured Google Analytics code.

These five points illustrate why it’s important to tackle technical SEO before link building.

If your site is unable to be crawled or is otherwise deficient in technical SEO best practices, you may suffer from poor site performance.

The following chapter discusses why and how you should be tackling technical SEO before starting a link building campaign.

Make Sure Your Site is Easily Crawled by Search Engines

Your HTTPS Secure Implementation

If you have recently made the jump to an HTTPS secure implementation, you may not have had the chance to audit or otherwise identify issues with your secure certificate installation.

A surface-level audit at the outset can help you identify any major issues affecting your transition to HTTPS.

Major issues can arise later on when the purchase of the SSL certificate did not initially take into account what the site would be doing later.

One thing to keep in mind is that you must take great care in purchasing your certificate and making sure it covers all the subdomains you want it to.

If you don’t, you may end up with some issues as a result, such as not being able to redirect URLs.

If you don’t get a full wildcard certificate, and you have URL parameters on a subdomain – using absolute URLs – that your certificate doesn’t cover, you won’t be able to redirect those URLs to https://.

This is why it pays to be mindful of the options you choose during the purchase of your SSL certificate because it can negatively affect your site later.
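As a rough illustration of the coverage problem, here is a minimal Python sketch that checks which hostnames a certificate's names would leave uncovered. The function names and the example hostnames are hypothetical, and the wildcard rule assumed here is the common one: a wildcard covers exactly one subdomain level.

```python
def covers(cert_name: str, hostname: str) -> bool:
    """Return True if one certificate name (exact or wildcard) covers hostname."""
    cert_name = cert_name.lower()
    hostname = hostname.lower()
    if not cert_name.startswith("*."):
        return cert_name == hostname
    # Assumption: a wildcard matches exactly one left-most label, so
    # *.example.com covers shop.example.com but not example.com itself
    # and not a.b.example.com.
    suffix = cert_name[1:]  # ".example.com"
    if not hostname.endswith(suffix):
        return False
    label = hostname[: -len(suffix)]
    return bool(label) and "." not in label


def uncovered_hosts(cert_names, hostnames):
    """List the hostnames that none of the certificate names cover."""
    return [h for h in hostnames if not any(covers(c, h) for c in cert_names)]


# Hypothetical example: a certificate bought for the bare and www domains only.
print(uncovered_hosts(
    ["example.com", "www.example.com"],
    ["example.com", "www.example.com", "blog.example.com"],
))
```

Running a check like this against the list of subdomains you plan to use can flag gaps before you buy the certificate.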

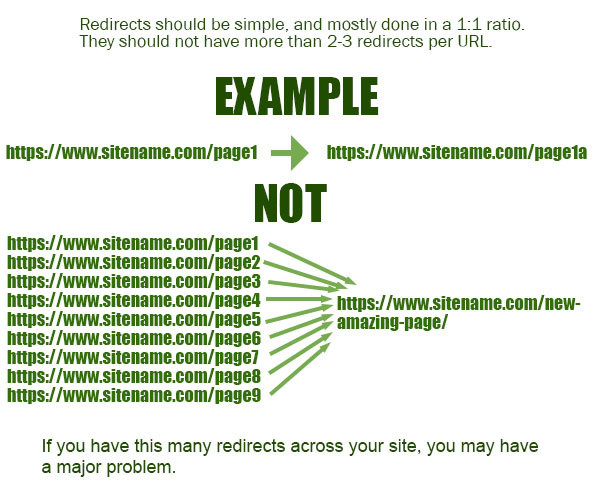

No Errant Redirects or Too Many Redirects Bogging Down Site Performance

It’s easy to create an HTTPS secure implementation with errant redirects.

For this reason, a bird’s-eye view of the site’s current redirect states will be helpful in correcting this issue.

It can also be easy to create conflicting redirects if you don’t keep watch on the redirects you are creating.

In addition, it’s easy to let redirects run out of control and end up with tens of redirects, or many more, per site URL, which in turn bogs down site performance.

The easiest way to fix this issue moving forward: make sure that your redirects are all created in a 1:1 ratio.

You should not have 10-15 or more redirect URLs per URL on your site.

If you do, something is seriously wrong.
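To make the 1:1 idea concrete, here is a small Python sketch, assuming you have exported your redirects as a simple source-to-target mapping. The function names and paths are hypothetical. It counts the hops in each chain and rewrites every source to point straight at its final destination.

```python
from __future__ import annotations


def resolve(redirects: dict[str, str], url: str, max_hops: int = 10):
    """Follow url through the redirect map and return (final_url, hops)."""
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError(f"redirect loop or overly long chain at {url}")
        seen.add(url)
    return url, hops


def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Point every source directly at its final destination (1:1)."""
    return {src: resolve(redirects, src)[0] for src in redirects}


# Hypothetical chain: /a -> /b -> /c -> /d
chain = {"/a": "/b", "/b": "/c", "/c": "/d"}
print(resolve(chain, "/a"))   # ('/d', 3)
print(flatten(chain))         # {'/a': '/d', '/b': '/d', '/c': '/d'}
```

Any URL that reports more than one hop is a candidate for cleanup, and a raised `ValueError` points to a conflicting redirect.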

Content on HTTPS & HTTP URLs Should Not Load at the Same Time

The correct implementation is that one version redirects to the other; both should not serve content.

If you have both of them loading at the same time, something is wrong with the secure version of your site.

Type your site’s URLs into your browser and test https:// and http:// separately.

If both URLs load, you are displaying two versions of your content, and duplicate URLs can lead to duplicate content issues.
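The same test can be scripted. The sketch below is one possible approach in Python, not a definitive tool: `classify` and `probe` are hypothetical names, and the labels it returns are just illustrative. It requests the http:// version without following redirects and reports whether it redirects permanently to https:// or serves content on its own.

```python
from __future__ import annotations

import http.client


def classify(status: int, location: str | None) -> str:
    """Classify the response to a plain http:// request."""
    to_https = bool(location) and location.lower().startswith("https://")
    if status in (301, 308) and to_https:
        return "ok"          # permanent redirect to the secure version
    if status in (302, 303, 307) and to_https:
        return "temporary"   # redirects to HTTPS, but not permanently
    if 200 <= status < 300:
        return "duplicate"   # http:// serves content itself
    return "unknown"         # anything else needs a manual look


def probe(host: str, path: str = "/") -> str:
    """Request http://host/path without following redirects (needs network)."""
    conn = http.client.HTTPConnection(host, timeout=10)
    try:
        conn.request("HEAD", path)
        response = conn.getresponse()
        return classify(response.status, response.getheader("Location"))
    finally:
        conn.close()


# Example call (requires network access):
# print(probe("example.com"))
```

A result of "duplicate" means both versions are loading, which is the situation described above.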

To make sure that you do not run into this issue again, you will want to do one of the following, depending on your site’s platform:

- Create a full redirect pattern in .htaccess (on Apache / cPanel servers)

- Use a redirect plugin in WordPress to force the redirects from http://

Instead, this is exactly what we want to display to users and search engines: a single https:// version of each page, with the http:// version redirecting to it.

How to Create Redirects in .htaccess on Apache / cPanel Servers

You can perform global redirects at the server level in .htaccess on Apache / cPanel servers.

InMotion Hosting has a great tutorial on how to force this redirect on your own web host. But, for our purposes, we’ll focus on the following two.

To force all web traffic to use HTTPS, use the following code.

You want to make sure to add this code above any code that has a similar prefix (RewriteEngine On, RewriteCond, etc.)

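```apache
# Turn on the rewrite engine (required before any rewrite rules run).
RewriteEngine On
# Only act on requests that did not arrive over HTTPS.
RewriteCond %{HTTPS} !on
# Skip cPanel and Comodo domain-validation files so certificate checks keep working.
RewriteCond %{REQUEST_URI} !^/[0-9]+\..+\.cpaneldcv$
RewriteCond %{REQUEST_URI} !^/\.well-known/pki-validation/[A-F0-9]{32}\.txt(?:\ Comodo\ DCV)?$
# Permanently (301) redirect to the same host and path on HTTPS.
RewriteRule (.*) https://%{HTTP_HOST}%{REQUEST_URI} [L,R=301]
```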

If you want to redirect only a specified domain, you will want to use the following lines of code in your htaccess file:

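```apache
RewriteEngine On
# Skip cPanel and Comodo domain-validation files.
RewriteCond %{REQUEST_URI} !^/[0-9]+\..+\.cpaneldcv$
RewriteCond %{REQUEST_URI} !^/\.well-known/pki-validation/[A-F0-9]{32}\.txt(?:\ Comodo\ DCV)?$
# Match requests for example.com that arrive as plain HTTP on port 80.
RewriteCond %{HTTP_HOST} ^example\.com [NC]
RewriteCond %{SERVER_PORT} 80
# Permanently (301) redirect to the HTTPS www version.
RewriteRule ^(.*)$ https://www.example.com/$1 [R=301,L]
```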

Don’t forget to change any URLs in the above examples to match your own domain name.

There are other solutions in that tutorial which may work for your site.

WARNING: if you do not have confidence in your abilities to make the correct changes at the server level on your server, please make sure to have your server company/IT person perform these fixes for you.

You can screw up something major with these types of redirects if you do not know exactly what you are doing.

Use a Plugin If You Are Operating a WordPress Site

The easiest way to fix these redirect issues, especially if you operate a WordPress site, is to just use a plugin.

Many plugins can force http:// to https:// redirects and make this process as painless as possible.

Caution about plugins – don’t just add another plugin if you’re already using too many plugins.

You may want to investigate if your server can use similar redirect rules mentioned above (such as if you are using an NGINX-based server).

It must be stated here: plugin weight can affect site speed negatively, so don’t always assume that the latest plugin will help you.

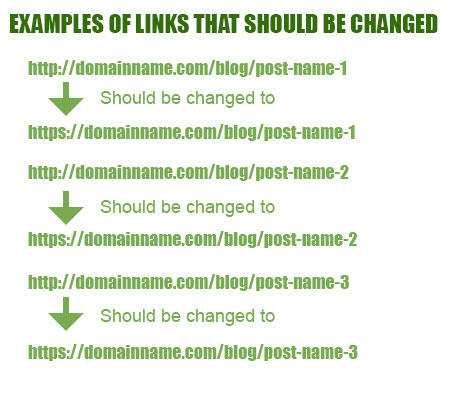

All Links On-site Should Be Changed From HTTP:// To HTTPS://

Even if you perform the redirects above, you should perform this step.

This is especially true if you are using absolute URLs, as opposed to relative URLs, where the former always displays the hypertext transfer protocol that you’re using.

If you are using the latter, this is less important and you probably don’t need to pay much attention to this.

Why do you need to change links on-site when you are using absolute URLs?

Because Google can and will crawl all of those links and this can result in duplicate content issues.

It seems like a waste of time, but it’s really not. You are making sure the end result is that Google sees exactly the site you want them to see.

One version.

One set of URLs.

One set of content.

No confusion.
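If your content lives in templates or exported HTML, a small script can handle the bulk of this change. The following is a minimal Python sketch, assuming your domain is example.com (a placeholder) and that you want to touch only your own absolute links, leaving third-party URLs alone. The function name is hypothetical.

```python
import re


def upgrade_internal_links(html: str, domain: str) -> str:
    """Rewrite absolute http:// links for domain (with or without www) to https://."""
    pattern = re.compile(
        r"http://((?:www\.)?" + re.escape(domain) + r")(?=[/:?#\s\"']|$)",
        re.IGNORECASE,
    )
    # The lookahead stops look-alike hosts such as example.com.other.net
    # from being rewritten by accident.
    return pattern.sub(r"https://\1", html)


sample = (
    '<a href="http://example.com/page">internal</a> '
    '<a href="http://other.com/">external</a>'
)
print(upgrade_internal_links(sample, "example.com"))
```

Review the output before publishing it, since links stored in a database or generated by plugins will need the same treatment through their own tools.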

No 404s From HTTP:// To HTTPS:// Transitions

A sudden spike in 404s can make your site almost impossible to crawl, especially if links between http:// and https:// pages still exist.

Difficulty crawling a site is one of the most common issues that can result from a spike in 404s.

Crawl budget is also wasted when too many 404s show up, and Google may not find pages that it should.

Why this impacts site performance, and why it matters:

John Mueller of Google has said that crawl budget doesn’t matter except for extremely large sites:

“Google’s John Mueller said on Twitter that he believes that crawl budget optimization is overrated in his mind. He said for most sites, it doesn’t make a difference and that it only can help really massive sites.

John wrote “IMO crawl-budget is over-rated.” “Most sites never need to worry about this. It’s an interesting topic, and if you’re crawling the web or running a multi-billion-URL site, it’s important, but for the average site owner less so,” he added.”

Still, a great article by Yauhen Khutarniuk, Head of SEO at SEO PowerSuite, puts this perfectly:

“Quite logically, you should be concerned with crawl budget because you want Google to discover as many of your site’s important pages as possible. You also want it to find new content on your site quickly. The bigger your crawl budget (and the smarter your management of it), the faster this will happen.”

It’s important to optimize for crawl budget because you want search engines to find new content on your site quickly and to discover as many of your high-priority pages as possible.

How to Fix Any 404s You May Have

Primarily, you want to redirect any 404s from the old URL to the new, existing URL.

Check out Benj Arriola’s Search Engine Journal article for more information on 404s vs. soft 404s, and how to fix them.

One of the easier ways, especially if you have a WordPress site, would be to crawl the site with Screaming Frog and perform a bulk upload of your 301 redirect rules using the Redirection WordPress plugin.

Otherwise, you may have to create redirect rules in .htaccess.
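If you go the .htaccess route, the rules can be generated from a list of old and new URLs rather than typed by hand. Here is a minimal Python sketch, assuming you already have a mapping of 404 URLs to their replacements (for example, built from a crawl export). The function name and URLs are hypothetical. It emits Apache `Redirect 301` lines.

```python
from urllib.parse import urlsplit


def redirect_rules(mapping):
    """Build one 'Redirect 301 <old path> <new URL>' line per old URL."""
    rules = []
    for old, new in sorted(mapping.items()):
        # Redirect matches on the URL path only, so drop scheme and host.
        old_path = urlsplit(old).path or "/"
        rules.append(f"Redirect 301 {old_path} {new}")
    return rules


# Hypothetical mapping of dead URLs to live ones.
pairs = {
    "http://example.com/old-page": "https://example.com/new-page",
    "http://example.com/old-post": "https://example.com/blog/new-post",
}
print("\n".join(redirect_rules(pairs)))
```

Keep in mind that `Redirect` matches by path prefix and ignores query strings, so URLs that differ only by parameters need rewrite rules instead.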

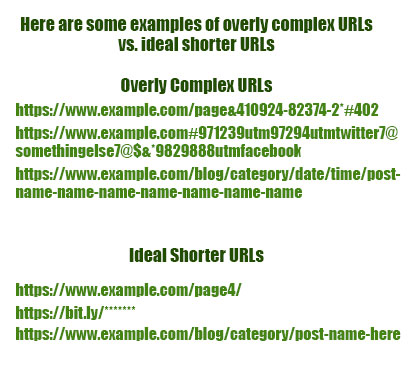

Your URL Structure Should Not Be Overly Complex

The structure of your URLs is an important consideration when getting your site ready for technical SEO.

You must pay attention to things like randomly generated dynamic parameters that are being indexed, URLs that are not easy to understand, and other factors that will cause issues with your technical SEO implementation.

These are all important factors because they can lead to indexation issues that will hurt your site’s performance.

More Human-Readable URLs

When you create URLs, you likely think about where the content is going, and then you create URLs automatically.

This can hurt you, however.

The reason is that automatically generated URLs can follow a few different formats, none of which are very human-readable.

For example:

- /content/date/time/keyword

- /content/date/time/string-of-numbers

- /content/category/date/time/

- /content/category/date/time/parameters/

None of these formats that you encounter are very human readable, are they?

This matters because properly communicating the content behind the URL is a large part of matching user intent.

It’s even more important today for accessibility reasons.

The more readable your URLs are, the better:

- Search engines can use these to gauge how many people are engaging with those URLs versus how many are not.

- If someone sees your URL in the search results, they may be more apt to click on it because they can see exactly how closely that URL matches what they are searching for. In short, match that user search intent, and you’ve got another customer.

This is why considering this part of URL structure is so important when you are auditing a site.

Many existing sites may be using outdated or confusing URL structures, leading to poor user engagement.

Identifying which URLs can be more human readable can create better user engagement across your site.
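One common way to get readable URLs is to derive a short slug from the page title. The sketch below shows the idea in Python. The `slugify` name and the six-word cap are assumptions chosen for illustration, not a rule from any search engine.

```python
import re
import unicodedata


def slugify(title: str, max_words: int = 6) -> str:
    """Turn a page title into a short, lowercase, hyphen-separated slug."""
    # Strip accents so "Café" becomes "Cafe" instead of being dropped.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    words = re.findall(r"[a-z0-9]+", text.lower())
    return "-".join(words[:max_words])


print(slugify("10 Technical SEO Tips: Before You Build Links!"))
# 10-technical-seo-tips-before-you
```

A slug like this tells both users and search engines what the page is about, which a date, time, or string of numbers cannot do.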

Duplicate URLs

One important technical SEO consideration that should be ironed out before any link building is duplicate content.

When it comes to duplicate content issues, these are the main causes:

- Content that is significantly duplicated across sections of the website.

- Scraped content from other websites.

- Duplicate URLs where only one piece of content exists.

This can hurt because it does confuse search engines when more than one URL represents one piece of content.

Search engines will rarely show the same piece of content twice, and not paying attention to duplicate URLs dilutes their ability to find and serve up each duplicate.

Avoid Using Dynamic Parameters

While dynamic parameters are, in and of themselves, not a problem from an SEO perspective, if you cannot manage your creation of them, and get consistent in their use, this can become a significant problem later.

Jes Scholz has an amazing article on Search Engine Journal covering the basics of dynamic parameters and URL handling and how it can affect SEO. If you are not familiar with dynamic parameters, I suggest reading her article ASAP before proceeding with the rest of this section.

Scholz explains that parameters are used for the following purposes:

- Tracking

- Reordering

- Filtering

- Identifying

- Pagination

- Searching

- Translating

When you get to the point that your URL’s dynamic parameters are causing a problem, it usually comes down to basic mismanagement of the creation of these URLs.

In the case of tracking, the problem is using many different dynamic parameters when creating links that search engines crawl.

In the case of reordering, the problem is using these dynamic parameters to reorder lists and groups of items, which creates indexable duplicate pages that search engines then crawl.

You can inadvertently trigger excessive duplicate content issues if you don’t keep your dynamic parameters to a manageable level.

You should never need 50 URLs with UTM parameters to track the results of certain types of campaigns.

The creation of these dynamic URLs for one piece of content can really add up over time if you aren’t carefully managing their creation and will dilute the quality of your content along with its capability to perform in search engine results.

It leads to keyword cannibalization and on a large enough scale can severely impact your ability to compete.
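To see how many parameter variations point at the same content, you can normalize a list of crawled URLs and group them. This is a minimal Python sketch under stated assumptions: the set of tracking parameters (`utm_*`, `gclid`, `fbclid`) is only an example list, the function names are hypothetical, and sorting the remaining parameters assumes their order does not change the page.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

TRACKING_PREFIXES = ("utm_",)          # assumed tracking prefixes
TRACKING_KEYS = {"gclid", "fbclid"}    # assumed tracking keys


def canonicalize(url: str) -> str:
    """Drop tracking parameters, sort the rest, and lowercase the host."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.lower().startswith(TRACKING_PREFIXES)
        and key.lower() not in TRACKING_KEYS
    ]
    kept.sort()
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


def duplicate_groups(urls):
    """Group URLs that collapse to the same canonical form."""
    groups = defaultdict(list)
    for url in urls:
        groups[canonicalize(url)].append(url)
    return {canon: members for canon, members in groups.items() if len(members) > 1}


print(canonicalize("https://Example.com/shoes?utm_source=news&color=red&size=9"))
# https://example.com/shoes?color=red&size=9
```

Large groups in the `duplicate_groups` output are a sign that parameter creation has gotten out of hand for that piece of content.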

Shorter URLs Are Better Than Longer URLs

A long-held SEO best practice has been that shorter URLs are better than longer URLs.

Google’s John Mueller has discussed this:

“What definitely plays a role here is when we have two URLs that have the same content, and we try to pick one to show in the search results, we will pick the short one. So that is specifically around canonicalization.

It doesn’t mean it is a ranking factor, but it means if we have two URLs and one is really short and sweet and this other one has this long parameter attached to it and we know they show exactly the same content we will try to pick the shorter one.

There are lots of exceptions there, different factors that come into play, but everything else being equal – you have a shorter one and a longer one, we will try to pick the shorter one.”

There is also empirical evidence that Google ranks shorter URLs for more terms than long, specific ones.

If your site contains super long URLs everywhere, you may want to optimize them into better, shorter URLs that better reflect the article’s topic and user intent.

Make Sure Your Site Has Top Cross-Platform Compatibility & Fast Page Speed

Site glitches and other problems can arise when your site is not coded correctly.

These glitches can result from badly-nested DIV tags resulting in a glitched layout, code with bad syntax resulting in call-to-action elements disappearing, and bad site management resulting in the careless implementation of on-page elements.

Cross-platform compatibility can be affected along with page speed, resulting in greatly reduced performance and user engagement, long before link building ever becomes a consideration.

Nip some of these issues in the bud before they become major problems later.

Many of these technical SEO issues come down to poor site management and poor coding.

The more that you tackle these technical SEO issues at the beginning with more consistent development and website management best practices, the better off you’ll be later when your link building campaign takes off.

Poorly Coded Site Design

When you have a poorly coded site design, your user experience and engagement will suffer.

This is yet another element of technical SEO that can be easily overlooked.

A poorly coded site design can manifest in several ways:

- Poor page speed.

- Glitches in the design appearing on different platforms.

- Forms not working where they should (impacting conversions).

- Any other calls to action not working on mobile devices (and desktop).

- Any tracking code that’s not being accurately monitored (leading to poor choices in your SEO decision-making).

Any of these issues can spell disaster for your site when it can’t properly report on performance, capture leads, or engage with users to its fullest potential.

This is why these things should always be considered and tackled on-site before moving to link building.

If you don’t, you may wind up with weaknesses in your marketing campaigns that will be even harder to pin down, or worse – you may never find them.

All of these elements of a site design must be addressed and otherwise examined to make sure that they are not causing any major issues with your SEO.

Pages Are Slow to Load

In July 2018, Google rolled out page speed as a ranking factor in its mobile algorithm to all users.

Slow loading pages can affect everything, so it’s something that you should pay attention to on an ongoing basis, not just for rankings but for all of your users as well.

What should you be on the lookout for when it comes to issues that impact page speed?

Slow Loading Images

If your site has many images approaching 1 MB (1 megabyte) in file size, you have a problem.

As average internet connection speeds climb past 27.22 Mbps download on mobile and 59.60 Mbps download on fixed broadband, this realistically becomes less of an issue, but it can still be an issue.

You will still face slower loading pages when you have such large images on your site. If you use a tool like GTMetrix, you can see how fast your site loads these images.

Typical page speed analysis best practices say that you should take three snapshots of your site’s page speed.

Average out the three snapshots, and that’s your site’s average page speed.

For most sites, the recommendation is that images should be at most 35–50 KB each, not more. This depends on resolution and pixel density (including whether you are accommodating the higher pixel densities of iPhones and other devices).

Also, use lossless compression in graphics applications like Adobe Photoshop in order to achieve the best quality possible while resizing images.
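To find the images worth compressing first, you can scan a local copy of your uploads folder for files over your size budget. The following Python sketch assumes a 50 KB limit, taken from the upper end of the range above, and a hypothetical folder path. The function name and the list of image extensions are illustrative as well.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".gif", ".webp"}


def oversized_images(root, limit_bytes=50 * 1024):
    """Return (size_in_bytes, relative_path) for images above the limit, largest first."""
    root = Path(root)
    hits = []
    for path in root.rglob("*"):
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            size = path.stat().st_size
            if size > limit_bytes:
                hits.append((size, path.relative_to(root).as_posix()))
    return sorted(hits, reverse=True)


# Example call against a hypothetical local folder:
# for size, name in oversized_images("wp-content/uploads"):
#     print(f"{size / 1024:.0f} KB  {name}")
```

The largest files at the top of the list usually offer the biggest page speed wins.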

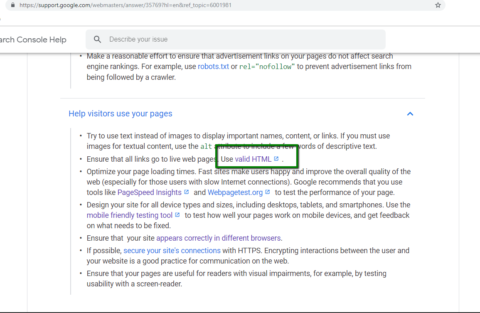

Efficient Coding Best Practices

Some people believe that standard coding best practices say that you should be using W3C valid coding.

Google’s Webmaster Guidelines recommend using valid W3C coding to code your site.

But, John Mueller (and even Matt Cutts) have mentioned in the past that it’s not critical to focus on W3C-valid coding for ranking reasons.

Search Engine Journal staff Roger Montti discusses this conundrum in even further detail here: 6 Reasons Why Google Says Valid HTML Matters.

But, that’s the key phrase there: focusing on it for ranking purposes.

You will find at the top of Google, for different queries, all sorts of websites that subscribe to different coding best practices, and not every site validates via the W3C.

Despite a lack of focus on that type of development best practice for ranking purposes, there are plenty of reasons why using W3C valid coding is a great idea, and why it can put you ahead of your competitors who are not doing it.

Before any further discussion takes place, it needs to be noted from a developer perspective:

- W3C-standard validated code is not always good code.

- Bad code is not always invalid code.

- W3C validation should not be the be-all, end-all evaluation of a piece of coding work.

- But, validation services like the W3C validator should be used for debugging reasons.

- Using the W3C validator will help you evaluate your work more easily and avoid major issues as your site becomes larger and more complex after completion of the project.

But in the end, which is better, and why?

Picking a coding standard, being consistent with your coding best practices, and sticking with them is generally better than not.

When you pick a coding standard and stick with it, you introduce less complexity and less of a chance that things can go wrong after the final site launch.

While some see W3C’s code validator as an unnecessary evil, it does provide rhyme and reason to making sure that your code is valid.

For example, if your syntax is invalid in your header, or you don’t self-close tags properly, W3C’s code validator will reveal these mistakes.

If, during development, you transferred over an existing WordPress theme from, say, XHTML 1.0 to HTML5 for server compatibility reasons, you may notice thousands of errors.

It means that you have incompatibility problems with the DOCTYPE in the theme and the language that is actually being used.

This happens frequently when someone copies and pastes old code into a new site implementation without regard to any coding rules whatsoever.

This can be disastrous to cross-platform compatibility.

Also, this simple check can help you reveal exactly what’s working (or not working) under the hood right now code-wise.

Efficient coding best practices come into play in avoiding mistakes like inadvertently putting multiple closing DIV tags where they shouldn’t go or being careless about how you code the layout.

All of these coding errors can be a huge detriment to the performance of your site, both from a user and search engine perspective.
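A full validator catches far more, but a quick script can spot the stray DIV problem on its own. Here is a minimal Python sketch using the standard library's HTML parser. The class and function names are hypothetical, and it only counts `div` tags rather than validating the page.

```python
from html.parser import HTMLParser


class DivBalance(HTMLParser):
    """Track unclosed <div> tags and </div> tags with nothing to close."""

    def __init__(self):
        super().__init__()
        self.open_divs = 0
        self.stray_closers = []  # (line, column) of each unmatched </div>

    def handle_starttag(self, tag, attrs):
        if tag == "div":
            self.open_divs += 1

    def handle_endtag(self, tag):
        if tag == "div":
            if self.open_divs == 0:
                self.stray_closers.append(self.getpos())
            else:
                self.open_divs -= 1


def check_divs(html: str):
    """Return (number of unclosed divs, positions of stray closing divs)."""
    parser = DivBalance()
    parser.feed(html)
    parser.close()
    return parser.open_divs, parser.stray_closers


unclosed, stray = check_divs("<div><p>ok</p></div></div>")
print(unclosed, len(stray))  # 0 1
```

A nonzero count in either value is a hint to open the template and check the layout markup by hand.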

Common Ways Too Many WordPress Plugins Can Harm Your Site

Using Too Many Plugins

Plugins can become major problems when their use is not kept in check.

Why is this? How can this be – aren’t plugins supposed to help?

In reality, if you don’t manage your plugins properly, you can run into major site performance issues down the line.

Here are some reasons why.

Extra HTTP Requests

All files that load on your site generate requests to the server, known as HTTP requests.

Every time someone requests your page, all of your page elements load (images, video, graphics, plugins, everything), and all of these elements require an HTTP request to be transferred.

The more HTTP requests you have, the more these extra plugins will slow down your site.

This can be mostly a matter of milliseconds, and for most websites does not cause a huge issue.

It can, however, be a major bottleneck if your site is a large one, and you have hundreds of plugins.

Keeping your plugin use in check is a great idea, to make sure that your plugins are not causing a major bottleneck and causing slow page speeds.
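For a rough sense of how many requests a page triggers, you can count the scripts, stylesheets, and images referenced in its HTML. This Python sketch is only an approximation: it ignores fonts, background images, and anything loaded later by JavaScript. The class and function names are hypothetical.

```python
from collections import Counter
from html.parser import HTMLParser


class ResourceCounter(HTMLParser):
    """Count external scripts, stylesheets, and images referenced in HTML."""

    def __init__(self):
        super().__init__()
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "script" and attrs.get("src"):
            self.counts["script"] += 1
        elif tag == "link" and "stylesheet" in rel and attrs.get("href"):
            self.counts["stylesheet"] += 1
        elif tag == "img" and attrs.get("src"):
            self.counts["image"] += 1


def count_resources(html: str) -> dict:
    """Return a dict of resource type to number of references."""
    parser = ResourceCounter()
    parser.feed(html)
    parser.close()
    return dict(parser.counts)


page = (
    '<link rel="stylesheet" href="a.css">'
    '<script src="a.js"></script><script>var x = 1;</script>'
    '<img src="a.png"><img src="b.png">'
)
print(count_resources(page))
```

Comparing the counts before and after activating a plugin gives a quick read on how many extra files it adds to each page.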

Increased Database Queries Due to Extra Plugins

WordPress uses SQL databases in order to process queries and maintain its infrastructure.

If your site is on WordPress, it’s important to know that every plugin you add will send out extra database queries.

These extra queries can add up, and cause bottleneck issues that will negatively affect your site’s page speed.

The more you load plugins up, the slower your site will get.

If you don’t manage the database queries well, you can run into serious issues with your website’s performance, and it will have nothing to do with how your images load.

It also depends on your host.

If you have a large website with too many plugins and too little in the way of resources, now may be the time for an audit to see exactly what’s happening.

The Other Problem With Plugins: They Increase the Probability of Your Website Crashing

When the right plugins are used, you don’t have to worry (much) about keeping an eye on them.

You should, however, be mindful of when plugins are usually updated, and how they work with your WordPress implementation to make sure your website stays functional.

If you auto-update your plugins, you may have an issue one day where a plugin does not play nice with other plugins. This could cause your site to crash.

This is why it is so important to manage your WordPress plugins and make sure that you don’t exceed what your server is capable of.

This Is Why It’s Important to Tackle Technical SEO Before Link Building

Many technical SEO issues can rear their ugly head and affect your site’s SERP performance long before link building enters the equation.

That’s why it’s important to tackle technical SEO before you start link building.

Any technical SEO issues can cause significant drops in website performance long before link building ever becomes a factor.

Start with a thorough technical SEO audit to reveal and fix any on-site issues.

It will help identify any weaknesses in your site, and these changes will all work together with link building to create an even better online presence for you, and your users.

Any link building will be for naught if search engines (or your users) can’t accurately crawl, navigate, or otherwise use your site.

Summary

Timeframe: Month 1, 2, 3 and every quarter

Results Detected: 1-4 months after implementation

Tools needed:

- Screaming Frog

- DeepCrawl

- Ahrefs (or Moz)

- Google Search Console

- Google Analytics

Link building benefits of technical SEO:

- Technical SEO will help you get the maximum performance out of your links.

- Technical SEO, such as a clean site structure and an understanding of PageRank (PR) flow, is key for internal link placement.

Image Credits

Featured Image: Paulo Bobita

In-post images/screenshots taken by author, July 2019