While I do not encourage anyone to rely too heavily on Robots.txt tools (you should either do your best to understand the syntax yourself or turn to an experienced consultant to avoid problems), I hope the Robots.txt generators and checkers listed below will be of some additional help:

Robots.txt generators:

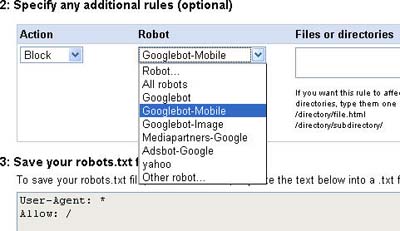

Common procedure:

- choose default / global commands (e.g. allow/disallow all robots);

- choose files or directories blocked for all robots;

- choose user-agent specific commands:

  - choose action;

  - choose a specific robot to be blocked.
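
The procedure above can be sketched in a few lines of Python. This is only an illustration of what such a generator does, not the code behind any of the tools listed here; the function name `build_robots_txt`, its parameters, and the example paths are all made up for the example. It also assumes the safest behaviour for robot-specific groups, namely repeating the global paths inside them.

```python
def build_robots_txt(global_disallow, per_agent_disallow=None):
    """Return robots.txt text (hypothetical helper, for illustration only).

    global_disallow: paths blocked for all robots (an empty list allows everything).
    per_agent_disallow: dict mapping a robot name to extra paths blocked only for it.
    """
    per_agent_disallow = per_agent_disallow or {}

    def group(agent, paths):
        lines = [f"User-agent: {agent}"]
        # An empty "Disallow:" value means nothing is blocked for this group.
        lines += [f"Disallow: {p}" for p in paths] or ["Disallow:"]
        return "\n".join(lines)

    # Steps 1 and 2: the default group that applies to all robots.
    groups = [group("*", global_disallow)]

    # Step 3: one group per specific robot.
    for agent, paths in per_agent_disallow.items():
        # Repeat the global paths: a robot that finds its own group
        # follows that group instead of the "*" one.
        groups.append(group(agent, list(global_disallow) + list(paths)))

    return "\n\n".join(groups) + "\n"


print(build_robots_txt(["/cgi-bin/", "/tmp/"], {"Googlebot-Image": ["/photos/"]}))
```

Calling the function with an empty list and no robot-specific rules yields the default "allow everything" file, which is the only kind of file that is safe to publish without checking it first.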

As a general rule of thumb, I don’t recommend using Robots.txt generators, for one simple reason: you should not create any advanced (i.e. non-default) Robots.txt file until you are 100% sure you understand what you are blocking with it. Still, here are the two most trustworthy generators to check out:

- Google Webmaster tools: the Robots.txt generator lets you create simple Robots.txt files. What I like most about this tool is that it automatically adds all global commands to each set of user-agent specific commands. This helps avoid one of the most common mistakes: a robot that has its own section follows only that section and ignores the global one.

- SEObook Robots.txt generator unfortunately lacks the above feature, but it is really easy (and fun) to use.
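
To see why repeating the global commands matters, here is a minimal sketch using Python's standard `urllib.robotparser` module. The two sample files, the helper name `allowed`, and the paths are assumptions made up for the demonstration, and the standard-library parser is only a stand-in: individual crawlers may differ in details.

```python
from urllib.robotparser import RobotFileParser


def allowed(robots_txt, agent, path):
    """Return True if `agent` may fetch `path` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, path)


# The Googlebot group does NOT repeat the global rule.
INCOMPLETE = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Disallow: /tmp/
"""

# The Googlebot group repeats the global rule.
COMPLETE = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Disallow: /private/
Disallow: /tmp/
"""

print(allowed(INCOMPLETE, "Googlebot", "/private/page.html"))  # True: global rule ignored
print(allowed(COMPLETE, "Googlebot", "/private/page.html"))    # False: blocked as intended
print(allowed(INCOMPLETE, "OtherBot", "/private/page.html"))   # False: falls back to "*"
```

In the first file the site owner most likely meant to block `/private/` for everyone, yet the robot with its own group is still allowed in.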

Robots.txt checkers:

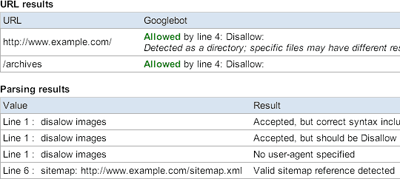

- Google Webmaster tools: the Robots.txt analyzer “translates” what your Robots.txt dictates to Googlebot.

- Robots.txt Syntax Checker finds some common errors within your file by checking for whitespace-separated lists, standards that are not widely supported, wildcard usage, etc.

- A Validator for Robots.txt Files also checks for syntax errors and confirms correct directory paths.
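
For a rough idea of what a syntax check involves, the sketch below flags a few of the error types mentioned above. It is not how any of the listed checkers is implemented: the function name `lint_robots_txt`, the set of known directives, and the messages are assumptions chosen for the example, and a real checker covers far more cases.

```python
# Directives this sketch treats as known; an assumption, not an official list.
KNOWN_DIRECTIVES = {"user-agent", "disallow", "allow", "sitemap", "crawl-delay"}


def lint_robots_txt(text):
    """Return a list of (line_number, message) pairs for suspicious lines."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and padding
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing ':' separator"))
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_DIRECTIVES:
            problems.append((number, f"unknown directive '{field}'"))
        elif field == "user-agent":
            seen_user_agent = True
        elif field in {"allow", "disallow"}:
            if not seen_user_agent:
                problems.append((number, "rule appears before any User-agent line"))
            if len(value.split()) > 1:
                problems.append((number, "several paths on one line; use one rule per path"))
    return problems


sample = """\
User-agent: *
Disallow: /tmp/ /cgi-bin/
Dissallow: /private/
Noindex
"""

for number, message in lint_robots_txt(sample):
    print(f"line {number}: {message}")
```

On the sample file this reports the whitespace-separated list on line 2, the misspelled directive on line 3, and the line without a colon on line 4.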
