Technical SEO is incredibly important. You need a strong technical foundation in order to be successful.

It is a necessity and not optional.

There are also some who believe that it’s more important than ever to be technical.

I am in full agreement with both of these articles.

Whether it’s knowledge of programming, server architecture, website architecture, JavaScript, CSS, or whatever it is, having this knowledge will put you a step above the rest.

Technical SEO will help you optimize your own site, and identify issues on websites that non-technical SEOs can’t catch.

In fact, in some cases, it can be critical to perform technical SEO before ever touching link building.

Let’s examine some of the more common technical SEO issues and get some checks and balances going so we can fix them.

1. Sitemaps

A sitemap file on your site helps search engines:

- Better understand its structure.

- Find where pages are located.

- More importantly, gain access to your site (assuming it’s set up correctly).

XML sitemaps can be simple, with one URL of the site per line. They don’t have to be pretty.

HTML sitemaps can benefit from being “prettier” with a bit more organization to boot.

How to Check

This is a pretty simple check. Since the sitemap is usually installed in the root directory, you can check for the presence of the sitemap file by searching for it in Screaming Frog, or you can check it in the browser by adding /sitemap.xml or /sitemap.html to the end of the domain.

Also, be sure to check the sitemaps section in Google Search Console.

It will tell you if a sitemap has previously been submitted, how many URLs were successfully indexed, whether there are any problems, and other issues.

If you don’t have one, you’ll have to create one.

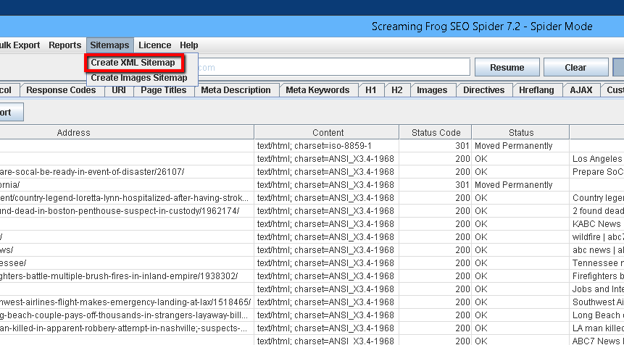

Using Screaming Frog, it’s quite simple to create an XML Sitemap. Just click on Sitemaps > Create XML Sitemap.

Go to the Last modified tab and uncheck it.

Go to the Priority tab and uncheck it. Go to the Change Frequency tab and uncheck it.

These tags don’t provide much benefit for Google, and thus the XML sitemap can be submitted as-is.

Any additional options (e.g., images, noindex pages, canonicalized URLs, Paginated URLs, or PDFs) can all be checked if they apply to your site.
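If a crawler export isn’t available, a bare-bones XML sitemap can also be generated by hand. The following is only a minimal sketch using Python’s standard library: the function name `build_sitemap` and the example.com URLs are illustrative assumptions, and it deliberately leaves out the last modified, priority, and change frequency tags, matching the settings described above.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap string with one <loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical URLs, for illustration only.
sitemap_xml = build_sitemap([
    "https://www.example.com/",
    "https://www.example.com/about/",
])
print(sitemap_xml)
```

The output should still be run through a validator before it is submitted.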

It’s also a good idea to check your sitemap for errors before submitting it. Use an XML validator tool like CodeBeautify.org and XMLValidation.com.

Using more than one validator will help ensure your sitemap doesn’t have errors and that it is 100% correct the first time it is submitted.

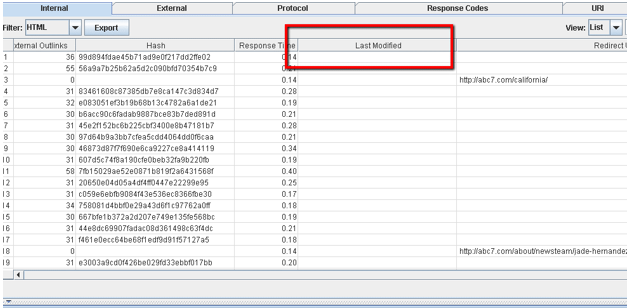

In addition, uploading the URL list to Screaming Frog using list mode is a good way to check that every URL in your sitemap returns a 200 OK status.

Strip out all the formatting and ensure it’s only a list of URLs.

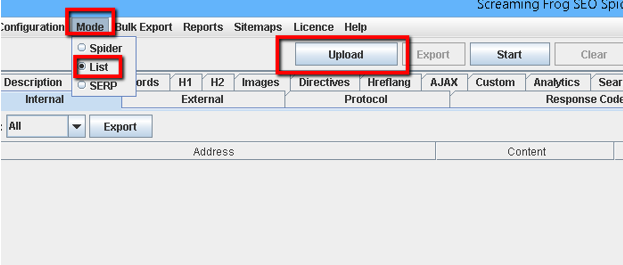

Then click on Mode > List > upload > Crawl and make sure all pages in the sitemap return a 200 OK status.
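Stripping the formatting out of a sitemap so that only the URLs remain can be scripted. This is a rough sketch, assuming a standard sitemaps.org `urlset` file; the function name `sitemap_to_url_list` and the sample XML are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Standard sitemap namespace, mapped to a short prefix for lookups.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_to_url_list(xml_text):
    """Extract the plain list of URLs from a sitemap's <loc> elements."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", NS)
        if loc.text
    ]


# Hypothetical sitemap content, for illustration only.
sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc> https://www.example.com/about/ </loc></url>
</urlset>"""

url_list = sitemap_to_url_list(sample)
print("\n".join(url_list))
```

The printed list, one URL per line, is in a form that can be pasted into a crawler’s list mode.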

2. Robots.txt

Identifying whether robots.txt exists on-site is a good way to check the health of your site. The robots.txt file can make or break a website’s performance in search results.

For example, if you set robots.txt to “disallow: /”, you’re telling Google never to crawl the site because “/” is root! In practice, that keeps the site’s content out of the index.

It’s important to set this as one of the first checks in SEO because so many site owners get this wrong.

To open the whole site to crawlers, it should be set to “disallow: ” without the forward slash. This will allow all user agents to crawl the site.
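The difference between the two settings can be checked offline with Python’s built-in `urllib.robotparser`. This is a small sketch, not a replacement for Google’s own tester: the helper name `can_crawl` and the example URL are assumptions, and Python’s parser may not interpret every edge case exactly the way Googlebot does.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt, url, user_agent="*"):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# "Disallow: /" blocks the whole site; an empty "Disallow:" blocks nothing.
BLOCK_ALL = "User-agent: *\nDisallow: /\n"
ALLOW_ALL = "User-agent: *\nDisallow:\n"

page = "https://www.example.com/products/"  # hypothetical URL
blocked = can_crawl(BLOCK_ALL, page)
allowed = can_crawl(ALLOW_ALL, page)
print("With 'Disallow: /':", blocked)
print("With 'Disallow:':", allowed)
```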

How to Check

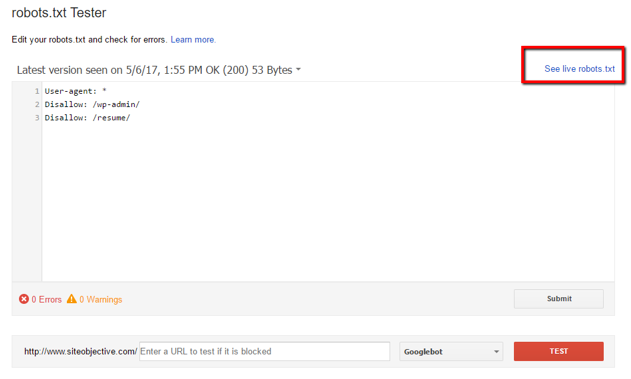

Check Google Search Console for the presence of a robots.txt file. You can go to Crawl > robots.txt Tester to do this.

It will help you see what is currently live on-site, and if any edits will improve that file.

It’s also a good idea to maintain records of the robots.txt file.

Monthly screenshots will help you identify whether changes were made and when, and help you pinpoint errors in indexation if any were to arise.

Checking the link “See live robots.txt” will let you investigate the current live state of the site’s robots.txt file.
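As an alternative to screenshots, saved text copies of robots.txt can be compared automatically. The sketch below assumes you already keep the previous and current file contents as strings; the function name `robots_changes` is hypothetical, and it uses the standard library’s `difflib` to list only the lines that were added or removed.

```python
import difflib


def robots_changes(old_text, new_text):
    """Return lines removed ("- ") or added ("+ ") between two snapshots."""
    diff = difflib.ndiff(old_text.splitlines(), new_text.splitlines())
    # ndiff also emits unchanged ("  ") and hint ("? ") lines; drop those.
    return [line for line in diff if line.startswith(("+ ", "- "))]


# Hypothetical snapshots, for illustration only.
last_month = "User-agent: *\nDisallow:\n"
this_month = "User-agent: *\nDisallow: /\n"

changes = robots_changes(last_month, this_month)
for line in changes:
    print(line)
```

An empty result means the file has not changed since the last snapshot.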

3. Crawl Errors

The Crawl Errors section of GSC will help you identify whether crawl errors currently exist on-site.

Finding crawl errors, and fixing them, is an important part of any website audit because the more crawl errors a site has, the more issues Google has finding pages and indexing them.

Ongoing technical SEO maintenance of these items is crucial for having a healthy site.

How to Check

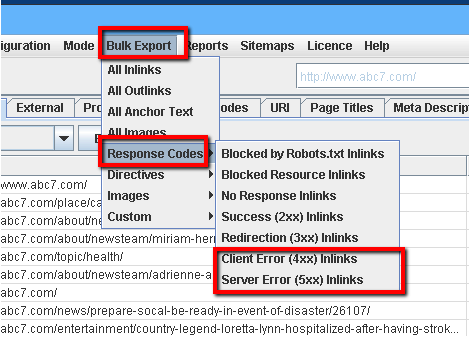

In Google Search Console, identify any 4xx (client, such as 404 not found) and 5xx (server) errors found on-site. All of these types of errors should be called out and fixed.

In addition, you can use Screaming Frog to find and identify 400 and 500 server error codes.

Simply click on Bulk Export > Response Codes > Client Error (4xx) Inlinks and Server Error (5xx) Inlinks.
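Once you have a list of URLs and their response codes from any crawl export, sorting them into the two buckets is straightforward. This is a minimal sketch; the `(url, status_code)` input format, the function name `group_errors`, and the sample data are assumptions for illustration, not the layout of any particular tool’s export.

```python
from collections import defaultdict


def group_errors(crawl_results):
    """Group URLs by error class from an iterable of (url, status_code)."""
    groups = defaultdict(list)
    for url, status in crawl_results:
        if 400 <= status < 500:
            groups["client_error_4xx"].append(url)
        elif 500 <= status < 600:
            groups["server_error_5xx"].append(url)
    return dict(groups)


# Hypothetical crawl data, for illustration only.
crawl = [
    ("https://www.example.com/", 200),
    ("https://www.example.com/old-page", 404),
    ("https://www.example.com/gone", 410),
    ("https://www.example.com/checkout", 500),
]

errors = group_errors(crawl)
for error_class, urls in errors.items():
    print(error_class, urls)
```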

4. Multiple URLs: Capital vs. Lowercase URLs

This issue can cause Google to see two or more versions of a page as competing sources of the same single piece of content on your site.

Multiple versions can exist, from capital URLs to lower case URLs, to URLs with dashes and URLs with underscores.

Sites with severe URL issues can even have the following:

- https://www.example.com/this-is-the-url

- https://www.example.com/This-Is-The-URL

- https://www.example.com/this_is_the_url

- https://www.example.com/thisIStheURL

- https://www.example.com/this-is-the-url/

- http://www.example.com/this-is-the-url

- http://example.com/this-is-the-url

What’s wrong with this picture?

In this case, seven different URL versions exist for one piece of content.

This is awful from Google’s perspective, and we don’t want to have such a mess on our hands.

The easiest way to fix this is to point the rel=canonical of all of these pages to the one version that should be considered the source of the single piece of content.

However, the existence of these URLs is still confusing. The ideal fix is to consolidate all seven URLs down to one single URL, and set the rel=canonical tag to that same single URL.

Another situation that can happen is that URLs can have trailing slashes that don’t properly resolve to their exact URLs. Example:

- http://www.example.com/this-is-the-url

- http://www.example.com/this-is-the-url/

In this case, the ideal situation is to redirect the URL back to the original, preferred URL, and make sure the rel=canonical is set to that preferred URL.

If you aren’t in full control over the site updates, keep a regular eye on these.
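To spot which crawled URLs are really variants of the same page, you can normalize them and group the results. The following is a sketch under stated assumptions: that the preferred version is HTTPS on the www host, lowercase, with dashes and no trailing slash. The function name `normalize_url` and the `preferred_host` parameter are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize_url(url, preferred_host="www.example.com"):
    """Map a URL variant to the assumed preferred form of the same page."""
    parts = urlsplit(url)
    # Lowercase the path and prefer dashes over underscores.
    path = parts.path.lower().replace("_", "-")
    # Drop a trailing slash everywhere except on the root path.
    if len(path) > 1:
        path = path.rstrip("/")
    return urlunsplit(("https", preferred_host, path or "/", parts.query, ""))


variants = [
    "https://www.example.com/this-is-the-url",
    "https://www.example.com/This-Is-The-URL",
    "https://www.example.com/this_is_the_url",
    "https://www.example.com/this-is-the-url/",
    "http://www.example.com/this-is-the-url",
    "http://example.com/this-is-the-url",
]

canonical_targets = {normalize_url(v) for v in variants}
print(canonical_targets)
```

A variant such as `/thisIStheURL` has no separators to work from, so a rule like this can’t map it automatically; it needs an explicit redirect.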

5. Does the Site Have an SSL Certificate (Especially in Ecommerce)?

Ideally, an ecommerce site implementation will have an SSL certificate.

But with Google’s recent moves toward preferring sites that have SSL certificates for security reasons, it’s a good idea to determine whether a site has a secure certificate installed.

How to Check

If a site has https:// in their domain, they have a secure certificate, although the check at this level may reveal issues.

If a red X appears next to the https:// in a domain, it is likely that the secure certificate has issues.

Screaming Frog can’t identify security issues such as this, so it’s a good idea to manually load each variation of the domain, such as https://www., https://blog., and the bare https:// domain.

If any of these have X’s across them while the main domain loads securely over https://, it is likely that errors were made during the purchase process of the SSL certificate.

In order to make sure that all subdomain variations of https:// resolve properly, it’s necessary to get a wildcard secure certificate.

A wildcard certificate covers every first-level subdomain of the domain. Check that the bare domain is listed on the certificate as well, since the wildcard on its own does not match it.
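The way certificate names match hostnames can be sketched in a few lines. This is a simplified illustration of the usual matching rule (a wildcard stands in for exactly one subdomain label), not a full certificate validator; the function name `cert_covers` and the sample names are assumptions.

```python
def cert_covers(hostname, cert_names):
    """Return True if any certificate name matches the hostname."""
    hostname = hostname.lower()
    for name in cert_names:
        name = name.lower()
        if name == hostname:
            return True
        if name.startswith("*."):
            suffix = name[1:]  # e.g. ".example.com"
            label = hostname[: -len(suffix)]
            # The wildcard must stand for exactly one non-empty label.
            if hostname.endswith(suffix) and label and "." not in label:
                return True
    return False


# Hypothetical names listed on a certificate.
wildcard_only = ["*.example.com"]
wildcard_plus_bare = ["*.example.com", "example.com"]

for host in ("www.example.com", "blog.example.com", "example.com"):
    print(host, cert_covers(host, wildcard_only),
          cert_covers(host, wildcard_plus_bare))
```

This is why a certificate that works on www can still fail on the bare domain or on a deeper subdomain.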

6. Minifying CSS & JavaScript Files

Identifying bloated CSS code, along with bloated JavaScript, will help decrease your site’s load time.

Many WordPress themes are guilty of bloated CSS and JavaScript. If time were taken to minify these files properly, these sites could experience load times of 2-3 seconds or less.

Ideally, most website implementations should feature one CSS file and one JavaScript file.

When properly coded, keeping the number of files this low minimizes the calls to the server, potential bottlenecks, and other issues.

How to Check

Using URIValet.com, it’s possible to identify server bottlenecks and issues with larger CSS and JavaScript files.

Go to URIValet.com, input your site, and examine the results.

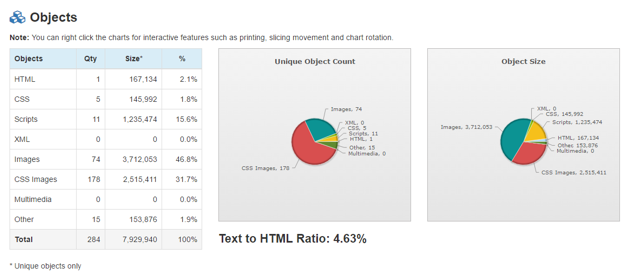

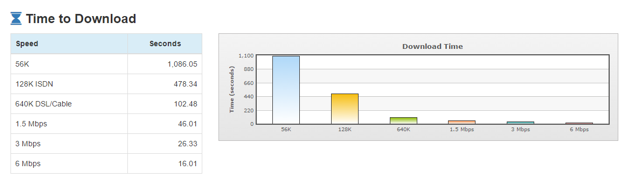

Continuing our audit of ABC7.com’s website, we can identify the following:

There are at least five CSS files and 11 script files that may need minification.

Further study into how they interact with each other will likely be required to identify any issues that may be happening.
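Counting the external stylesheet and script files on a page can also be done from its HTML source. This is a rough sketch using Python’s built-in `html.parser`; the class name `AssetCounter` and the sample markup are hypothetical, and it only sees files referenced in the static HTML, not ones injected later by JavaScript.

```python
from html.parser import HTMLParser


class AssetCounter(HTMLParser):
    """Collect external stylesheet and script URLs from an HTML document."""

    def __init__(self):
        super().__init__()
        self.stylesheets = []
        self.scripts = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "stylesheet" in rel and attrs.get("href"):
            self.stylesheets.append(attrs["href"])
        elif tag == "script" and attrs.get("src"):
            self.scripts.append(attrs["src"])


# Hypothetical page source, for illustration only.
html = """
<html><head>
  <link rel="stylesheet" href="/css/theme.css">
  <link rel="stylesheet" href="/css/plugins.css" />
  <link rel="icon" href="/favicon.ico">
  <script src="/js/jquery.js"></script>
  <script>var inline = true;</script>
</head><body></body></html>
"""

counter = AssetCounter()
counter.feed(html)
print(len(counter.stylesheets), "CSS files:", counter.stylesheets)
print(len(counter.scripts), "script files:", counter.scripts)
```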

Time to download is through the roof: 46 seconds on a 1.5 Mbps connection, 26.33 seconds on a 3 Mbps connection, and a whopping 16 seconds even on a 6 Mbps connection.

Further investigation into the multiple CSS and JavaScript files will likely be required, including more investigation into images not being optimized on-site.

Since this site is pretty video-heavy, it’s also a good idea to figure out how the video implementations are impacting the site from a server perspective as well as from a search engine perspective.

7. Image Optimization

Identifying images that are heavy on file size and causing increases in page load time is a critical optimization factor to get right.

This isn’t a be-all, end-all optimization factor, but it can deliver quite a decrease in page load time if managed correctly.

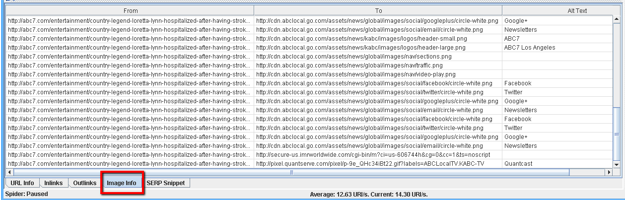

Using our Screaming Frog spider, we can identify the image links on a particular page.

When you’re done crawling your site, click on the URL in the page list, and then click on the Image Info tab in the window below it:

You can also right-click on any image in the window to either copy or go to the destination URL.

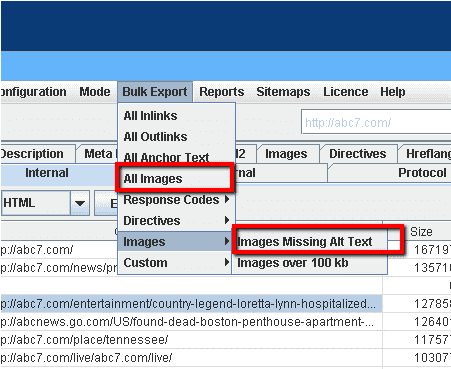

In addition, you can click on Bulk Export > All Images or you can go to Images > Images missing alt text.

This will export a full CSV file that you can use to identify images that are missing alt text or images that have lengthy alt text.
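The same alt text check can be approximated on a single page’s HTML. This is a sketch only: the class name `AltTextAudit` is hypothetical, and the 100-character cutoff for “lengthy” alt text is an assumed threshold that you should adjust to your own audit standard.

```python
from html.parser import HTMLParser

MAX_ALT_LENGTH = 100  # assumed threshold for "lengthy" alt text


class AltTextAudit(HTMLParser):
    """Flag <img> tags with missing/empty or overly long alt text."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []
        self.long_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        src = attrs.get("src") or "(no src)"
        alt = (attrs.get("alt") or "").strip()
        if not alt:
            self.missing_alt.append(src)
        elif len(alt) > MAX_ALT_LENGTH:
            self.long_alt.append(src)


# Hypothetical markup, for illustration only.
html = (
    '<img src="/img/hero.jpg" alt="Storefront at night">'
    '<img src="/img/logo.png">'
    '<img src="/img/banner.jpg" alt="' + "keyword " * 20 + '">'
)

audit = AltTextAudit()
audit.feed(html)
print("Missing alt text:", audit.missing_alt)
print("Lengthy alt text:", audit.long_alt)
```

Note that this treats an empty `alt=""` as missing, even though an empty value can be intentional for purely decorative images.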

8. HTML Errors / W3C Validation

Correcting HTML errors and W3C validation by themselves doesn’t increase ranking, and having a fully W3C valid site doesn’t help your ranking, per Google’s John Mueller.

That said, correcting these types of errors can help lead to better rendering in various browsers.

If the errors are bad enough, these corrections can help lead to better page speed.

But it is on a case-by-case basis. Just doing these by themselves won’t automatically lead to better rankings for every site.

In fact, mostly it is a contributing factor, meaning that it can help enhance the main factor – site speed.

For example, one area that may help includes adding width + height to images.

Per W3.org, if height and width are set, the “space required for the image is reserved when the page is loaded”.

This means that the browser doesn’t have to waste time guessing about the image size, and can just load the image right then and there.
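Images that lack explicit dimensions can be listed with a short script. This is a minimal sketch using Python’s built-in `html.parser`; the class name `ImageDimensionAudit` and the sample markup are assumptions, and it only checks the `width` and `height` attributes, not sizes set through CSS.

```python
from html.parser import HTMLParser


class ImageDimensionAudit(HTMLParser):
    """Collect <img> tags that lack a width or height attribute."""

    def __init__(self):
        super().__init__()
        self.unsized = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        if not (attrs.get("width") and attrs.get("height")):
            self.unsized.append(attrs.get("src") or "(no src)")


# Hypothetical markup, for illustration only.
html = (
    '<img src="/img/hero.jpg" width="1200" height="600" alt="Hero">'
    '<img src="/img/team.jpg" width="800" alt="Team">'
    '<img src="/img/logo.png" alt="Logo">'
)

dimension_audit = ImageDimensionAudit()
dimension_audit.feed(html)
print("Images without width and height:", dimension_audit.unsized)
```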

How to Check

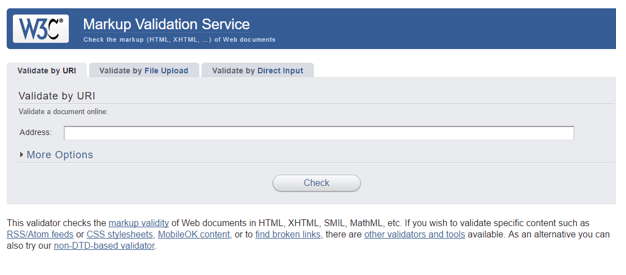

Using the W3C validator at W3.org can help you identify HTML errors and fix them accordingly.

Be sure to always use the appropriate DOCTYPE that matches the language of the page being analyzed by the W3C validator.

If you don’t, you will receive errors all over the place. You cannot simply swap the DOCTYPE from XHTML 1.0 to HTML5, for example, and expect the existing markup to validate.

9. Mobile Optimization & Testing

Mobile is here to stay, and there are many reasons for mobile optimization.

This includes the fact that Google said that mobile-first indexing was being used for more than half the web pages in Google search results at the end of 2018.

As of July 1, 2019, Google has announced that mobile-first indexing is the default for any brand-new web domains.

This should be included in your audits because of how widespread mobile-first indexing now is.

These issues should be checked.

How to Check

Make sure that all content you develop can be viewed on mobile

- Install the user agent switcher for Google Chrome.

- Check your content on mobile devices using the user agent switcher by selecting iPhone, Samsung, etc.

- This will show you how your content is viewed on these devices.

- Shrink and expand the size of your browser window to check this.

- If the site has a responsive design, check on your actual mobile phone.

- Report any findings you have in the audit deliverables to your client.

What to Check

- Any videos that you have on your pages should load and be compatible with any and all potential smartphones that your user will use.

- Scrollability of your content: your content should scroll on any smart device. Don’t force your users to click from one “next” button to the next. This is extremely cumbersome and destroys the user experience.

- Your design should always be responsive. Don’t use a mobile.domainname.com website ever again. Unless this is a political thing at your employer, there is no excuse for any website in 2019 to have a mobile. or m. subdomain. Any website should be 100% responsive and should use the proper stylesheets.

- Don’t use AMP. Through a number of recent case studies we have performed, removing AMP has actually increased traffic, rather than causing issues with traffic. Check for implementations of AMP coding, and make sure that the coding doesn’t exist. If it does, recommend that the client remove it.
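Checking a page for AMP markup can be sketched as follows. It assumes the two common markers: an `amp` (or `⚡`) attribute on the `<html>` tag of an AMP page, and a `<link rel="amphtml">` tag on a regular page that points to its AMP version. The class name `AmpDetector` and the sample markup are hypothetical.

```python
from html.parser import HTMLParser


class AmpDetector(HTMLParser):
    """Detect whether a page is AMP or links to an AMP version."""

    def __init__(self):
        super().__init__()
        self.is_amp_page = False
        self.amp_version_url = None

    def handle_starttag(self, tag, attrs):
        names = {name for name, _ in attrs}
        attrs = dict(attrs)
        # AMP documents mark the root element: <html amp> or <html ⚡>.
        if tag == "html" and ("amp" in names or "⚡" in names):
            self.is_amp_page = True
        # Regular pages advertise their AMP twin via rel="amphtml".
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "amphtml" in rel:
            self.amp_version_url = attrs.get("href")


# Hypothetical page sources, for illustration only.
regular_page = (
    '<html lang="en"><head>'
    '<link rel="amphtml" href="https://www.example.com/post/amp/">'
    "</head></html>"
)
amp_page = '<html amp lang="en"><head></head></html>'

regular = AmpDetector()
regular.feed(regular_page)
amp = AmpDetector()
amp.feed(amp_page)
print(regular.is_amp_page, regular.amp_version_url)
print(amp.is_amp_page, amp.amp_version_url)
```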

10. Forcing a Single Domain

Despite many recommendations online, I still run into plenty of websites that have this major issue.

And this is the issue of multiple URLs loading, creating massive problems with duplicate content.

Here’s the situation. When you enter your address in your web browser, you can test variations of URLs:

- http://www.example.com/

- https://www.example.com/

- http://example.com/

- https://example.com/

- https://example.com/page-name1.html

- https://www.example.com/page-name1.html

- https://example.com/pAgE-nAmE1.html

- https://example.com/pAgE-nAmE1.htm

What will happen is that all of these URLs load when you input the web address, creating a situation where you have many URLs loading for one page, creating further opportunities for Google to crawl and index them.

This issue multiplies exponentially when your internal linking process gets out of control, and you don’t use the right linking across your site.

If you don’t control how you link to pages, and they load like this, you are giving Google a chance to index page-name1.html, page-name1.htm, pAgE-nAmE1.html, and pAgE-nAmE1.htm.

All of these URLs will still have the same content on them. This confuses Google’s bot exponentially, so don’t make this mistake.

How to Check

- You can check your URL list crawled in Screaming Frog and see if Screaming Frog has picked up any of these same URLs.

- You can also load different variations of these web addresses for your client’s site in your browser and see if content loads.

- If it doesn’t redirect to the proper URL, and your content loads on the new URL variation, you should report this to the client and recommend the fix for it (redirect all of these variations of URLs to the main one).
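The list of variations to test in the browser can be generated rather than typed by hand. This is a small sketch; the function name `url_variants` is hypothetical, and the set of variations it builds (scheme, www vs. non-www, uppercase path, trailing slash) is an assumed starting point rather than an exhaustive list.

```python
from itertools import product


def url_variants(domain, path):
    """Build scheme/host/path variations of one page to test by hand."""
    schemes = ("http", "https")
    hosts = (domain, "www." + domain)
    # dict.fromkeys removes duplicate paths while keeping their order.
    paths = dict.fromkeys((path, path.upper(), path.rstrip("/") + "/"))
    return [f"{s}://{h}{p}" for s, h, p in product(schemes, hosts, paths)]


variants = url_variants("example.com", "/page-name1.html")
for url in variants:
    print(url)
```

Each printed URL should either redirect to the single preferred version or fail to load. Any variation that loads the content on its own URL belongs in the report.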

Why Certain ‘Signals’ Were Not Included

Some SEOs believe that social signals can impact rankings positively and negatively.

Other SEOs do not.

Correlation studies, while they have been done, continue to ignore the major factor: correlation does not equal causation.

Just because social results and rankings improve at the same time doesn’t mean that social improves ranking.

There could be a number of additional links being added at the same time, or there could be one insanely valuable authority link that was added, or any other number of improvements.

It’s not as important as some may think to have active social media.

But, it is important to have social sharing buttons on-site, so that visitors can share that content and increase the possibility that it will get links for SEO.

So there is that dimension to think of when this kind of thing is included in this audit guide.

Gary Illyes of Google continues to officially maintain that they do not use social media for ranking.

The goal of this SEO audit checklist is to put together on-site and off-site checks to help identify any issues, along with actionable advice on fixing these issues.

Of course, there are a number of ranking factors that can’t easily be determined by simple on-site or off-site checks and require time, long-term tracking methods, as well as in some cases, custom software to run.

These are beyond the scope of this guide.

Hopefully, you found this checklist useful. Have fun and happy website auditing!

Image Credits

Featured Image: Paulo Bobita

All screenshots taken by author