Image Credit: Lyna ™

Two trends have changed how Google approaches indexing. While the open web has shrunk, Google increasingly needs to crawl big content platforms like YouTube, Reddit, and TikTok, which are often built on “complex” JS frameworks, to find new content. At the same time, AI is changing the underlying dynamics of the web by making mediocre and poor content redundant.

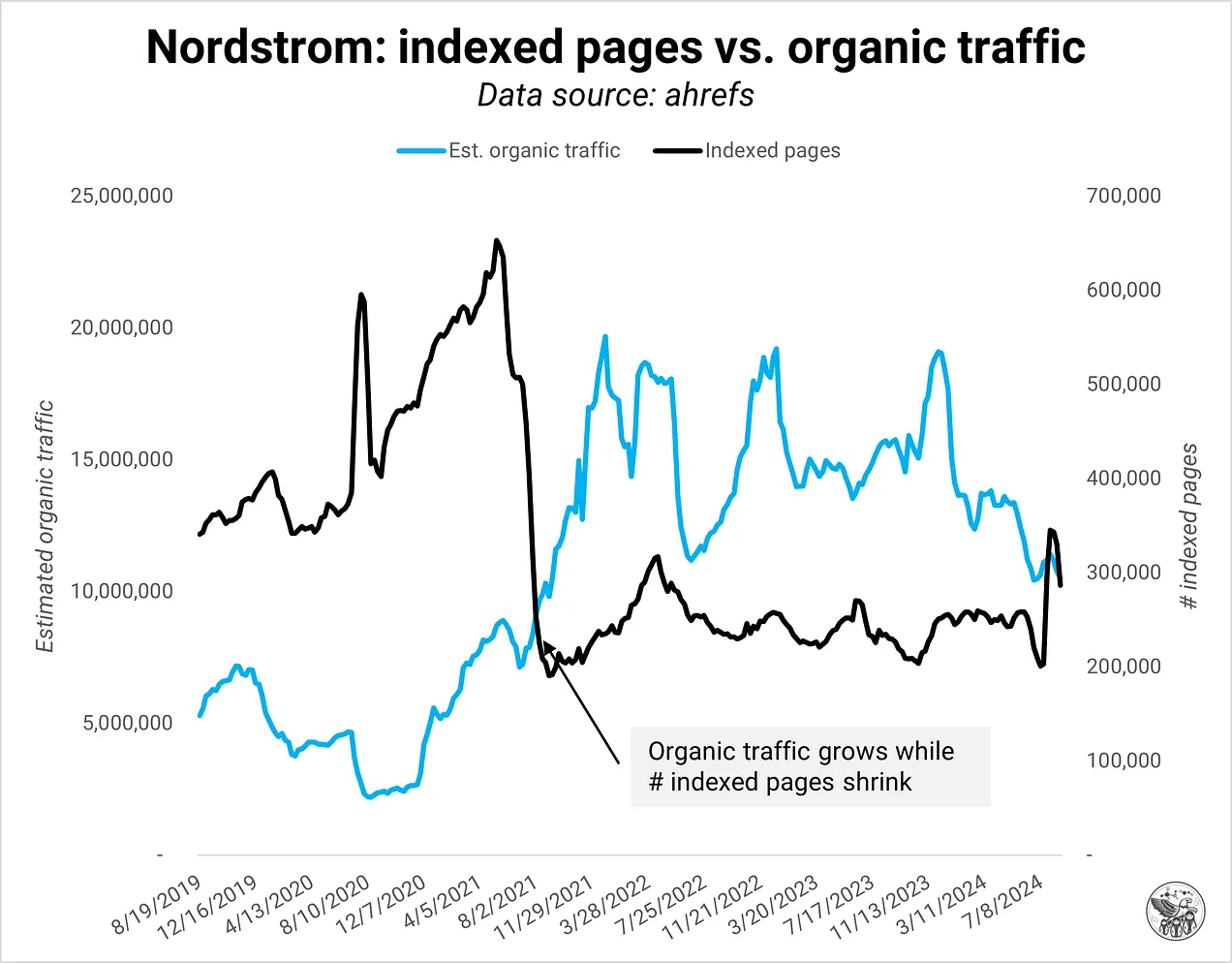

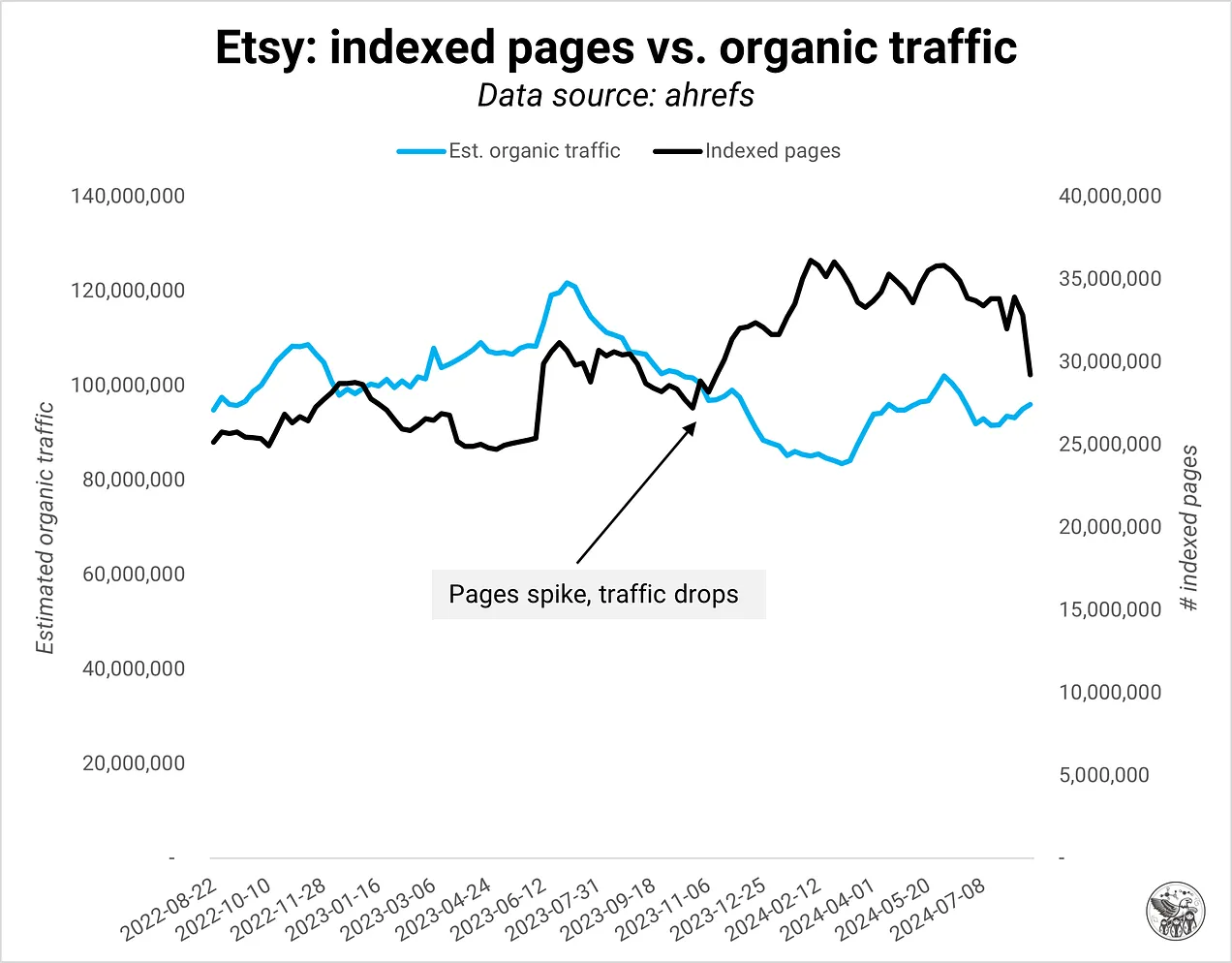

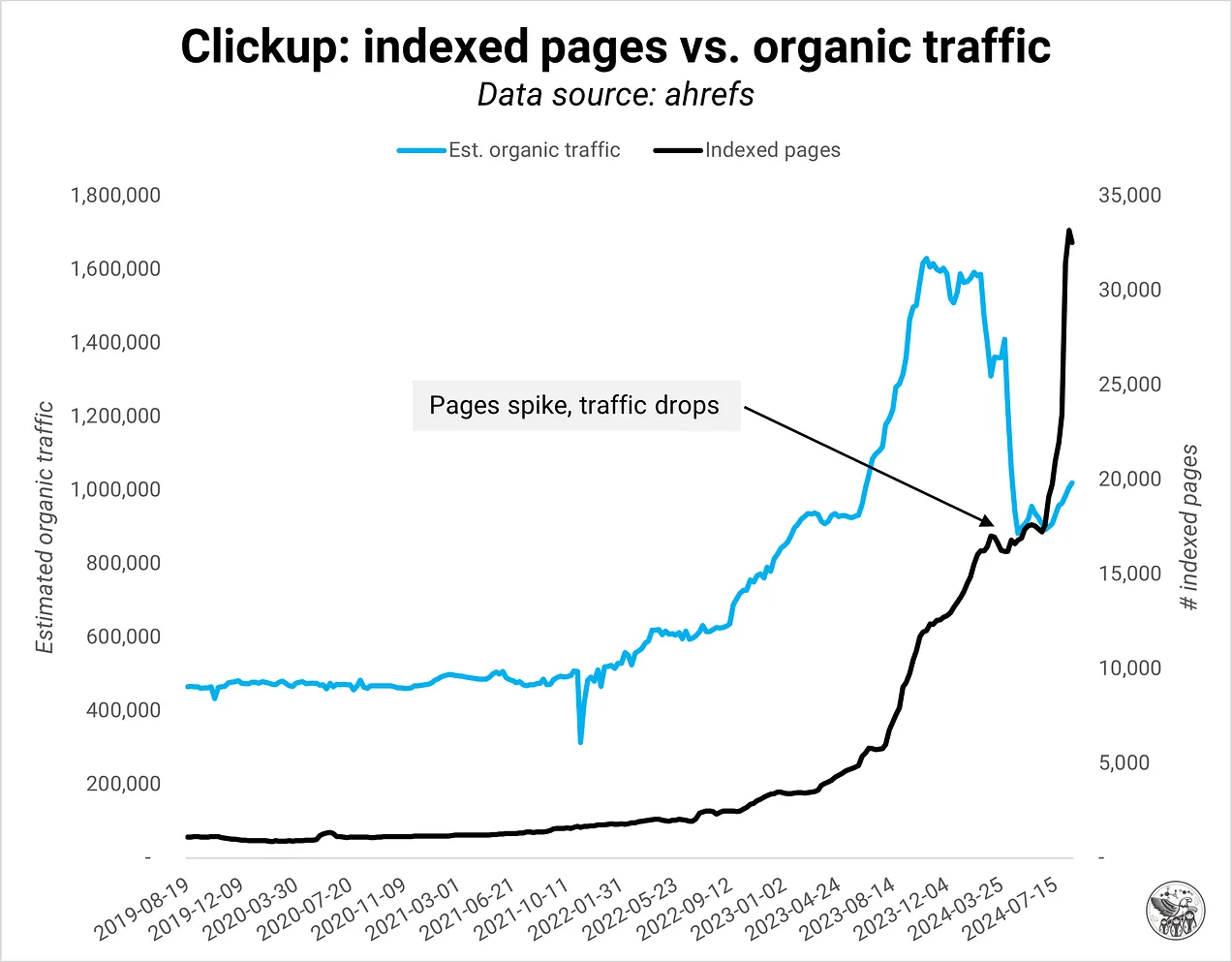

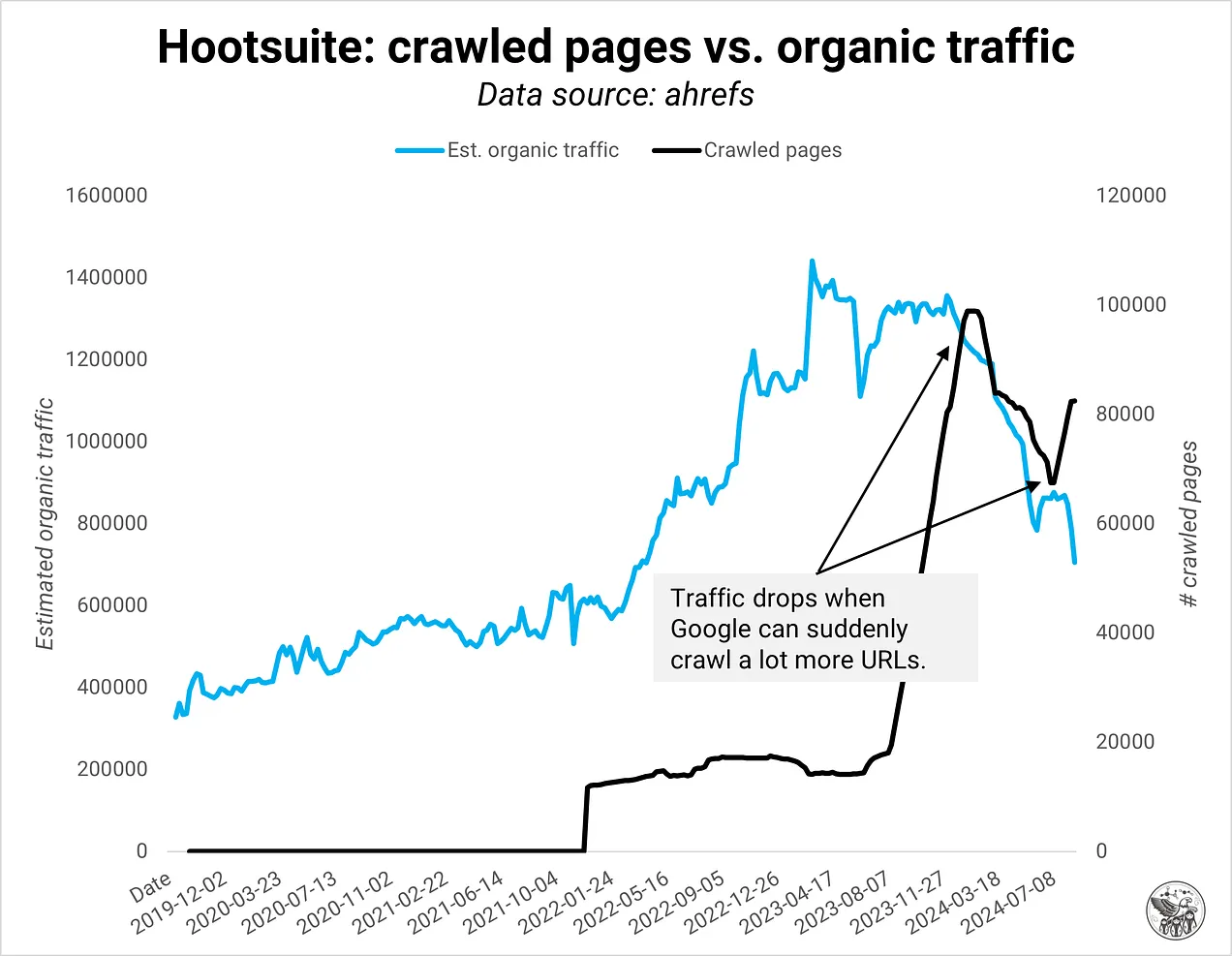

In my work with some of the biggest sites on the web, I have recently noticed an inverse relationship between indexed pages and organic traffic. More pages are not automatically bad, but they often don’t meet Google’s quality expectations. Or, put better, the definition of quality has changed. The stakes for SEOs are high: expand too aggressively, and your whole domain might suffer. We need to change our mindset about quality and develop monitoring systems that help us understand domain quality at the page level.

Satiated

Google has changed how it treats domains, starting around October 2023: none of the examples I looked at showed the inverse relationship before October. Google also had indexing issues when it launched the October 2023 Core algorithm update, just as it did during the August 2024 update.

Before the change, Google indexed everything and prioritized the highest-quality content on a domain. Think about it like gold panning, where you fill a pan with gravel, soil and water and then swirl and stir until only valuable material remains.

Now, a domain and its content need to prove themselves before Google even tries to dig for gold. If the domain has too much low-quality content, Google might index only some pages or none at all in extreme cases.

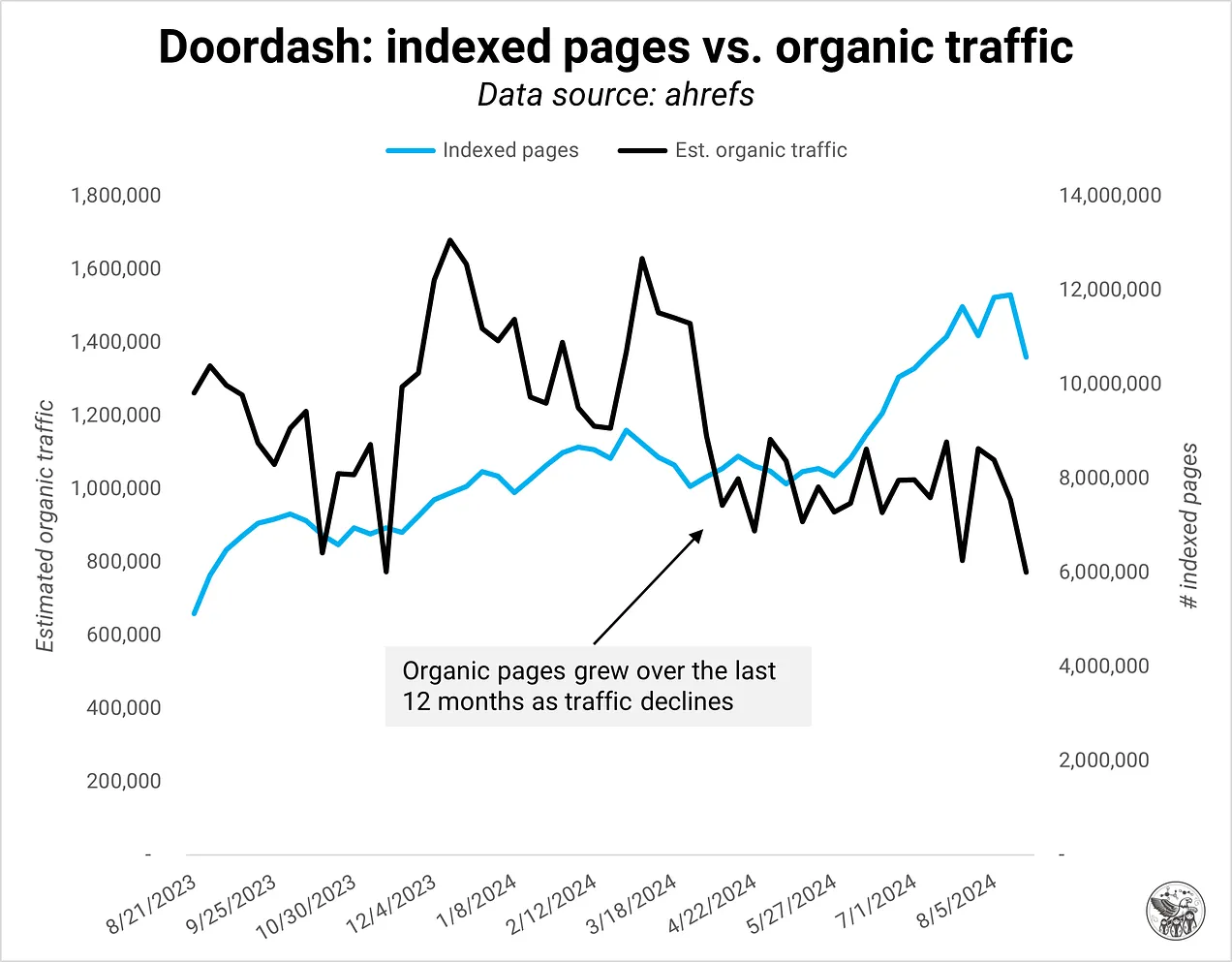

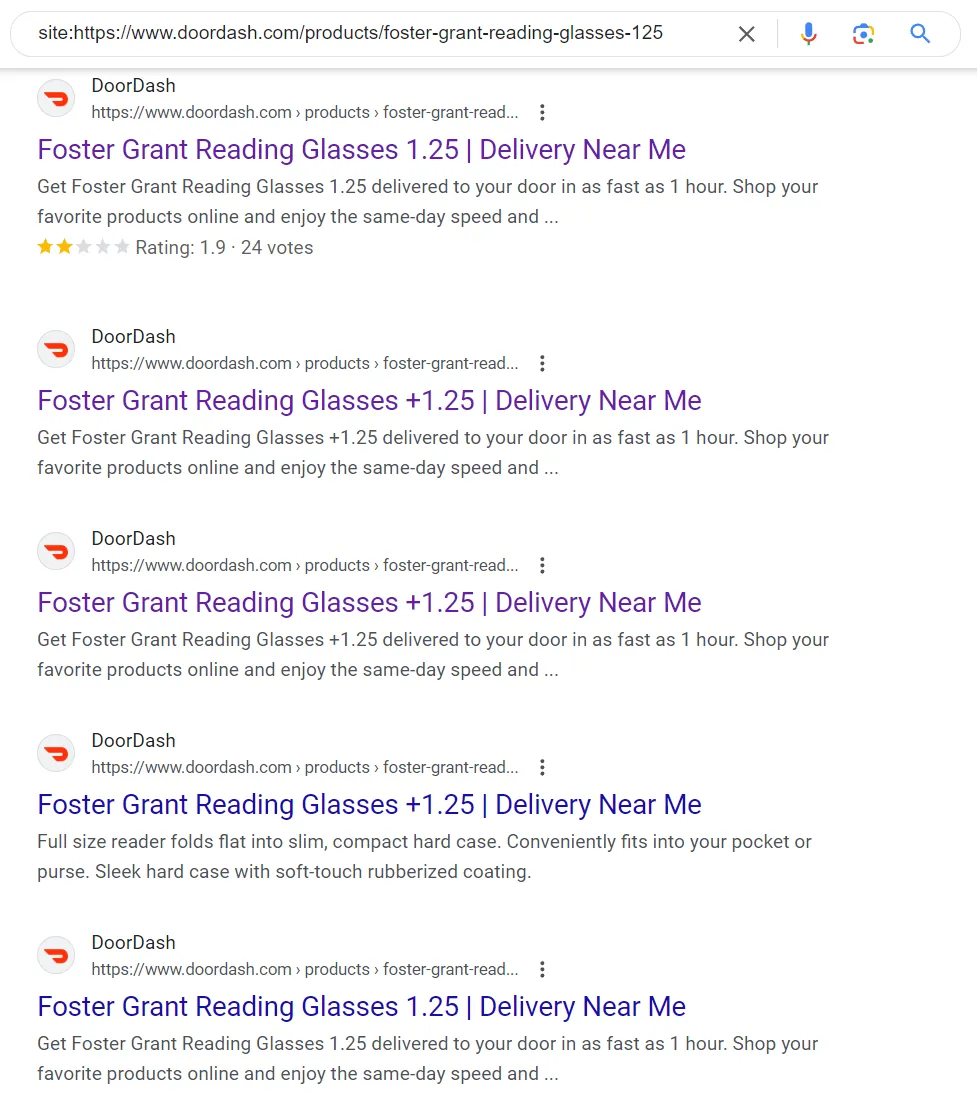

One example is doordash.com, which added many pages over the last 12 months and lost organic traffic in the process. At least some, maybe all, of the new pages didn’t meet Google’s quality expectations.

Image Credit: Kevin Indig

But why? What changed? My reasoning:

- Google wants to save resources and costs as the company moves to an operational efficiency state of mind.

- Partial indexing is more effective against low-quality content and spam. Instead of indexing and then trying to rank new pages of a domain, Google observes the overall quality of a domain and handles new pages with corresponding skepticism.

- If a domain repeatedly produces low-quality content, it doesn’t get a chance to pollute Google’s index further.

- Google’s bar for quality has increased because there is so much more content on the web, but also because Google wants to optimize its index for RAG (grounding AI Overviews) and for training models.

This emphasis on domain quality as a signal means you have to change the way you monitor your website to account for quality. My guiding principle: “If you can’t add anything new or better to the web, it’s likely not good enough.”

Quality Food

Domain quality is my term for the ratio of indexed pages that meet Google’s quality standard vs. those that don’t. Note that only indexed pages count toward quality. The maximum percentage of “bad” pages before Google reduces traffic to a domain is unclear, but we can certainly see when it’s reached:

Image Credit: Kevin Indig

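To make the ratio concrete, here is a minimal Python sketch. It assumes you already have, per URL, an indexed status (for example from a Search Console export) and a `meets_quality_bar` flag produced by your own checks; that flag is hypothetical, since Google does not expose its quality judgment. Non-indexed pages are excluded because only indexed pages count.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Page:
    url: str
    indexed: bool
    meets_quality_bar: bool  # hypothetical flag from your own quality checks


def domain_quality(pages: List[Page]) -> Optional[float]:
    """Share of *indexed* pages that meet the quality bar (0.0 to 1.0).

    Returns None when nothing is indexed, because the ratio is undefined.
    """
    indexed = [p for p in pages if p.indexed]
    if not indexed:
        return None
    good = sum(1 for p in indexed if p.meets_quality_bar)
    return good / len(indexed)
```

With three indexed pages of which two pass, the ratio is about 0.67; a fourth, non-indexed page does not move it either way.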

I define domain quality as a signal composed of 3 areas: user experience, content quality and technical condition:

- User experience: are users finding what they’re looking for?

- Content quality: information gain, content design, comprehensiveness

- Technical condition: duplicate content, rendering, on-page content for context, “Crawled - currently not indexed” and “Discovered - currently not indexed” pages, soft 404s
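Soft 404s are one of the technical checks you can automate. The sketch below is a heuristic, not Google’s classifier: it flags pages that return HTTP 200 but either contain error-page wording or are very thin. The phrase list and the `min_words` threshold are assumptions you would tune to your own templates.

```python
import re

# Hypothetical phrases; adjust to the wording your own templates use.
NOT_FOUND_PHRASES = ("page not found", "no results found", "no longer available")


def is_soft_404_candidate(status_code: int, body_text: str, min_words: int = 50) -> bool:
    """Heuristic: flag pages that return 200 but look empty or like an error page."""
    if status_code != 200:
        # Real 4xx/5xx responses are proper errors, not *soft* 404s.
        return False
    text = body_text.lower()
    if any(phrase in text for phrase in NOT_FOUND_PHRASES):
        return True
    # Very thin pages are another common soft-404 pattern.
    return len(re.findall(r"\w+", text)) < min_words
```

Treat the output as a review queue rather than a verdict: a short but legitimate page (a store locator entry, for example) can trip the word-count rule.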

An example of duplicate content (Image Credit: Kevin Indig)

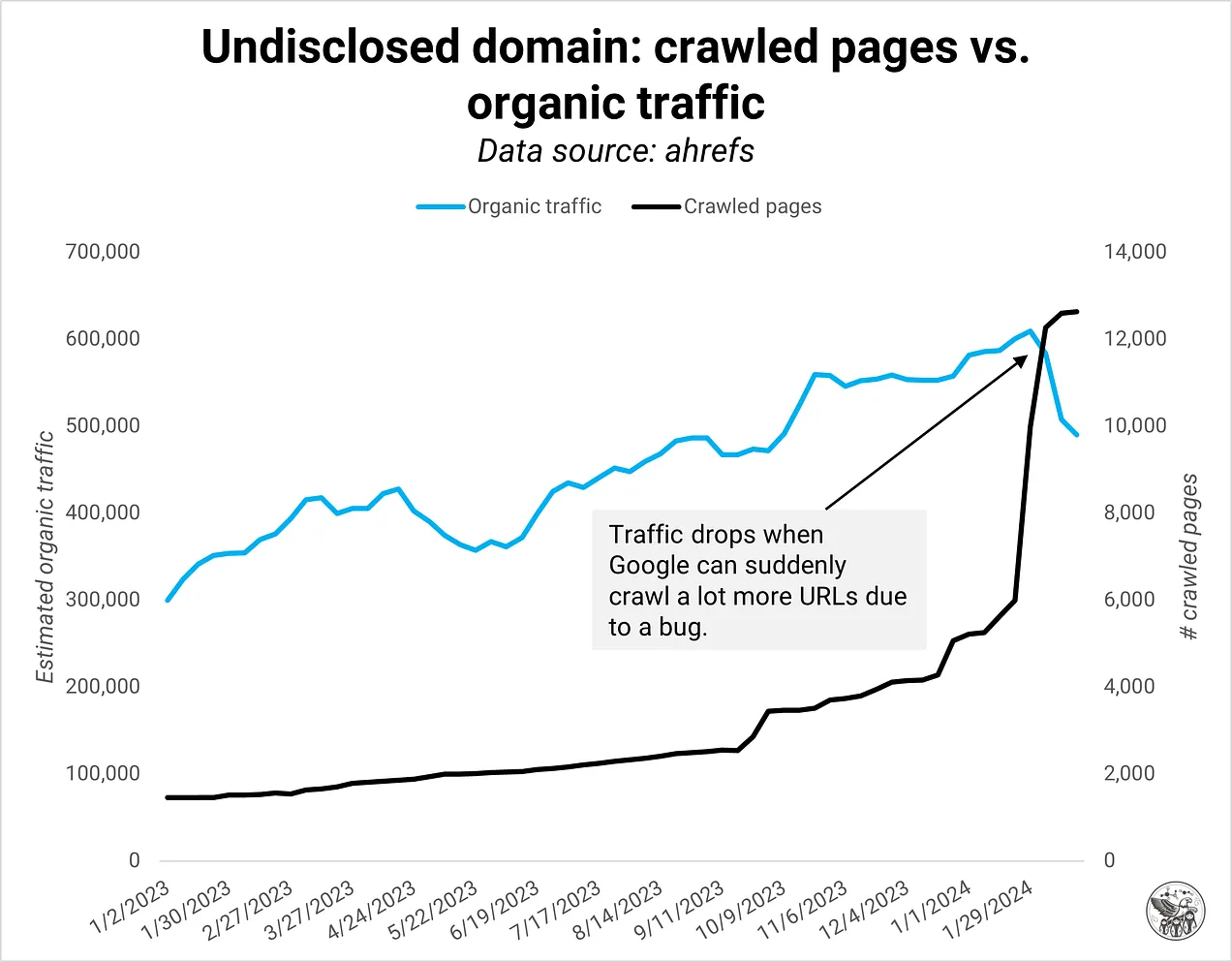

A sudden spike in indexed pages usually indicates a technical issue like duplicate content from parameters, internationalization, or broken pagination. In the example below, Google immediately reduced organic traffic to this domain when its pagination logic broke and created lots of duplicate content. I’ve never seen Google react so fast to technical bugs, but that’s the new state of SEO we’re in.

Image Credit: Kevin Indig

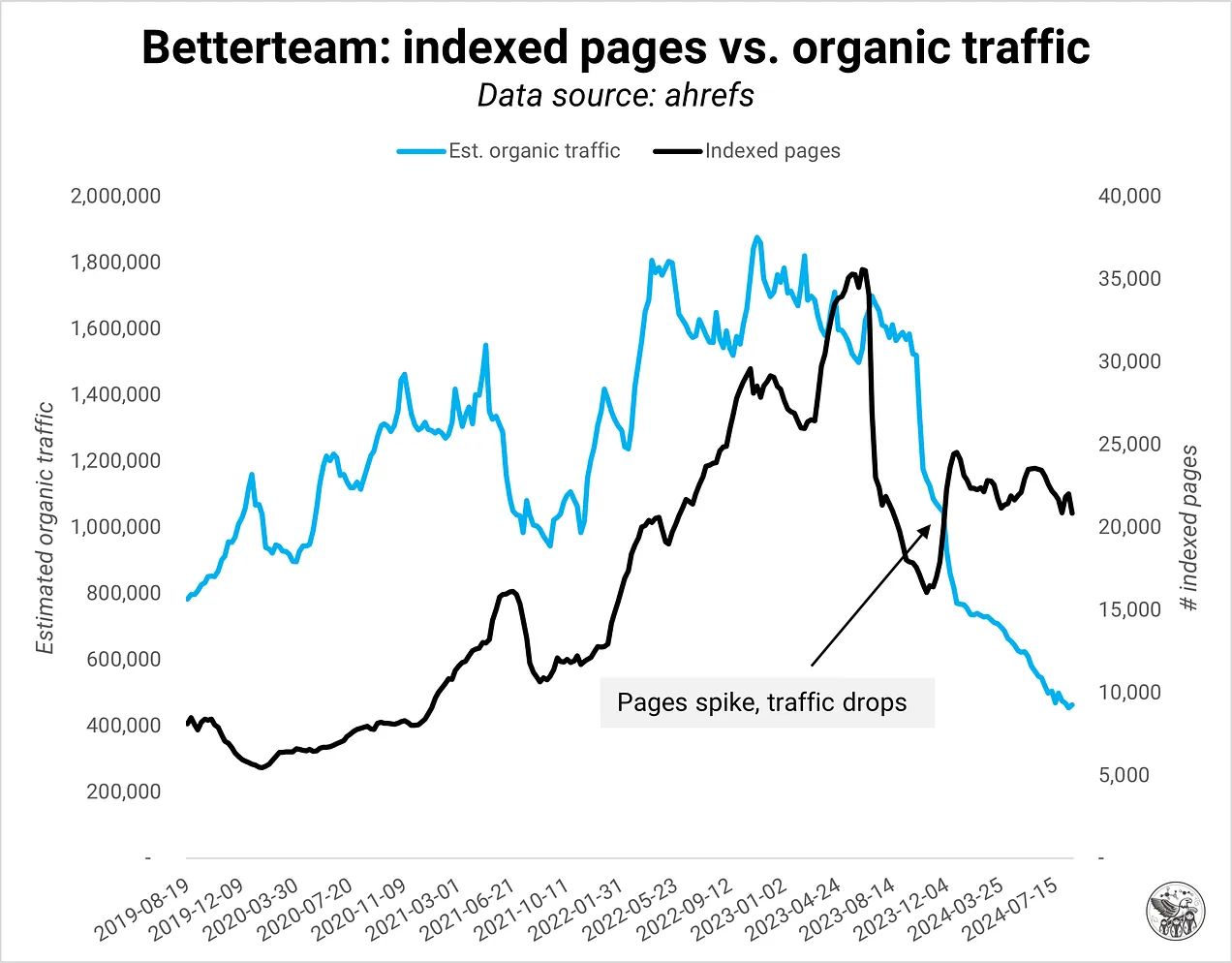
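One way to catch parameter-driven duplicates before Google does is to normalize URLs and group those that collapse to the same address. A minimal sketch using Python’s standard `urllib.parse`; which parameters are safe to ignore (`IGNORED_PARAMS`, the `utm_` prefix) and whether a trailing slash matters are assumptions that depend on your site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: query parameters assumed NOT to change page content on this site.
IGNORED_PARAMS = {"sort", "ref", "sessionid"}
IGNORED_PREFIXES = ("utm_",)


def normalize(url: str) -> str:
    """Drop ignorable params, sort the rest, lowercase host, strip trailing slash."""
    parts = urlsplit(url)
    kept = sorted(
        (k, v)
        for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if k not in IGNORED_PARAMS and not k.startswith(IGNORED_PREFIXES)
    )
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme, parts.netloc.lower(), path, urlencode(kept), ""))


def duplicate_clusters(urls):
    """Map each normalized URL to the raw URLs that collapse onto it (2+ only)."""
    groups = defaultdict(list)
    for u in urls:
        groups[normalize(u)].append(u)
    return {k: v for k, v in groups.items() if len(v) > 1}
```

Feeding it URLs from your indexed-pages export or server logs surfaces clusters like `/shoes`, `/shoes?utm_source=x`, and `/shoes/?sort=price`, which are candidates for canonicals or parameter cleanup.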

In other cases, a spike in indexed pages indicates a programmatic SEO play where the domain launched a lot of pages on the same template. When the content quality on programmatic pages is not good enough, Google quickly turns off the traffic faucet.

Image Credit: Kevin Indig


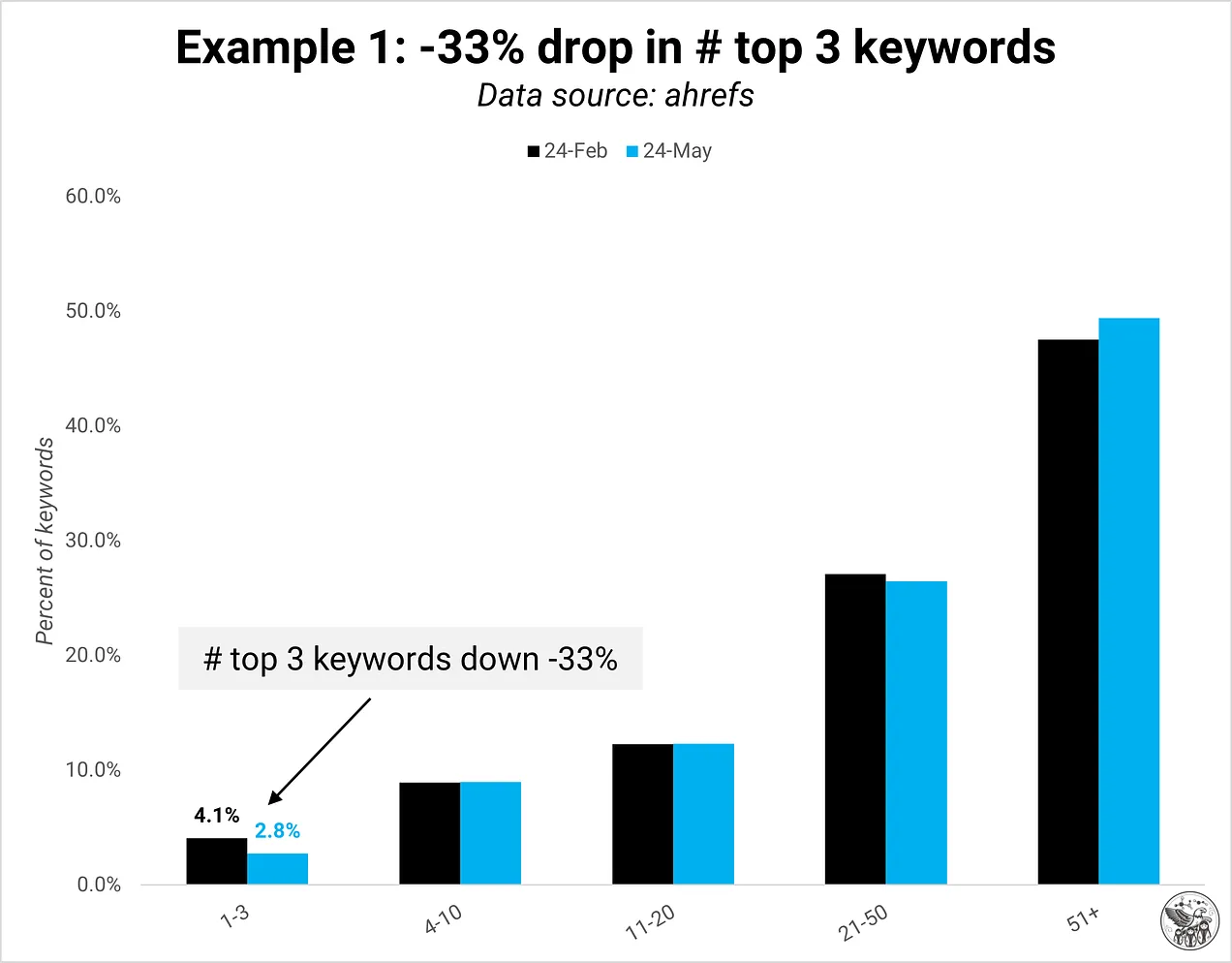

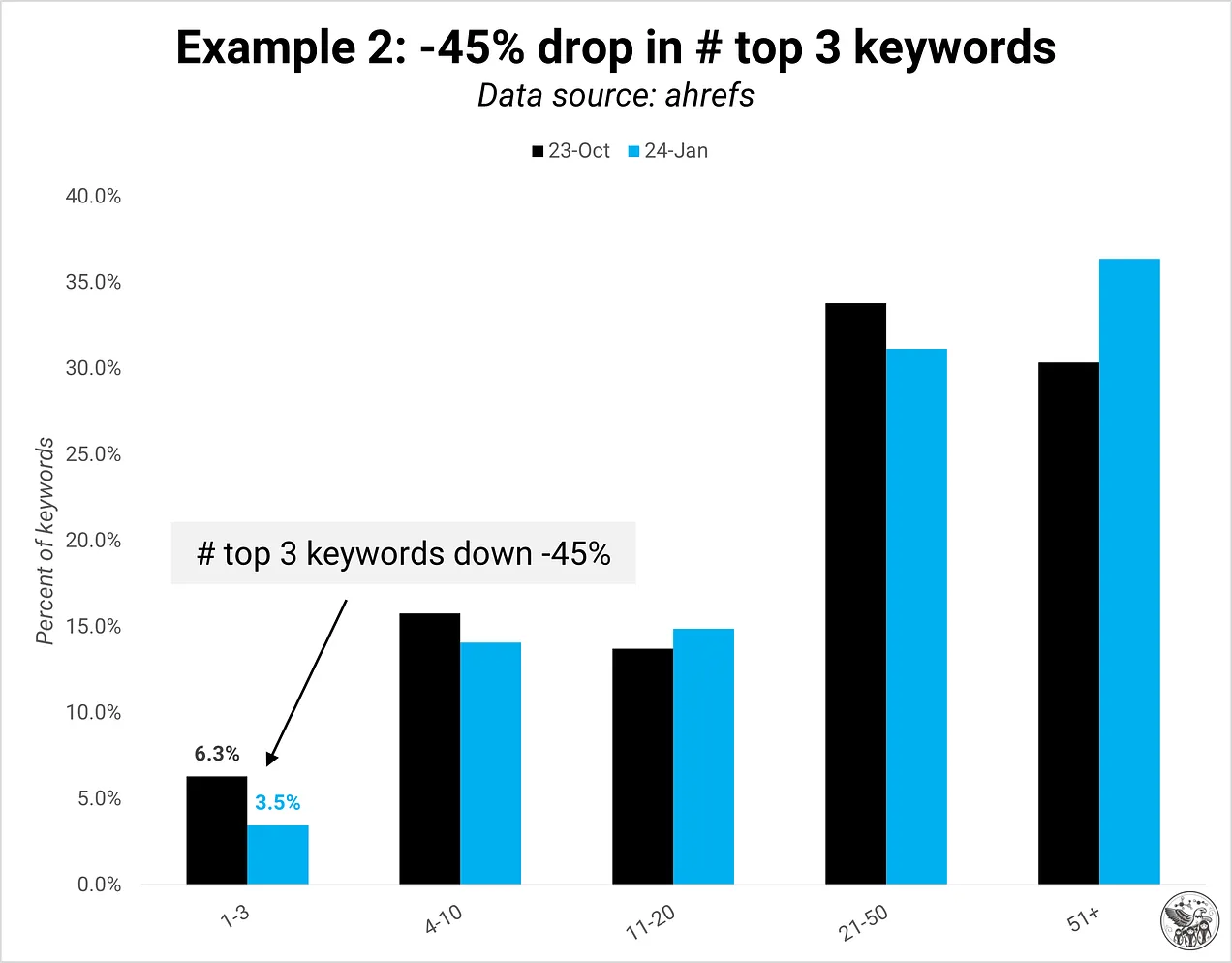

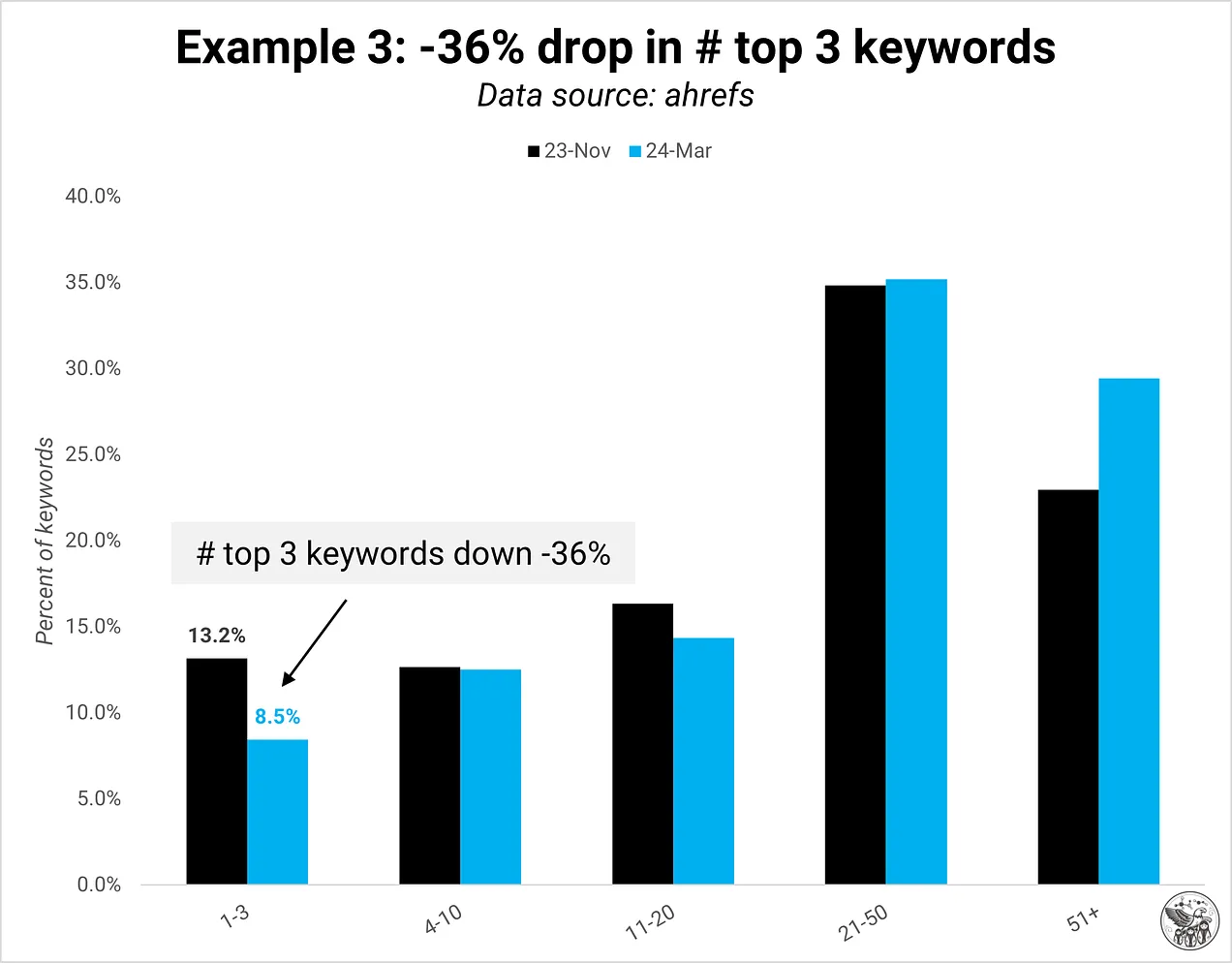
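Whether the cause is a bug or a programmatic launch, the first signal is the same: the indexed-page count jumps. A minimal sketch of a spike check, assuming a list of daily indexed-page counts (for example exported from Search Console’s page indexing report). The 7-day window and 1.2x threshold are illustrative, not recommended values.

```python
from statistics import median


def find_spikes(counts, window=7, threshold=1.2):
    """Return indices of days whose count exceeds `threshold` x the trailing median.

    The median of the previous `window` days is used as a baseline so a single
    earlier outlier does not distort it.
    """
    spikes = []
    for i in range(window, len(counts)):
        baseline = median(counts[i - window:i])
        if baseline > 0 and counts[i] > baseline * threshold:
            spikes.append(i)
    return spikes
```

A jump from a steady 1,000 indexed pages to 1,500 is flagged on the day it happens; the check keeps firing on the following days until the new level dominates the trailing window, which is usually what you want from an alert.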

In response, Google often reduces the number of keywords ranking in the top 3 positions. The number of keywords ranking in other positions is often relatively stable.

Image Credit: Kevin Indig

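Because the loss tends to concentrate in the top 3, it helps to track rank buckets rather than a single average position. A minimal sketch, assuming two snapshots mapping keyword to position (from a rank tracker or a Search Console export); the bucket boundaries mirror the text and are otherwise arbitrary.

```python
from collections import Counter


def bucket(position: float) -> str:
    """Assign a ranking position to a coarse bucket."""
    if position <= 3:
        return "top 3"
    if position <= 10:
        return "4-10"
    return "11+"


def bucket_counts(ranks: dict) -> Counter:
    """Count keywords per bucket for one snapshot {keyword: position}."""
    return Counter(bucket(p) for p in ranks.values())


def bucket_changes(before: dict, after: dict) -> dict:
    """Change in keyword count per bucket between two snapshots."""
    b, a = bucket_counts(before), bucket_counts(after)
    return {k: a[k] - b[k] for k in ("top 3", "4-10", "11+")}
```

A pattern like `{"top 3": -1, "4-10": 1, "11+": 0}` (top-3 keywords sliding down while deeper positions stay flat) is the shape described above.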

Size amplifies the problem: domain quality can be a bigger issue for larger sites, although smaller ones can also be affected.

Adding new pages to your domain is not bad per se. You just want to be careful about it. For example, publishing new thought leadership or product marketing content that doesn’t directly target a keyword can still be very valuable to site visitors. That’s why measuring engagement and user satisfaction on top of SEO metrics is critical.

Diet Plan

The most critical way to keep the “fat” (low-quality pages) off and reduce the risk of getting hit by a Core update is to put the right monitoring system in place. It’s hard to improve what you don’t measure.

At the heart of a domain quality monitoring system is a dashboard that tracks metrics for each page and measures them against the average. If I could pick only three metrics, I would measure inverse bounce rate, conversions (soft and hard), and clicks and rankings, comparing each page against the average for its page type. Ideally, your system also alerts you when crawl rate spikes, especially for new pages that weren’t crawled before.
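The core of such a dashboard can be sketched in a few lines. This is a minimal illustration, not a production system: `PageStats`, the rule of flagging a page that falls below its page-type average on at least two of three metrics, and the crawl-log comparison are all assumptions for the example. Rankings are left out for brevity; if you add average position, remember that lower is better, so that comparison flips.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    page_type: str              # your own taxonomy, e.g. "product", "blog"
    inverse_bounce_rate: float  # 1 - bounce rate, 0.0..1.0
    conversions: int            # soft + hard conversions
    clicks: int                 # organic clicks


METRICS = ("inverse_bounce_rate", "conversions", "clicks")


def type_averages(pages):
    """Average of each metric per page type."""
    totals = defaultdict(lambda: defaultdict(float))
    counts = defaultdict(int)
    for p in pages:
        counts[p.page_type] += 1
        for m in METRICS:
            totals[p.page_type][m] += getattr(p, m)
    return {t: {m: totals[t][m] / counts[t] for m in METRICS} for t in counts}


def underperformers(pages, min_misses=2):
    """Pages below their page-type average on at least `min_misses` metrics."""
    avgs = type_averages(pages)
    flagged = []
    for p in pages:
        misses = [m for m in METRICS if getattr(p, m) < avgs[p.page_type][m]]
        if len(misses) >= min_misses:
            flagged.append((p.url, misses))
    return flagged


def new_crawled_urls(crawled_today, seen_before):
    """URLs crawled today that never appeared in earlier crawl logs."""
    return set(crawled_today) - set(seen_before)
```

Comparing within a page type keeps a blog post from being judged against a product page; the `new_crawled_urls` set is a cheap trigger for the crawl alert, assuming you keep a history of crawled URLs from your server logs.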

As I write in “How the best companies measure content quality”:

1/ For production quality, measure metrics like SEO editor score, Flesch/readability score, or # spelling/grammatical errors

2/ For performance quality, measure metrics like # top 3 ranks, ratio of time on page vs. estimated reading time, inverse bounce rate, scroll depth or pipeline value

3/ For preservation quality, measure performance metrics over time and year-over-year
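A few of these metrics reduce to simple arithmetic. The sketch below computes the standard Flesch Reading Ease formula (206.835 - 1.015 x words per sentence - 84.6 x syllables per word), the ratio of time on page to estimated reading time, and a year-over-year change. The syllable counter is a rough heuristic, and the 200 words-per-minute reading speed is an assumption; dedicated readability tools will give somewhat different scores.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, drop a silent trailing 'e'; minimum 1."""
    word = word.lower()
    n = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and n > 1:
        n -= 1
    return max(n, 1)


def flesch_reading_ease(text: str):
    """Production quality: Flesch Reading Ease (higher = easier to read)."""
    sentences = max(1, len(re.findall(r"[.!?]+", text)))
    words = re.findall(r"[A-Za-z']+", text)
    if not words:
        return None
    syllables = sum(count_syllables(w) for w in words)
    return 206.835 - 1.015 * (len(words) / sentences) - 84.6 * (syllables / len(words))


def reading_time_ratio(time_on_page_s: float, word_count: int, wpm: float = 200) -> float:
    """Performance quality: actual time on page vs. estimated reading time."""
    estimated_s = word_count / wpm * 60
    return time_on_page_s / estimated_s


def yoy_change(this_year: float, last_year: float):
    """Preservation quality: relative year-over-year change (None if no baseline)."""
    if last_year == 0:
        return None
    return (this_year - last_year) / last_year
```

For example, 90 seconds on a 600-word page gives a ratio of 0.5, meaning visitors spend about half the time a full read would take.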

Ignore pages like Terms of Service or About Us when monitoring your site because their function is unrelated to SEO.

Gain Phase

Monitoring is the first step to understanding your site’s domain quality. You do not always need to add more pages to grow. Often, you can improve your existing page inventory, but you need a monitoring system to figure this out in the first place.

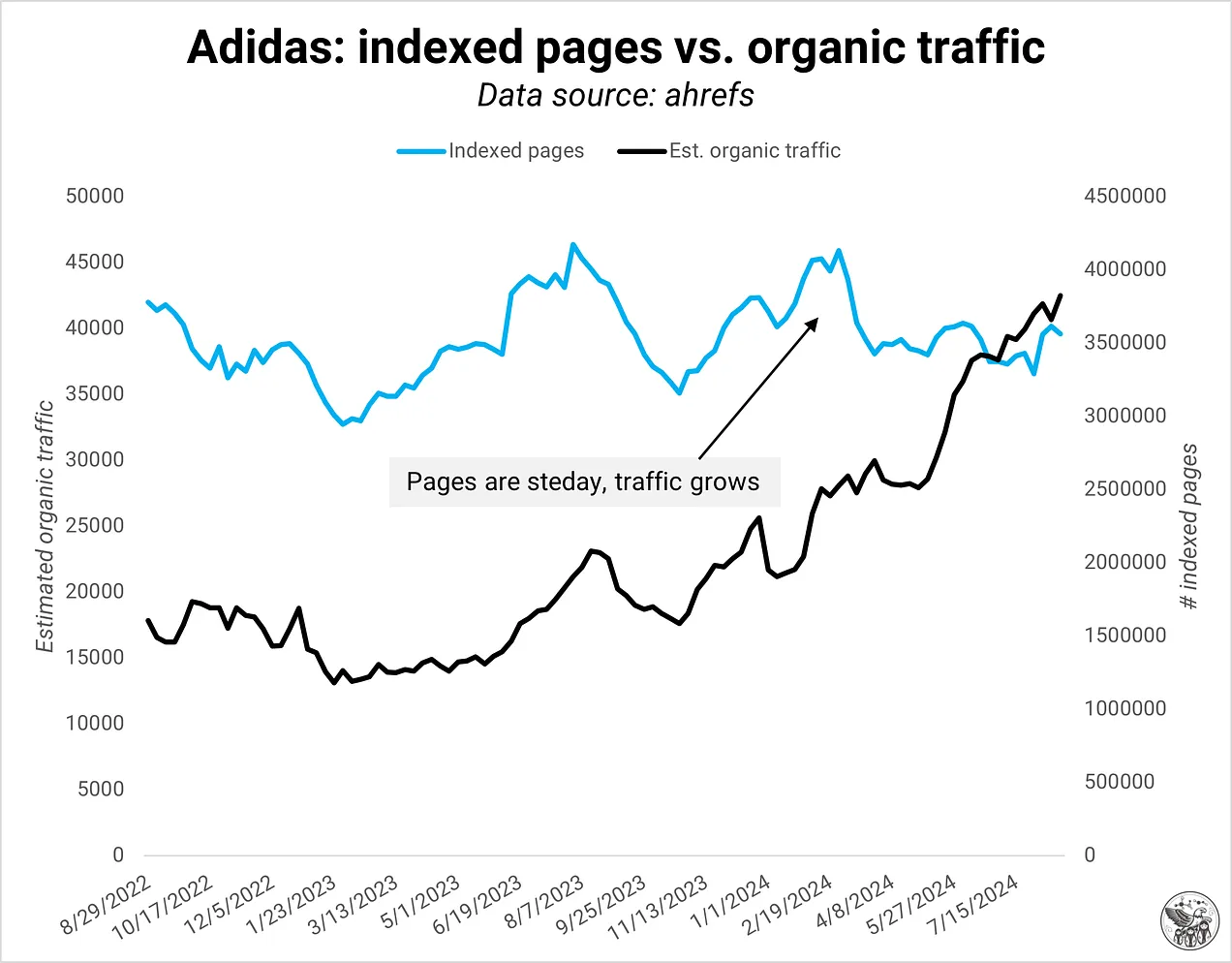

Adidas is a good example of a domain that was able to grow organic traffic just by optimizing its existing pages.

Image Credit: Kevin Indig

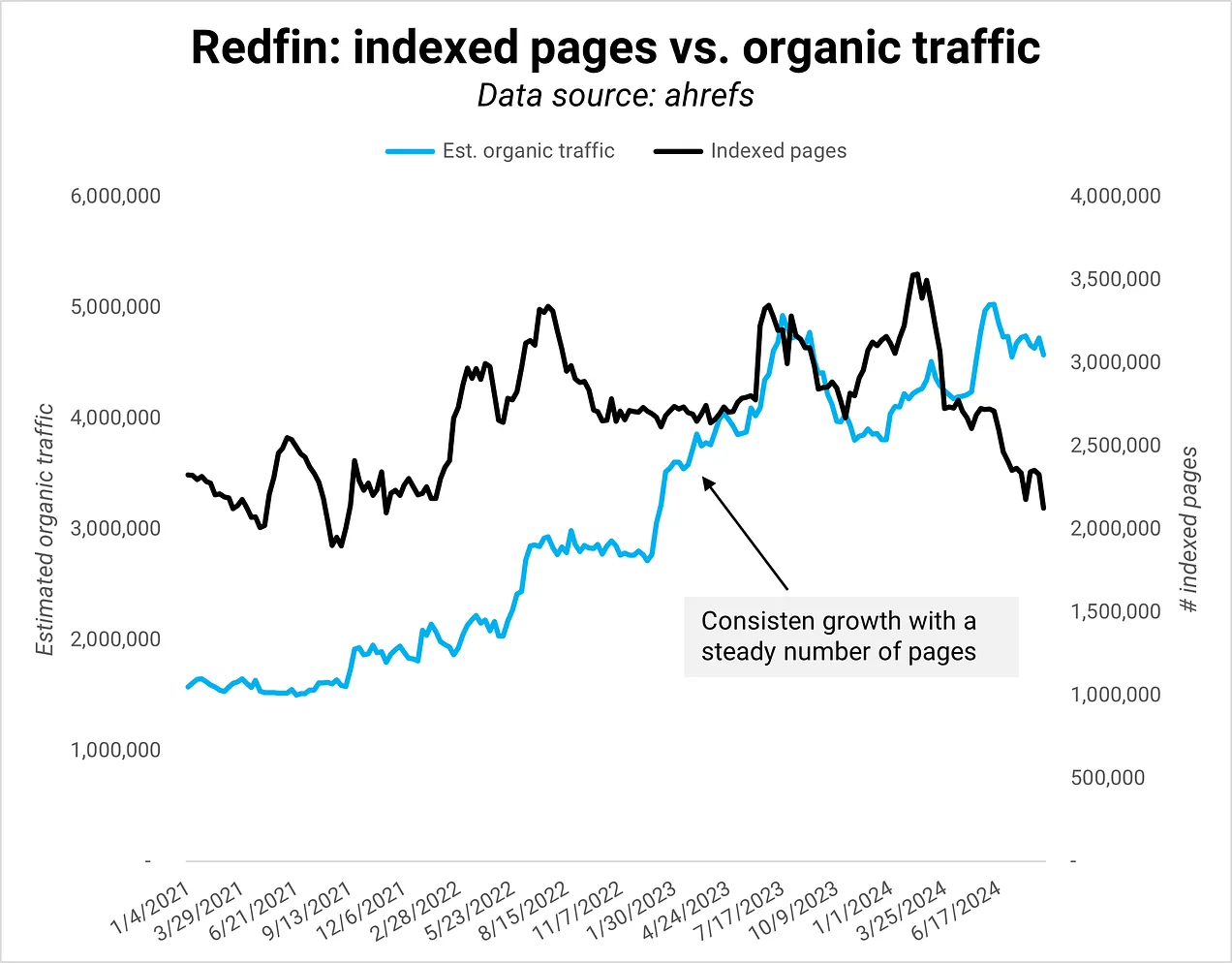

Another example is Redfin, which maintained a consistent number of pages while significantly increasing organic traffic.

Image Credit: Kevin Indig

Quoting Redfin’s Senior Director of Product Growth in my Redfin Deep Dive on meeting the right quality bar:

“Bringing our local expertise to the website – being the authority on the housing market, answering what it’s like to live in an area, offering a complete set of for sale and rental inventory across the United States.

“Maintaining technical excellence – our site is large (100m+ pages) so we can’t sleep on things like performance, crawl health, and data quality. Sometimes the least “sexy” efforts can be the most impactful.”

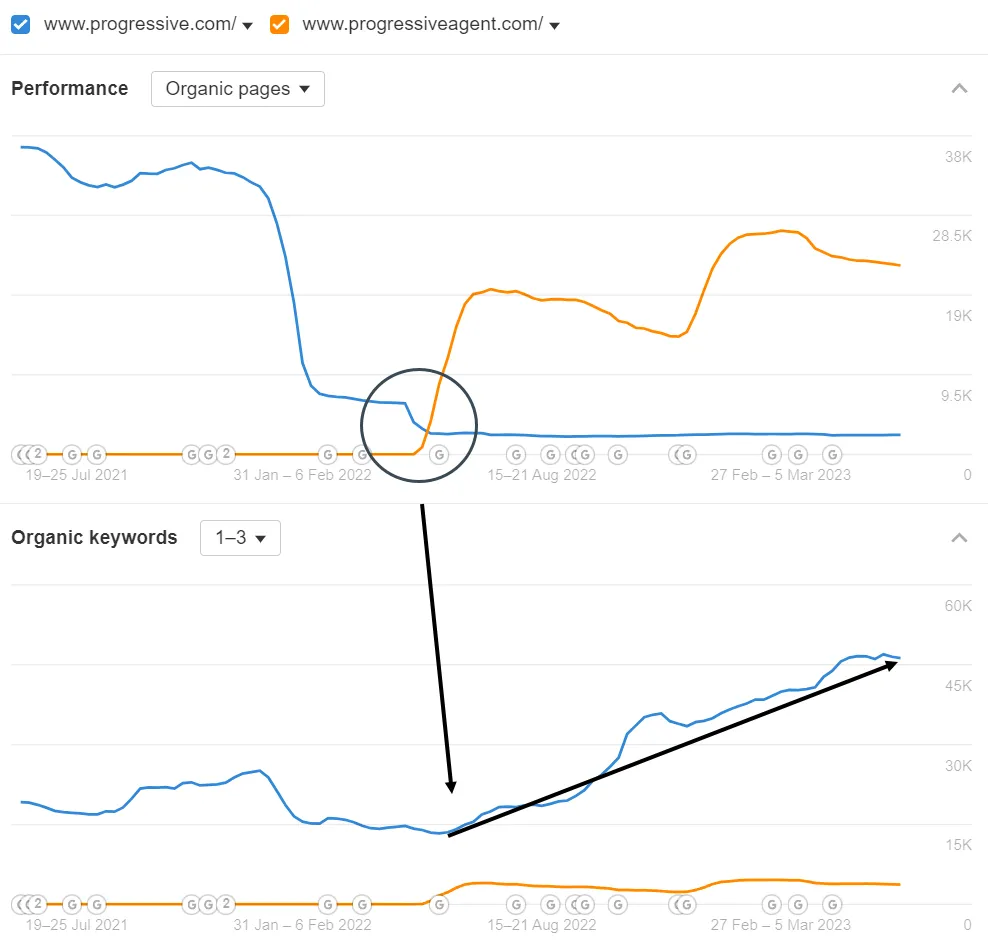

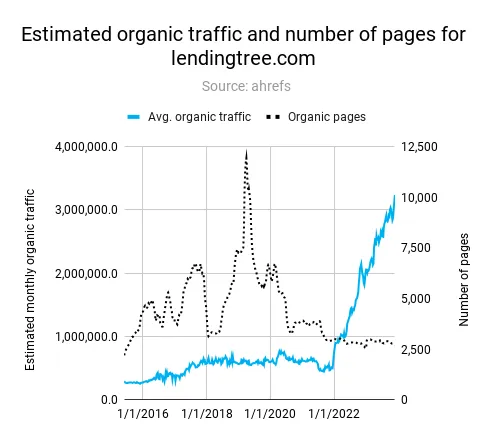

Companies like LendingTree and Progressive saw significant gains by reducing the number of pages on their main domains that didn’t meet their quality standards (see screenshots from the Deep Dives below).

Progressive moved agent pages to a separate domain to increase the quality of its main domain. (Image Credit: Kevin Indig)

LendingTree cut a lot of low-quality pages to boost organic traffic growth. (Image Credit: Kevin Indig)

Conclusion

Google rewards sites that stay fit. In 2020, I wrote about how Google’s index might be smaller than we think. Index size used to be a goal in the early days. But today, it’s less about getting as many pages indexed as possible and more about having the right pages indexed. The definition of “good” has evolved. Google is pickier about who it lets into the club.

In the same article, I put forward the hypothesis that Google would switch to an indexing API and let site owners take responsibility for indexing. That hasn’t come to fruition, but you could say Google is using more APIs for indexing:

- The $60 million/year agreement between Google and Reddit provides one-tenth of Google’s search results (assuming Reddit appears in the top 10 for almost every keyword).

- In e-commerce, where more organic listings show up higher in search results, Google relies more on the product feed in the Merchant Center to index new products and groom its Shopping Graph.

- SERP Features like Top Stories, which are critical in the News industry, are small services with their own indexing logic.

Looking down the road, the big question about indexing is how it will morph when more users search through AI Overviews and AI chatbots. Assuming LLMs will still need to be able to render pages, technical SEO work remains essential. However, the motivation for indexing shifts from surfacing web results to training models. As a result, the value of pages with nothing new to offer will be even closer to zero than it is today.

Boost your skills with Growth Memo’s weekly expert insights. Subscribe for free!

Featured Image: Paulo Bobita/Search Engine Journal
