Over the last 12 months, we filled significant gaps in our understanding of AI Chatbots like ChatGPT & Co.

We know:

- Adoption is growing rapidly.

- AI chatbots send more referrals to websites over time.

- Referral traffic from AI chatbots has a higher quality than that from Google.

You can read all about it in the state of AI chatbots and SEO.

But there isn’t much content about examples and success factors of content that drives citations and mentions in AI chatbots.

To get an answer, I analyzed over 7,000 citations across 1,600 URLs to content-heavy sites (think: Integrators) in AI chatbots (ChatGPT, Perplexity, AI Overviews) in February 2024 with the help of Profound.

My goal is to figure out:

- Why some pages are more cited than others, so we can optimize content for AI chatbots.

- Whether classic SEO factors matter for AI chatbot visibility, so we can prioritize.

- What traps to avoid, so we don’t have to learn the same lessons many times.

- If different factors influence mentions and citations, so we can be more targeted in our efforts.

Here are my findings:


The Key To Brand Citation In AI Chatbots: Deep Content

Image Credit: Kevin Indig

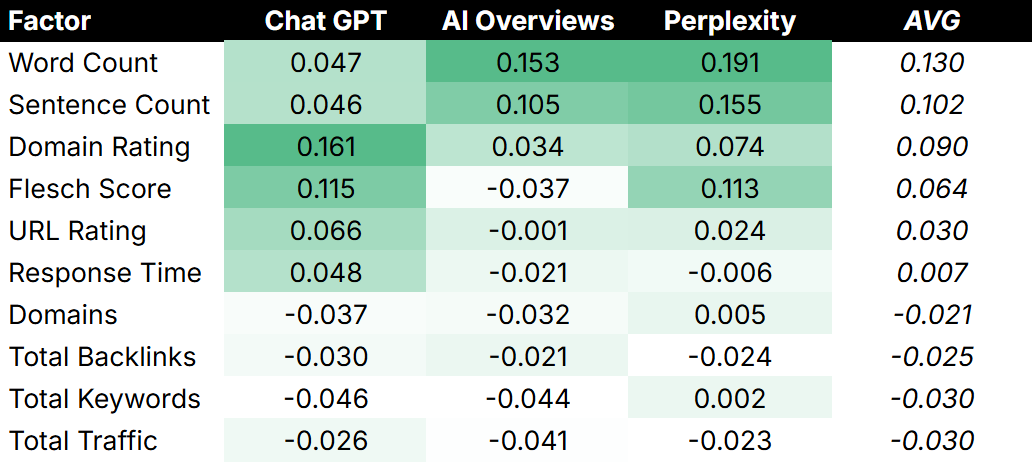

🔍 Context: We know that AI chatbots use Retrieval Augmented Generation (RAG) to ground their answers in results from Google and Bing. However, does that mean classic SEO ranking factors also translate to AI chatbot citations? No.

My correlation analysis shows that none of the classic SEO metrics have strong relationships with citations. The LLMs show only slight preferences: Perplexity and AIOs weigh word and sentence count higher, while ChatGPT weighs domain rating and Flesch Score higher.

💡Takeaway: Classic SEO metrics don’t matter nearly as much for AI chatbot mentions and citations. The best thing you can do for content optimization is to aim for depth, comprehensiveness, and readability (how easy the text is to understand).

The following examples all demonstrate those attributes:

- https://www.byrdie.com/digital-prescription-services-dermatologist-5179537

- https://www.healthline.com/nutrition/best-weight-loss-programs

- https://www.verywellmind.com/we-tried-online-therapy-com-these-were-our-experiences-8780086
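Readability in this analysis is expressed as a Flesch Score. As a rough illustration of what that number captures, here is a minimal sketch of the standard Flesch Reading Ease formula. It assumes that this is the variant behind the scores quoted in this article, and the syllable counter is a crude heuristic of my own for illustration, so results will differ slightly from those of dedicated tools.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic (an assumption): count vowel groups, drop a silent trailing 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_reading_ease(text: str) -> float:
    """Flesch Reading Ease: higher scores mean the text is easier to read."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text needs at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


if __name__ == "__main__":
    sample = "Online therapy can help. It is easy to start."
    print(round(flesch_reading_ease(sample), 1))
```

Short sentences and short words push the score up, which is why long, deep content can still score well when it is written plainly.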

Broad correlations didn’t reveal enough meat on the bone and left me with too many open questions.

So, I looked at what the most-cited content does differently than the rest. That approach showed much stronger patterns.

Image Credit: Kevin Indig

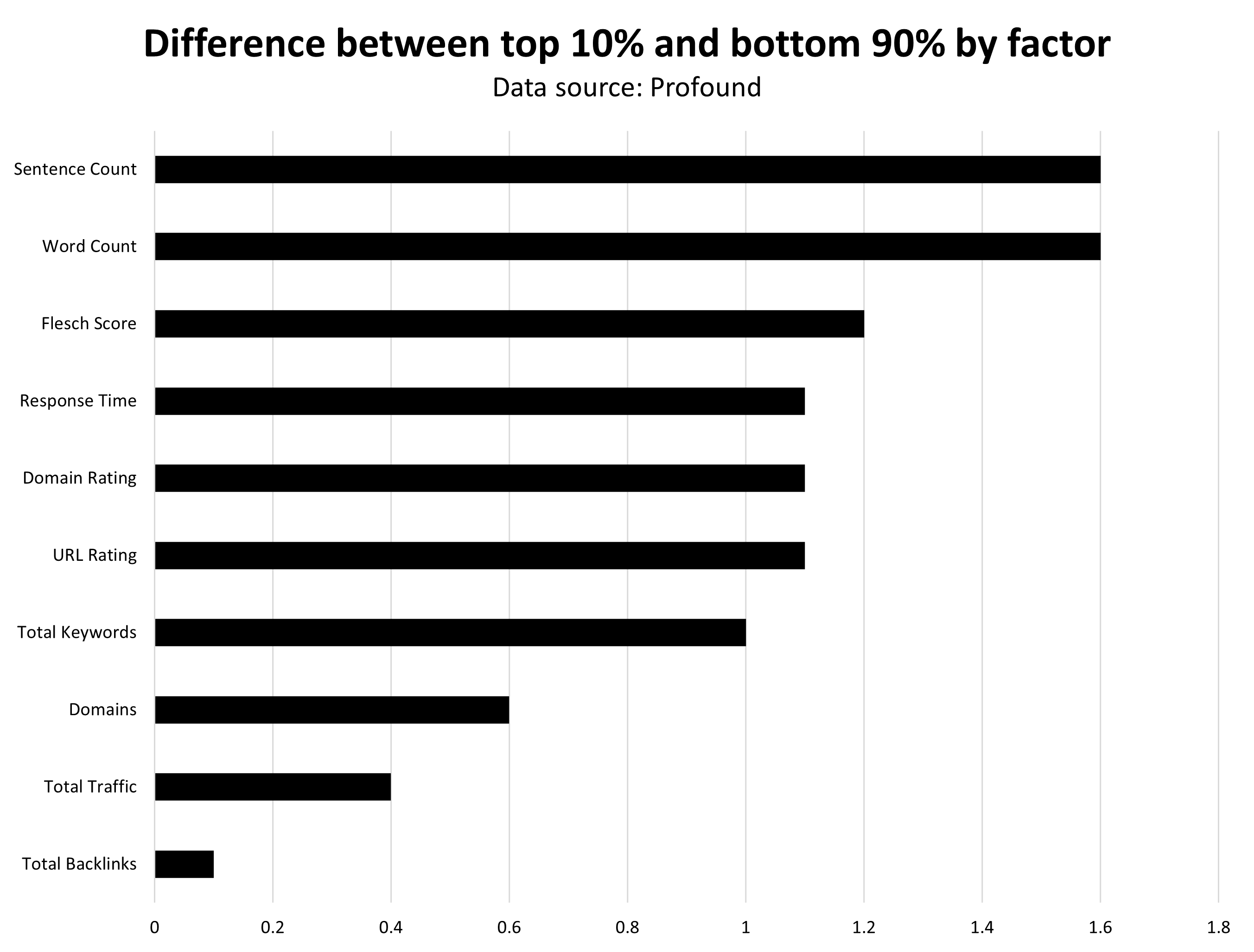

🔍Context: Because I didn’t get much out of statistical correlations, I wanted to see how the top 10% of most cited content stacks up against the bottom 90%.

The bigger the difference, the more critical the factor for the top 10%. In other words, the multiplier (x-axis on the chart) indicates what factors LLMs reward with citations.

The results:

- The two factors that stand out are sentence and word count, followed by the Flesch Score. Metrics related to backlinks and traffic seem to have a negative effect, which doesn’t mean that AI chatbots weigh them negatively but simply that they don’t matter for mentions or citations.

- The top 10% of most cited pages across all three LLMs have much less traffic, rank for fewer keywords, and get fewer total backlinks. How does that make sense? It almost looks like being strong in traditional SEO metrics is bad for AI chatbot visibility.

- Copilot (not included in the chart) has the starkest inequality, by the way. The top 10% have 17.6 more citations than the bottom 90%. However, the top 10% also rank for 1.7x more keywords in organic search. So, Copilot seems to have stronger preferences than other AI chatbots.
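The top 10% versus bottom 90% comparison can be sketched in a few lines. This is a minimal illustration, not the exact method behind the charts: it assumes the multiplier is the ratio of group means (a median would be an equally plausible choice), and the field names and sample numbers are hypothetical.

```python
from statistics import mean


def top_vs_rest_multiplier(pages, metric, top_share=0.10):
    """Ratio of a metric's mean in the most-cited pages vs. all other pages.

    A value above 1 means the most-cited pages score higher on the metric;
    a value below 1 means they score lower.
    """
    if len(pages) < 2:
        raise ValueError("need at least two pages to form two groups")
    ranked = sorted(pages, key=lambda p: p["citations"], reverse=True)
    # Keep at least one page in each group.
    cutoff = min(len(ranked) - 1, max(1, round(len(ranked) * top_share)))
    top, rest = ranked[:cutoff], ranked[cutoff:]
    rest_mean = mean(p[metric] for p in rest)
    if rest_mean == 0:
        raise ValueError(f"mean of {metric!r} in the bottom group is zero")
    return mean(p[metric] for p in top) / rest_mean


# Hypothetical sample: one heavily cited long page and nine shorter ones.
pages = [{"citations": 100, "word_count": 9000, "backlinks": 50}] + [
    {"citations": c, "word_count": 3000, "backlinks": 100} for c in range(1, 10)
]

if __name__ == "__main__":
    for metric in ("word_count", "backlinks"):
        print(metric, top_vs_rest_multiplier(pages, metric))
```

A multiplier below 1, as for backlinks in this made-up sample, only says the most-cited pages have less of that metric. It does not show that the metric hurts citations.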

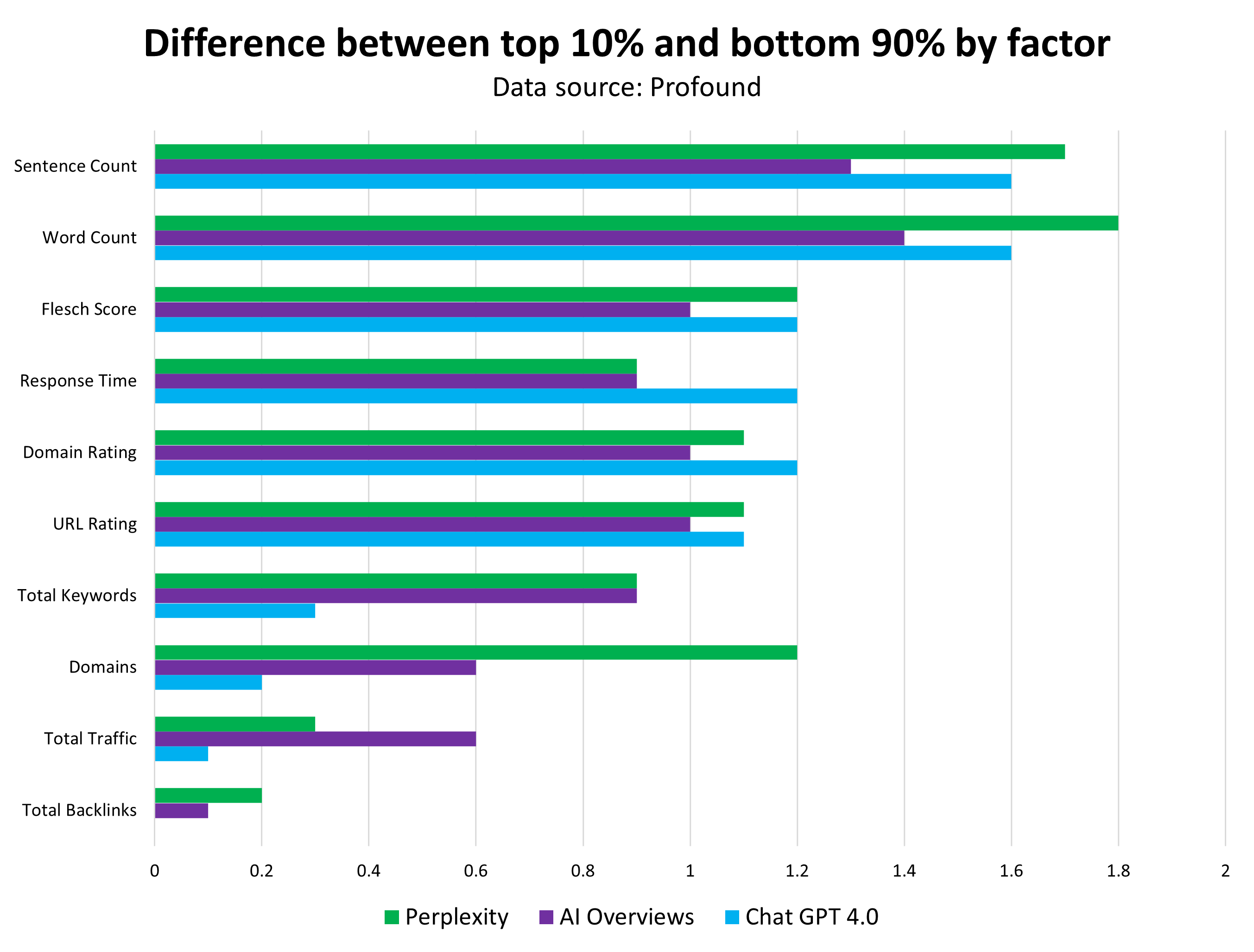

Splitting the data up by AI Chatbot shows you their unique preferences:

Image Credit: Kevin Indig

💡Takeaway: Content depth (word and sentence count) and readability (Flesch Score) have the biggest impact on citations in AI chatbots.

This is important to understand: Longer content isn’t better because it’s longer, but because it has a higher chance of answering a specific question prompted in an AI chatbot.

Examples:

- www.verywellmind.com/best-online-psychiatrists-5119854 has 187 citations, over 10,000 words, and over 1,500 sentences, with a Flesch Score of 55, and is cited 72 times by ChatGPT.

- On the other hand, www.onlinetherapy.com/best-online-psychiatrists/ has only three citations and a similarly low Flesch Score of 48, but comes up “short” with only 3,900 words and 580 sentences.

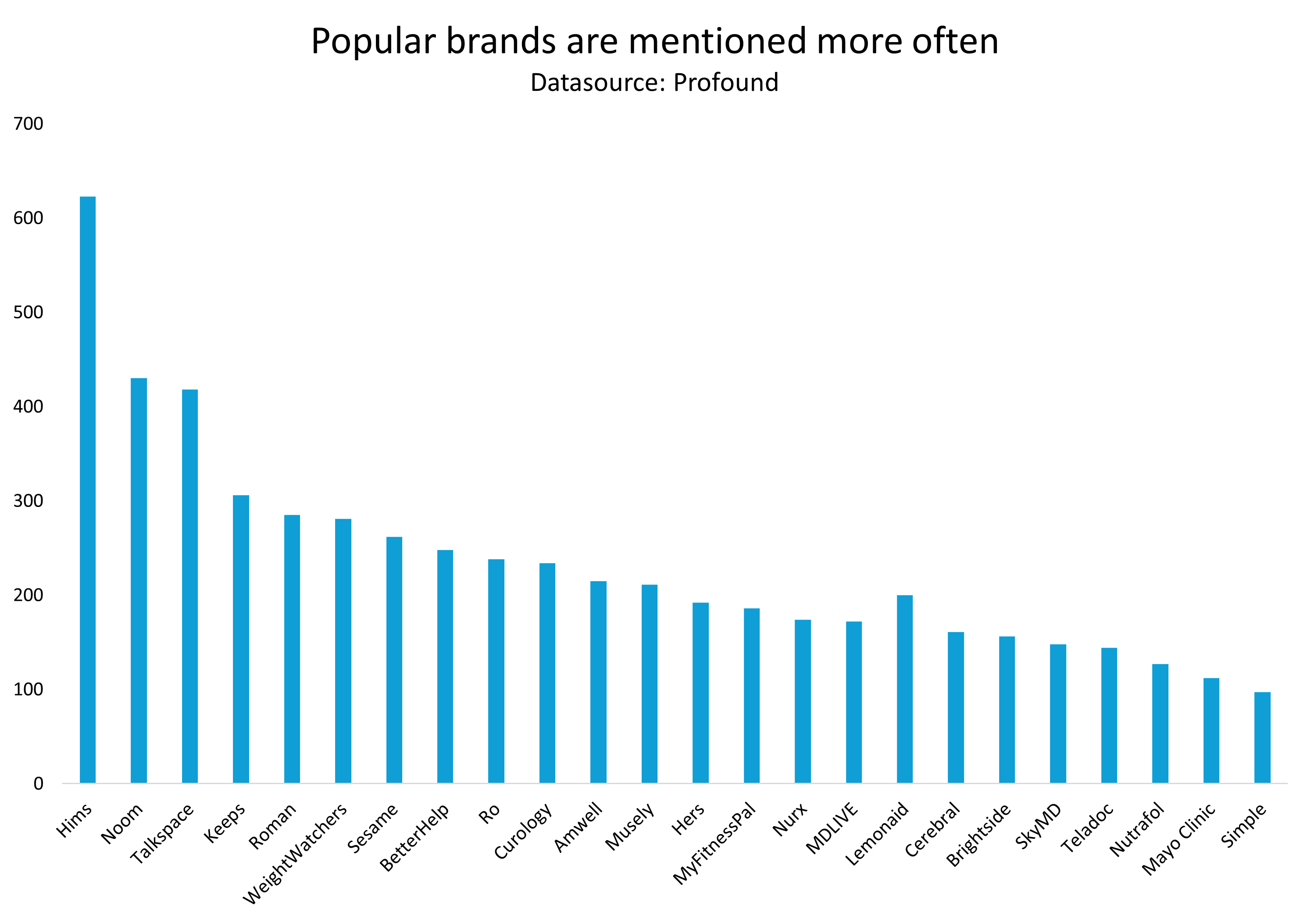

The Key To Brand Mentions In AI Chatbots: Popularity

🔍Context: We don’t yet know the value of a brand being mentioned by an AI chatbot.

Early research indicates it’s high, especially when prompts indicate purchase intent.

However, I wanted to get a step closer by understanding what leads to brand mentions in AI chatbots in the first place.

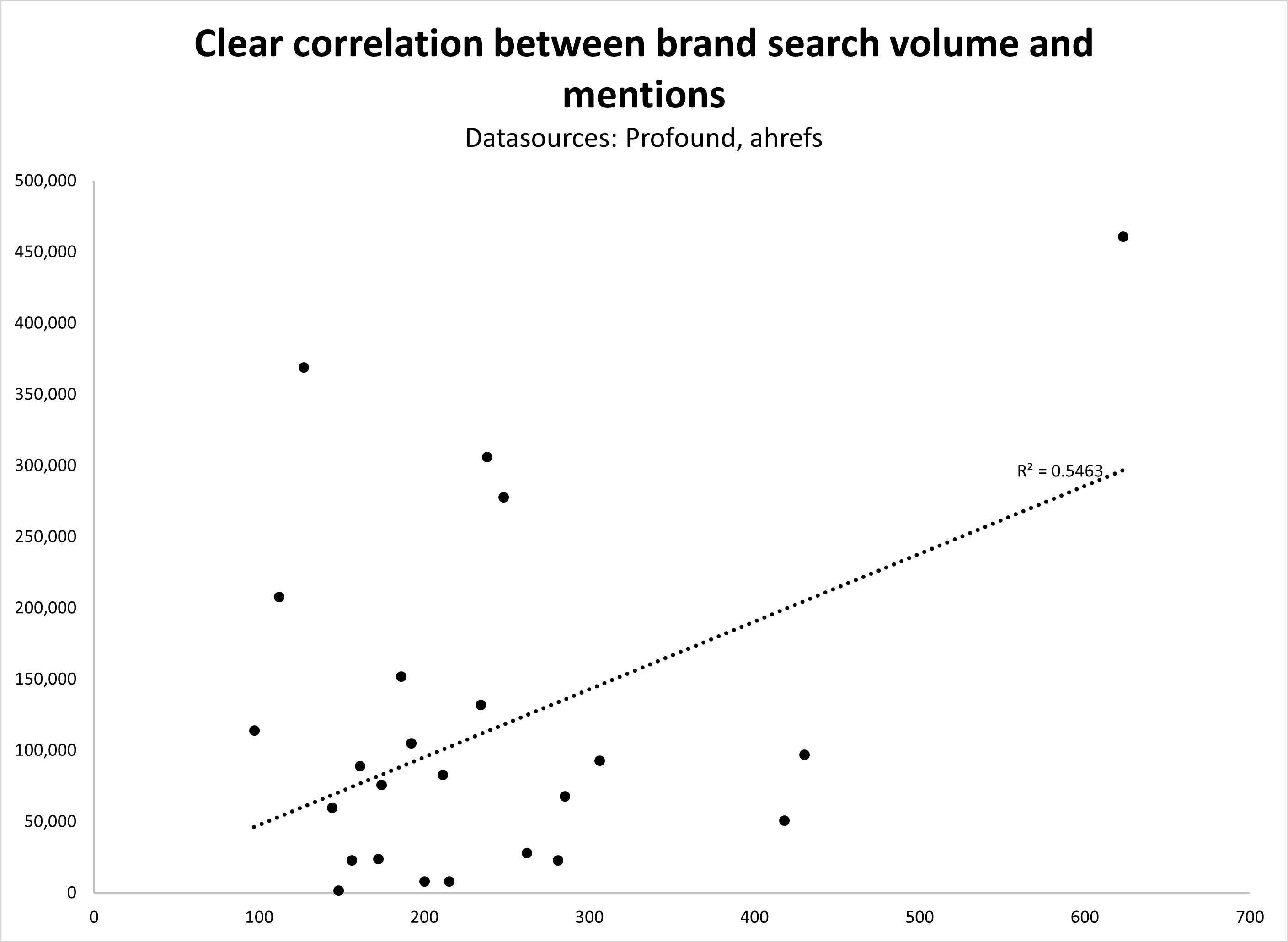

After matching many metrics with AI chatbot visibility, I found one factor that stands out more than anything else: Brand search volume.

The number of AI chatbot mentions and brand search volume have a correlation of .334, which is pretty good in this field. In other words, the popularity of a brand broadly decides how visible it is in AI chatbots.

Image Credit: Kevin Indig

Popularity is the most significant predictor for ChatGPT, which also sends the most traffic and has the highest usage of all AI chatbots.

When breaking it down by AI chatbot, I found ChatGPT has the highest correlation with .542 (strong), but Perplexity (.196) and Google AIOs (.254) have lower correlations.

To be clear, there is a lot of nuance on the prompt and category level. But broadly, a brand’s visibility seems to be severely impacted by how popular it is.
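To run the same kind of check on your own category, you can correlate brand search volume with the number of AI chatbot mentions. The sketch below assumes a Pearson correlation coefficient, which may not be the measure behind the figures quoted here, and the brand numbers are made up for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when one sequence is constant")
    return covariance / (spread_x * spread_y)


# Hypothetical data: monthly brand search volume and chatbot mentions per brand.
brand_search_volume = [120_000, 45_000, 8_000, 60_000, 2_500]
chatbot_mentions = [310, 95, 40, 70, 12]

if __name__ == "__main__":
    print(round(pearson(brand_search_volume, chatbot_mentions), 3))
```

Running it separately per chatbot shows whether one model leans on popularity more than the others.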

Example of popular brands and their visibility in the health category (Image Credit: Kevin Indig)

However, when brands are mentioned, all AI chatbots prefer popular brands and consistently rank them in the same order.

- There is a clear link between the categories of the users’ questions (mental health, skincare, weight loss, hair loss, erectile dysfunction) and brands.

- Early data shows that the most visible brands are digital-first and invest heavily in their online presence with content, SEO, reviews, social media, and digital advertising.

💡Takeaway: Popularity is the biggest criterion that decides whether a brand is mentioned in AI chatbots or not. The way consumers connect brands to product categories also matters.

Comparing brand search volume and product category presence with your competitors gives you the best idea of how competitive you are on ChatGPT & Co.

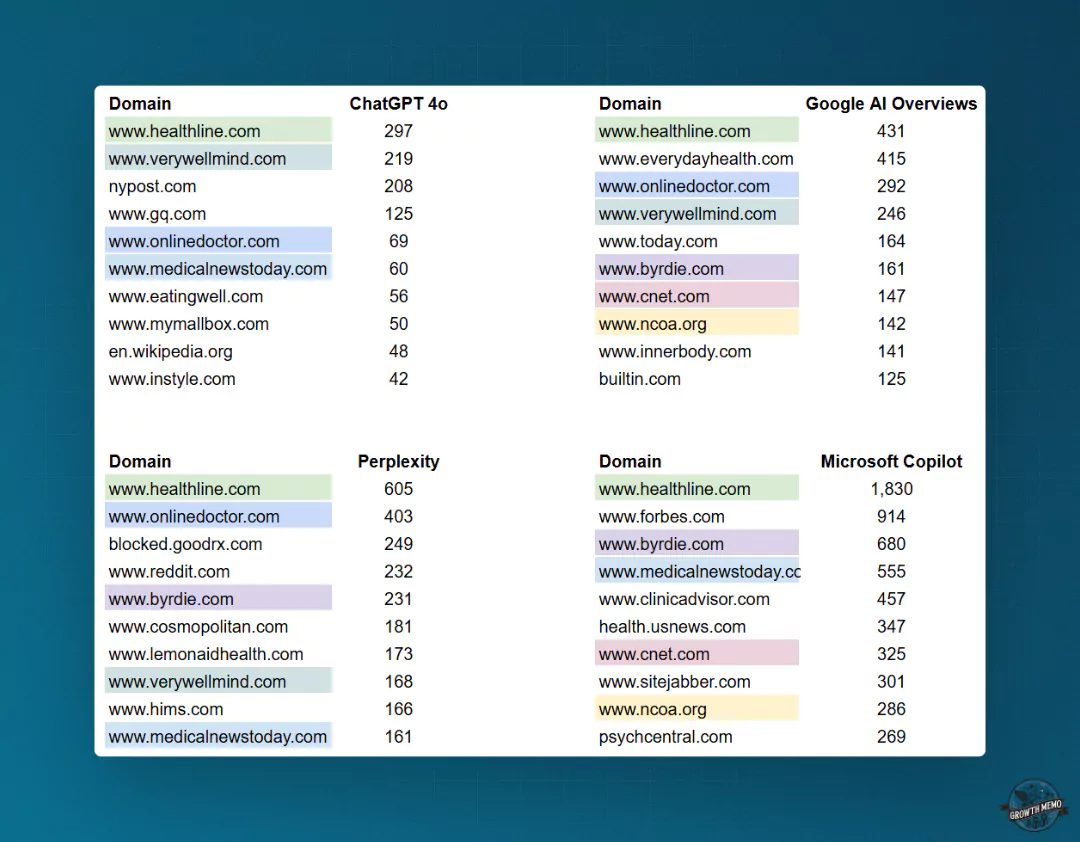

Examples: All models in my analysis cite Healthline most often. Not a single other domain was in the top 10 citations for all four models, showing their distinctly different tastes and how important it is to keep track of many models as opposed to only ChatGPT – if those models also send you traffic.

Image Credit: Kevin Indig

Other well-cited domains across most models:

- verywellmind.com

- onlinedoctor.com

- medicalnewstoday.com

- byrdie.com

- cnet.com

- ncoa.org

Image Credit: Kevin Indig

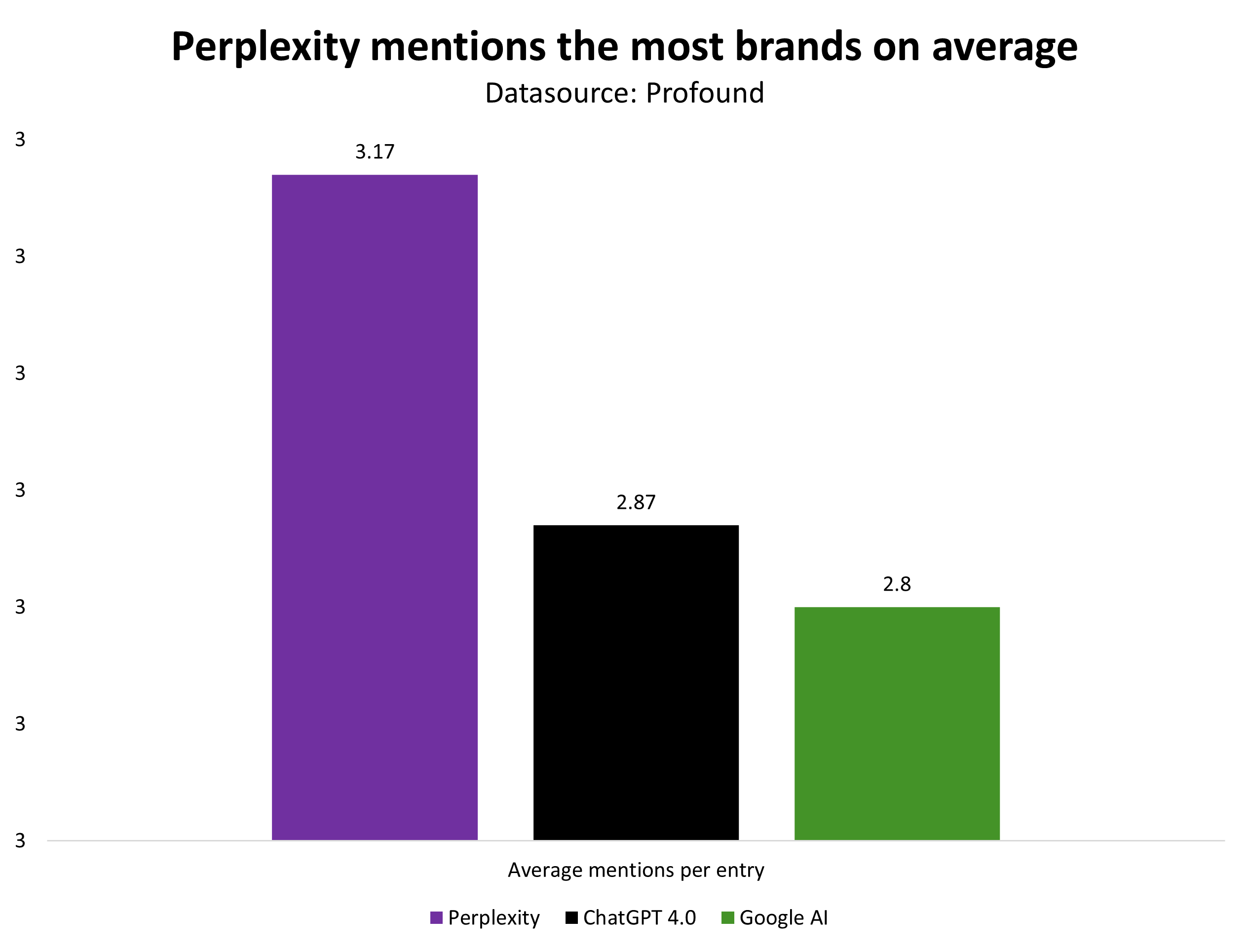

Context: Not all AI chatbots mention brands with the same frequency. Even though ChatGPT has the highest adoption and sends the most referral traffic to sources, Perplexity mentions the most brands per answer on average.

Prompt structure matters for brand visibility:

- The word “best” was a strong trigger for brand mentions in 69.71% of prompts.

- Words like “trusted” (5.77%), “source” (2.88%), “recommend” (0.96%), and “reliable” (0.96%) were also associated with an increased likelihood of brand mentions.

- Prompts including “recommend” often mention public organizations like the FDA, especially when the prompt includes words like “trusted” or “leading.”

- Google AIOs show the highest brand diversity, followed by Perplexity, then ChatGPT.
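If you track your own prompts, a tally like the one above is easy to reproduce. This sketch assumes one possible reading of the percentages, namely the share of brand-mentioning prompts that contain each trigger word. The prompt list and the `mentions_brand` flag are hypothetical, and matching is on exact words, so "recommended" would not count as "recommend".

```python
import re
from collections import Counter

TRIGGER_WORDS = ("best", "trusted", "source", "recommend", "reliable")


def trigger_word_share(prompts, trigger_words=TRIGGER_WORDS):
    """Share of brand-mentioning prompts that contain each trigger word.

    `prompts` is a list of (prompt_text, mentions_brand) pairs, where
    mentions_brand says whether the chatbot's answer named a brand.
    """
    with_brand = [text.lower() for text, mentions_brand in prompts if mentions_brand]
    if not with_brand:
        return {}
    counts = Counter()
    for text in with_brand:
        tokens = set(re.findall(r"[a-z]+", text))
        for word in trigger_words:
            if word in tokens:
                counts[word] += 1
    return {word: counts[word] / len(with_brand) for word in trigger_words}


# Hypothetical tracked prompts.
tracked_prompts = [
    ("What is the best online therapy service?", True),
    ("Which trusted source covers hair loss?", True),
    ("How does minoxidil work?", False),
    ("Best weight loss programs", True),
]

if __name__ == "__main__":
    for word, share in trigger_word_share(tracked_prompts).items():
        print(f"{word}: {share:.2%}")
```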

💡Takeaway: Prompt structure has a meaningful impact on the brands that come up in the answer.

However, we’re not yet able to truly know what prompts users utilize. This is important to keep in mind: All prompts we look at and track are just proxies for what users might be doing.

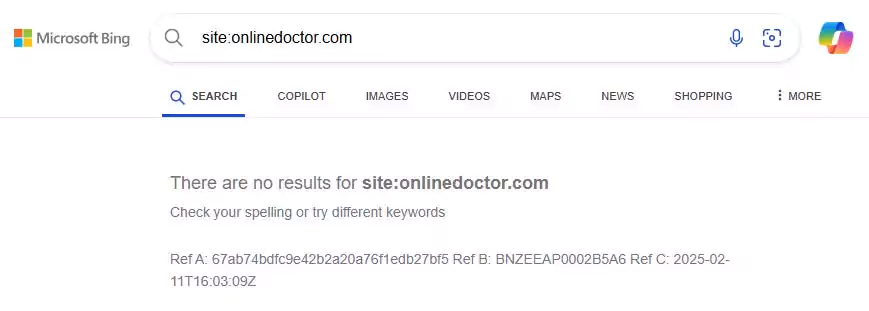

Technical Traps Can Severely Sabotage AI Visibility

Image Credit: Kevin Indig

🔍Context: In my research, I encountered several ways brands unintentionally sabotage their AI chatbot visibility.

I surface them here because the pre-requisite to being visible in LLMs is, of course, their ability to crawl your site, whether that’s directly or through training data.

For example, Copilot doesn’t cite onlinedoctor.com because it’s not indexed in Bing. I couldn’t find indicators that this was done on purpose, so I assume it’s an accident that could quickly be fixed and rewarded with referral traffic.

On the other hand, ChatGPT 4o doesn’t cite cnet.com, and Perplexity doesn’t cite everydayhealth.com because both sites intentionally block the respective LLM in their robots.txt.

But there are also cases in which AI chatbots reference sites even though they technically shouldn’t.

The most cited domain in Perplexity in my dataset is blocked.goodrx.com. GoodRX blocks users from non-U.S. countries, and it seems it accidentally or intentionally blocks Perplexity.

Image Credit: Kevin Indig

It’s important to single out Google’s AI Overviews here: There is no opt-out for AIOs, meaning if you want to get organic traffic from Google, you need to allow it to crawl your site, potentially use your content to train its models, and surface it in AI Overviews. Chegg recently filed a lawsuit against Google for this.

💡Takeaway: Monitor your site in Google Search Console and Bing Webmaster Tools, especially whether all the URLs you want indexed actually are.

Double-check whether you accidentally block an LLM crawler in your robots.txt or through your CDN.

If you intentionally block LLM crawlers, double-check whether you appear in their answers simply by asking them what they know about your domain.
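The robots.txt part of that check can be automated. Here is a minimal sketch using Python's standard `urllib.robotparser`. The user-agent tokens in the list are my assumption about which crawlers you might care about, so confirm the current names in each vendor's documentation, and the sample robots.txt is made up. It only covers robots.txt rules; blocks at the CDN or firewall level will not show up here.

```python
from urllib.robotparser import RobotFileParser

# Assumed crawler tokens; verify them against each vendor's documentation.
LLM_USER_AGENTS = ("GPTBot", "PerplexityBot", "bingbot")


def blocked_llm_crawlers(robots_txt: str, url: str, agents=LLM_USER_AGENTS):
    """Return the crawlers from `agents` that robots.txt disallows for `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]


# Hypothetical robots.txt that blocks one LLM crawler and allows everyone else.
SAMPLE_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

if __name__ == "__main__":
    print(blocked_llm_crawlers(SAMPLE_ROBOTS_TXT, "https://example.com/guide"))
```

To test a live site, fetch its robots.txt yourself and pass the text in, or use the parser's `set_url()` and `read()` methods instead of `parse()`.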

Summary: 6 Key Learnings

- Classic SEO metrics don’t strongly influence AI chatbot citations.

- Content depth (higher word and sentence counts) and readability (good Flesch Score) matter more.

- Different AI chatbots have distinct preferences – monitoring multiple platforms is important.

- Brand popularity (measured by search volume) is the strongest predictor of brand mentions in AI chatbots, especially in ChatGPT.

- Prompt structure influences brand visibility, and we don’t yet know how users phrase prompts.

- Technical issues can sabotage AI visibility – ensure your site isn’t accidentally blocking LLM crawlers through robots.txt or CDN settings.

Featured Image: Paulo Bobita/Search Engine Journal
